Quote for the day:

“Success is most often achieved by those who don't know that failure is inevitable.” -- Coco Chanel

Zero-knowledge cryptography is bigger than web3

Zero-knowledge proofs (ZKPs) have existed since the 1980s, long before the advent of web3. So why limit their potential to blockchain applications? Traditional companies can, and should, adopt ZK technology without fully embracing web3 infrastructure. At a basic level, ZKPs make it possible to prove that a statement is true without revealing the underlying data behind it. Ideally, a prover creates the proof, a verifier verifies it, and the two parties are completely isolated from each other to ensure fairness. That’s really it. There’s no reason this concept has to be trapped behind the learning curve of web3. ... AI’s potential for deception is well established. However, there are ways to harness AI’s creativity while still trusting its output. As artificial intelligence pervades every aspect of our lives, it becomes increasingly important to know that the models behind the AI systems we rely on are legitimate, because if they aren’t, we could literally be changing history without even realizing it. With ZKML, or zero-knowledge machine learning, we can avoid those pitfalls, and web2 projects that have zero interest in going onchain can still reap the benefits. Recently, the University of Southern California partnered with the Shoah Foundation to create something called IWitness, where users can speak or type directly to holograms of Holocaust survivors.
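To make the prover/verifier idea concrete, here is a minimal sketch of a classic zero-knowledge proof of knowledge: a Schnorr proof, made non-interactive with the Fiat-Shamir heuristic. The prover convinces the verifier that it knows a secret exponent behind a public value y = G^x mod P without revealing x. The group parameters P, Q, and G are toy values chosen for readability (an assumption, and far too small to be secure), and this is not the construction any particular ZK product necessarily uses.

```python
import hashlib
import secrets

# Toy group parameters (assumption): P = 2*Q + 1 with Q prime.
# Far too small to be secure; real systems use 2048-bit+ groups or elliptic curves.
P = 2039
Q = 1019
G = 4  # 4 = 2**2 is a quadratic residue mod P, so it generates the subgroup of order Q


def challenge(*values: int) -> int:
    """Fiat-Shamir: replace the verifier's random challenge with a hash of the transcript."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret: int) -> tuple:
    """Prover: show knowledge of `secret` with y = G**secret mod P, without revealing it."""
    y = pow(G, secret, P)          # public value
    r = secrets.randbelow(Q)       # fresh random nonce, never reused
    t = pow(G, r, P)               # commitment
    c = challenge(G, y, t)
    s = (r + c * secret) % Q       # response, masked by the random nonce r
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    """Verifier: check G**s == t * y**c (mod P) using only public values."""
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, t, s = prove(secret=777)
print(verify(y, t, s))             # True: the proof checks out
print(verify(y, t, (s + 1) % Q))   # False: a tampered response is rejected
```

The check works because G^s = G^(r + c·x) = t · y^c. The verifier becomes convinced the prover knows x, yet s on its own is uniformly random in the group's exponent range and leaks nothing about x.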

How to Make Security Auditing an Important Part of Your DevOps Processes

There's a difference between a security audit and a simple vulnerability scan, however. Security auditing is a much more comprehensive evaluation of the various elements that make up an organization's cybersecurity posture. Because of the sheer amount of data that most businesses store and use on a daily basis, it's critical to ensure that it stays protected. Failure to do so can lead to costly data compliance issues as well as significant financial losses. ... Quick development and rapid deployment are the primary focus of most DevOps practices. However, security has also become an equally important, if not more important, component of modern-day software development. It's critical that security finds its way into every stage of the development lifecycle. Changing this narrative does, however, require everyone in the organization to place security higher on their priority lists. This means the organization as a whole needs to develop a security-conscious business culture that helps shape every decision it makes. ... Another way automation can be used in software development is continuous security monitoring. In this scenario, specialized monitoring tools watch an organization's systems in real time.
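Continuous monitoring tools vary widely, but the core idea can be sketched as a rule evaluated over a stream of events. The example below is a hypothetical rule that flags any source producing too many failed logins within a sliding time window. The `LoginEvent` format, the `FailedLoginMonitor` class, and the thresholds are assumptions for illustration, not any specific product's API.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass
class LoginEvent:
    timestamp: float   # seconds
    source_ip: str
    success: bool


class FailedLoginMonitor:
    """Hypothetical rule: alert when one source exceeds `max_failures`
    failed logins within a sliding window of `window_seconds`."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # source_ip -> timestamps of recent failures

    def observe(self, event: LoginEvent) -> bool:
        """Process one event in arrival order; return True if it should raise an alert."""
        if event.success:
            return False
        times = self._failures[event.source_ip]
        times.append(event.timestamp)
        # Evict failures that have fallen outside the sliding window.
        while event.timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) > self.max_failures


monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
events = [LoginEvent(t, "203.0.113.7", success=False) for t in (0, 10, 20, 30)]
print([monitor.observe(e) for e in events])  # [False, False, False, True]
```

A real deployment would feed such rules from log pipelines and route alerts to an on-call system; the sliding window simply keeps the rule's memory bounded.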

The Critical Role of CISOs in Managing IAM, Including NHIs

As regulators catch up to the reality that non-human identities (NHIs) pose risks as great as (or greater than) those of human users, organizations will be held accountable for securing all identities. This means enforcing least privilege for NHIs, just as with human users. It also means tracking the full lifecycle of machine identities, from creation to decommissioning, as well as auditing and monitoring API keys, tokens, and service accounts with the same rigor as employee credentials. Waiting for regulatory pressure after a breach is too late. CISOs must act proactively to get ahead of these coming changes. ... A modern IAM strategy must begin with comprehensive discovery and mapping of all identities across the enterprise. This includes understanding not just where the associated secrets are stored but also their origins, permissions, and relationships with other systems. Organizations need to implement robust secrets management platforms that can serve as a single source of truth, ensuring all credentials are encrypted and monitored. The lifecycle management of NHIs requires particular attention. Unlike human identities, which follow the predictable patterns of employment and human life, machine identities require automated processes for creation, rotation, and decommissioning.
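As a rough illustration of what auditing machine credentials "with the same rigor" might look like, the sketch below scans a hypothetical inventory of non-human identities and flags secrets overdue for rotation, identities that appear unused (decommissioning candidates), and wildcard permissions that conflict with least privilege. The `MachineIdentity` record, its fields, and the 90-day and 30-day thresholds are assumptions, not the schema of any particular secrets management platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List, Optional, Tuple


@dataclass
class MachineIdentity:
    """Hypothetical inventory record for one non-human identity."""
    name: str
    kind: str                # e.g. "api_key", "token", "service_account"
    owner: str               # team accountable for the identity
    last_rotated: datetime
    last_used: datetime
    scopes: List[str] = field(default_factory=list)


def audit(identities: List[MachineIdentity],
          now: Optional[datetime] = None,
          max_secret_age: timedelta = timedelta(days=90),
          idle_limit: timedelta = timedelta(days=30)) -> List[Tuple[str, str]]:
    """Return (identity name, finding) pairs for simple lifecycle and privilege checks."""
    now = now or datetime.now(timezone.utc)
    findings = []
    for ident in identities:
        if now - ident.last_rotated > max_secret_age:
            findings.append((ident.name, "rotation overdue"))
        if now - ident.last_used > idle_limit:
            findings.append((ident.name, "unused: candidate for decommissioning"))
        if any(scope.endswith("*") for scope in ident.scopes):
            findings.append((ident.name, "wildcard scope violates least privilege"))
    return findings


now = datetime(2025, 3, 1, tzinfo=timezone.utc)
ci_token = MachineIdentity(
    name="ci-deploy-token", kind="token", owner="platform",
    last_rotated=now - timedelta(days=200), last_used=now - timedelta(days=1),
    scopes=["repo:read", "deploy:*"],
)
for finding in audit([ci_token], now=now):
    print(finding)
# ('ci-deploy-token', 'rotation overdue')
# ('ci-deploy-token', 'wildcard scope violates least privilege')
```

Running a check like this on a schedule, rather than once, is what turns a one-off inventory into the automated lifecycle management the passage calls for.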

Preparing the Workforce for an AI-Driven Economy: Skills of the Future

As part of creating awareness about AI, the opportunities that come with it, and its role in shaping our future, I speak at several global forums and conferences. This is the question I am most frequently asked: How did you start your AI journey? Unlike the “hidden secret” that most would expect, my answer is fairly simple: data. I had worked with data long enough that moving into AI felt like a natural transition. Data is the core of AI, so it is important to build data literacy first. Data literacy is the ability to read, work with, analyze, and communicate data. In other words, interpreting data insights and using them to drive decision-making is an absolute must for everyone from junior employees to senior executives. No matter what your role is within an organization, honing this skill will serve you well in this AI-driven economy. Those who say that data is the new currency or the new oil are not entirely overstating its importance. ... AI is a highly collaborative field. No one person can build a high-performing, robust AI; it requires seamless collaboration across diverse teams. Because those teams bring diverse skills and backgrounds, a strong AI profile must include the ability to communicate the results, the process, and the algorithms. If you want to ace a career in AI, be the person who can tailor the talk to the right audience and speak at the right altitude.

Prioritizing data and identity security in 2025

First, it’s important to get the basics right. Yes, new security threats emerge almost daily, along with solutions designed to combat them. Security and business leaders can get caught up in chasing the “shiny objects” making headlines, but the truth is that most organizations haven’t even addressed the known vulnerabilities in their existing environments. The hacks that generated major headlines were launched on the backs of knowable, solvable technological weaknesses. As tempting as it can be to focus on the latest threats, organizations need to get the basics squared away. Many organizations don’t even have multifactor authentication (MFA) enabled ... It’s not just businesses racing to adopt AI: cybercriminals are already leveraging AI tools to make their tactics significantly more effective. For example, many are using AI to create persuasive, error-free phishing emails that are much harder to spot. One of the biggest concerns is that AI is lowering the barrier to entry for attackers; even novice hackers can now use AI to code dangerous, triple-threat ransomware. At the other end of the spectrum, well-resourced nation-states are using AI to create manipulative deepfake videos that look just like the real thing. Fortunately, strong security fundamentals can help combat AI-enhanced attack tactics, but it’s important to be aware of how the technology is being used.
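Since the passage singles out missing MFA as a basic gap, here is a minimal sketch of one common second factor: time-based one-time passwords (TOTP, RFC 6238), which are built on HOTP (RFC 4226). It uses only the Python standard library. The function names and the one-step tolerance window are illustrative choices; a production system should rely on a vetted library and store shared secrets securely.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over the 8-byte big-endian counter, then truncate."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP where the counter is the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)


def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept a code from the current step or up to `window` steps either side (clock drift)."""
    now = time.time() if at is None else at
    current = int(now // step)
    return any(
        hmac.compare_digest(hotp(key, counter, digits), submitted)
        for counter in range(max(0, current - window), current + window + 1)
    )


rfc_key = b"12345678901234567890"       # shared secret from the RFC test vectors
print(totp(rfc_key, at=59, digits=8))   # 94287082 (RFC 6238, SHA-1, T = 59)
```

`hmac.compare_digest` is used so that checking a submitted code does not leak timing information about how many characters matched.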

Study reveals delays in SaaS implementations are costing Indian enterprises in crores

Delayed SaaS implementations create cascading effects, affecting both ongoing and future digital transformation initiatives. According to the study, 92.5% of Indian enterprises recognise that timely implementation is critical, while the remaining 7.5% consider it somewhat important. The study found that 67% of enterprises reported increased costs due to extended deployment timelines, making implementation overruns a direct financial burden. Meanwhile, 53% of respondents indicated that delays hindered digital transformation progress, slowing innovation and business growth. Additionally, 48% of enterprises experienced customer dissatisfaction, while 46% faced missed revenue and business opportunities, impacting overall business performance. ... To mitigate these challenges, enterprises are shifting toward a platform-driven approach to SaaS implementation. This model enables faster deployments by leveraging automation, reducing customisation efforts, and ensuring seamless interoperability. The IDC study highlights that 59% of enterprises recognise automation and DevOps practices as key factors in shortening deployment timelines. By leveraging advanced automation, organisations can minimise manual dependencies, reduce errors, and improve implementation speed.

Quantum Breakthrough: New Study Uncovers Hidden Behavior in Superconductors

To produce an electric current between two points in a normal conductor, one needs to apply a voltage, which acts as the pressure that pushes electricity between those points. But because of a peculiar quantum tunneling process known as the “Josephson effect,” current can flow between two superconductors without an applied voltage. The FMFs influence this Josephson current in unique ways. In most systems, the current between two superconductors repeats itself at regular intervals. However, FMFs manifest themselves in a pattern of current that oscillates at half the normal rate, creating a unique signature that can help in their detection. ... One of the key findings of Seradjeh and colleagues’ study is that the strength of the Josephson current (the amount of electrical flow) can be tuned using the “chemical potential” of the superconductors. Simply stated, the chemical potential acts as a dial that adjusts the properties of the material, and the researchers found that it could be modified by syncing it with the frequency of the external energy source driving the system. This could give scientists a new level of control over quantum materials and open up possibilities for applications in quantum information processing, where precise manipulation of quantum states is critical.
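For reference, the standard idealized relations below show what "repeats itself at regular intervals" and "half the normal rate" refer to. In a conventional junction, the supercurrent $I_s$ depends on the superconducting phase difference $\varphi$ and has period $2\pi$; junctions hosting Majorana modes are predicted to show a "fractional" Josephson effect with period $4\pi$. The AC Josephson relation links the phase to the voltage $V$: with $V = 0$ the phase stays constant, so a steady supercurrent flows with no applied voltage. These are textbook forms, not the specific driven model used in the study.

```latex
\begin{aligned}
I_s &= I_c \sin\varphi && \text{(conventional, period } 2\pi\text{)} \\
I_s &\propto \sin\!\left(\tfrac{\varphi}{2}\right) && \text{(fractional, period } 4\pi\text{)} \\
\frac{d\varphi}{dt} &= \frac{2eV}{\hbar} && \text{(AC Josephson relation)}
\end{aligned}
```

Under a voltage, $\varphi = 2eVt/\hbar$, so the conventional current oscillates at angular frequency $2eV/\hbar$ while the fractional one oscillates at $eV/\hbar$, exactly half.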

Data Center Network Topology: A Guide to Optimizing Performance

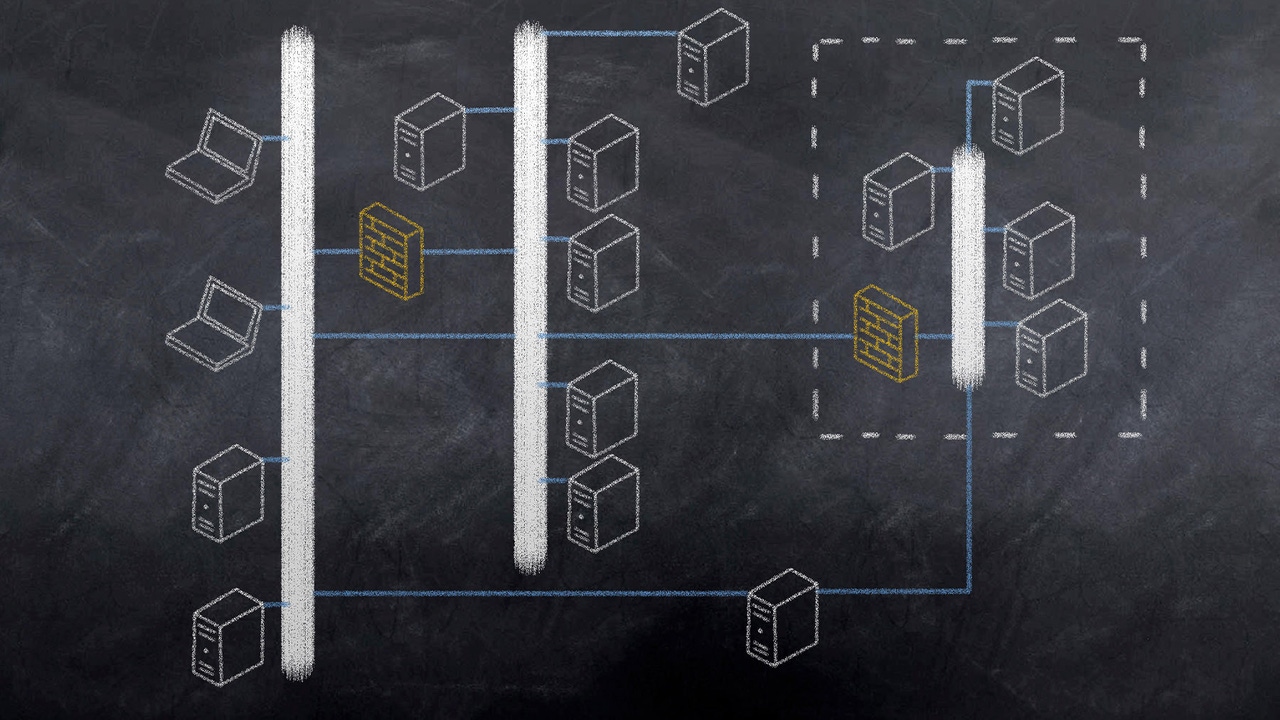

To fully understand what this means, let’s step back and talk about how network traffic flows within a data center. Typically, traffic ultimately needs to move to and from servers. ...

Data center network topology is important for several reasons:

- Network performance: Network performance hinges on the ability to move packets between servers and external endpoints as quickly as possible and with minimal latency. Poor network topologies may create bottlenecks that reduce network performance.
- Scalability: The amount of network traffic that flows through a data center may change over time. To accommodate these changes, network topologies must be flexible enough to scale.
- Cost-efficiency: Networking equipment can be expensive, and under-utilized switches or routers are a poor use of money. Ideally, a network topology should ensure that switches and routers are used efficiently, but without approaching the point where they become overwhelmed and reduce network performance.
- Security: Security is usually not a primary consideration when designing a network topology, because security policies can be enforced on any common network design. Still, topology does play a role in determining how easy it is to segment servers from the Internet and filter malicious traffic.
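One common way to quantify the bottleneck and cost trade-off described above is a switch's oversubscription ratio: total server-facing (downlink) bandwidth divided by total uplink bandwidth. The `SwitchPorts` class and the port counts and speeds below are hypothetical examples, not recommendations. A ratio of 1.0 means the uplinks can carry all downlink traffic at line rate; higher ratios save on uplink hardware at the risk of congestion.

```python
from dataclasses import dataclass


@dataclass
class SwitchPorts:
    downlink_ports: int
    downlink_gbps: float
    uplink_ports: int
    uplink_gbps: float

    def oversubscription(self) -> float:
        """Ratio of server-facing capacity to uplink capacity (1.0 = non-blocking)."""
        downlink = self.downlink_ports * self.downlink_gbps
        uplink = self.uplink_ports * self.uplink_gbps
        if uplink == 0:
            raise ValueError("switch has no uplink capacity")
        return downlink / uplink


# Hypothetical top-of-rack switch: 48 x 25G server ports, 6 x 100G uplinks.
tor = SwitchPorts(downlink_ports=48, downlink_gbps=25, uplink_ports=6, uplink_gbps=100)
print(f"{tor.oversubscription():.1f}:1")  # 2.0:1
```

Here 1,200 Gb/s of server capacity shares 600 Gb/s of uplinks, so the uplinks become a bottleneck only if more than half the servers' bandwidth tries to leave the rack at once.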

Ethics in action: Building trust through responsible AI development

The architecture discipline must continuously evaluate the landscape of emerging compliance directions to work out how their overall definition and intent can be translated into actionable architecture and design that best enables compliance. In parallel, it must ensure that implementations are auditable, so that governing bodies can clearly see that regulatory mandates are being met. When applied, these capabilities enable the necessary flexible designs and architectures, with supporting patterns for sustainable agility, to ensure the various checks and policies are enforced. ... The heavy hand of governance can diminish innovation; however, this doesn't need to happen. The same capabilities and patterns used to ensure ethical behavior and compliance can also be applied to stimulate sensible innovation. As new LLMs, models, agents, and so on emerge, flexible, agile architecture and best practices in responsive engineering make it possible to infuse new market entries into a given product, service, or offering. Leveraging feature toggles and threshold logic enables the safe inclusion of emerging technologies. ... While managing compliance through agile solution designs and architectures promotes a trustworthy customer experience, it does come at the cost of greater complexity.
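A minimal sketch of the feature-toggle and threshold-logic pattern mentioned above: a toggle decides whether a new model is eligible at all, and a confidence threshold decides whether its answer is used or the request falls back to the established path. The flag name, the `ModelResult` type, the model functions, and the 0.8 threshold are all hypothetical; real systems would typically read flags from configuration or a feature-flag service.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class ModelResult:
    answer: str
    confidence: float  # assumed to be a score in [0, 1]


FLAGS = {"use_new_llm": True}  # hypothetical toggle store
CONFIDENCE_THRESHOLD = 0.8     # hypothetical threshold


def answer(prompt: str,
           new_model: Callable[[str], ModelResult],
           baseline_model: Callable[[str], ModelResult]) -> Tuple[str, str]:
    """Return (answer, source); the source records which path was taken, for auditing."""
    if FLAGS.get("use_new_llm", False):
        result = new_model(prompt)
        if result.confidence >= CONFIDENCE_THRESHOLD:
            return result.answer, "new_model"
    # Toggle off, or the new model is not confident enough: use the established model.
    return baseline_model(prompt).answer, "baseline"


def confident_model(prompt: str) -> ModelResult:
    return ModelResult("new answer", 0.93)


def unsure_model(prompt: str) -> ModelResult:
    return ModelResult("new answer", 0.42)


def baseline(prompt: str) -> ModelResult:
    return ModelResult("baseline answer", 1.0)


print(answer("q", confident_model, baseline))  # ('new answer', 'new_model')
print(answer("q", unsure_model, baseline))     # ('baseline answer', 'baseline')
```

Returning the source alongside the answer is one simple way to keep each routing decision recordable, which supports the auditability the passage asks for.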

NTT Unveils First Quantum Computing Architecture Separating Memory and Processor

In this study, researchers applied the design concept of the load-store architecture used in modern computers to quantum computing. In a load-store architecture, the device is divided into a memory and a processor that performs calculations. Because data is exchanged through just two abstracted instructions, “load” and “store,” programs can be built in a portable way that does not depend on specific processor or memory device structures. Additionally, the memory is only required to hold data, allowing for high memory utilization. However, load-store computation is often associated with longer computation times because of the limited bandwidth between the memory and computation spaces. ... The researchers expect these findings to enable highly efficient use of quantum hardware, significantly accelerating the practical application of quantum computation. Additionally, the high program portability of this approach helps ensure compatibility between lower-layer advances in hardware and error-correction methods and higher-layer technologies such as programming languages and compilation optimization. The findings will help promote parallel advanced research in large-scale quantum computer development.
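To illustrate the load-store idea in the abstract, here is a toy classical model: a memory that only holds data, a processor with a few registers, and programs that touch memory only through `load` and `store`. Counting those transfers shows where the bandwidth overhead described above comes from. This is a hypothetical sketch, not NTT's architecture; as a loose nod to the fact that quantum states cannot be copied, `load` here moves a value out of memory rather than copying it.

```python
from typing import Any, Callable, Dict, List, Optional


class LoadStoreMachine:
    """Toy load-store machine: memory only holds data; the processor computes only on registers."""

    def __init__(self, memory: Dict[str, Any], num_registers: int = 2):
        self.memory = dict(memory)
        self.registers: List[Optional[Any]] = [None] * num_registers
        self.transfers = 0  # memory <-> processor moves, a stand-in for bandwidth cost

    def load(self, addr: str, reg: int) -> None:
        """Move (not copy) a value from memory into an empty register."""
        if self.registers[reg] is not None:
            raise RuntimeError(f"register {reg} is occupied")
        self.registers[reg] = self.memory.pop(addr)
        self.transfers += 1

    def store(self, reg: int, addr: str) -> None:
        """Move a register's value back into an empty memory slot."""
        if addr in self.memory:
            raise RuntimeError(f"memory slot {addr!r} is occupied")
        self.memory[addr] = self.registers[reg]
        self.registers[reg] = None
        self.transfers += 1

    def apply(self, op: Callable[..., tuple], *regs: int) -> None:
        """Run an operation on register contents and write the results back in place."""
        results = op(*(self.registers[r] for r in regs))
        for r, value in zip(regs, results):
            self.registers[r] = value


# The program refers only to addresses and registers, not to how memory is laid out.
m = LoadStoreMachine({"x": 3, "y": 4})
m.load("x", 0)
m.load("y", 1)
m.apply(lambda a, b: (a + b, b), 0, 1)  # x <- x + y
m.store(0, "x")
m.store(1, "y")
print(m.memory, m.transfers)  # {'x': 7, 'y': 4} 4
```

One arithmetic step costs four transfers here, which is the trade-off the passage names: portability and dense memory in exchange for extra movement between memory and processor.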
