Quote for the day:

“The only true wisdom is knowing that you know nothing.” -- Socrates

The secret to using generative AI effectively

It’s a shift from the way we’re accustomed to thinking about these sorts of

interactions, but it isn’t without precedent. When Google itself first launched,

people often wanted to type questions at it — to spell out long, winding

sentences. That wasn’t how to use the search engine most effectively, though.

Google search queries needed to be stripped to the minimum number of words.

GenAI is exactly the opposite. You need to give the AI as much detail as

possible. If you start a new chat and type a single-sentence question, you’re

not going to get a very deep or interesting response. To put it simply: You

shouldn’t be prompting genAI like it’s still 2023. You aren’t performing a web

search. You aren’t asking a question. Instead, you need to be thinking out loud.

You need to iterate with a bit of back and forth. You need to provide a lot of

detail, see what the system tells you — then pick out something that is

interesting to you, drill down on that, and keep going. You are co-discovering

things, in a sense. GenAI is best thought of as a brainstorming partner. Did it

miss something? Tell it — maybe you’re missing something and it can surface it

for you. The more you do this, the better the responses will get. ... Just be

prepared for the fact that ChatGPT (or other tools) won’t give you a single

streamlined answer. It will riff off what you said and give you something to

think about.

Rising attack exposure, threat sophistication spur interest in detection engineering

Detection engineering is about creating and implementing systems to identify

potential security threats within an organization’s specific technology

environment without drowning in false alarms. It’s about writing smart rules

that can tell when something potentially suspicious or malicious is happening

in an organization’s networks or systems and making sure those alerts are

useful. The process typically involves threat modeling, understanding attacker

TTPs, writing, testing and validating detection rules, and adapting detections

based on new threats and attack techniques. ... Proponents argue that

detection engineering differs from traditional threat detection practices in

approach, methodology, and integration with the development lifecycle. Threat

detection processes are typically more reactive and rely on pre-built rules

and signatures from vendors that offer limited customization for the

organizations using them. In contrast, detection engineering applies software

development principles to create and maintain custom detection logic for an

organization’s specific environment and threat landscape. Rather than relying

on static, generic rules and known IOCs, the goal with detection engineering

is to develop tailored mechanisms for detecting threats as they would actually

manifest in an organization’s specific environment.
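To make the "software development principles" point concrete, here is a minimal sketch of a detection rule kept as code next to its own tests. The event fields (`user`, `action`), the function name, and the threshold of five failures are assumptions made for illustration; they do not come from the article or from any particular SIEM product.

```python
from typing import Iterable


def brute_force_then_success(events: Iterable[dict], threshold: int = 5) -> set[str]:
    """Flag users who log in successfully right after `threshold` or more failures.

    Hypothetical event shape: {"user": "alice", "action": "login_failed"}.
    """
    failures: dict[str, int] = {}
    flagged: set[str] = set()
    for event in events:
        user = event["user"]
        if event["action"] == "login_failed":
            failures[user] = failures.get(user, 0) + 1
        elif event["action"] == "login_success":
            if failures.get(user, 0) >= threshold:
                flagged.add(user)
            # A successful login resets the failure streak for that user.
            failures[user] = 0
    return flagged


def test_rule_fires_on_attack_pattern() -> None:
    attack = [{"user": "alice", "action": "login_failed"}] * 5
    attack.append({"user": "alice", "action": "login_success"})
    assert brute_force_then_success(attack) == {"alice"}


def test_rule_ignores_ordinary_typo() -> None:
    # One mistyped password followed by a success should not raise an alert.
    benign = [
        {"user": "bob", "action": "login_failed"},
        {"user": "bob", "action": "login_success"},
    ]
    assert brute_force_then_success(benign) == set()


if __name__ == "__main__":
    test_rule_fires_on_attack_pattern()
    test_rule_ignores_ordinary_typo()
    print("detection rule tests passed")
```

Pairing each rule with a positive and a negative test case is one way to validate detections and keep false alarms in check as the rule evolves.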

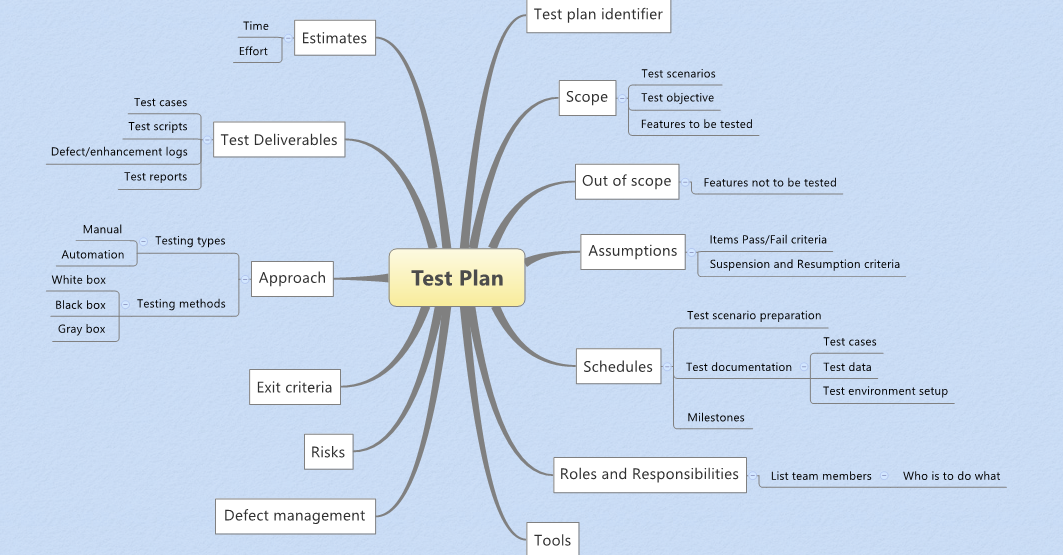

Fast and Furiant: Secrets of Effective Software Testing

Testing should always start as early as possible! It can begin as soon as a

new functionality idea is proposed or discussed, during the mockup phase, or

when requirements are first drafted. Early testing significantly helps me

speed up the process. Even if development hasn’t started yet, you can still

study the product areas that might be involved and familiarize yourself with

new technologies or tools that could be helpful during testing. A good

tester will never sit idle waiting for the perfect moment – they will always

find something to work on before development begins! ... Effective testing

begins with a well thought-out plan. Unfortunately, some testers postpone

this stage until the functional testing phase. It’s important to define the

priority areas for testing based on business requirements and areas where

errors are most likely. The plan should include the types and levels of

testing, as well as resource allocation. The plan can be formal or informal

and doesn’t necessarily need to be submitted for reporting. ... Automation

is the key to speeding up the testing process. It can begin even before or

simultaneously with manual testing. If automation is well-implemented in the

project with a clear purpose, process, and sufficient automated test

coverage — it can significantly accelerate testing, aid in bug detection,

provide a better understanding of product quality, and reduce the risk of

human error.
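The advice to prioritize test areas by business requirements and likelihood of errors can be sketched as a simple risk score. The 1 to 5 scales, the multiplication formula, and the example areas below are illustrative assumptions, not something the article prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductArea:
    name: str
    business_impact: int    # 1 (minor) to 5 (critical); assumed scale
    defect_likelihood: int  # 1 (unlikely) to 5 (very likely); assumed scale

    @property
    def risk_score(self) -> int:
        # Assumed formula: impact multiplied by likelihood.
        return self.business_impact * self.defect_likelihood


def prioritize(areas: list[ProductArea]) -> list[ProductArea]:
    """Return areas ordered from highest to lowest risk score."""
    return sorted(areas, key=lambda area: area.risk_score, reverse=True)


if __name__ == "__main__":
    plan = prioritize([
        ProductArea("search", business_impact=3, defect_likelihood=2),
        ProductArea("checkout", business_impact=5, defect_likelihood=4),
        ProductArea("profile settings", business_impact=2, defect_likelihood=3),
    ])
    for area in plan:
        print(f"{area.risk_score:>2}  {area.name}")
```

Even an informal ranking like this gives the test plan a defensible order for both manual and automated coverage.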

The Core Pillars of Cyber Resiliency

The first pillar of a strong cybersecurity strategy is Offensive Security,

which focuses on a proactive approach to tackling vulnerabilities.

Organisations must implement advanced monitoring systems that can provide

real-time insights into network traffic, user behaviour, and system

vulnerabilities. By establishing a comprehensive overview through visibility

assessments, organisations can identify anomalies and potential threats

before they escalate into full-blown attacks. Cyber hygiene refers to the

practices and habits that users and organisations adopt to maintain the

security of their digital environments. Passwords are typically the first

line of defence against unauthorised access to systems, data and accounts.

Attackers often obtain credentials due to password reuse or users

inadvertently downloading infected software on corporate devices. ... Data

is often regarded as the most valuable asset for any organisation. Effective

data protection measures help organisations maintain the integrity and

confidentiality of their information, even in the face of cyber

threats. This includes implementing encryption for sensitive data,

employing access controls to restrict unauthorised access, and

deploying data loss prevention (DLP) solutions. Regular backups—both on-site

and in the cloud—are critical for ensuring that data can be restored quickly

in case of a breach or ransomware attack.

Cyber Risks Drive CISOs to Surf AI Hype Wave

Resilience, once viewed as an abstract concept, has gained practical

significance under frameworks like DORA, which links people, processes and

technology to tangible business outcomes. "Cybersecurity must align with the

organization's goals, emphasizing its indispensable role in ensuring overall

business success. While CISOs recognize cybersecurity's importance, many

businesses still see it as a single line item in enterprise risk,

overlooking its widespread implications," Gopal said. She said cybersecurity

leaders must demonstrate to the business how cybersecurity affects areas

such as financial risk, brand reputation and operational continuity. This

requires CISOs to shift their focus from traditional protective measures to

strategies that prioritize rapid response and recovery. This shift, evident

in evolving frameworks, underscores the importance of adaptability in

cybersecurity strategies. ... Gartner analysts said CISOs play a crucial

role in balancing innovation's rewards and risks by guiding intelligent

risk-taking. They must foster a culture of intelligent risk-taking by

enabling people to make intelligent decisions. "Transformation and

resilience themes dominate cybersecurity trends, with a focus on empowering

people to make intelligent risk decisions and enabling businesses to address

challenges effectively."

How Infrastructure-As-Code Is Revolutionizing Cloud Disaster Recovery

Infrastructure-as-Code allows organizations to manage and provision their

cloud infrastructure through programmable code, significantly reducing

manual processes and associated risks. Yemini pointed out that IaC's

standardization across the industry simplifies recovery efforts because

teams already possess the necessary expertise. With IaC, cloud

infrastructure recovery becomes quicker, more reliable, and integrated

directly into existing codebases, streamlining restoration and minimizing

downtime. ... The shift toward automation in disaster recovery empowers

organizations to move from reactive recovery to proactive resilience.

ControlMonkey launched its Automated Disaster Recovery solution to restore

the entire cloud infrastructure as opposed to just the data. Automation

substantially reduces recovery times—by as much as 90% in some

scenarios—thereby minimizing business downtime and operational disruptions.

... Shifting from data-focused recovery strategies to comprehensive

infrastructure automation enhances overall cloud resilience. Twizer

highlighted that adopting a holistic approach ensures the entire cloud

environment—network configurations, permissions, and compute resources—is

recoverable swiftly and accurately. Yet, Yemini identifies visibility and

configuration drift as key challenges.
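Configuration drift, named above as a key challenge, is the gap between what the code declares and what is actually running. A minimal sketch of a drift check follows, assuming both states are available as plain dictionaries keyed by resource name. The resource names and attributes are hypothetical, and this is not how ControlMonkey or any specific IaC tool implements it.

```python
def find_drift(declared: dict, actual: dict) -> dict:
    """Compare declared (IaC) state with actual (live) state.

    Returns a mapping of resource name to the differing declared and actual
    values. A value of None means the resource is absent on that side.
    """
    drift = {}
    for resource in sorted(declared.keys() | actual.keys()):
        want = declared.get(resource)
        have = actual.get(resource)
        if want != have:
            drift[resource] = {"declared": want, "actual": have}
    return drift


if __name__ == "__main__":
    # Hypothetical states for illustration only.
    declared_state = {
        "sg-web": {"ingress_ports": [443]},
        "vm-api": {"size": "medium"},
    }
    actual_state = {
        "sg-web": {"ingress_ports": [443, 22]},  # port opened by hand
        "vm-api": {"size": "medium"},
        "bucket-tmp": {"public": True},          # created outside the code
    }
    for name, diff in find_drift(declared_state, actual_state).items():
        print(name, diff)
```

Resources that exist only in the live environment matter most for recovery, because redeploying from code alone would not bring them back.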

A CISO’s guide to securing AI models

Unlike traditional IT applications, which rely on predefined rules and

static algorithms, ML models are dynamic—they develop their own internal

patterns and decision-making processes by analyzing training data. Their

behavior can change as they learn from new data. This adaptive nature

introduces unique security challenges. Securing these models requires a new

approach that not only addresses traditional IT security concerns, like data

integrity and access control, but also focuses on protecting the models’

training, inference, and decision-making processes from tampering. To

prevent these risks, a robust approach to model deployment and continuous

monitoring known as Machine Learning Security Operations (MLSecOps) is

required. ... To safeguard ML models from emerging threats, CISOs should

implement a comprehensive and proactive approach that integrates security

from their release to ongoing operation. ... Implementing security measures

at each stage of the ML lifecycle—from development to deployment—requires a

comprehensive strategy. MLSecOps makes it possible to integrate security

directly into AI/ML pipelines for continuous monitoring, proactive threat

detection, and resilient deployment practices.
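One small, concrete control for protecting models from tampering is to verify a model artifact against a known-good digest before loading it. The sketch below uses only the Python standard library. The function names are hypothetical, and it assumes the expected SHA-256 value was recorded at release time through a trusted channel; it illustrates a single check, not a complete MLSecOps pipeline.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file matches the digest recorded at release."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison of the two hex strings.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A deployment step could refuse to load any model for which `verify_model_artifact` returns `False` and raise an alert for the monitoring side to pick up.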

From Human to Machines: Redefining Identity Security in the Age of Automation

In the past, identity security concentrated primarily on human users

(employees, substitute workers, and collaborators) who could log into the

company’s systems. Protection typically rested on password

policies, multi-factor authentication, and periodic access

reviews. With the faster pace

of automation, this approach is increasingly insufficient. There is a

significant rise in identities tied to cloud

workloads, APIs, automation scripts, and IoT devices, creating a security gap

so large that these non-human entities are now regarded as the riskiest

identity type. This also does not provide a lot of hope regarding these human

characteristics of the so-called automated devices. ... In the next 12 months,

identity populations are projected to triple, making it more difficult for

Indian organisations to depend on manual identity processes. Automation

platforms have the capability to analyse behavioral patterns and implement

privileged access control and mitigation in real time, all of which are

essential for modern infrastructure management. An integrated approach that

recognises the various forms of identities is more effective than the old,

fragmented approach to identity security.
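As a rough illustration of replacing a manual review with an automated one, the sketch below flags non-human identities whose credentials are older than a rotation window. The `Identity` fields, the kind labels, and the 90-day window are assumptions made for this example and do not reflect any specific platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Identity:
    name: str
    kind: str  # assumed labels: "human", "service_account", "api_key", "iot_device"
    credential_issued: datetime


def stale_machine_identities(
    identities: list[Identity],
    now: datetime,
    max_age: timedelta = timedelta(days=90),  # assumed rotation window
) -> list[Identity]:
    """Return non-human identities whose credential is older than max_age."""
    return [
        identity
        for identity in identities
        if identity.kind != "human" and now - identity.credential_issued > max_age
    ]


if __name__ == "__main__":
    now = datetime(2025, 1, 1, tzinfo=timezone.utc)
    inventory = [
        Identity("alice", "human", datetime(2023, 1, 1, tzinfo=timezone.utc)),
        Identity("ci-deploy", "service_account", datetime(2024, 6, 1, tzinfo=timezone.utc)),
        Identity("sensor-17", "api_key", datetime(2024, 12, 1, tzinfo=timezone.utc)),
    ]
    for identity in stale_machine_identities(inventory, now):
        print(f"rotate credential for {identity.name} ({identity.kind})")
```

Keeping human and machine identities in one inventory, as here, is a small-scale version of the integrated approach the article recommends.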

Sustainable Development: Balancing Innovation With Longevity

For platforms, the Twelve-Factor principles provide a blueprint for building

scalable, maintainable and portable applications. By adhering to these

principles, platforms can ensure that applications deployed on them are

well-structured, easy to manage and can be scaled up or down as needed. The

principles promote a clear separation of concerns, making it easier to update

and maintain the platform and the applications running on it. This translates

to increased agility, reduced risk and improved overall sustainability of the

platform and the software ecosystem it supports. Adapting Twelve-Factor

for modern architectures requires careful consideration of containerization,

orchestration and serverless technologies. ... Sustainable software

development is not just a technical discipline; it’s a mindset. It requires a

commitment to building systems that are not only functional but also

maintainable, scalable and adaptable. By embracing these principles and

practices, developers and organizations can create software that delivers

value over the long term, balancing the need for innovation with the

imperative of longevity. Focus on building a culture that values quality and

maintainability, and invest in the tools and processes that support

sustainable software development.
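One of the Twelve-Factor principles referenced above is to store configuration in the environment rather than in the code. A minimal sketch of that idea follows; the variable names `DATABASE_URL`, `PORT`, and `DEBUG`, along with their defaults, are assumptions chosen for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    port: int
    debug: bool


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Build settings from environment variables (names are illustrative)."""
    return Settings(
        database_url=env["DATABASE_URL"],           # required; KeyError if missing
        port=int(env.get("PORT", "8080")),          # assumed default port
        debug=env.get("DEBUG", "false").lower() == "true",
    )


if __name__ == "__main__":
    # Passing a plain dict keeps the example independent of the real environment.
    settings = load_settings({"DATABASE_URL": "postgres://localhost/app", "DEBUG": "true"})
    print(settings)
```

Because the same build reads different values in each environment, the application stays portable across containers, orchestrators, and serverless runtimes without code changes.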

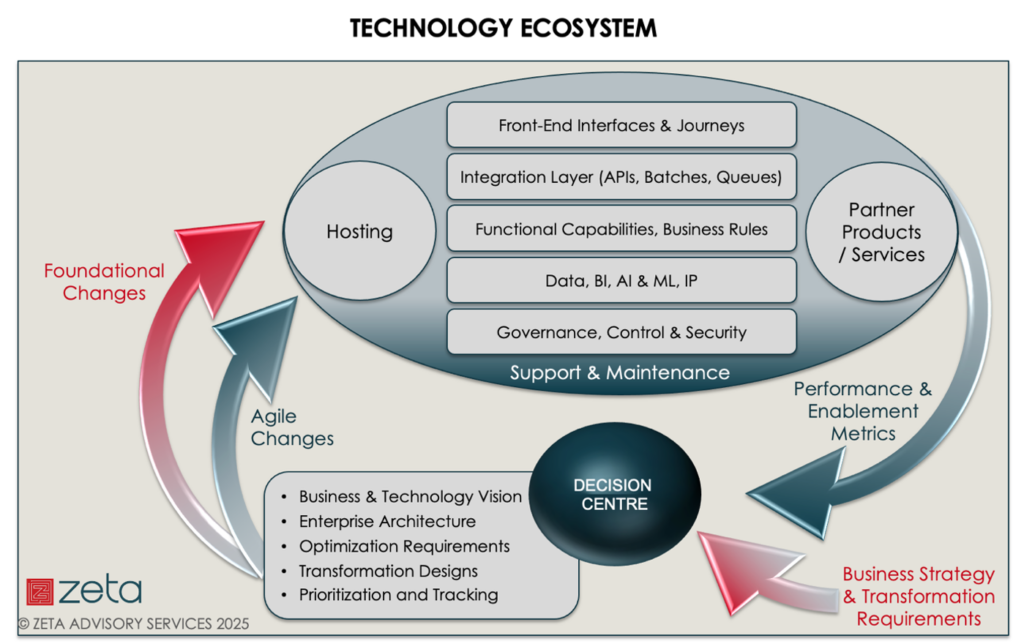

Four Criteria for Creating and Maintaining ‘FLOW’ in Architectures

Vertical alignment is required to transport information within the different

layers of the architecture – it needs to move through all areas of the

organization and be stored for future reference. The movement of information

is usually achieved through API integration or file sharing. The design of

seamless data-sharing activities can be complicated where data structure and

stature are not formally managed ... The current trends of using SaaS

solutions and moving to the cloud have made the technology landscape’s

maintenance and risk management extremely difficult. There is no complete

control over the performance of the end-to-end landscape. Any of the parties

can change their solutions at any point, and those changes can have various

impacts – which can be tested if known but which often slip in under the

radar. ... Businesses must survive in very competitive environments and,

therefore, need to frequently update their business models and operating

models (people and process structures). Ideally, updates would be planned

according to a well-defined strategy – serving as the focus for

transformation. However, in today’s agile world, these change requirements

originate mainly from short-term goals with poorly defined requirements,

enabled via hot-fix solutions – the long-term impact of such behaviour should

be known to all architects.
