A new era of DevOps, powered by machine learning

While programming languages have evolved tremendously, at their core they all

still have one major thing in common: having a computer accomplish a goal in the

most efficient and error-free way possible. Modern languages have made

development easier in many ways, but not a lot has changed in how we actually

inspect the individual lines of code to make them error free. And even less has

been done to keep your when it comes to improving code quality that improves

performance and reduces operational cost. Where build and release schedules once

slowed down the time it took developers to ship new features, the cloud has

turbo charged this process by providing a step function increase in speed to

build, test, and deploy code. New features are now delivered in hours (instead

of months or years) and are in the hands of end users as soon as they are ready.

Much of this is made possible through a new paradigm in how IT and software

development teams collaboratively interact and build best practices: DevOps.

Although DevOps technology has evolved dramatically over the last 5 years, it is

still challenging.

Productizing Machine Learning Models

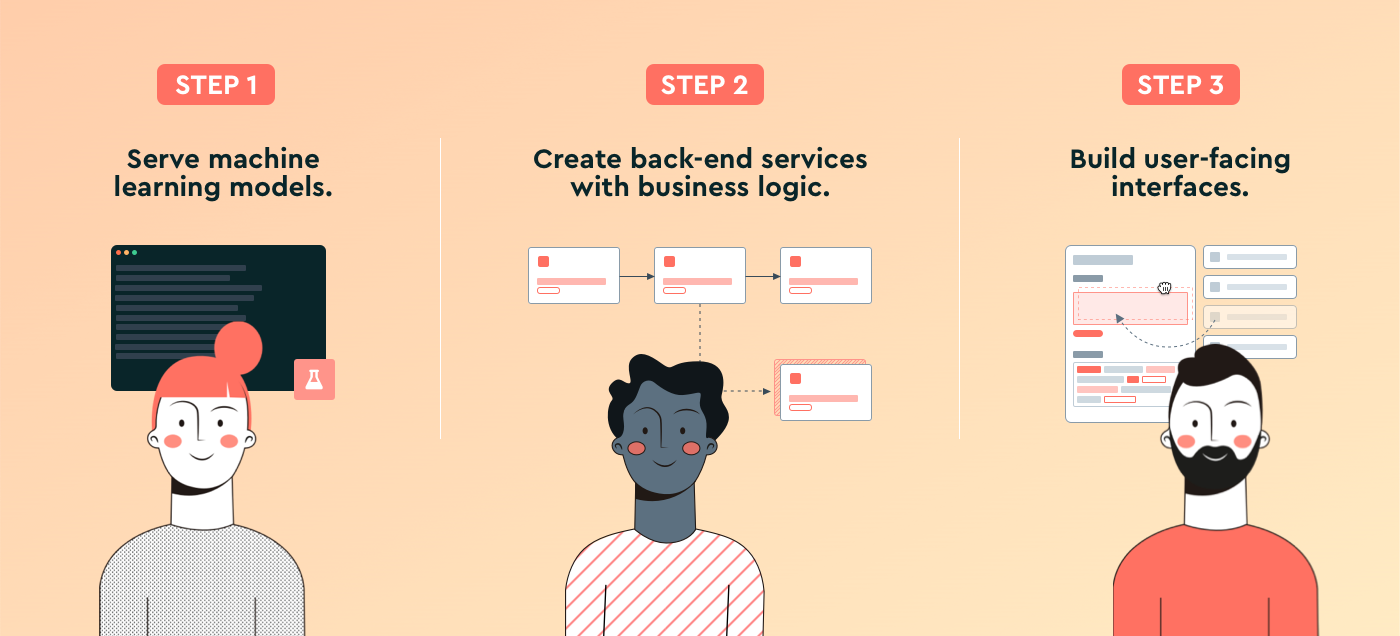

Typically, there are three distinct but interconnected steps towards

productizing an existing model: Serving the models; Writing the

application’s business logic and serving it behind an API; and Building the

user interface that interacts with the above APIs. Today, the first two steps

require a combination of DevOps and back-end engineering skills (e.g.

“Dockerizing” code, running a Kubernetes cluster if needed, standing up web

services…). The last step—building out an interface with which end users can

actually interact—requires front-end engineering skills. The range of skills

necessary means that feedback loops are almost impossible to establish and that

it takes too much time to get machine learning into usable products. Our team

experienced this pain first-hand as data scientists and engineers; so, we built

BaseTen. ... Oftentimes, serving models requires more than just calling it as an

API. For instance, there may be pre- and/or post-processing steps, or business

logic may need to be executed after the model is called. To do this, users can

write Python code in BaseTen and it will be wrapped in an API and served—no need

to worry about Kubernetes, Docker, and Flask.

The timeline for quantum computing is getting shorter

Financial traders rely heavily on computer financial simulations for making

buying and selling decisions. Specifically, “Monte Carlo” simulations are used

to assess risk and simulate prices for a wide range of financial instruments.

These simulations also can be used in corporate finance and for portfolio

management. But in a digital world where other industries routinely leverage

real-time data, financial traders are working with the digital equivalent of the

Pony Express. That’s because Monte Carlo simulations involve such an insanely

large number of complex calculations that they consume more time and

computational resources than a 14-team, two-quarterback online fantasy football

league with Superflex position. Consequently, financial calculations using Monte

Carlo methods typically are made once a day. While that might be fine in the

relatively tranquil bond market, traders trying to navigate more volatile

markets are at a disadvantage because they must rely on old data. If only there

were a way to accelerate Monte Carlo simulations for the benefit of our

lamentably ladened financial traders!

Pandemic tech use heightens consumer privacy fears

With user data the lifeblood of online platforms and digital brands, Marx said

there were clear lessons for tech companies to learn in the post-pandemic world.

Looking ahead, many study respondents agreed they would prefer to engage with

brands that made it easier for them to control their data, up on previous years.

Others called out “creepy” behaviour such as personalised offers or adverts that

stalk people around the internet based on their browsing habits, and many also

felt they wanted to see more evidence of appropriate data governance. Those

organisations that can successfully adapt to meet these expectations might find

they have a competitive advantage in years to come, suggested Marx. And

consumers already appear to be sending them a message that the issue needs to be

taken seriously, with over a third of respondents now rejecting website cookies

or unsubscribing from mailing lists, and just under a third switching on

incognito web browsing. Notably, in South Korea, many respondents said that

having multiple online personas for different services was a good way to manage

their privacy, raising concerns about data accuracy and the quality of insights

that can be derived from it.

Why great leaders always make time for their people

When people can’t find you, they aren’t getting the information they need to do

their job well. They waste time just trying to get your time. They may worry

that, when they do find you, because you’re so busy, you’ll be brittle or angry.

The whole organization may even be working around the assumption that you have

no bandwidth. The sad truth, however, is that when you are unavailable, it’s

also you who is not getting the message. You’re not picking up vital

information, feedback, and early warning signs. You’re not hearing the diverse

perspectives and eccentric ideas that only manifest in unpredictable,

uncontrolled, or unscheduled situations—so, exactly those times you don’t have

time for. And you’re not participating in the relaxed, social interactions that

build connection and cohesion in your organization. So, though you may be busy

doing lots of important stuff, your finger is off the pulse. But imagine being a

leader who does have time, and how this freeing up of resources changes a

leader’s influence on everyone below them. Great leaders know that being

available actually saves time. A leader who has time would not use “busy” as an

excuse. Indeed, you would take responsibility for time.

The road to successful change is lined with trade-offs

Leaders should borrow an important concept from the project management world: Go

slow to go fast. There is often a rush to dive in at the beginning of a project,

to start getting things done quickly and to feel a sense of accomplishment. This

desire backfires when stakeholders are overlooked, plans are not validated, and

critical conversations are ignored. Instead, project managers are advised to go

slow — to do the work needed up front to develop momentum and gain speed later

in the project. The same idea helps reframe notions about how to lead

organizational change successfully. Instead of doing the conceptual work quickly

and alone, leaders must slow down the initial planning stages, resist the

temptation and endorphin rush of being a “heroic” leader solving the problem,

and engage people in frank conversations about the trade-offs involved in

change. This does not have to take long — even just a few days or weeks. The key

is to build the capacity to think together and to get underlying assumptions out

in the open. Leaders must do more than just get the conversation started. They

also need to keep it going, often in the face of significant challenges.

With smart canvas, Google looks to better connect Workspace apps

The smart chips also connect to Google Drive and Calendar for files and

meetings, respectively. And while the focus of the smart canvas capabilities is

currently around Workspace apps, Google said that it plans to open the APIs for

third-party platforms to integrate, too. “Google didn’t reinvent Docs, Sheets

and Slides: They made it easier to meet while using them — and to integrate

other elements into the Smart Canvas,” said Wayne Kurtzman, a research director

at IDC. “Google seemingly focused on creating a single pane of glass to make

engaging over work easier - without reinventing the proverbial wheel.” The moves

announced this week are part of Google’s drive to integrate its various apps

more tightly; the company rebranded G Suite to Workspace last year. “The idea of

documents, spreadsheets and presentations as separate applications increasingly

feels like an archaic concept that makes much less sense in today’s cloud-based

environment, and this complexity gets in the way of getting things done,” said

Angela Ashenden, a principal analyst at CCS Insight.

Graph databases to map AI in massive exercise in meta-understanding

"It is one of the biggest trends that we're seeing today in AI," Den Hamer said.

"Because of this growing pervasiveness of this fundamental role of graph, we see

that this will lead to composite AI, which is about the notion that graphs

provide a common ground for the culmination, or if you like the composition of

notable existing and new AI techniques together, they'll go well beyond the

current generation of fully data-driven machine learning." Roughly speaking,

graph databases work by storing a thing in a node – say, a person or a company –

and then describing its relationship to other nodes using an edge, to which a

variety of parameters can be attached. ... Meanwhile, graph databases often come

in handy for data scientists, data engineers and subject matter experts trying

to quickly understand how the data is structured, using graph visualisation

techniques to start "identifying the likely most relevant features and input

variables that are needed for the prediction or the categorisation that they're

working on," he added.

Data Sharing Is a Business Necessity to Accelerate Digital Business

Gartner predicts that by 2023, organizations that promote data sharing will

outperform their peers on most business value metrics. Yet, at the same time,

Gartner predicts that through 2022, less than 5% of data-sharing programs will

correctly identify trusted data and locate trusted data sources. “There should

be more collaborative data sharing unless there is a vetted reason not to, as

not sharing data frequently can hamper business outcomes and be detrimental,”

says Clougherty Jones. Many organizations inhibit access to data, preserve data

silos and discourage data sharing. This undermines the efforts to maximize

business and social value from data and analytics — at a time when COVID-19 is

driving demand for data and analytics to unprecedented levels. The traditional

“don’t share data unless” mindset should be replaced with “must share data

unless.” By recasting data sharing as a business necessity, data and analytics

leaders will have access to the right data at the right time, enabling more

robust data and analytics strategies that deliver business benefit and achieve

digital transformation.

How AI could steal your data by ‘lip-reading’ your keystrokes

Today’s CV systems can make incredibly robust inferences with very small amounts

of data. For example, researchers have demonstrated the ability for computers to

authenticate users with nothing but AI-based typing biometrics and psychologists

have developed automated stress detection systems using keystroke analysis.

Researchers are even training AI to mimic human typing so we can develop better

tools to help us with spelling, grammar, and other communication techniques. The

long and short of it is, we’re teaching AI systems to make inferences from our

finger movements that most humans couldn’t. It’s not much of a stretch to

imagine the existence of a system capable of analyzing finger movement and

interpreting it as text in much the same way lip-readers convert mouth movement

into words. We haven’t seen an AI product like this yet, but that doesn’t mean

it’s not already out there. So what’s the worst that could happen? Not too long

ago, before the internet was ubiquitous, “shoulder surfing” was among the

biggest threats faced by people for whom computer security is a big deal.

Basically, the easiest way to steal someone’s password is to watch them type

it.

Quote for the day:

"Distinguished leaders impress, inspire

and invest in other leaders." -- Anyaele Sam Chiyson

No comments:

Post a Comment