How Biometric Identity Will Drive Personal Security In Smart Cities

While smart cities can offer unprecedented convenience in our everyday lives, they also rely on vast networks of data, including personal customer information used to predict our preferences. This has raised concerns about the amount of data that smart systems use and store, and about how well our digital identities are protected. We know that existing credentials, such as passwords and PINs, are no longer secure enough to protect our systems. This matters even more in hyper-connected cities: once a city becomes ‘smart’, its interconnected networks widen and the potential for cyberattacks or data breaches grows. So, as this trend continues, how can we develop smart cities that are both convenient and secure?

To resolve this, providers of smart city networks need to establish a chain of trust in their technology. This is a common process in cybersecurity, in which each component in a network is validated, link by link, back to a secure root. In widely connected networks, this is vital to protect sensitive personal and business data and to ensure consumer trust in the whole system.
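To make the chain-of-trust idea concrete, here is a minimal Python sketch in which each component carries the SHA-256 digest of the next one, and only the first digest is held by the secure root. It assumes a simplified, hash-only scheme: real deployments such as secure boot or certificate chains use digital signatures, and the component names and version strings below are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class Component:
    name: str
    payload: bytes                # the component's code or configuration
    next_expected: Optional[str]  # digest this link vouches for in the next link


def measure(component: Component) -> str:
    """SHA-256 over the payload *and* the digest it vouches for, so neither can change silently."""
    h = hashlib.sha256()
    h.update(len(component.payload).to_bytes(8, "big"))  # length prefix avoids ambiguous concatenation
    h.update(component.payload)
    h.update((component.next_expected or "").encode())
    return h.hexdigest()


def build_chain(parts: list[tuple[str, bytes]]) -> tuple[Optional[str], list[Component]]:
    """Build links from last to first so each one embeds its successor's digest."""
    chain: list[Component] = []
    next_digest: Optional[str] = None
    for name, payload in reversed(parts):
        component = Component(name, payload, next_digest)
        next_digest = measure(component)
        chain.insert(0, component)
    return next_digest, chain  # the first digest is what the secure root stores


def verify_chain(root_digest: Optional[str], chain: list[Component]) -> bool:
    """Walk outward from the root: every link must match what its predecessor vouched for."""
    expected = root_digest
    for component in chain:
        if expected is None or measure(component) != expected:
            return False  # one unverified link breaks trust in everything after it
        expected = component.next_expected
    return expected is None  # the last link must not point at a missing component


root, chain = build_chain([("firmware", b"fw-v1"), ("gateway-os", b"os-v3"), ("sensor-app", b"app-v7")])
print(verify_chain(root, chain))  # True
chain[2].payload = b"app-v7-tampered"
print(verify_chain(root, chain))  # False
```

Changing any payload, or any digest a link vouches for, changes that link's measurement, so verification fails from that point onward.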

Coronavirus challenges remote networking

The security of home Wi-Fi networks is also an issue, Nolle said. IT pros should require workers to submit screenshots of their Wi-Fi configurations so they can validate the encryption in use. "Home workers often bypass a lot of the security built into enterprise locations," he said. Educating new home workers is also important, said Andrew Wertkin, chief strategy officer at DNS software company BlueCat. "There will be remote workers who have not substantially worked from home before, and may or may not understand the implications to security," Wertkin said. "This is especially problematic if the users are accessing the network via personal home devices versus corporate devices." An unexpected increase in remote corporate users on a VPN can also introduce cost challenges. "VPN appliances are expensive, and moving to virtualized environments in the cloud often can turn out to be expensive when you take into account compute cost and per-seat cost," Farmer said. A significant increase in per-seat VPN licenses has likely not been budgeted for.
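As a rough illustration of validating the encryption shown in those screenshots, the sketch below classifies a reported Wi-Fi security mode against an allowlist and a blocklist. The mode labels, both lists and the `review_wifi_mode` function are hypothetical; router menus name these settings inconsistently, so unknown labels are routed to a person instead of being guessed.

```python
def normalize(mode: str) -> str:
    """Case- and whitespace-insensitive form of a mode label transcribed from a screenshot."""
    return " ".join(mode.upper().split())


# Hypothetical policy lists; real router menus label these modes in many different ways.
ACCEPTED = {normalize(m) for m in [
    "WPA3-Personal", "WPA3-Enterprise", "WPA2/WPA3-Personal",
    "WPA2-Personal (AES)", "WPA2-Enterprise",
]}
REJECTED = {normalize(m) for m in [
    "Open", "WEP", "WPA-Personal", "WPA2-Personal (TKIP)",
]}


def review_wifi_mode(reported_mode: str) -> str:
    """Classify the encryption mode a home worker reported."""
    mode = normalize(reported_mode)
    if mode in ACCEPTED:
        return "approved"
    if mode in REJECTED:
        return "rejected"
    return "manual review"  # unknown labels go to a person rather than being guessed


for reported in ["wpa3-personal", "WEP", "WPA2 Mixed Mode"]:
    print(reported, "->", review_wifi_mode(reported))
```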

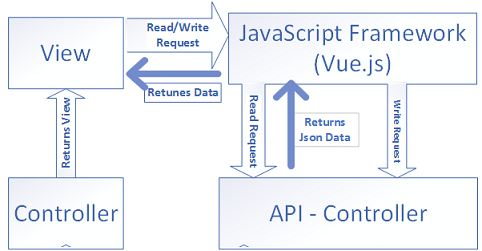

Implementing CQRS Pattern with Vue.js & ASP.NET Core MVC

If you’re a software professional, then you’re familiar with software enhancement and maintenance work. It is the part of the software development life cycle in which you correct faults and remove or enhance existing features. You can minimize software maintenance costs if you use a software architectural pattern, choose the right technologies, stay aware of future industry trends, consider resource reliability and availability now and in the future, apply design patterns and principles in your code, reuse your code, and keep your options open for future extension. In any case, if you use a known software architectural pattern in your application, it will be easy for others to understand the structure and component design of your application. I’ll explain a sample project implemented according to the CQRS pattern using MediatR in ASP.NET Core MVC with Vue.js. ... The main goal of this project is to explain the CQRS architectural pattern. I’m planning to implement a tiny single-page application (SPA). The choice of technology is important, and you should make it according to your requirements.
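The article's stack is C# with MediatR, but the core CQRS idea (commands that change state and queries that only read it, each dispatched through a mediator to a single handler) can be sketched in a few lines of Python. This is an assumed, simplified analogue for illustration, not MediatR's API; `Mediator`, `CreateCustomer`, `GetCustomerName` and the two in-memory stores are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass(frozen=True)
class CreateCustomer:      # command: changes state
    name: str


@dataclass(frozen=True)
class GetCustomerName:     # query: reads state, never changes it
    customer_id: int


class Mediator:
    """Routes each request type to exactly one handler (a simplified, MediatR-like idea)."""

    def __init__(self) -> None:
        self._handlers: dict[type, Callable[[Any], Any]] = {}

    def register(self, request_type: type, handler: Callable[[Any], Any]) -> None:
        if request_type in self._handlers:
            raise ValueError(f"handler already registered for {request_type.__name__}")
        self._handlers[request_type] = handler

    def send(self, request: Any) -> Any:
        handler = self._handlers.get(type(request))
        if handler is None:
            raise LookupError(f"no handler for {type(request).__name__}")
        return handler(request)


# Write side and read side are kept apart; here the read model is updated synchronously.
write_store: dict[int, dict[str, str]] = {}
read_store: dict[int, str] = {}


def handle_create_customer(cmd: CreateCustomer) -> int:
    customer_id = len(write_store) + 1
    write_store[customer_id] = {"name": cmd.name}
    read_store[customer_id] = cmd.name  # project the change into the read model
    return customer_id  # returning only an id is a common pragmatic exception


def handle_get_customer_name(query: GetCustomerName) -> Optional[str]:
    return read_store.get(query.customer_id)


mediator = Mediator()
mediator.register(CreateCustomer, handle_create_customer)
mediator.register(GetCustomerName, handle_get_customer_name)
new_id = mediator.send(CreateCustomer("Ada"))
print(mediator.send(GetCustomerName(new_id)))  # Ada
```

Keeping separate write and read stores is what lets each side be shaped or scaled independently; in a fuller system the projection into `read_store` would often happen asynchronously.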

What does 'network on demand' mean for enterprises?

Network on demand, or on-demand networking, can be delivered either as a managed network service or as cloud-based networking. In a managed network service model, a third party manages, meters and bills the infrastructure. In a cloud-based networking model, a business contracts directly with the cloud provider and makes all the decisions about its network. In either model, on-demand networking shifts customers from a capex model, in which they pay upfront and amortize the cost, to a consumption-based model, in which they pay monthly for what they use. On-demand options can be more flexible, letting businesses scale network bandwidth and provisioning up and down to match business needs. In the on-demand world, the burden shifts away from managing hardware and traffic and toward planning and monitoring service-level agreements and consumption. The most logical customers for on-demand managed networking services are smaller businesses that don't have the internal resources to handle networking adequately.
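The difference between paying upfront and paying for consumption comes down to simple arithmetic. The sketch below compares a straight-line amortized monthly cost with a pay-per-Mbps monthly bill; every figure is made up for illustration, and it ignores financing costs, discounting and contract minimums.

```python
def amortized_monthly_cost(upfront: float, months: int, monthly_upkeep: float) -> float:
    """Capex model: spread the purchase evenly (straight-line) over its useful life."""
    return upfront / months + monthly_upkeep


def consumption_monthly_cost(mbps_used: float, price_per_mbps: float, base_fee: float) -> float:
    """Consumption model: pay each month only for the bandwidth actually provisioned."""
    return base_fee + mbps_used * price_per_mbps


# Hypothetical figures for illustration only.
usage_profile = [400, 400, 900, 300]  # Mbps provisioned in each month
capex = amortized_monthly_cost(upfront=36_000, months=36, monthly_upkeep=200)
for month, mbps in enumerate(usage_profile, start=1):
    opex = consumption_monthly_cost(mbps, price_per_mbps=2.0, base_fee=100)
    cheaper = "consumption" if opex < capex else "capex"
    print(f"month {month}: capex {capex:.0f}, consumption {opex:.0f} -> {cheaper}")
```

With these made-up numbers, the consumption model is cheaper in months 1, 2 and 4, while the owned capacity wins in the peak month 3, which is the trade-off behind sizing purchased hardware for peak demand.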

Data is your best defence against a coronavirus downturn

Remember, good information in its many forms, including analytics, insights, predictions, diagnoses, prescriptions and so forth, is often a lower-cost substitute for inventory, property and even money. Uber and Lyft, for example, have substituted information about who needs a ride and who has a car for fleets of taxis. Airbnb and HomeAway have done the same for bedrooms. Even most traditional retailers and manufacturers have been able to reduce their inventory levels, some to just-in-time inventory, based on detailed, near real-time supply and demand information. Moreover, more than 30% of companies today exchange information they collect or generate for goods and services from others, and this is only one of several ways to monetize your data. Investors themselves even seem to favor organisations that make significant investments in data and analytics: public companies with chief data officers, data governance programs, and data science organizations command a market-to-book valuation nearly twice that of the rest of the market.

Needed: A Cybersecurity Good Samaritan Law

As the US becomes more sophisticated in protecting the digital world, physical systems are becoming a target, and one with an attack surface that's relatively easy to penetrate. Gaining physical access is one of the easiest ways to hack into a network. An intruder could access paper records, install equipment or software on the network, or simply plant covert backdoor systems. The concept of combining physical attacks and cyberattacks to test a system is nothing new. The industry uses the term "red teaming" to describe a method of system testing based on thinking and acting like a bad guy. Red teams help businesses see how break-ins and business disruptions occur, test the strength and durability of their defenses, identify where vulnerabilities exist, and expose weaknesses that could be considered negligent and could contribute to a breach. The risks of conducting red teaming increase as more bad guys hide themselves in cyberspace. Law enforcement and the legal system have the power to interpret the legality of our work.

CIO interview: Malcolm Lowe, head of IT, Transport for Greater Manchester

“The organisation has a lot of data and information,” he says. “It was in lots of pockets; people were using all sorts of different tools and techniques. We recognised there was a great opportunity for the organisation to really embrace analytics.” Lowe says his initial efforts were focused on getting people from across the organisation to understand what opportunities data might provide. He focused on showing business stakeholders what he calls “the art of the possible” through a proof of concept. “We had some spare capacity, we had some spare licences and we got a couple of data engineers to create an alpha,” he says. “I’ve got some bright people in my team. I tasked them to get as much data as they could from across the organisation for a single month. We put that data into an Azure SQL Server Data Warehouse and put Power BI over the top of it.

“We found a couple of use cases across the organisation for people who were really interested in our ideas. We built something for them, they got to use it and they really liked it. I’m a big believer in people seeing something tangible...”

What is natural language processing? The business benefits of NLP explained

Natural language processing (NLP) is the branch of artificial intelligence (AI) that deals with communication: how can a computer be programmed to understand, process, and generate language just as a person does? While the term originally referred to a system’s ability to read, it has since become a colloquialism for all computational linguistics. Subcategories include natural language generation (NLG), a computer’s ability to create communication of its own, and natural language understanding (NLU), the ability to understand slang, mispronunciations, misspellings, and other variants in language. ... Machine translation is one of the better-known NLP applications, but it’s not the most commonly used. Search is. Every time you look something up in Google or Bing, you’re feeding data into the system. When you click on a search result, the system takes this as confirmation that the results it found are right and uses this information to improve future searches. Chatbots work the same way: they integrate with Slack, Microsoft Messenger, and other chat programs, where they read the language you use and then turn on when you type a trigger phrase. Voice assistants such as Siri and Alexa also kick into gear when they hear phrases like “Hey, Alexa.”
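The click-feedback loop described above can be illustrated with a toy re-ranker that blends a base relevance score with a smoothed click-through rate. This is a hypothetical sketch, not how Google or Bing actually rank results; production systems use many more signals and correct for biases such as users favoring top-ranked results. `ClickFeedbackRanker`, its `weight` and `prior_impressions` parameters, and the document IDs are all invented for the example.

```python
from collections import defaultdict
from typing import Optional


class ClickFeedbackRanker:
    """Toy re-ranker that blends a base relevance score with a smoothed click-through rate."""

    def __init__(self, weight: float = 0.5, prior_impressions: int = 10) -> None:
        self.weight = weight            # how much click feedback counts vs. base relevance
        self.prior = prior_impressions  # pulls the rate toward zero until impressions accumulate
        self.impressions: dict[tuple[str, str], int] = defaultdict(int)
        self.clicks: dict[tuple[str, str], int] = defaultdict(int)

    def record(self, query: str, shown: list[str], clicked: Optional[str]) -> None:
        """Log one search: every shown result gets an impression, the clicked one a click."""
        for doc in shown:
            self.impressions[(query, doc)] += 1
        if clicked is not None:
            self.clicks[(query, clicked)] += 1

    def rank(self, query: str, base_scores: dict[str, float]) -> list[str]:
        def score(doc: str) -> float:
            key = (query, doc)
            ctr = self.clicks[key] / (self.impressions[key] + self.prior)
            return (1 - self.weight) * base_scores[doc] + self.weight * ctr
        return sorted(base_scores, key=score, reverse=True)


ranker = ClickFeedbackRanker()
base = {"doc-a": 0.60, "doc-b": 0.55}
print(ranker.rank("smart city", base))  # ['doc-a', 'doc-b']
for _ in range(20):
    ranker.record("smart city", shown=["doc-a", "doc-b"], clicked="doc-b")
print(ranker.rank("smart city", base))  # ['doc-b', 'doc-a']
```

Repeated clicks on `doc-b` for this query eventually outweigh its slightly lower base score, while other queries are unaffected because feedback is keyed by query.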

Keeping machine learning algorithms humble and honest in the ‘ethics-first’ era

Removing the complexity of the data science procedure will help users discover and address bias faster – and better understand the expected accuracy and outcomes of deploying a particular model. Machine learning tools with built-in explainability allow users to demonstrate the reasoning behind applying ML to tackle a specific problem, and ultimately to justify the outcome. First steps towards this explainability would be features in the ML tool that enable visual inspection of data – with the platform alerting users to potential bias during preparation – and metrics on model accuracy and health, including the ability to visualise what the model is doing. Beyond this, ML platforms can take transparency further by introducing full user visibility, tracking each step through a consistent audit trail. This records how and when data sets have been imported, prepared and manipulated during the data science process. It also helps ensure compliance with national and industry regulations – such as the European Union’s GDPR ‘right to explanation’ clause – and helps effectively demonstrate transparency to consumers.
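A platform-level audit trail can be approximated with a decorator that records each data-preparation step as it runs. This minimal sketch assumes rows are plain dictionaries and adds a crude bias check that flags a step if any group's share of the data shifts by more than a threshold; `audited`, `SENSITIVE_ATTRIBUTE`, the 10-point threshold and the sample data are hypothetical, not features of any particular ML tool.

```python
import functools
from collections import Counter
from datetime import datetime, timezone
from typing import Callable

Rows = list[dict]
AUDIT_LOG: list[dict] = []
SENSITIVE_ATTRIBUTE = "group"  # hypothetical column to watch for bias
DRIFT_THRESHOLD = 0.10         # hypothetical: flag if any group's share moves by >10 points


def group_shares(rows: Rows) -> dict:
    """Fraction of rows belonging to each value of the sensitive attribute."""
    counts = Counter(row.get(SENSITIVE_ATTRIBUTE) for row in rows)
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()} if total else {}


def audited(step: Callable[[Rows], Rows]) -> Callable[[Rows], Rows]:
    """Record when each preparation step ran, how it changed the data, and possible bias."""
    @functools.wraps(step)
    def wrapper(rows: Rows) -> Rows:
        result = step(rows)
        before, after = group_shares(rows), group_shares(result)
        drift = max(
            (abs(after.get(g, 0.0) - before.get(g, 0.0)) for g in before.keys() | after.keys()),
            default=0.0,
        )
        AUDIT_LOG.append({
            "step": step.__name__,
            "at": datetime.now(timezone.utc).isoformat(),
            "rows_in": len(rows),
            "rows_out": len(result),
            "bias_alert": drift > DRIFT_THRESHOLD,
        })
        return result
    return wrapper


@audited
def import_rows(rows: Rows) -> Rows:
    return list(rows)


@audited
def drop_missing_income(rows: Rows) -> Rows:
    return [row for row in rows if row.get("income") is not None]


raw = [
    {"group": "A", "income": 50}, {"group": "A", "income": 42},
    {"group": "B", "income": None}, {"group": "B", "income": 38},
]
prepared = drop_missing_income(import_rows(raw))
for entry in AUDIT_LOG:
    print(entry["step"], entry["rows_in"], entry["rows_out"], entry["bias_alert"])
# import_rows 4 4 False
# drop_missing_income 4 3 True
```

Here, dropping rows with missing income removes a row from group B only, so the second step is flagged for review.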

Decipher the true meaning of cloud native

The definition of cloud native has become more confusing as organizations and IT professionals incorporate the term into their everyday usage, even though they define it in different ways. The most oft-cited definition is the murky one that the Cloud Native Computing Foundation (CNCF) introduced in 2018. That definition mostly reiterates the points the CNCF made when it launched in 2015, though it does emphasize some concepts not included at the launch, such as automation, observability and resiliency. Still, the current CNCF definition doesn't explain exactly what counts as cloud native and what doesn't. That is, unless you think any type of application that uses containers and microservices or relies on automation or resiliency counts as cloud native. ... At a high level, certain technologies, like containers and microservices, form an important part of what many people consider to be cloud native. Yet there is virtually no specific guidance from any organization on how, exactly, these technologies need to be used for an app to meet the requirements of the cloud native definition.

Quote for the day:

"What great leaders have in common is that each truly knows his or her strengths - and can call on the right strength at the right time." -- Tom Rath
