3 leadership lessons from Log4Shell

APIs add to an organization’s attack surface, so it’s important to know where they are used. Gartner estimates that roughly 90% of web apps will soon have more of their exposed attack surface in APIs than in their user interfaces. Indeed, in 2021, malicious API traffic grew by nearly 350%. Despite these trends, API use only continues to grow. Gone are the days of monolithic applications: modern enterprise web applications are built from loosely coupled services that communicate through a multitude of APIs, and each component is a target for attackers if left unchecked. Pair that widened attack surface with the explosive growth of APIs, and the need for strong API security is clear.

Organizations that want to eliminate potential weak spots before they become problems need to cover their entire attack surface with automated, accurate scans through both user interfaces and APIs. Weak spots left unaddressed add to security debt, which, put simply, is an organization’s total inventory of unresolved security issues. These issues have a wide variety of sources, including knowledge gaps, inadequate tooling or corners cut during testing in the race to market.
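
Knowing where APIs are used starts with an inventory. One simple check, sketched here as an illustration rather than as any vendor's scanner, compares the request paths actually observed in traffic against the routes an organization has documented. Anything that matches no documented route is a candidate "shadow" API. The route-template syntax (`{id}` for a path parameter) and the sample paths are assumptions.

```python
def path_matches(template: str, path: str) -> bool:
    """True if a concrete request path fits a documented route template.

    Segments written as {name} in the template match any single path segment.
    """
    t_parts = template.strip("/").split("/")
    p_parts = path.split("?", 1)[0].strip("/").split("/")  # drop any query string
    if len(t_parts) != len(p_parts):
        return False
    return all(
        (t.startswith("{") and t.endswith("}")) or t == p
        for t, p in zip(t_parts, p_parts)
    )


def undocumented_endpoints(observed: list[str], documented: list[str]) -> list[str]:
    """Observed paths that match no documented template (candidate shadow APIs)."""
    return sorted(
        {p for p in observed if not any(path_matches(t, p) for t in documented)}
    )


documented = ["/users/{id}", "/orders"]
observed = ["/users/42", "/orders?page=2", "/internal/debug", "/users/42/tokens"]
print(undocumented_endpoints(observed, documented))
# ['/internal/debug', '/users/42/tokens']
```

A real inventory would be fed from gateway or proxy logs and an API specification rather than hard-coded lists. The comparison step stays the same.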

Increasing security for single page applications (SPAs)

First and foremost, the frontend code operates in an insecure environment: a user’s browser. SPAs often hold a refresh token that grants offline access to a user’s resources and can obtain new access tokens without any interaction from the user. Because these credentials are readable by the SPA, they are vulnerable to cross-site scripting (XSS) attacks. These attacks can have dangerous repercussions, such as attackers gaining access to users’ personal data and to functionality not normally accessible through the user interface.

As the online data pool grows and hackers become more sophisticated, security must be taken seriously to protect customers’ information and businesses’ reputations. However, designing security solutions for SPAs is no easy feat. Alongside the strongest browser security and simple, reliable code, software developers must consider how to deliver the best user experience, and then wrap all of this into a solution that can be deployed anywhere. The SPA’s web content can be deployed to many global locations via a Content Delivery Network (CDN), which places it geographically close to all users so that downloads are faster.
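
The excerpt does not prescribe a fix for the refresh-token exposure described above. One widely used mitigation is to keep that token out of JavaScript's reach. A server-side component, such as a backend-for-frontend, issues the token as an `HttpOnly` cookie that the browser sends automatically but page scripts cannot read. Below is a minimal sketch using Python's standard `http.cookies` module. The cookie name, lifetime and the `/auth/refresh` path are hypothetical choices for illustration.

```python
from http.cookies import SimpleCookie


def refresh_token_set_cookie(token: str, max_age: int = 86_400,
                             path: str = "/auth/refresh") -> str:
    """Build a Set-Cookie header value that hides the refresh token from scripts.

    HttpOnly: document.cookie cannot read it, so injected script can't steal it.
    Secure:   the browser only sends it over HTTPS.
    SameSite: the browser does not attach it to cross-site requests.
    Path:     it is only sent to the (hypothetical) token-refresh endpoint.
    """
    cookie = SimpleCookie()
    cookie["refresh_token"] = token
    morsel = cookie["refresh_token"]
    morsel["httponly"] = True
    morsel["secure"] = True
    morsel["samesite"] = "Strict"
    morsel["path"] = path
    morsel["max-age"] = max_age
    return morsel.OutputString()


print("Set-Cookie:", refresh_token_set_cookie("abc123"))
```

This does not prevent XSS itself, since injected script can still act within the page while it runs. What it removes is the attacker's ability to walk away with a long-lived credential.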

AI and CSR can strengthen anti-corruption efforts

In addition to corporate social responsibility (CSR), there has been much excitement about the future of AI in anti-corruption work. AI has increasingly become a part of our daily lives, from digital assistants like Siri and Alexa to self-driving cars like Teslas and ride-hailing applications like Uber. Given that AI has proved useful in so many ventures, anti-corruption scholars are eager to apply it to their work. In fact, AI has been described as “the next frontier in anti-corruption.” ...

However, discussions of AI and anti-corruption have so far mostly focused on governmental efforts to address corporate corruption, not on companies using AI to mitigate corporate corruption. This is despite the fact that many companies already use AI to maximize profit. In the corporate anti-corruption context, AI can provide companies with information on proposed investment destinations or transactions, help detect corruption risks in such ventures and improve due diligence processes. AI can also provide more information for yearly anti-corruption policy reviews and assist in designing training based on AI analyses of company processes, reports and operations.

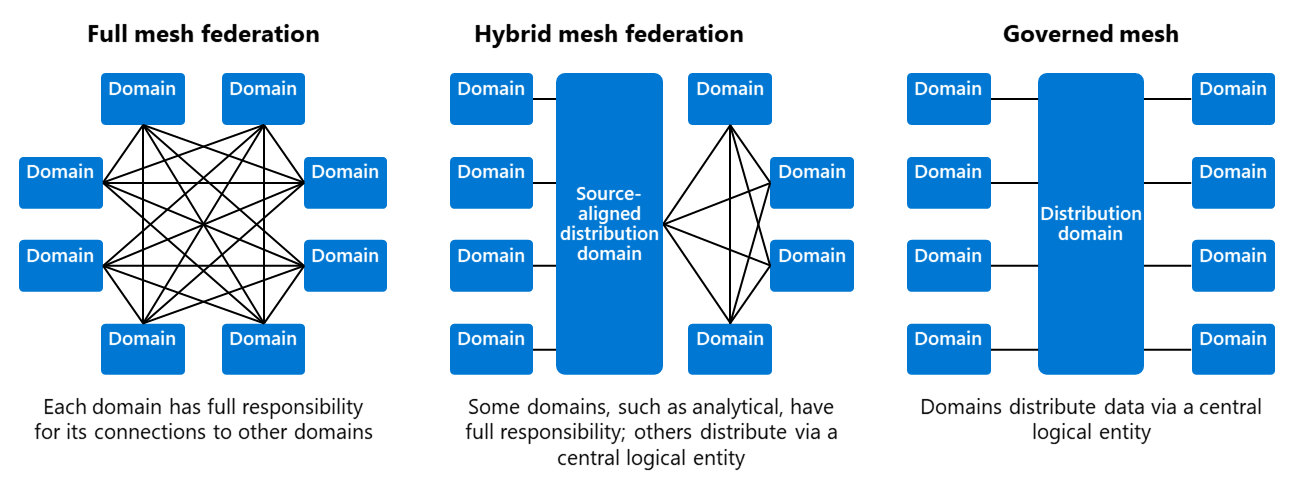

Data Mesh: The Balancing Act of Centralization and Decentralization

Another concept that resonates well is data products. Managing and providing data as a product isn't the extreme of dumping raw data, which would require every consuming team to repeat the same work on data quality and compatibility issues. Nor is it the other extreme of building an integration layer that uses one (enterprise) canonical data model with strict conformance from all teams. Data product design is a nuanced approach: taking data from your (complex) operational and analytical systems and turning it into read-optimized versions for organization-wide consumption. This approach comes with many best practices, such as:

- aligning your data products with the language of your domain
- setting clear interoperability standards for fast consumption
- capturing data directly from its source of creation
- addressing time-variant and non-volatile concerns
- encapsulating metadata for security
- ensuring discoverability

You can find more of these best practices here.
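
To make the idea concrete, here is a minimal sketch of a data product. Raw operational order lines are reshaped into a read-optimized, domain-named dataset that carries its own schema contract and security classification, and is then registered in a catalog so consumers can discover it. The `DataProduct` fields, the product name and the in-memory `CATALOG` are hypothetical illustrations, not part of any data mesh standard.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor for a read-optimized, domain-owned data product."""
    name: str                    # named in the domain's own language
    owner: str                   # team accountable for the product
    schema: dict[str, type]      # interoperability contract for consumers
    classification: str          # security metadata travelling with the data
    records: tuple[dict[str, Any], ...] = ()

    def conforms(self) -> bool:
        """Check every record has exactly the schema's fields, with the right types."""
        return all(
            set(r) == set(self.schema)
            and all(isinstance(r[k], t) for k, t in self.schema.items())
            for r in self.records
        )


def completed_orders_product(order_lines: list[dict[str, Any]]) -> DataProduct:
    """Turn raw operational order lines into one row per completed order."""
    totals: dict[str, int] = {}
    for line in order_lines:
        if line["status"] != "COMPLETED":
            continue  # consumers of this product only need finished orders
        cents = line["qty"] * line["unit_price_cents"]
        totals[line["order_id"]] = totals.get(line["order_id"], 0) + cents
    return DataProduct(
        name="sales.completed_orders",
        owner="sales-domain-team",
        schema={"order_id": str, "total_cents": int},
        classification="internal",
        records=tuple(
            {"order_id": oid, "total_cents": total}
            for oid, total in sorted(totals.items())
        ),
    )


# A minimal in-memory catalog makes products discoverable by name.
CATALOG: dict[str, DataProduct] = {}


def publish(product: DataProduct) -> None:
    """Register a product only if it honours its own schema contract."""
    if not product.conforms():
        raise ValueError(f"{product.name} violates its schema")
    CATALOG[product.name] = product
```

The heavy lifting (filtering, aggregating, naming) happens once on the producing side. Consumers therefore read a ready-to-use shape instead of each re-deriving it from raw data.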

Role of the Metaverse, AI and digitalization — Are brands and consumers prepared for the new era?

The metaverse has a mostly positive impact on brands, but there are still some loopholes that worry them. For instance, the French champagne Armand de Brignac recently filed trademark applications to register the appearance of its gold bottle packaging in virtual reality, augmented reality, video, social media and the web. Like Armand de Brignac, many brands have established identities built on their products and packaging. Because this alternate reality is fairly new territory for brands, it is difficult for them to gauge whether a product or its packaging has distinctiveness outside the metaverse. Even if it does, it is unclear whether those rights will be sufficient to claim infringement inside the metaverse. The metaverse also brings privacy and security risks to light: because it is an online-enabled space, consumers and brands may face new and unknown privacy and authenticity issues. The rise of the metaverse mirrors that of the internet. Former Amazon strategist Matthew Ball estimates that by 2027 every company will be a gaming company, implying that the metaverse will soon become a normal part of people’s lives.

Data Protection In The EU: New GDPR Right Of Access Guidelines

The right of access has a broad scope. According to the European Data Protection Board (EDPB), in addition to basic personal data it also includes, for example, subjective notes made during a job application and a history of internet and search engine activity. Unless the data subject explicitly states otherwise, a request must be understood to cover all personal data concerning them, although the controller may ask the data subject to specify the request if it processes a large amount of data. This applies to each request: if a data subject makes more than one request, it is not sufficient to provide access only to the changes since the last one. Even data that may have been processed incorrectly or unlawfully should be provided. Data that has already been deleted, for example in accordance with a retention policy, and is therefore no longer available to the controller, does not need to be provided. In practice, the controller will have to search all IT systems and other archives for personal data, using search criteria that reflect the way the information is structured (for example, name and customer or employee number).
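
The search obligation described above can be sketched as code, purely as an illustration of the guidance and not as a compliance tool. Each system is queried by the identifier its data is organized around. The full result set is rebuilt on every request rather than sent as a delta, and records are not filtered by whether they were processed lawfully. The system names, field names and the `SEARCH_FIELD` mapping are hypothetical.

```python
from typing import Any

# Hypothetical mapping: the identifier each system's records are organized by.
SEARCH_FIELD = {"crm": "customer_no", "hr": "employee_no", "mail_archive": "name"}


def access_request_results(
    systems: dict[str, list[dict[str, Any]]],
    subject: dict[str, str],
) -> tuple[dict[str, list[dict[str, Any]]], list[str]]:
    """Collect every record about a data subject across all systems.

    Returns (found, unsearched). Systems with no usable identifier are listed
    in `unsearched` so they can be searched by other means, not skipped.
    The full result is built on every call (never a delta since an earlier
    request), and records are not filtered on how they were processed.
    Deleted data is absent simply because it is no longer held.
    """
    found: dict[str, list[dict[str, Any]]] = {}
    unsearched: list[str] = []
    for system, records in systems.items():
        field = SEARCH_FIELD.get(system)
        value = subject.get(field) if field else None
        if value is None:
            unsearched.append(system)
            continue
        found[system] = [r for r in records if r.get(field) == value]
    return found, unsearched
```

Returning the unsearched systems explicitly reflects the point that the controller must cover *all* systems and archives. A system that cannot be queried by the available identifiers still needs attention.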

Even 'Perfect' APIs Can Be Abused

Even organizations that bring a proactive focus to application security tend to put more emphasis on protecting APIs created for web and mobile applications. In these cases, many organizations incorrectly assume that their web application firewalls (WAFs) will bear much of the load of securing this type of API usage. But the biggest API protection gap, even in sophisticated organizations, is the protection of APIs that are open to partners. These APIs are ripe for abuse. Even if they are perfectly written and have no vulnerabilities, they can be abused in unanticipated ways to expose the core business functions and data of the organizations that share them. Perhaps the best example is the Cambridge Analytica (CA) scandal that rocked Facebook in 2018. As a brief refresher, CA exploited Facebook's open API to gather extensive data about at least 87 million users. It did so through a Facebook quiz app that exploited a permissive setting allowing third-party apps to collect information about the quiz-taker as well as all of their friends' interests, location data and more.
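
The core of this kind of abuse is fan-out. A single grant of consent unlocks data about people who never consented. The toy model below (hypothetical users and policy flag, not Facebook's actual permission model) compares what a third-party app can read under a permissive "friends' data too" setting versus a consent-only setting.

```python
def readable_profiles(
    consenting_users: set[str],
    friends: dict[str, set[str]],
    include_friends: bool,
) -> set[str]:
    """Profiles a third-party app can read under a given platform policy.

    include_friends=True models a permissive setting in which one user's
    consent also exposes that user's friends; False limits access to the
    users who actually consented.
    """
    readable = set(consenting_users)
    if include_friends:
        for user in consenting_users:
            readable |= friends.get(user, set())
    return readable


friends = {"alice": {"bob", "carol"}, "dave": {"carol", "erin"}}
print(sorted(readable_profiles({"alice", "dave"}, friends, include_friends=False)))
# ['alice', 'dave']
print(sorted(readable_profiles({"alice", "dave"}, friends, include_friends=True)))
# ['alice', 'bob', 'carol', 'dave', 'erin']
```

Nothing in either function is a "bug". The API behaves exactly as specified, which is why this class of exposure slips past vulnerability scanners and WAFs.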

Five cloud security risks your business needs to address

“Misconfigurations remain a top risk for cloud applications and data,” says Paul Bischoff, privacy advocate and editor at Comparitech, a website that rates technologies on their cybersecurity. A misconfiguration happens when an IT team inadvertently leaves the door open for hackers by, say, failing to change a default security setting. This is often down to human error or a misunderstanding of how a firm’s systems operate and interact. If misconfigurations happen on a network that isn't connected to the cloud, they’re self-contained and potentially accessible only to those in the physical workplace. But once your data is in the cloud, “it is subject to someone else’s security. You do not have any direct control or ability to test it,” notes Steven Furnell, professor of cybersecurity at the University of Nottingham. “This means trusting another party’s measures, so look for the appropriate assurances from them rather than making assumptions.”
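
Because a classic misconfiguration is a default nobody changed, one cheap safeguard is an automated audit that flags settings still at their insecure defaults. The sketch below uses hypothetical setting names and defaults; real cloud providers expose different keys, and dedicated posture-management tools go much further.

```python
from typing import Any

# Hypothetical setting names, each mapped to its insecure default and the risk.
RISKY_DEFAULTS: dict[str, tuple[Any, str]] = {
    "public_read": (True, "storage is world-readable"),
    "admin_password": ("admin", "default admin password was never changed"),
    "encryption_at_rest": (False, "data at rest is not encrypted"),
    "mfa_required": (False, "multi-factor authentication is not enforced"),
}


def audit(settings: dict[str, Any]) -> list[str]:
    """Return a finding for every setting still at its insecure default.

    A missing key is treated as 'left at the default', since that is exactly
    the failure mode described: nobody changed it.
    """
    findings = []
    for key, (insecure_default, reason) in RISKY_DEFAULTS.items():
        if settings.get(key, insecure_default) == insecure_default:
            findings.append(f"{key}: {reason}")
    return findings


config = {"public_read": False, "admin_password": "admin", "encryption_at_rest": True}
for finding in audit(config):
    print(finding)
```

Treating absent keys as unchanged defaults is a deliberately conservative choice. The audit would rather raise a false alarm than silently trust a setting nobody looked at.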

8 technology trends for innovative leaders in a post-pandemic world

Leaders today face the task of making difficult decisions that can have a profound impact on their workforce and employee wellbeing (although it’s not all grim) in a very uncertain environment. New risks, such as increasingly frequent and costly cyber-attacks, have also emerged alongside the staggering amount of data created on the internet. What our Young Global Leaders know well is that it’s easy to lead when times are good, but real responsibility emerges when you must stand up for what you believe in. Responsible leaders truly shine in times of crisis. With this in mind, we asked eight Young Global Leaders how they will leverage technology and innovate to become better leaders in 2022.

New computational and AI tools are already being used by business leaders to guide strategic decision-making. In the next decade, this software will become more powerful and will be applied in new and different settings. Built upon the mathematics of game theory, these AI tools harness the same computational innovations that power chess engines.
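
One of the classic game-theoretic ideas behind chess engines is game-tree search. Minimax picks the move that is best assuming the opponent also plays optimally, and alpha-beta pruning skips branches that cannot change that choice. Below is a minimal sketch over a toy tree, in which nested lists are positions and numbers are leaf scores from the maximizing player's point of view. The tree format is an assumption for illustration. Real engines add evaluation functions, move ordering and depth limits, and some modern engines rely on other search methods altogether.

```python
import math


def alphabeta(node, maximizing: bool, alpha: float = -math.inf,
              beta: float = math.inf) -> float:
    """Minimax value of `node`, pruning branches that cannot affect the result."""
    if isinstance(node, (int, float)):
        return node  # leaf: a scored end position
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # opponent already has a better option elsewhere
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:
            break  # we already have a better option elsewhere
    return value


def best_move(root) -> int:
    """Index of the root child with the highest minimax value."""
    scores = [alphabeta(child, False) for child in root]
    return max(range(len(scores)), key=scores.__getitem__)


tree = [[3, 5], [6, 9], [1, 2]]  # three candidate moves, two replies each
print(alphabeta(tree, True), best_move(tree))
```

In the toy tree, the opponent answers each move with its lowest-scoring reply (3, 6 and 1), so the second move (index 1) is best, with value 6.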

As cloud costs spiral upward, enterprises turn to a thing called FinOps

Enter FinOps. This practice is intended to help organizations get maximum business value from the cloud "by helping engineering, finance, technology and business teams to collaborate on data-driven spending decisions," according to the FinOps Foundation. (Yes, there's now even an entire foundation devoted to the practice.) In many cases, organizations are practicing FinOps without even calling it that. Respondents to a Flexera survey are actively involved in ongoing usage and cost management for both SaaS (69%) and public cloud IaaS and PaaS (66%). "More and more users are swimming in the FinOps side of the pool, even if they may not know it -- or call it FinOps yet," the survey's authors state. In addition, for the sixth year in a row, "optimizing the existing use of cloud is the top initiative for all respondents, underscoring the need for FinOps teams or similar ways to improve cost savings initiatives," they note. While the survey doesn't explicitly ask about FinOps adoption, the authors also state that some organizations have formed FinOps teams to help evaluate cloud computing metrics and value.
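
A basic building block of data-driven spending decisions is "showback": attributing cloud and SaaS spend to the teams that incur it, so engineering and finance look at the same numbers. Below is a minimal sketch that aggregates billing line items by a `team` tag and keeps untagged spend visible. The line-item shape is a hypothetical simplification; real billing exports use provider-specific field names. Amounts are kept in integer cents to avoid floating-point rounding.

```python
from collections import defaultdict
from typing import Any


def showback(line_items: list[dict[str, Any]]) -> dict[str, int]:
    """Sum spend (in cents) per owning team, largest first, based on a 'team' tag.

    Untagged spend is reported as its own bucket so it can be chased down
    instead of silently disappearing from the totals.
    """
    totals: dict[str, int] = defaultdict(int)
    for item in line_items:
        team = item.get("tags", {}).get("team", "untagged")
        totals[team] += item["cost_cents"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


items = [
    {"service": "compute", "cost_cents": 12_000, "tags": {"team": "search"}},
    {"service": "storage", "cost_cents": 3_000, "tags": {"team": "search"}},
    {"service": "saas-crm", "cost_cents": 9_000, "tags": {"team": "sales"}},
    {"service": "compute", "cost_cents": 4_500},
]
print(showback(items))
# {'search': 15000, 'sales': 9000, 'untagged': 4500}
```

The size of the "untagged" bucket is itself a useful FinOps metric. Spend that cannot be attributed cannot be optimized by anyone in particular.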

Quote for the day:

"The art of leadership is saying no, not yes. It is very easy to say yes." -- Tony Blair
