How the recent pandemic has driven digital transformation in a borderless enterprise

Talking about the biggest innovations in the last year, Harishankar says, “AI/ML, data science and digital core transformation are big areas for most companies. The whole digital core transformation is a big agenda and a lot of that is being run out of India. We are working with other centres as well, but we have both existing talent and a lot of new hires with expertise in this area, particularly around digital core transformation. Therefore I would say that on the front end, in commercial transformation, digital core transformation as well as data science and AI/ML, there is a lot happening in the centre. In the new digital way of working it is very important to position your centre in that manner. We are leading innovation and not just part of it. We are equal partners in innovation with any centre in the world.”

Talking about technologies that can be deployed or exploited from Indian centres, Bannerjee says that once you start to enhance your digital adoption effectively, your store becomes your phone or your PC. You basically have the engineering capabilities to build your front-end channels and the ability to quickly access the throughput.

MLOps Best Practices for Data Scientists

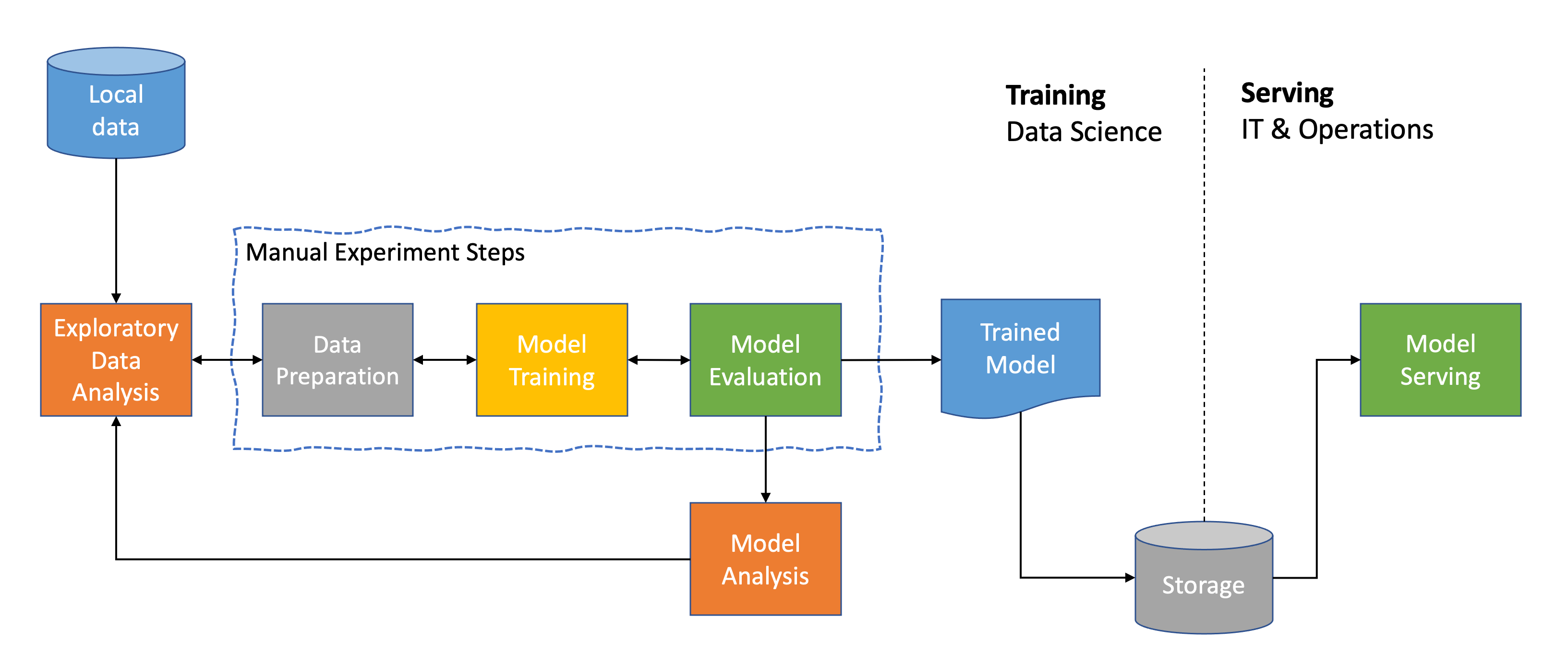

Today, most ML journeys to get a machine learning model into production look something like this. For a data scientist, it starts with an ML use case and a business objective. With the use case at hand, we start gathering and exploring the data that seems relevant from different data sources to understand and assess its quality. ... Once we get a sense of our data, we start crafting and engineering some features we deem interesting for our problem. We then get into the modeling stage and begin tackling some experiments. At this phase, we are manually executing the different experimental steps regularly. For each experiment, we would be doing some data preparation, some feature engineering, and testing. Then we do some model training and hyperparameter tuning on any models or model architectures that we consider particularly promising. Last but not least, we would be evaluating all of the generated models, testing them against a holdout dataset, evaluating the different metrics, looking at performance, and comparing those models with one another to see which one works best or which one yields the highest evaluation metric.
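The manual experiment loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a production MLOps setup: the dataset is synthetic, the model is a hand-written one-feature k-nearest-neighbours classifier, and the names `make_toy_dataset`, `split_holdout`, `run_experiments` and the candidate `k` values are all hypothetical. Each experiment prepares data, "trains" a model with one hyperparameter value, scores it on a holdout set, and the results are then compared to find the best one.

```python
import random
from dataclasses import dataclass


@dataclass
class ExperimentResult:
    """One manually run experiment: its hyperparameters and holdout metric."""
    model_name: str
    params: dict
    holdout_accuracy: float


def make_toy_dataset(n=300, seed=42):
    """Synthetic 1-D binary data (illustrative only): label is 1 when x plus noise exceeds 0.5."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.random()
        label = 1 if x + rng.gauss(0, 0.1) > 0.5 else 0
        rows.append((x, label))
    return rows


def split_holdout(rows, holdout_fraction=0.25, seed=42):
    """Data preparation: shuffle, then set a holdout set aside for evaluation."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - holdout_fraction))
    return shuffled[:cut], shuffled[cut:]


def knn_predict(train, x, k):
    """Majority vote of the k nearest training points on a single feature (k should be odd)."""
    nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
    positives = sum(label for _, label in nearest)
    return 1 if 2 * positives > k else 0


def accuracy(train, rows, k):
    correct = sum(1 for x, label in rows if knn_predict(train, x, k) == label)
    return correct / len(rows)


def run_experiments(candidate_ks=(1, 3, 7, 15)):
    """Run one experiment per hyperparameter value and rank them by holdout accuracy."""
    train, holdout = split_holdout(make_toy_dataset())
    results = [
        ExperimentResult("knn", {"k": k}, accuracy(train, holdout, k))
        for k in candidate_ks
    ]
    # Compare the generated models with one another, best first.
    return sorted(results, key=lambda r: r.holdout_accuracy, reverse=True)


if __name__ == "__main__":
    for result in run_experiments():
        print(result)
```

Because the same holdout set is used both to rank the candidates and to report the winner's score, the winning accuracy is an optimistic estimate; tuning on a separate validation split (or with cross-validation) is a common refinement.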

How Uber’s Michelangelo Contributed To The ML World

The motivation to build Michelangelo came when the team found it excessively difficult to develop and deploy machine learning models at scale. Before Michelangelo, the engineering teams relied mainly on creating separate predictive models or one-off bespoke systems, but such short-term solutions were limited in many respects. Michelangelo is an end-to-end system that standardises workflows and tools across teams so that machine learning models can be built and operated easily at scale. It has now emerged as the de facto machine learning system for Uber engineers and data scientists, with several teams leveraging it to build and deploy models. Michelangelo is built on open-source components such as HDFS, XGBoost, TensorFlow, Cassandra, MLlib, Samza, and Spark. It uses Uber’s data and compute infrastructure, which provides a data lake that stores Uber’s transactional and logged data; Kafka brokers for aggregating logged messages; a Samza streaming compute engine; managed Cassandra clusters; and in-house service provisioning and deployment tools. ... The platform consists of a data lake that is accessed during training and inference.

Review: Group-IB Threat Hunting Framework

Group-IB’s Threat Hunting Framework (THF) is a solution that helps organizations identify their security blind spots and provides a holistic layer of protection for their most critical services in both IT and OT environments. The framework’s objective is to uncover unknown threats and adversaries by detecting anomalous activities and events and correlating them with Group-IB’s Threat Intelligence & Attribution system, which is capable of attributing cybersecurity incidents to specific adversaries. In other words, when you spot a suspicious domain or IP address in your network traffic, with a few clicks you can pivot and uncover what is behind this infrastructure, and view historical evidence of previous malicious activity and available attribution information to help you broaden or quickly close your investigation. THF closely follows the incident response process by having a dedicated component for every step. There are two flavors of THF: the enterprise version, which is tailored for most business organizations that use a standard technology stack, and the industrial version, which is able to analyze industrial-grade protocols and protect industrial control system (ICS) devices and supervisory control and data acquisition (SCADA) systems.
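To make the idea of "pivoting" concrete, here is a minimal sketch of infrastructure pivoting over domain-to-IP resolution records. It does not use or imitate any Group-IB API; the record format, the `pivot` function and every domain, IP address and history note are hypothetical (they use the reserved `.example` domain and documentation IP ranges). Starting from one suspicious indicator, it walks shared-infrastructure links hop by hop and collects any historical evidence attached to the indicators it reaches.

```python
from collections import defaultdict

# Hypothetical passive-DNS-style records: (domain, ip, first_seen).
# All names use the reserved .example TLD and documentation IP ranges.
RESOLUTIONS = [
    ("login-update.example", "203.0.113.10", "2021-03-01"),
    ("cdn-sync.example", "203.0.113.10", "2021-02-11"),
    ("cdn-sync.example", "198.51.100.7", "2021-01-20"),
    ("mail-portal.example", "198.51.100.7", "2020-12-05"),
    ("benign-site.example", "192.0.2.44", "2021-03-02"),
]

# Hypothetical historical evidence keyed by indicator.
HISTORY = {
    "198.51.100.7": ["2020-12: hosted a phishing kit (hypothetical note)"],
}


def pivot(indicator, max_hops=4):
    """Breadth-first walk over domain <-> IP links, collecting related indicators.

    Returns the set of indicators reached (including the starting one) and any
    historical evidence recorded for them.
    """
    links = defaultdict(set)
    for domain, ip, _first_seen in RESOLUTIONS:
        links[domain].add(ip)
        links[ip].add(domain)

    seen = {indicator}
    frontier = {indicator}
    for _ in range(max_hops):
        # Next hop: every neighbour of the current frontier not visited yet.
        frontier = {n for item in frontier for n in links.get(item, ())} - seen
        if not frontier:
            break
        seen |= frontier
    evidence = {item: HISTORY[item] for item in seen if item in HISTORY}
    return seen, evidence


if __name__ == "__main__":
    related, evidence = pivot("login-update.example")
    print(sorted(related))
    print(evidence)
```

Starting from `login-update.example`, the walk reaches `198.51.100.7` through the shared IP `203.0.113.10` and the domain `cdn-sync.example`, surfacing that IP's history note, while the unrelated `benign-site.example` is never visited. Capping `max_hops` keeps the search from sprawling across shared hosting.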

How organisations can stay one step ahead of cybercriminals

To get ahead of the hackers, IT teams must be wary of unusual password activity, files being created and deleted quickly, inconsistencies in email usage, and data moving around in unexpected ways. One form of cyberattack involves hackers accessing software patch code and adding malicious code to the patch before it is delivered to customers as a routine update. This method of attack is especially devious because updates and patches are routine maintenance tasks, meaning IT teams are much less likely to be suspicious of them. Anti-malware solutions are also less likely to scrutinise incoming data such as a patch from a trusted vendor. One key component that enables these types of attacks is credential compromise. Hackers take care to obtain authentic credentials whenever possible so that they can gain entry to the systems and data they want to access inconspicuously, minimising their digital footprint. As a result, IT teams need to be wary of unusual password activity, such as an uptick in resets or permission change requests. ... Another powerful tool to reduce the risk of a cyberattack is security awareness training. This can lower the chance of an incident such as a data breach by 70%.
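One way to watch for "an uptick in resets" is a simple statistical baseline. The sketch below is a hypothetical illustration, not a product feature: it assumes you already collect daily password-reset counts, and flags days that sit more than `z_threshold` standard deviations above the mean of the preceding `window` days. The 14-day window and threshold of 3.0 are illustrative assumptions that would need tuning against real traffic.

```python
import statistics


def flag_reset_spikes(daily_counts, window=14, z_threshold=3.0):
    """Return the indices of days whose password-reset count is unusually high.

    A day is flagged when its count exceeds the mean of the preceding `window`
    days by more than `z_threshold` population standard deviations. Both
    defaults are illustrative assumptions, not recommended values.
    """
    flagged = []
    for i in range(window, len(daily_counts)):
        baseline = daily_counts[i - window:i]
        mean = statistics.mean(baseline)
        # A perfectly flat baseline has zero spread; fall back to 1.0 so the
        # score stays defined and small deviations are not flagged.
        spread = statistics.pstdev(baseline) or 1.0
        if (daily_counts[i] - mean) / spread > z_threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Hypothetical counts: two quiet weeks, then a burst of resets on day 14.
    counts = [10, 12, 11, 9, 10, 13, 11, 10, 12, 11, 10, 9, 12, 11, 40, 11]
    print(flag_reset_spikes(counts))
```

With two weeks of roughly 10 to 13 resets per day followed by a day with 40, the function returns `[14]`, the index of the spike. The same pattern could be applied to permission change requests.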

Testing Games is Not a Game

Games are getting more complex every year, and gamers are a very demanding audience. For titles labeled AAA (triple-A, high-budget projects), we are expected to deliver novel mechanics, mind-blowing gameplay and exotic plot twists. With each iteration, testing all of this becomes harder, and established ways of working need to be assessed and tweaked. That is no easy task, considering there are so many different kinds of games that it would be almost impossible to unify the way a game tester works. Reaching a general agreement on testing processes, tools or even a job description with required skills is not feasible in the industry: from one game to another, and from one game company to another, the required skills vary and the role changes. Also, because test cases and testing documentation are so commonly overused, live games grow rather quickly, even exponentially, into monsters. Game testers are usually forced to come up with better scoping techniques and risk/impact-based testing. This opens up gaps where quality slips, with a consequent impact on gamers’ happiness.
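Risk/impact-based testing is often scored as likelihood multiplied by impact. The sketch below shows one hypothetical way to scope a test pass under that model: it assumes 1-to-5 scales for both factors, per-area effort estimates in hours and a fixed time budget, and greedily schedules the riskiest areas first. The `GameArea` class, the scales and the example areas are illustrative assumptions, not an industry standard.

```python
from dataclasses import dataclass


@dataclass
class GameArea:
    name: str
    likelihood: int  # assumed scale: 1 (rarely breaks) to 5 (breaks often)
    impact: int      # assumed scale: 1 (cosmetic) to 5 (blocks play or loses progress)
    hours: float     # estimated effort to test this area

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact


def plan_test_pass(areas, budget_hours):
    """Greedy risk-based scoping: take the riskiest areas first while they fit the budget."""
    plan, used = [], 0.0
    for area in sorted(areas, key=lambda a: a.risk, reverse=True):
        if used + area.hours <= budget_hours:
            plan.append(area.name)
            used += area.hours
    return plan


# Hypothetical areas for one build of a game.
EXAMPLE_AREAS = [
    GameArea("save system", likelihood=3, impact=5, hours=6),
    GameArea("new combat mechanic", likelihood=4, impact=4, hours=8),
    GameArea("menu localisation", likelihood=2, impact=2, hours=3),
    GameArea("story cutscene", likelihood=2, impact=4, hours=2),
    GameArea("matchmaking", likelihood=3, impact=4, hours=5),
]

if __name__ == "__main__":
    print(plan_test_pass(EXAMPLE_AREAS, budget_hours=16))
```

With a 16-hour budget, the plan covers the new combat mechanic, the save system and the story cutscene; matchmaking is skipped because it no longer fits, and localisation is left for a later pass. The areas left out are exactly the gaps where quality can slip, so the scores and estimates are worth revisiting every iteration.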

Experian’s Identity GM Addresses Industry’s Post-COVID Challenges

"Today with so many bad actors focused on how to create automatic ways to fool systems into thinking they are legitimate, it's getting harder to validate that the business is transacting with a real person," Haller said. As a result, identity verification has become more sophisticated, too. For instance, it looks at IP addresses, device IDs, and GPS coordinates. Another emerging field, called behavioral biometrics, captures data about how you interact with your keyboard and mouse and then uses that information about your behavior to verify your identity, Haller said. "It is looking at how quickly you are typing, how you are using your phone, how you carry your phone," he said. "These are all behaviors associated with an identity. It might help determine whether someone has taken your device and is pretending to be you." To help IT security pros tap into the most advanced technology for verifying identity and preventing fraud, Experian created CrossCore Orchestration Hub to connect the newest and most advanced services with customers. "We are trying to help our clients be more effective in discovering new risks and put new technology into production so they can protect themselves," Haller said.
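A toy version of the typing-rhythm signal Haller describes fits in a few lines. This is a hypothetical sketch of keystroke dynamics, not how Experian or CrossCore works: it assumes key-press timestamps in milliseconds for the same phrase, compares the gaps between presses with an enrolled profile using the mean absolute difference, and accepts the sample if it falls within an assumed 40 ms tolerance. Real behavioral-biometrics systems typically combine far richer signals (key hold times, mouse movement, device handling) with statistical or learned models.

```python
import statistics


def inter_key_intervals(timestamps_ms):
    """Turn key-press timestamps (ms) into gaps between consecutive presses."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def typing_distance(enrolled_ms, sample_ms):
    """Mean absolute difference between two interval sequences for the same phrase."""
    if len(enrolled_ms) != len(sample_ms):
        raise ValueError("samples must come from the same phrase")
    return statistics.mean(abs(e - s) for e, s in zip(enrolled_ms, sample_ms))


def matches_profile(enrolled_ms, sample_ms, tolerance_ms=40):
    """Accept the sample if its rhythm is within the (assumed) tolerance of the profile."""
    return typing_distance(enrolled_ms, sample_ms) <= tolerance_ms


if __name__ == "__main__":
    # Hypothetical timestamps for typing the same five-character phrase.
    enrolled = inter_key_intervals([0, 120, 260, 370, 520])
    owner = inter_key_intervals([0, 130, 255, 380, 520])
    impostor = inter_key_intervals([0, 250, 420, 700, 880])
    print(matches_profile(enrolled, owner), matches_profile(enrolled, impostor))
```

Here the owner's sample differs from the profile by 12.5 ms on average and is accepted, while the impostor's differs by 90 ms and is rejected.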

Quantum computing just got its first developer certification.

"The focus right now is on preparing the workforce and skillsets so that businesses have an opportunity to leverage quantum computing in the future," Chirag Dekate, research lead for quantum at analysis firm Gartner, tells ZDNet. "But at the moment, it's a scattershot. One of the questions that always comes across from IT leaders is: 'How do I go about creating a quantum group?'" In many cases, they don't know where to start. According to Dekate, a certification like the one IBM unveiled will go a long way toward showing employers that a candidate has the ability to identify business-relevant problems and map them to the quantum space. Although tailored specifically to Qiskit, many of the competencies required to pass IBM's quantum developer certification exam reflect a wider understanding of quantum computing. Candidates will be quizzed on their ability to represent qubit states, on their knowledge of backends, and on how well they can plot data, plot a density matrix or plot a gate map with error rates; they will also be required to know what stands behind the exotic-sounding but quantum-staple Bloch spheres, Pauli matrices and Bell states.
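For readers new to those terms, the sketch below represents qubit states as plain state vectors and checks a few textbook facts with hand-written linear algebra in pure Python. It deliberately avoids Qiskit so that it runs anywhere; in Qiskit itself you would normally use classes such as `Statevector` from `qiskit.quantum_info`. The helpers `kron`, `matvec`, `expectation`, `density_matrix` and `bloch_vector` are hypothetical names defined only for this example. It builds the Pauli matrices, the Bell state |Φ+⟩ = (|00⟩ + |11⟩)/√2 and its density matrix, and the Bloch-sphere coordinates of a single-qubit state.

```python
import math

# Pauli matrices (plus the identity) as nested lists.
I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Y = [[0, -1j], [1j, 0]]
Z = [[1, 0], [0, -1]]


def kron(a, b):
    """Kronecker (tensor) product of two square matrices."""
    n, m = len(a), len(b)
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(n * m)]
            for i in range(n * m)]


def matvec(mat, vec):
    return [sum(row[k] * vec[k] for k in range(len(vec))) for row in mat]


def expectation(op, state):
    """<psi|op|psi> for a normalised state vector psi."""
    op_psi = matvec(op, state)
    return sum(amp.conjugate() * val for amp, val in zip(state, op_psi))


def density_matrix(state):
    """rho = |psi><psi| for a pure state."""
    return [[a * b.conjugate() for b in state] for a in state]


def bloch_vector(qubit_state):
    """Bloch-sphere coordinates (<X>, <Y>, <Z>) of a single-qubit state."""
    return tuple(expectation(p, qubit_state).real for p in (X, Y, Z))


# Bell state |Phi+> = (|00> + |11>) / sqrt(2), basis order |00>, |01>, |10>, |11>.
s = 1 / math.sqrt(2)
PHI_PLUS = [s, 0, 0, s]
# |+> = (|0> + |1>) / sqrt(2) sits on the +X axis of the Bloch sphere.
PLUS = [s, s]

if __name__ == "__main__":
    print("<ZZ> =", expectation(kron(Z, Z), PHI_PLUS))    # outcomes always agree in Z
    print("<XX> =", expectation(kron(X, X), PHI_PLUS))
    print("<Z x I> =", expectation(kron(Z, I2), PHI_PLUS))  # one qubit alone looks random
    print("trace(rho) =", sum(density_matrix(PHI_PLUS)[i][i] for i in range(4)))
    print("Bloch(|+>) =", bloch_vector(PLUS))
```

Up to floating-point rounding, ⟨ZZ⟩ and ⟨XX⟩ come out close to 1, ⟨Z⊗I⟩ close to 0, the density matrix has trace close to 1, and the Bloch vector of |+⟩ is close to (1, 0, 0): measuring either qubit of |Φ+⟩ alone gives a random result, yet the two outcomes are perfectly correlated.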

The importance of endpoint security in breaking the cyber kill chain

The term ‘kill chain’ was originally used as a military concept relating to structuring an attack into stages, from identifying an adversary’s weaknesses to exploiting them. It consisted of target identification, force dispatch to the target, decision, order to attack the target, and finally, destruction of the target. In simple terms, it can be viewed as a stereotypical burglary, whereby the thief will perform reconnaissance on a building before trying to infiltrate it and then go through several more steps before taking off with the valuables. ... For those defending systems and data, understanding the cyber kill chain can help identify the different defences you need in place. While attackers are constantly evolving their methods, their approach always consists of these general stages. The closer to the start of the cyber kill chain an attack can be stopped, the better, so a good understanding of adversaries and their tools and tactics will help to build more effective defences. ... Endpoint protection (EPP) can detect and prevent many stages of the cyber kill chain, completely preventing most threats or allowing you to remediate the most sophisticated ones in later stages.

Interview With Karthik Kumar, Director Of Data Science For Auto Practice, Epsilon

As they say, “Data is the new code”. The machine learning code is only a small piece of the puzzle and would not suffice to take the model from the POC stage to production. Deployment is a continuous process of data flow and learning, which makes ML an iterative process. Hence maintaining high quality in all phases of the ML life cycle is the most important task. The first step is to understand the business problem and translate it into a statistical/machine learning problem. In this expedition, the quality of the data is critical, and this is where a data scientist has to spend most of their effort: comprehending and transforming the data to understand its characteristics, so as to build a robust machine learning solution that leads to successful business outcomes. Mining the right data, and improving and understanding it, is the step I emphasise most in my projects. Extensive feature engineering on the data helps build a stronger data science model than iterating over models on a fixed data set. My tip to budding data scientists would be to invest maximum time in gathering the right data, exploring it and creating features innovatively.
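As a small illustration of creating features from raw data rather than iterating on models, the sketch below turns raw purchase or service records into per-customer recency, frequency and monetary (RFM) features, a common feature-engineering pattern in marketing analytics. It is not Epsilon's method; the record layout `(customer_id, date, amount)`, the `rfm_features` function and the example rows are hypothetical.

```python
from collections import defaultdict
from datetime import date


def rfm_features(transactions, as_of):
    """Derive per-customer features from raw (customer_id, date, amount) rows."""
    by_customer = defaultdict(list)
    for customer_id, day, amount in transactions:
        by_customer[customer_id].append((day, amount))

    features = {}
    for customer_id, rows in by_customer.items():
        total = sum(amount for _, amount in rows)
        features[customer_id] = {
            "recency_days": (as_of - max(day for day, _ in rows)).days,  # days since last visit
            "frequency": len(rows),                                      # number of visits
            "monetary": total,                                           # total spend
            "avg_ticket": total / len(rows),                             # spend per visit
        }
    return features


if __name__ == "__main__":
    # Hypothetical dealership service visits.
    raw = [
        ("c1", date(2021, 1, 10), 120.0),
        ("c1", date(2021, 3, 5), 80.0),
        ("c2", date(2020, 11, 20), 400.0),
    ]
    for customer, feats in rfm_features(raw, as_of=date(2021, 3, 31)).items():
        print(customer, feats)
```

For the example rows, customer `c1` gets a recency of 26 days, a frequency of 2 and a total spend of 200.0, while `c2` has not returned for 131 days. Derived features like these can give a model signal that further tuning on the raw rows would not.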

Quote for the day:

"Uncertainty is not an indication of poor leadership; it underscores the need for leadership." -- Andy Stanley
