How Confidential Computing is dispelling the climate of distrust around cloud security

Confidential Computing offers a number of additional advantages that go beyond simple safeguarding. By ensuring that data is processed in a shielded environment, it is possible to collaborate securely with partners without compromising IP or divulging proprietary information. ... Until now, many enterprises have held back from migrating some of their most sensitive applications to the cloud because of worries about data exposure. Confidential Computing addresses this hurdle: not only is data protected during processing, but companies can also collaborate securely and efficiently with partners in the cloud. For businesses migrating workloads into the cloud, a major concern is the ability to provide security for customers and to remain compliant with EU data privacy regulations. This is especially the case where businesses are the stewards of sensitive data, such as healthcare information or bank account numbers. An important feature of Confidential Computing is its use of embedded encryption keys, which lock data in a secure enclave during processing. This keeps the data concealed from the operating system as well as from privileged users, i.e. administrators or site reliability engineers.

A Good Data Scientist Should Combine Domain-Specific Knowledge With Technical Competence

Technological expertise augmented by strong domain knowledge is important for an aspiring data scientist. One should have a clear understanding of the rules and practices of an industry before applying technology to it. Be it automotive, BFSI (banking, financial services and insurance), manufacturing or e-commerce, you can be a good data scientist in the field if you couple domain-specific knowledge with technical competence. Ideal candidates would have a degree or background knowledge in computer science or information technology. Data science is vast and may not suit everyone. Therefore, it is vital to have an aptitude for understanding the data, seeing patterns, analysing from different perspectives and presenting findings to suit the end user, while also being open to learning the domain. ... Industry partnerships are crucial to educational institutions. The two key components of a data science course are the fundamental conceptual foundation laid by highly qualified academicians and the input of industry stalwarts with on-ground expertise and visibility. Together, they ensure that the key takeaways go beyond theoretical knowledge to include practical insights and understanding.

Can Digital Twins Help Modernize Electric Grids?

Digital twins could help guide decision-making as California completes its transition to 100% renewables, according to Parris, who points out that GE Digital is working with Southern California Edison, one of the state’s three largest investor-owned utilities, to help model its operations. However, the mix of renewables in the Golden State, not to mention Gov. Gavin Newsom’s ban on the sale of new gasoline- and diesel-powered cars starting in 2035, will make it much harder to find a balance than in the Lone Star State. “It’s not just the heating [and cooling] of the buildings, but the cars,” Parris says. “It will be more distributed energy resources, like EVs [electric vehicles]. How do I bring them in? They add another complexity, because I don’t know when you’re going to charge your EV. I don’t know how much you’re going to use your car.” Backers of renewable energy are banking on large battery plants being able to handle short-term spikes in energy demand that have traditionally been handled by natural gas-powered “peaker” plants in California. But grid-scale battery technology is still unproven, and it also introduces more variables into the grid equation that will have to be accounted for. “How long does that battery live [is] based on how often you charge and discharge it, so the life of the battery is a factor,” Parris says.
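Parris's point that battery life depends on how often a battery is charged and discharged can be made concrete with a deliberately simplified model. The sketch below assumes a hypothetical linear capacity fade per equivalent full cycle (`FADE_PER_CYCLE`) and treats 80% of original capacity as end of life (`END_OF_LIFE_CAPACITY`); both numbers are illustrative assumptions, not figures from GE Digital or Southern California Edison, and real cell degradation also depends on depth of discharge, temperature and calendar age.

```python
from typing import Sequence

# Illustrative assumptions (not from the source): capacity fades linearly by
# FADE_PER_CYCLE per equivalent full cycle, and the battery is retired at 80%.
FADE_PER_CYCLE = 0.0001
END_OF_LIFE_CAPACITY = 0.8


def equivalent_full_cycles(soc_trace: Sequence[float]) -> float:
    """Convert a state-of-charge trace (fractions 0..1) into equivalent full cycles.

    One full cycle is a full charge plus a full discharge, i.e. a total
    absolute SoC swing of 2.0, so partial cycles add up proportionally.
    """
    swing = sum(abs(b - a) for a, b in zip(soc_trace, soc_trace[1:]))
    return swing / 2.0


def remaining_capacity(cycles: float, fade: float = FADE_PER_CYCLE) -> float:
    """Fraction of original capacity left after `cycles` equivalent full cycles."""
    return max(0.0, 1.0 - fade * cycles)


def service_life_years(cycles_per_day: float, fade: float = FADE_PER_CYCLE,
                       eol: float = END_OF_LIFE_CAPACITY) -> float:
    """Years until capacity falls to `eol` at a steady daily cycling rate."""
    cycles_to_eol = (1.0 - eol) / fade
    return cycles_to_eol / cycles_per_day / 365.0


if __name__ == "__main__":
    # One vs two full cycles per day under the assumed fade rate.
    print(f"{service_life_years(1.0):.1f} years")  # 5.5 years
    print(f"{service_life_years(2.0):.1f} years")  # 2.7 years
```

Under these assumptions, cycling a battery twice a day instead of once halves its service life, which is the kind of extra variable that grid-scale storage adds to the planning problem.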

Stop Calling Everything AI, Machine-Learning Pioneer Says

Computers have not become intelligent per se, but they have provided capabilities that augment human intelligence, he writes. Moreover, they have excelled at low-level pattern-recognition tasks that humans could in principle perform, but only at great cost. Machine learning–based systems are able to detect fraud in financial transactions at massive scale, for example, thereby catalyzing electronic commerce. They are essential in the modeling and control of supply chains in manufacturing and health care. They also help insurance agents, doctors, educators, and filmmakers. Despite such developments being referred to as “AI technology,” he writes, the underlying systems do not involve high-level reasoning or thought. The systems do not form the kinds of semantic representations and inferences that humans are capable of, and they do not formulate and pursue long-term goals. “For the foreseeable future, computers will not be able to match humans in their ability to reason abstractly about real-world situations,” he writes. “We will need well-thought-out interactions of humans and computers to solve our most pressing problems. ...”

AI And HR Tech: Three Critical Questions Leaders Need To Support Diverse Teams

When dealing with HR AI tech, the limitations around diversity are a by-product of how solutions are designed. We are rapidly moving into a space where solutions provide emotion recognition: AI analyzes facial expressions or body posture to inform recruitment decisions. The emotion recognition market is projected to be worth $25 billion by 2023. Despite extraordinary growth in this area, there are challenges and significant kinks to be addressed, namely the ethical elements concerning the creation of the algorithms. Companies are grappling with HR AI and ethics. Recent examples demonstrate the enormity of the ramifications when things don't go according to plan. In other words, when things go wrong, they go badly wrong. Consider, for example, Uber, where fourteen couriers were fired after facial identification software failed to recognize them. In this case, the technology was based on Microsoft's face-matching software, which has a track record of failing to identify darker-skinned faces, with a 20.8 percent failure rate for darker-skinned female faces. The same technology has a zero percent failure rate for white men.
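The failure figures quoted above are per-group error rates, and gaps like these only become visible when results are broken down by group rather than reported as a single accuracy number. A minimal sketch of that bookkeeping, assuming each verification attempt is recorded as a hypothetical `(group_label, recognised_correctly)` pair; it illustrates disaggregated evaluation in general and is not the methodology behind the 20.8 percent figure.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def failure_rates_by_group(results: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of failed recognitions per group.

    Each result is a (group_label, recognised_correctly) pair.
    """
    totals: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for group, recognised in results:
        totals[group] += 1
        if not recognised:
            failures[group] += 1
    return {group: failures[group] / totals[group] for group in totals}


def max_disparity(rates: Dict[str, float]) -> float:
    """Gap between the worst- and best-served groups; 0.0 means parity.

    Raises ValueError if `rates` is empty.
    """
    return max(rates.values()) - min(rates.values())
```

Plugging in the rates quoted above (0.208 for darker-skinned female faces, 0.0 for white men) gives a gap of 0.208, i.e. 20.8 percentage points. A real audit would also need enough samples per group for each rate to be meaningful.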

How AI Can Solve The COBOL Challenge

Fortunately, taking an old-school approach to AI and applying it to a different scope of the problem can save developers time by automating the process of precisely identifying the code that requires attention, regardless of how spread out it might be. Much as contemporary AI tools cannot comprehend a book the way a human does, human developers struggle to comprehend the intent of previous developers encoded in the software. By describing to AI tools the behaviors that need to change, developers no longer have to labor over searching through and understanding code to get to the specific lines implementing that behavior. Instead, developers can quickly and efficiently find potential bugs. Rather than dealing with a deluge of code and spending weeks searching for functionality, developers can collaborate with the AI tool to get rapidly to the code on which they need to work. This approach requires a different kind of AI: one that doesn’t focus on assisting the developer with syntax. Instead, AI that focuses on understanding the intent of the code is able to “reimagine” computation as the concepts it represents, thereby doing what a developer does when they code, but at machine speed.

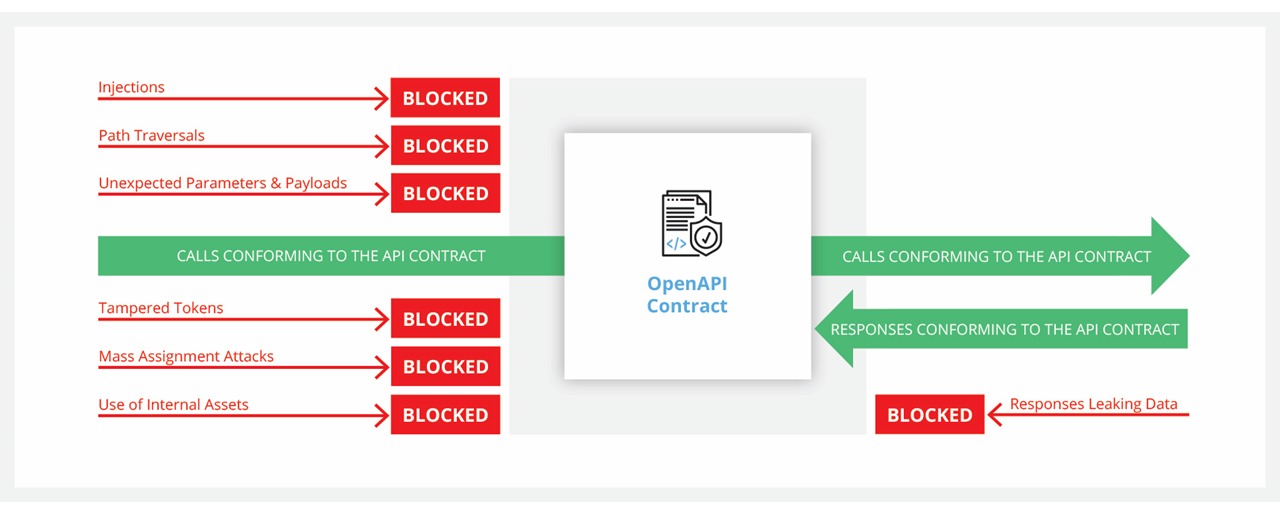

Secure API Design With OpenAPI Specification

API security is at the forefront of cybersecurity. Emerging trends and technologies like cloud-native applications, serverless, microservices, single-page applications, and mobile and IoT devices have led to the proliferation of APIs. Application components are no longer internal objects communicating with each other on a single machine within a single process; they are APIs talking to each other over a network. This significantly increases the attack surface. Moreover, by discovering and attacking back-end APIs, attackers can often bypass the front-end controls and directly access sensitive data and critical internal components. This has led to a surge in API attacks. Every week, new API vulnerabilities are reported in the news. OWASP now has a separate top 10 list of vulnerabilities specifically for APIs, and Gartner estimates that by 2022, APIs will become the number one attack vector. Traditional web application firewalls (WAFs), with their manually configured deny and allow rules, are not able to determine which API call is legitimate and which one is an attack. For them, all calls are just GETs and POSTs with some JSON being exchanged.
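The title points to the alternative: treat the API's OpenAPI definition as an allowlist, so that anything the specification does not describe is rejected. The sketch below assumes a hypothetical `/users/{id}` endpoint and hand-rolls a tiny subset of OpenAPI 3 request validation (path templates, declared methods, required fields, property types and `additionalProperties: false`) with the Python standard library; the names `SPEC`, `match_path` and `is_allowed` are illustrative, and a production gateway would rely on a complete OpenAPI validator rather than this toy.

```python
import json
from typing import Any, Dict, Optional

# Hypothetical contract, shaped like (a tiny subset of) an OpenAPI 3 "paths" object.
SPEC: Dict[str, Any] = {
    "/users/{id}": {
        "get": {},
        "put": {
            "requestBody": {
                "content": {
                    "application/json": {
                        "schema": {
                            "type": "object",
                            "required": ["email"],
                            "properties": {
                                "email": {"type": "string"},
                                "age": {"type": "integer"},
                            },
                            "additionalProperties": False,
                        }
                    }
                }
            }
        },
    }
}

# Only the JSON Schema types used above are supported in this sketch.
JSON_TYPES = {"string": str, "integer": int}


def match_path(path: str) -> Optional[str]:
    """Return the spec path template that matches a concrete request path."""
    parts = path.strip("/").split("/")
    for template in SPEC:
        t_parts = template.strip("/").split("/")
        if len(t_parts) == len(parts) and all(
            (t.startswith("{") and t.endswith("}") and p != "") or t == p
            for t, p in zip(t_parts, parts)
        ):
            return template
    return None


def is_allowed(method: str, path: str, body: Optional[str] = None) -> bool:
    """Positive security model: allow only what the contract describes."""
    template = match_path(path)
    if template is None:
        return False  # undocumented endpoint
    operation = SPEC[template].get(method.lower())
    if operation is None:
        return False  # method not declared for this path
    schema = (operation.get("requestBody", {}).get("content", {})
              .get("application/json", {}).get("schema"))
    if schema is None:
        return body is None  # the operation takes no JSON body
    try:
        data = json.loads(body or "")
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    if any(field not in data for field in schema.get("required", [])):
        return False
    properties = schema.get("properties", {})
    for key, value in data.items():
        if key not in properties:
            if schema.get("additionalProperties", True) is False:
                return False
            continue
        expected = JSON_TYPES[properties[key]["type"]]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            return False
    return True
```

Unlike a WAF rule that only sees a PUT carrying some JSON, this check rejects an extra `role` field or a string-typed `age`, because the contract does not allow them.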

Zero Trust creator talks about implementation, misconceptions, strategy

“The strategic concepts of Zero Trust have not changed since I created the original concept, though I have refined some of the terminologies,” he told Help Net Security. “I used to say that the first step in the five-step deployment model was to ‘Define Your Data.’ Now I say that the first step is to ‘Define Your Protect Surface.’ My idea of a protect surface centers on the understanding that the attack surface is massive and always growing and expanding, which makes dealing with it an unscalable problem. I have inverted the idea of an attack surface to create protect surfaces, which are orders of magnitude smaller and easily known.” Among the pitfalls that organizations implementing a zero-trust model should avoid, he singles out two: thinking that Zero Trust is binary (that either everything is Zero Trust or none of it is), and deploying products without a strategy. “Zero Trust is incremental. It is built out one protect surface at a time so that it is done in an iterative and non-disruptive manner,” he explained. He also advises creating zero-trust networks for the least sensitive or critical protect surfaces first, and then gradually working towards implementing Zero Trust for the more critical and, finally, the most critical ones.
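The incremental approach described above can be sketched as a rollout plan that handles one protect surface per iteration, least critical first, and reports coverage after each step, which also makes the point that Zero Trust adoption is a fraction rather than a yes/no state. The `ProtectSurface` type, the surface names and the 1 to 5 criticality scale are hypothetical illustrations, not part of the five-step model itself.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ProtectSurface:
    name: str          # hypothetical protect surface
    criticality: int   # assumed scale: 1 = least critical, 5 = most critical


def rollout_plan(surfaces: List[ProtectSurface]) -> List[Tuple[int, str, float]]:
    """Return (iteration, surface name, share of surfaces covered afterwards).

    Surfaces are handled one at a time, least critical first; sorted() is
    stable, so surfaces with equal criticality keep their input order.
    """
    ordered = sorted(surfaces, key=lambda s: s.criticality)
    total = len(ordered)
    return [(i, s.name, i / total) for i, s in enumerate(ordered, start=1)]


if __name__ == "__main__":
    surfaces = [
        ProtectSurface("payment processing", 5),
        ProtectSurface("internal wiki", 1),
        ProtectSurface("HR records", 3),
    ]
    for step, name, coverage in rollout_plan(surfaces):
        print(f"Iteration {step}: protect '{name}' ({coverage:.0%} covered)")
```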

How can businesses gain the most value from their cloud investments?

Innovation can come from the smallest and simplest of places. And chances are, the cloud can take your business there, whether the goal is to be more productive, agile, sustainable or secure. The important thing is for this vision to be clear, well communicated, and considered in all tech investments, hires and processes. For example, if a business wants to make better use of data across its operations, technologies such as IoT, AI and robotics will be critical to gathering, deciphering, and actioning that data across the cloud. Businesses will also need to hire and develop the talent to operate these tools. And we know this isn’t easy: UK businesses are hungry for cloud computing skills, and the talent pool is not as big as they would like. They will also be thinking about the platforms available that enable the entire organisation, not just the tech team, to partake in this culture of data-driven operations. On the other hand, perhaps a business wants its cloud investment to bring cost savings, a key driver for many migrations. To do so successfully, CIOs will need to think strategically about how they are leveraging the cloud’s pay-as-you-go, ‘as a service’ model, and whether they are using technologies such as cloud virtualisation to become more efficient or unlock revenue opportunities.

NFT Thefts Reveal Security Risks in Coupling Private Keys & Digital Assets

Like other blockchain-based platforms, NFT marketplaces are targeted by hackers. The centralized design of the marketplaces and the high value attached to NFTs make them prized targets. They can be subject to a range of attack vectors, including phishing, insider threats, supply chain attacks, brute-force attacks against account credentials, ransomware, and even distributed denial-of-service attacks. Blockchain design encompassing NFTs provides certain fundamental properties applicable to security, such as immutability and integrity checks. Immutability inherent in blockchain design is considered one of the core tenets of any transaction-security strategy. It is introduced to create a single source of truth and supports nonrepudiation, which is crucial for accountability of actions. But this still does not guard the platform against attacks leading to account takeover (ATO), a major threat. There is a clear, exploitable scenario here: once an NFT has been transferred to someone else's wallet or sold, it may not be recoverable by the sender or a third party. Making private keys the sole gatekeepers concentrates risk in one area, leading to a single-point-of-failure scenario.
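The interplay between immutability and key-based gatekeeping can be illustrated with a toy append-only ledger. Each transfer record commits to the hash of the previous record, so editing history makes `verify()` fail; ownership moves for whoever presents the current owner's secret, and there is deliberately no way to undo a transfer. The `ToyLedger` class and the shared-secret check with `hmac.compare_digest` are hypothetical stand-ins for real signature verification; none of this models an actual NFT marketplace or blockchain.

```python
import hashlib
import hmac
import json
from typing import Dict, List, Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record: Dict[str, str]) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of a record."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()


class ToyLedger:
    """Append-only, hash-chained transfer log with secret-gated transfers."""

    def __init__(self, secrets: Dict[str, str]) -> None:
        # Hypothetical stand-in for private keys: account name -> secret.
        self.secrets = secrets
        self.entries: List[Dict[str, str]] = []

    def owner(self, token: str) -> Optional[str]:
        """Current owner = recipient of the latest transfer of `token`."""
        for entry in reversed(self.entries):
            if entry["token"] == token:
                return entry["to"]
        return None

    def transfer(self, token: str, sender: str, recipient: str, secret: str) -> bool:
        """Append a transfer if `sender` owns the token (or it is new) and the secret matches."""
        current = self.owner(token)
        if current is not None and current != sender:
            return False
        # Whoever presents the sender's secret is treated as the sender.
        expected = self.secrets.get(sender)
        if expected is None or not hmac.compare_digest(expected, secret):
            return False
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = {"token": token, "from": sender, "to": recipient, "prev": prev}
        record["hash"] = _digest(record)
        self.entries.append(record)
        return True  # note: there is no method to reverse a transfer

    def verify(self) -> bool:
        """Recompute every hash and link; any edited record breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

Anyone who obtains an owner's secret can move the token exactly as the owner could, and the resulting record is just as valid and just as permanent as a legitimate one, which is the single point of failure the excerpt describes.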

Quote for the day:

"Most people live with pleasant illusions, but leaders must deal with hard realities." -- Orrin Woodward
