How far have we come? The evolution of securing identities

With internal, enterprise-facing identity, the individuals work for your organization and are probably on the payroll. You can make them do things that you can’t ask customers to do. Universal 2nd Factor (U2F) is a great example. You can ship U2F keys to everyone in your organization because you’re paying them. Plus, you can train internal staff and bring them up to speed on how to use these technologies. We have a lot more control in the internal organization. Consumers are much harder. They are more likely to just jump ship if they don’t like something. Adoption rates of technologies like multifactor authentication are extremely low in consumer land because people don’t know what it is or understand its value proposition. We also see organizations reluctant to push it. A few years ago, a client had a 1 percent adoption rate of two-factor authentication. I asked, “Why don’t you push it harder?” They said that the more people they have using two-factor authentication, the more people get a new phone and don’t migrate their soft token or save the recovery codes. Then those users call in and say, “I have my username or password but not my one-time password.”

Informatica debuts its intelligent data management cloud

As data becomes more valuable, so does data management, Informatica argues. Its IDMC offers more than 200 intelligent cloud services, powered by Informatica's AI engine CLAIRE. It applies AI to metadata to give an organization an understanding of its "data estate," Ghai explained. The "data estate" tells you about the fragmentation of data: its location and the various domains of data. "And through that insight," Ghai said, Informatica will "automate the ability to connect to data, to build data pipelines, process data, provision it for analytics... to apply advanced transformations to cleanse that data and trust it... to match, merge and build a single source of truth." From there, the platform aims to make data more accessible to business users with features like the "data marketplace." With the marketplace, users can "shop for data" much as one would shop for consumer goods on the Amazon marketplace, Ghai explained. The IDMC is microservices-based and API-driven, with elastic and serverless processing. It's built for hybrid and multi-cloud environments. The platform is already running at scale, processing more than 17 trillion transactions each month.

Microservices in the Cloud-Native Era

With developer tools and platforms like Docker, Kubernetes, AWS, and GitHub, software development has become very approachable. It was not always so. Suppose you have a monolithic architecture and three million lines of code. Making changes to the code base whenever required and releasing new features was not an easy task. It created a lot of friction between developer teams, and finding the mistake that was causing the code to break was a monumental task. That’s where microservices architecture shines. Many companies have recently moved from a huge monolithic architecture to a microservices architecture, and there are many advantages to making that shift. While a monolithic application puts all of its functionality into a single code base and scales by replicating on multiple servers, a microservices architecture breaks an application down into several smaller services, segmented by logical domain. Together, the microservices communicate with one another over APIs to form what appears to end users as a single application. The problem with a monolithic application is that when something goes wrong, the operations team blames development, and development blames QA.

Modern Data Warehouse & Reverse ETL

“Reverse ETL” is the process of moving data from a modern data warehouse (MDW) into third-party systems to make the data operational. Traditionally, data stored in a data warehouse is used for analytical workloads and business intelligence (i.e., identifying long-term trends and influencing long-term strategy), but some companies are now recognizing that this data can be further utilized for operational analytics. Operational analytics helps with day-to-day decisions with the goal of improving the efficiency and effectiveness of an organization’s operations. In simpler terms, it’s putting a company’s data to work so everyone can make better and smarter decisions about the business. For example, if your MDW ingested customer data which was then cleaned and mastered, that customer data can then be copied into multiple SaaS systems such as Salesforce to make sure there is a consistent view of the customer across all systems. Customer info can also be copied to a customer support system to provide better support to that customer by having more info about that person, or copied to a sales system to give the customer a better sales experience.
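
To make the flow concrete, here is a minimal Python sketch of a reverse ETL sync, under stated assumptions. An in-memory SQLite database stands in for the warehouse, and the table name `customers_mastered`, the `FIELD_MAP` column mapping, and the `push` callback (a placeholder for whatever API call the target SaaS system would need) are all hypothetical names invented for illustration, not part of any real product.

```python
import sqlite3

# Hypothetical mapping from warehouse column names to the target system's field names.
FIELD_MAP = {"customer_id": "ExternalId", "full_name": "Name", "email": "Email"}


def build_warehouse() -> sqlite3.Connection:
    """Create an in-memory stand-in for the warehouse's mastered customer table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers_mastered (customer_id TEXT, full_name TEXT, email TEXT)"
    )
    conn.executemany(
        "INSERT INTO customers_mastered VALUES (?, ?, ?)",
        [
            ("c-1", "Ada Lovelace", "ada@example.com"),
            ("c-2", "Alan Turing", "alan@example.com"),
        ],
    )
    conn.commit()
    return conn


def extract_customers(conn: sqlite3.Connection) -> list:
    """Read the cleaned, mastered customer rows out of the warehouse as dicts."""
    conn.row_factory = sqlite3.Row
    rows = conn.execute(
        "SELECT customer_id, full_name, email FROM customers_mastered "
        "ORDER BY customer_id"
    )
    return [dict(row) for row in rows]


def to_target_payload(record: dict) -> dict:
    """Rename warehouse columns to the field names the target system expects."""
    return {FIELD_MAP[column]: value for column, value in record.items()}


def sync(conn: sqlite3.Connection, push) -> int:
    """Send every mastered customer to the target system; return how many were sent."""
    count = 0
    for record in extract_customers(conn):
        push(to_target_payload(record))  # placeholder for a real API call
        count += 1
    return count


if __name__ == "__main__":
    sent = []  # collects payloads instead of calling a real SaaS API
    total = sync(build_warehouse(), sent.append)
    print(total, sent)
```

Passing `push` in as a callback keeps the sketch independent of any particular SaaS client: the same `sync` function could feed a CRM, a support system, or a sales system, which is the "consistent view of the customer" the excerpt describes.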

The Microsoft-Nuance Deal: A new push for voice technology?

Microsoft has had its hand in voice technology since debuting its virtual assistant Cortana in 2015 as part of the initial Windows 10 release. Since then, Cortana has evolved to support Android and iOS devices, Xbox, the Edge browser, Windows Mixed Reality headsets, and third-party devices such as thermostats and smart speakers. According to Microsoft, Cortana is currently used by more than 150 million people. More recently, the company repositioned Cortana as more of an office assistant than a general-purpose one. “Voice recognition is gaining momentum and will be used in every type of industry, from transcription to command-and-control types of applications, and acquiring a leading vendor in this area just makes sense,” Pleasant said. She stressed that as users become familiar with Cortana, Siri and Amazon's Alexa at home, they expect to see similar speech-enabled technologies at work. She also noted that Microsoft is one of the few companies with the resources to acquire a company like Nuance, allowing it to jump ahead of rivals who might have wanted to do the same thing.

Get your firm to say goodbye to password headaches

In a passwordless environment, no password storage or management is needed. IT teams are therefore no longer burdened with setting password policies, detecting leaks, resetting forgotten passwords and complying with password storage regulations. It’s fair to say that for many helpdesk teams, password resets are the most common request from users. Past research has determined that some larger organizations spend up to $1 million per year on staffing and infrastructure to handle password resets alone. Resetting a password is probably not a particularly complex issue for most IT departments, but the sheer number of requests makes handling them extremely time-consuming. Just how much time does that take away from helpdesks on a daily, weekly or monthly basis? It’s one of those hidden costs your firm incurs that can be reduced by giving people passwordless connections into their environment. Passwords remain a weakness for those trying to secure customer and corporate data, and they are the number one target of cyber criminals.

Modernising the insurance industry with a shared IT platform model

Pockets of the insurance industry are heading this way, for example by using vehicle trackers that reward good driving with lower premiums. But behind the scenes, many organisations have a mass of hugely complex products and equally unwieldy legacy systems that don’t let them work in a way that is agile and digital-first. Assess your systems as they stand today and you may find that several, or possibly hundreds, have been redundant for some time. Eliminating these systems, which are nothing more than drains on the business’s resources, will allow for a greater level of agility. By moving away from cumbersome legacy systems that are no longer fit for purpose, insurers can create a simplified system that unifies silos, makes everyday work more efficient, and saves the business money: money that they can reinvest into creating a customer-centric company that can rival its strongest competitors. Imagine a world where you could simplify your product range, providing cover for the highest number of people with the fewest number of insurance products.

Pockets of the insurance industry are heading this way, by, for example, using

vehicle trackers that reward good driving with lower premiums. But behind the

scenes for many organisations is a mass of hugely complex products and equally

unwieldy legacy systems that don’t provide them with the ability to work in a

way that is agile and digital-first. Assess your systems as they stand today

and you may find that several, or possibly hundreds, have been redundant for

some time. Eliminating these systems, which are nothing more than drains on

the business’ resources, will allow for a greater level of agility. By moving

away from cumbersome legacy systems that are no longer fit for purpose,

insurers can create a simplified system that unifies silos, making everyday

work more efficient, and saves the business money. Money that they can

reinvest into creating a customer-centric company that can rival its strongest

competitors. Imagine a world where you could simplify your product range,

providing cover for the highest number of people with the fewest number of

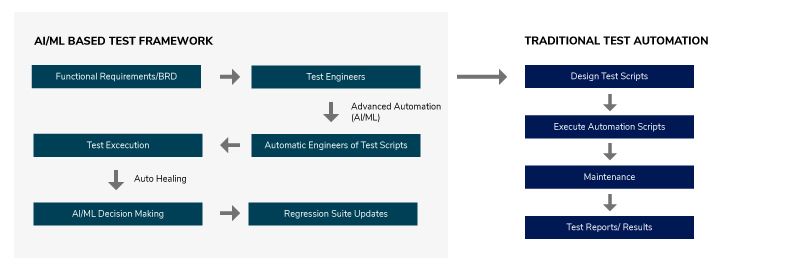

insurance products.5 Great Ways To Achieve Complete Automation With AI and ML

The self-healing technique in test automation addresses a major test script maintenance problem: automation scripts break whenever an object property such as name, ID, or CSS changes. This is where dynamic location strategy comes into the picture. Here, programs automatically detect these changes and fix them dynamically without human intervention. This changes the overall approach to test automation to a great extent, as it allows teams to use the shift-left approach in agile testing methodology, making the process more efficient with increased productivity and faster delivery. ... This self-healing technique saves a lot of the time developers would otherwise spend identifying changes in the UI and updating the scripts to match. The end-to-end process flow of the self-healing technique is handled by artificial intelligence-based test platforms. In this flow, the moment an AI engine figures out that a test may break because an object property has been changed, it extracts the entire DOM and studies the properties. Using dynamic location strategy, it then runs the test cases without anyone needing to know that any such changes have been made.
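
As a rough illustration of the idea, the following Python sketch shows one simple way a locator could "heal" itself. It is not how any particular AI-based test platform works: the DOM is modelled as a plain list of attribute dictionaries, and the `Locator` class, the `find_element` function, and the attribute-matching score are all hypothetical simplifications standing in for the engine's analysis of the real DOM.

```python
from dataclasses import dataclass, field


@dataclass
class Locator:
    """A primary selector plus the last known attributes of the target element."""
    primary_key: str            # attribute used for the normal lookup, e.g. "id"
    primary_value: str          # expected value of that attribute
    known_attrs: dict = field(default_factory=dict)  # other attributes seen earlier


def find_element(dom: list, locator: Locator, min_matches: int = 2) -> dict:
    """Find an element; if the primary selector fails, fall back and heal the locator."""
    # 1. Normal path: the primary attribute still matches.
    for element in dom:
        if element.get(locator.primary_key) == locator.primary_value:
            return element

    # 2. Fallback: scan the whole DOM and score each element by how many
    #    previously known attributes it still shares with the target.
    best, best_score = None, 0
    for element in dom:
        score = sum(
            1 for key, value in locator.known_attrs.items()
            if element.get(key) == value
        )
        if score > best_score:
            best, best_score = element, score

    if best is None or best_score < min_matches:
        raise LookupError("no sufficiently similar element found")

    # 3. Heal: remember the new primary value (if the element has one)
    #    so the next run takes the normal path.
    if locator.primary_key in best:
        locator.primary_value = best[locator.primary_key]
    return best


if __name__ == "__main__":
    # The submit button's ID was renamed from "btn-submit" to "btn-submit-v2".
    dom = [
        {"id": "btn-submit-v2", "name": "submit", "css": "btn primary"},
        {"id": "btn-cancel", "name": "cancel", "css": "btn"},
    ]
    locator = Locator("id", "btn-submit", {"name": "submit", "css": "btn primary"})
    print(find_element(dom, locator), locator.primary_value)
```

The `min_matches` threshold is a design choice in this sketch: it keeps the fallback from silently binding a test to an unrelated element when too little of the original element survives the change.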

Apache Software Foundation retires slew of Hadoop-related projects

ASF's Vice President for Marketing & Publicity, Sally Khudairi, who responded by email, said "Apache Project activity ebbs and flows throughout its lifetime, depending on community participation." Khudairi added: "We've...had an uptick in reviewing and assessing the activity of several Apache Projects, from within the Project Management Committees (PMCs) to the Board, who vote on retiring the Project to the Attic." Khudairi also said that Hervé Boutemy, ASF's Vice President of the Apache Attic, "has been super-efficient lately with 'spring cleaning' some of the loose ends with the dozen-plus Projects that have been preparing to retire over the past several months." Despite ASF's assertion that this big data clearance sale is simply a spike of otherwise routine project retirements, it's clear that things in big data land have changed. Hadoop has given way to Spark in open source analytics technology dominance, the senseless duplication of projects between Hortonworks and the old Cloudera has been halted, and the Darwinian natural selection process among those projects has been completed.

DNS Vulnerabilities Expose Millions of Internet-Connected Devices to Attack

In a new technical report, Forescout and JSOF describe the set of nine vulnerabilities they discovered as giving attackers a way to knock devices offline or to download malware onto them in order to steal data and disrupt production systems in operational technology environments. Among the most affected are organizations in the healthcare and government sectors because of the widespread use of devices running the vulnerable DNS implementations in both environments, Forescout and JSOF say. According to the two companies, patches are available for the vulnerabilities in FreeBSD, Nucleus NET, and NetX. Device vendors using the vulnerable stacks should provide updates to customers. But because it may not always be possible to apply patches easily, organizations should consider mitigation measures, such as discovering and inventorying vulnerable systems, segmenting them, monitoring network traffic, and configuring systems to rely on internal DNS servers, they say. The two companies also released tools that other organizations can use to find and fix DNS implementation errors in their own products.

Quote for the day:

"Coaching isn't an addition to a leader's job, it's an integral part of it." -- George S. Odiorne
