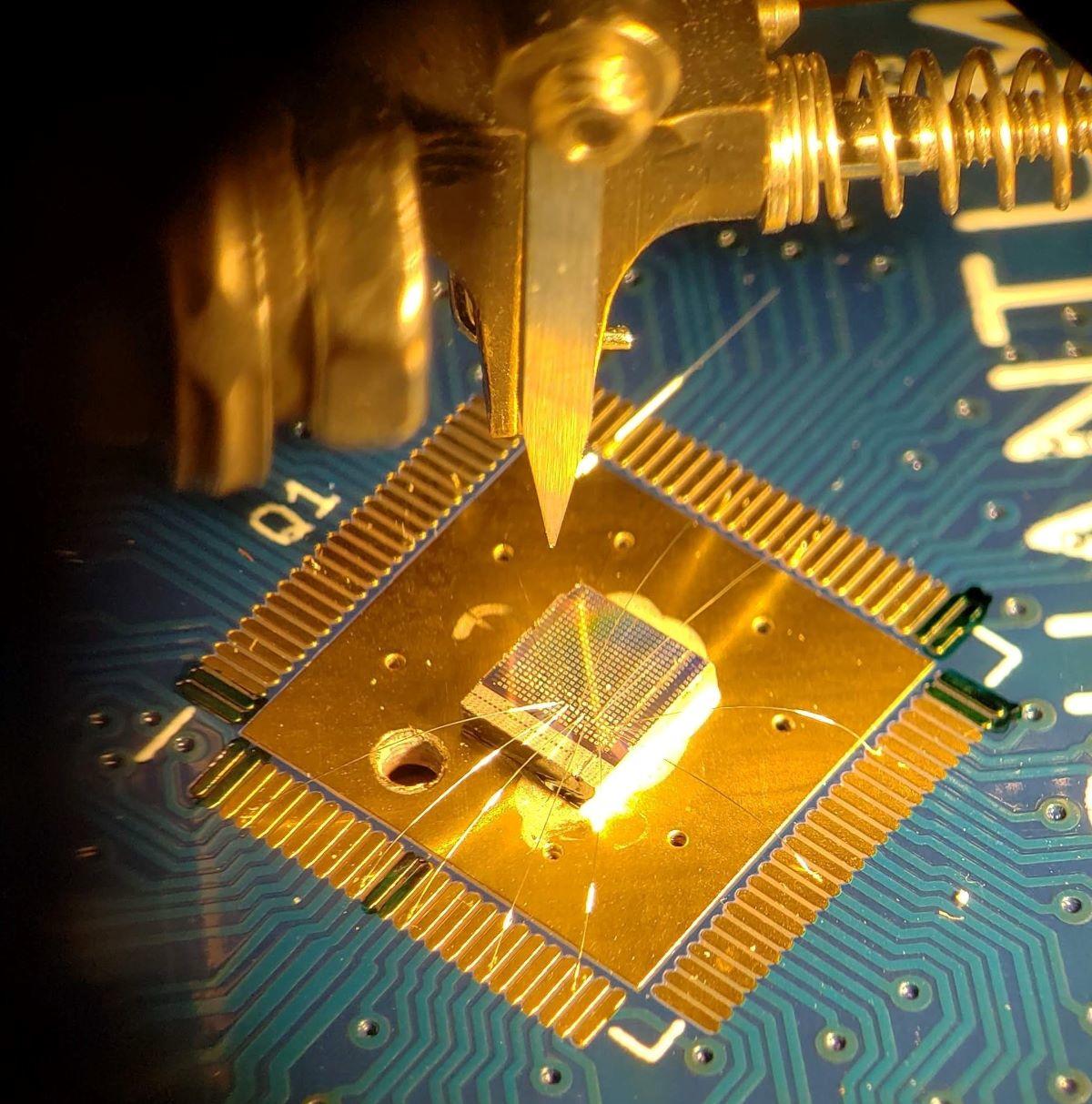

How standard silicon chips could be used for quantum computing

To create and read qubits, which are the building blocks of quantum computers,

scientists first have to retain control over the smallest, quantum particles

that make up a material; but there are different ways to do that, with varying

degrees of complexity. IBM and Google, for example, have both opted for creating

superconducting qubits, which calls for an entirely new manufacturing process;

while Honeywell has developed a technology that individually traps atoms, to let

researchers measure the particles' states. These approaches require creating new

quantum processors in a lab, and are limited in scale. Intel, for example, has

created a 49-qubit superconducting quantum processor that is about three inches

square, which the company described as already "relatively large", and likely to

cause complications when it comes to producing the million-qubit chips that will

be required for real-world implementations at commercial scale. With this in

mind, Quantum Motion set off to find out whether a better solution could be

found in proven, existing technologies. "We need millions of qubits, and there

are very few technologies that will make millions of anything – but the silicon

transistor is the exception," John Morton, ... tells ZDNet.

Top 5 Attack Techniques May Be Easier to Detect Than You Think

The analysis shows attackers for the most part are continuing to rely on the

same techniques and tactics they have been using for years. And, despite all the

concern about sophisticated advanced persistent threat (APT) actors and related

threats, the most common threats that organizations encountered last year are

what some would classify as commodity malware. "Although the threat landscape

can be overwhelming, there are many opportunities we have as defenders to catch

threats in [our] networks," says Katie Nickels, director of intelligence at Red

Canary. "The challenge for defenders is to balance the 'tried and true'

detection opportunities that adversaries reuse with keeping an eye on new

techniques and threats." Red Canary's analysis shows attackers most commonly

abused command and script interpreters like PowerShell and Windows Command Shell

to execute commands, scripts, and binaries. ... Attackers most commonly took

advantage of PowerShell's interactive command-line interface and scripting

features to execute malicious commands, obfuscate malware and malicious

activity, download additional payloads, and spawn additional processes.
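
As a rough illustration of why such commodity techniques can be easy to spot, the sketch below flags PowerShell command lines that show a few widely reported signs of abuse (encoded commands, hidden windows, download cradles, `Invoke-Expression`). The pattern list and the function name `flag_command_line` are assumptions made for this example; they are not Red Canary's detection logic, and real rules would need tuning to avoid false positives.

```python
import re

# Hypothetical indicator patterns chosen for illustration only.
SUSPICIOUS_PATTERNS = {
    # -e, -ec, -enc ... -EncodedCommand (commonly cited abbreviations)
    "encoded_command": re.compile(r"\s-(e|ec|enc\w*)\s", re.IGNORECASE),
    # -w hidden / -WindowStyle Hidden
    "hidden_window": re.compile(r"-w(indowstyle)?\s+hidden", re.IGNORECASE),
    # Typical ways of pulling down an additional payload
    "download_cradle": re.compile(
        r"downloadstring|downloadfile|invoke-webrequest", re.IGNORECASE
    ),
    # Executing a string as code
    "invoke_expression": re.compile(r"\biex\b|invoke-expression", re.IGNORECASE),
}


def flag_command_line(command_line):
    """Return the names of all indicator patterns found in a command line."""
    return [
        name
        for name, pattern in SUSPICIOUS_PATTERNS.items()
        if pattern.search(command_line)
    ]


if __name__ == "__main__":
    samples = [
        "powershell.exe -NoProfile -WindowStyle Hidden -enc SQBFAFgA",
        "powershell.exe -ExecutionPolicy Bypass -File backup.ps1",
    ]
    for sample in samples:
        print(sample, "->", flag_command_line(sample))
```

A match is only a lead for an analyst, not proof of compromise: administrators also use encoded commands and web requests for legitimate work.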

Preparing for enterprise-class containerisation

Beyond the challenges of taking a cloud-native approach to legacy IT

modernisation, containers also offer IT departments a way to rethink their

software development pipeline. More and more companies are adopting containers,

as well as Kubernetes, to manage their implementations, says Sergey Pronin,

product owner at open source database company Percona. “Containers work well in

the software development pipeline and make delivery easier,” he says. “After a

while, containerised applications move into production, Kubernetes takes care of

the management side and everyone is happy.” Thanks to Kubernetes, applications

can be programmatically scaled up and down to handle peaks in usage by

dynamically handling processor, memory, network and storage requirements, he

adds. However, while the software engineering teams have done their bit by

setting up auto-scalers in Kubernetes to make applications more available and

resilient, Pronin warns that IT departments may find their cloud bills starting

to snowball. For example, an AWS Elastic Block Store (EBS) user will pay for 10TB of

provisioned EBS volumes even if only 1TB is really used. This can lead to

sky-high cloud costs.
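
The arithmetic behind that warning can be sketched in a few lines. The price used here (0.10 per GB-month) is a placeholder assumed for illustration, not a quoted AWS rate, and the function name `provisioned_waste` is hypothetical; the point is only that the bill follows provisioned capacity rather than used capacity.

```python
def provisioned_waste(provisioned_tb, used_tb, price_per_gb_month):
    """Compare what is billed (provisioned) with what is actually used.

    Assumes 1 TB = 1000 GB and a flat per-GB monthly price; both are
    simplifications for illustration.
    """
    if used_tb > provisioned_tb:
        raise ValueError("used capacity cannot exceed provisioned capacity")
    provisioned_gb = provisioned_tb * 1000
    used_gb = used_tb * 1000
    monthly_bill = provisioned_gb * price_per_gb_month
    cost_of_used = used_gb * price_per_gb_month
    return {
        "monthly_bill": monthly_bill,
        "paid_for_unused": monthly_bill - cost_of_used,
        "utilisation": used_gb / provisioned_gb,
    }


if __name__ == "__main__":
    # The 10TB provisioned / 1TB used case from the text, at an assumed price.
    report = provisioned_waste(provisioned_tb=10, used_tb=1, price_per_gb_month=0.10)
    for key, value in report.items():
        print(f"{key}: {value:.2f}")
```

Whatever the real price, at 10% utilisation nine-tenths of the storage bill pays for capacity that holds no data.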

Practical Applications of Complexity Theory in Software and Digital Products Development

The first radical idea has to do with the theory and practice of Complexity. The

second radical idea has to do with the human element in Complexity theory. Let’s

start with the first one. Most of the literature on Complexity and most of the

conversations revolving around Complexity are theoretical. This is true and has

been true in the last 17 years, also in the software development community, in

the products development community, and more in general in the broader Lean and

Agile community. When you look into real teams and organisations, here and there

you will find some individual who is passionate about Complexity, who knows the

theory, and who is using it to interpret, understand, and make sense of the

events happening around her/him and reacting in more effective ways. Complexity

gives her/him an edge. But such a presence of Complexity thinking is confined.

The first new radical idea is to turn upside down the centre of gravity of the

conversation around Complexity; to make the practical applications of Complexity

theory prominent.

Researchers show that quantum computers can reason

Admittedly, it’s not like you can run down to Best Buy today and purchase a

quantum computer. They are not yet ubiquitous. IBM apparently is collecting

quantum computers the way Jerry Seinfeld collects classic and rare cars. Big

Blue also is installing a quantum computer at Cleveland Clinic, the first

private-sector recipient of an IBM Quantum System One. But quantum computing’s

time in the sun inches inexorably closer. “Quantum computing (QC) proof of

concept (POC) projects abound in 2021 with commercialization already happening

in pilots and building to broader adoption before 2025,” REDDS Capital Chairman

and General Partner Stephen Ibaraki writes in Forbes. “In my daily engagements’

pro bono with global communities – CEOs, computing science/engineering

organizations, United Nations, investments, innovation hubs – I am finding

nearly 50% of businesses see applications for QC in five years, though most

don’t fully understand how this will come about.” IBM has not been the only

major tech company developing quantum computing technology.

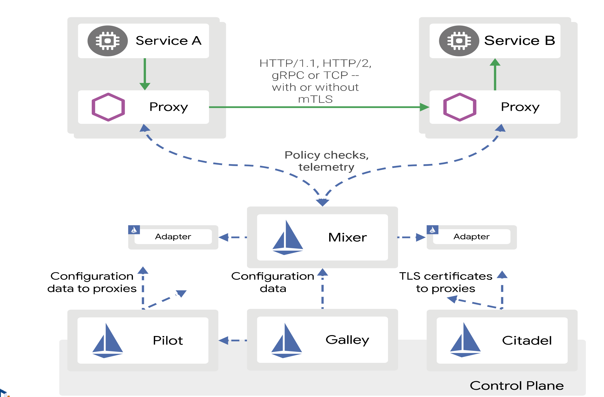

Service Meshes: Why Istio? An Introduction

In any microservice-based architecture, whenever there is a service call from

one microservice to another, we are not able to infer or debug what is happening

inside the networked service calls. This might lead to serious problems when we

are not able to diagnose properly what is the problem if an unwanted situation

arises: for example, performance issues, security, load balancing problems,

tracing the service calls, or proper observability of the service calls. The

severity of the issue gets multiplied when you have to cater to many

microservices for any application to work properly. ... Istio has the most

features and flexibility of any of these three service meshes by far: cascading

failure prevention (circuit breaking); authentication and authorization (the

service mesh can authorize and authenticate requests made from both outside

and within the app, sending only validated requests to instances); resiliency

features (retries, timeouts, deadlines, etc.); robust load balancing

algorithms; control over request routing (useful for things like CI/CD release

patterns); the ability to introduce and manage TLS termination between

communication endpoints; rich sets of metrics to provide instrumentation at the

service-to-service layer ...
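
To make "circuit breaking" concrete, here is a minimal sketch of the general pattern in plain Python. It is not Istio's implementation or configuration (a mesh applies the idea in its proxies rather than in application code), and the class name, parameters and defaults are assumptions chosen for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures  # consecutive failures before opening
        self.reset_after = reset_after    # cool-down in seconds
        self.clock = clock                # injectable so tests can fake time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast instead of piling load onto a struggling service.
                raise CircuitOpenError("circuit open; call rejected")
            # Cool-down elapsed: allow a trial call (half-open state).
            # A single failure now re-opens the circuit immediately.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

Wrapping each downstream call in `breaker.call(...)` means that once a service has failed repeatedly, callers are turned away immediately for the cool-down period, which is how the pattern keeps one failing service from dragging down the services that depend on it.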

Is Explainability In AI Always Necessary?

With increasing sophistication and completeness, the system becomes less

understandable. “As a model grows more realistic, it becomes more difficult to

understand,” said David Hauser at the recently concluded machine learning

developers conference. According to Hauser, clients want the model to be

understandable and realistic. This is another paradox a data scientist has to

live with. He also stressed that understandable solutions give up on accuracy.

For instance, network pruning is one such technique that takes a hit on

accuracy. The moment non-linearities or interactions are introduced, the

answers become less intuitive. ... One of the vital purposes of explanations

is to improve ML engineers’ understanding of their models to refine and

improve performance. Since machine learning models are “dual-use”,

explanations or other tools could enable malicious users to increase

capabilities and performance of undesirable systems. There is no denying that

explanations allow model refinement. And, as we go forward, apart from the

debugging and auditing of the models, organisations are looking at data

privacy through the lens of explainability.

Leaker Dismisses MobiKwik's Not-So-Nimble Breach Denial

MobiKwik hasn't done itself any favors in its handling of this episode, noting

that when the allegedly stolen data came to light in February, it "undertook a

thorough investigation with the help of external security experts and did not

find any evidence of a breach." Subsequently, after they reviewed the leaker's

sample of stolen data, "some users have reported that their data is visible on

the dark web," it adds, but then it says other breaches must be to blame.

"While we are investigating this, it is entirely possible that any user could

have uploaded her/ his information on multiple platforms. Hence, it is

incorrect to suggest that the data available on the dark web has been accessed

from MobiKwik or any identified source," MobiKwik claims. But the company says

that despite having already brought in "external security experts" to

investigate, it's now bringing in more, "to conduct a forensic data security

audit." Hence, it's unclear what the first group of investigators might have

done. ... Reuters on Thursday, citing an anonymous source with knowledge of

the discussions, reported that the Reserve Bank of India was "not happy" with

MobiKwik's statements, and ordered it to immediately launch a full digital

forensic investigation.

New Storage Trends Promise to Help Enterprises Handle a Data Avalanche

Data virtualization has been around for some time, but with increasing data

usage, complexity, and redundancy, the approach is gaining increasing traction.

On the downside, data virtualization can be a performance drag if the

abstractions, or data mappings, are too complex, requiring extra processing,

Linthicum noted. There's also a longer learning curve for developers, often

requiring more training. ... While not exactly a cutting-edge technology,

hyper-converged storage is also being adopted by a growing number of

organizations. The technology typically arrives as a component within a

hyper-converged infrastructure in which storage is combined with computing and

networking in a single system, explained Yan Huang, an assistant professor of

business technologies at Carnegie Mellon University's Tepper School of Business.

Huang noted that hyper-converged storage streamlines and simplifies data

storage, as well as the processing of the stored data. "It also allows

independently scaling computing and storage capacity in a disaggregated way,"

she said.

The importance of tech, training and education in data classification

We have seen how automation plays a key role in establishing a firm foundation

for an organisation’s security culture, but given employees play such a vital

role in ensuring that business maintains a strong data privacy posture, the

ability to work with stakeholders and users to understand data protection

requirements and policies is key. Security and data protection education must be

conducted company-wide and must exist at a level that is workable and

sustainable. Regular security awareness training and a company-wide inclusive

security culture within the business will ensure that data security becomes a

part of everyday working practice, embedded into all actions and the very heart

of the business. A robust data protection protocol is critical for all

organisations, and will particularly be the case as we move beyond Covid-19 into

the new normal. Delivering optimal operational efficiencies, data management and

data classification provision under post-pandemic budget constraints will be an

ongoing business-critical challenge. To do nothing, however, will set up an

organisation to fail, and we have already seen large fines incurred for those

that have not given data security enough of a priority.

Quote for the day:

"Leadership cannot just go along to get along. Leadership must meet the moral

challenge of the day." -- Jesse Jackson
