How to apply security at the source using GitOps

Let’s talk about the security aspects now. Most security tools detect potential vulnerabilities and issues at runtime, which is too late. To fix them, there are two options. One is a reactive manual process, such as directly modifying a parameter in your k8s object with kubectl edit. The better option is to make the fix at the source and propagate it all along your supply chain. This is what is called “Shift Security Left”: moving from fixing the problem when it is too late to fixing it before it happens. This doesn’t mean every security issue can be fixed at the source, but adding a security layer directly at the source can prevent some issues. ... Imagine you introduced a potential security issue in a specific commit by modifying some parameter in your application deployment. Leveraging Git’s capabilities, you can roll back the change directly at the source if needed, and the GitOps tool will redeploy your application without user interaction. ... Those benefits come out of the box and are good enough to justify using GitOps methodologies to improve your security posture, but GitOps is a combination of a few more things.
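
One way to add a security layer at the source is a policy check that runs in CI against the manifests stored in the Git repository, before the GitOps tool ever syncs them. The sketch below is a minimal, hypothetical example. It assumes the manifest has already been parsed into a dictionary (for example, from YAML) and checks two illustrative rules: no privileged containers, and `runAsNonRoot: true` on every container. The function name and the rules are assumptions for illustration, not part of any particular GitOps tool; dedicated policy-as-code tools are commonly used for this in practice.

```python
from typing import Any


def find_violations(manifest: dict[str, Any]) -> list[str]:
    """Return human-readable policy violations for a Deployment-like manifest.

    Hypothetical rules for illustration only: no privileged containers, and
    every container must set securityContext.runAsNonRoot to true.
    """
    violations: list[str] = []
    # Deployment layout: spec.template.spec.containers
    pod_spec = manifest.get("spec", {}).get("template", {}).get("spec", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {}) or {}
        if ctx.get("privileged") is True:
            violations.append(f"{name}: privileged containers are not allowed")
        if ctx.get("runAsNonRoot") is not True:
            violations.append(f"{name}: securityContext.runAsNonRoot must be true")
    return violations


# Usage: a manifest as it might look after parsing the YAML committed to Git.
deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {"template": {"spec": {"containers": [
        {"name": "app", "image": "example/app:1.0",
         "securityContext": {"privileged": True}},
    ]}}},
}
for problem in find_violations(deployment):
    print(problem)
# app: privileged containers are not allowed
# app: securityContext.runAsNonRoot must be true
```

A CI job could fail the pull request whenever the returned list is non-empty, so the insecure change never reaches the cluster. If one does slip through, reverting the commit in Git lets the GitOps tool roll it back.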

How to Open Source Your Project: Don’t Just Open Your Repo!

When opening your source code, the first task should be to select a license that fits your use case. In most cases, it is advisable to include your legal department in this discussion, and GitHub has many great resources to help you with this process. For StackRox, we took our cue from similar Red Hat projects and other popular open source projects and picked Apache 2.0 where possible. After you’ve decided which parts to open up and how you will open them, the next question is: how will you make them available? Besides the source code itself, for StackRox there are also Docker images, as mentioned. That means we also open the CI process to the public. For that to happen, I highly recommend you review your CI process. Assume that any insecure configuration will be used against you. Review common patterns in internal CI processes, such as credentials, service accounts, deployment keys, or storage access. Also, it should be abundantly clear who can trigger CI runs. CI credits and resources are usually quite limited, and CI integrations have been known to be abused to run cryptominers or other harmful software.
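
The hypothetical Python helper below illustrates one part of that review: checking CI configuration for credentials before opening it to the public. It flags lines that look like hard-coded secrets. The three patterns are assumptions chosen for the example; the `AKIA` prefix is the usual form of long-term AWS access key IDs. A real review would rely on a dedicated secret scanner and on the CI system's own secret store, not on a handful of regular expressions.

```python
import re

# Illustrative patterns only; real secret scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # Matches e.g. "db_password: hunter2" but skips values that start with "$",
    # which are usually references to CI-managed secrets or env variables.
    "inline password": re.compile(r"(?i)password\s*[:=]\s*['\"]?[^\s'\"$]+"),
}


def scan_ci_config(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) pairs for suspected hard-coded secrets."""
    findings: list[tuple[int, str]] = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


config = """env:
  AWS_KEY: AKIAABCDEFGHIJKLMNOP
  db_password: hunter2
  api_password: $API_PASSWORD
"""
for lineno, rule in scan_ci_config(config):
    print(f"line {lineno}: possible {rule}")
# line 2: possible AWS access key ID
# line 3: possible inline password
```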

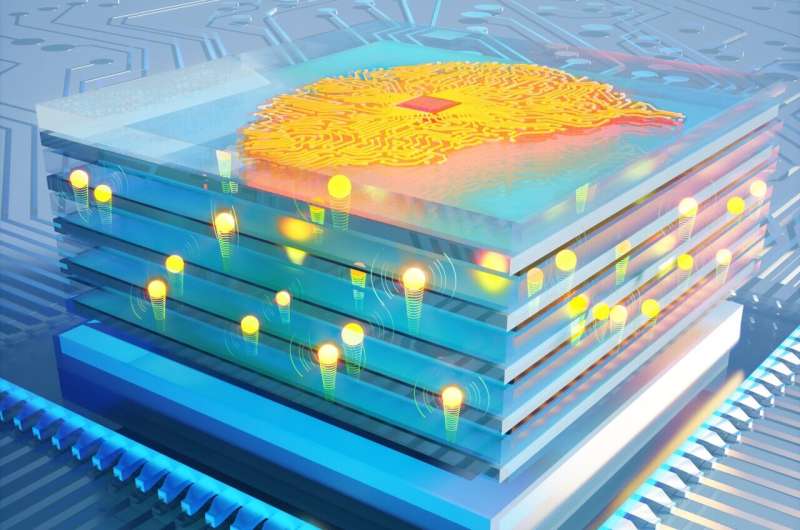

New hardware offers faster computation for artificial intelligence, with much less energy

Programmable resistors are the key building blocks in analog deep learning, just as transistors are the core elements of digital processors. By repeating arrays of programmable resistors in complex layers, researchers can create a network of analog artificial "neurons" and "synapses" that execute computations just like a digital neural network. This network can then be trained to achieve complex AI tasks like image recognition and natural language processing. A multidisciplinary team of MIT researchers set out to push the speed limits of a type of human-made analog synapse that they had previously developed. They used a practical inorganic material in the fabrication process that enables their devices to run 1 million times faster than previous versions, which is also about 1 million times faster than the synapses in the human brain. Moreover, this inorganic material makes the resistor extremely energy-efficient. Unlike materials used in the earlier version of their device, the new material is compatible with silicon fabrication techniques.
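
Arrays of programmable resistors can stand in for a neural network layer because of the idealized crossbar layout commonly used for analog in-memory computing. In that layout, physics performs the layer's matrix-vector multiplication. Each resistor's conductance plays the role of a weight, and the currents summing on each output wire give the weighted sum. The sketch below simulates that idealized behavior in plain Python. It ignores device noise, nonlinearity, and wire resistance, and it is an assumption-laden illustration, not a model of the specific MIT devices.

```python
def crossbar_currents(conductances: list[list[float]], voltages: list[float]) -> list[float]:
    """Output current on each column of an idealized resistive crossbar.

    conductances[i][j] is the conductance of the programmable resistor joining
    input row i to output column j; voltages[i] is the voltage applied to row i
    (consistent units assumed). By Ohm's law each resistor passes G * V, and by
    Kirchhoff's current law the currents meeting on a column add up, so column j
    carries sum_i G[i][j] * V[i]: a matrix-vector product.
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need exactly one input voltage per row")
    n_cols = len(conductances[0]) if n_rows else 0
    return [
        sum(conductances[i][j] * voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]


# Two inputs, two outputs: the conductances act as the layer's weights.
weights = [[1.0, 2.0],
           [3.0, 4.0]]
print(crossbar_currents(weights, [0.5, 0.25]))  # [1.25, 2.0]
```

In this picture, training the network means adjusting the conductances, that is, reprogramming the resistors.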

Simplifying DevOps and Infrastructure-as-Code (IaC)

Configuration drift is what happens when a declarative forward-engineering model no longer matches the state of a system. It can happen when a developer changes a model’s code without updating the systems built using that model. It can also be the result of an engineer who performs exploratory ad-hoc operations and changes a system but fails to go back into the template and update its code. Is it realistic to ban operators from ad-hoc exploration? Actually, some companies have policies forbidding any operator or developer from touching a live production environment. Ironically, when a production system breaks, it’s the first rule to be overridden: ad-hoc exploration is welcome from anyone able to get to the bottom of the issue. Engineers who adopt IaC usually don’t like the work that comes with remodeling an existing system. Still, because of high demand fueled by user frustration, there’s a reverse-engineering tool for every configuration management language; they just fail to live up to engineer expectations. In the best-case scenario, an engineer can copy and paste some segments of a reverse-engineered template into their own template but will need to write the rest manually.
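
Detecting drift boils down to diffing the state declared in the IaC template against the state observed on the live system. The hypothetical Python function below does that for nested dictionaries. The resource fields in the example (`instance_type`, `tags`) are invented for illustration. Real IaC tools obtain the live state by querying provider APIs, not from a hand-written dictionary.

```python
from typing import Any


def detect_drift(desired: dict[str, Any], actual: dict[str, Any], path: str = "") -> list[str]:
    """Recursively compare declared (desired) state with observed (actual) state.

    Returns one message per difference: keys missing on either side, or values
    that differ. Nested dictionaries are compared key by key.
    """
    drift: list[str] = []
    for key in sorted(desired.keys() | actual.keys()):
        where = f"{path}.{key}" if path else key
        if key not in actual:
            drift.append(f"{where}: declared but missing from the live system")
        elif key not in desired:
            drift.append(f"{where}: present on the live system but not in the template")
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            drift.extend(detect_drift(desired[key], actual[key], where))
        elif desired[key] != actual[key]:
            drift.append(f"{where}: template says {desired[key]!r}, live system has {actual[key]!r}")
    return drift


template = {"instance_type": "t3.small", "tags": {"env": "prod"}}
# Someone resized the instance and added a tag by hand, without touching the template.
live = {"instance_type": "t3.large", "tags": {"env": "prod", "owner": "alice"}}
for line in detect_drift(template, live):
    print(line)
# instance_type: template says 't3.small', live system has 't3.large'
# tags.owner: present on the live system but not in the template
```

Each reported difference is an ad-hoc change that never made it back into the template. Someone still has to decide whether to update the template or revert the system.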

Is Your Team Agile Or Fragile?

Agility is the ability to move your body quickly and easily, and also the ability to think quickly and clearly, so it makes sense that project managers chose this term. The opposite of agile is fragile, rigid, clumsy, slow, etc. Not only are these unflattering adjectives, but they would also describe dangerous traits in a team within an organization. ... How many times have you been in a meeting where you had an idea or disagreed with what most participants agreed on, but you didn't say anything and conformed to the norm? Why don't you speak up? In general, there might be two reasons for it. The most likely one is a lack of psychological safety: the feeling that your idea would be shut down or ignored. Perhaps you worried you would ruin team harmony, or maybe you quickly started doubting yourself. That is one of the surest ways of preventing progress and limiting growth. Psychological safety is the number-one trait of high-performing teams, according to Google’s Project Aristotle research. It is the ability to speak up without fearing any negative consequences, combined with the willingness to contribute, which leads us to the third factor.

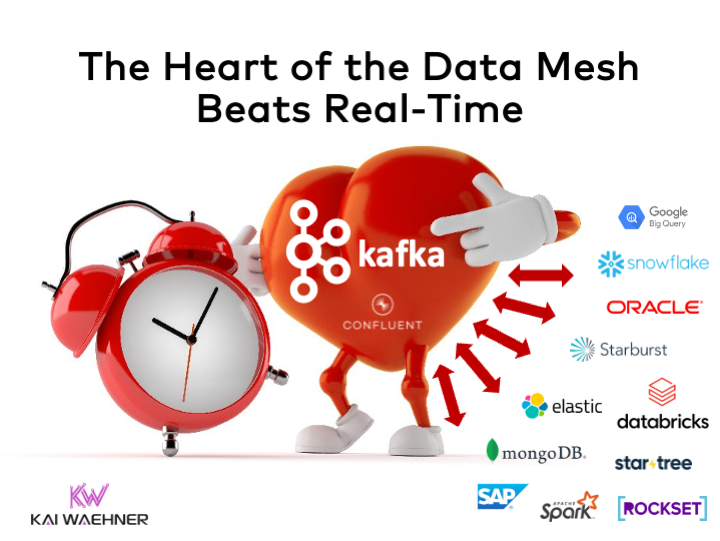

The Heart of the Data Mesh Beats Real-Time with Apache Kafka

A data mesh enables flexibility through decentralization and best-of-breed data products. At the heart of data sharing is the need for reliable real-time data at any scale between data producers and data consumers. Additionally, true decoupling between the decentralized data products is key to the success of the data mesh paradigm. Each domain must have access to shared data but also the ability to choose the right tool (e.g., technology, API, product, or SaaS) to solve its business problems. The de facto standard for data streaming is Apache Kafka. A cloud-native data streaming infrastructure that can link clusters with each other out of the box enables building a modern data mesh. No data mesh will use just one technology or vendor. Learn from inspiring posts by your favorite data product vendors, like AWS, Snowflake, Databricks, Confluent, and many more, to successfully define and build your custom data mesh. A data mesh is a journey, not a big bang. A data warehouse or data lake (or, nowadays, a lakehouse) cannot be the only infrastructure for a data mesh and its data products.
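
Much of that decoupling comes from the log-based model Kafka is built on. Producers append events to a durable log, and every consumer group tracks its own read position (offset). The toy Python class below illustrates only that idea. It is in-memory and single-process, and its class and method names are hypothetical, not Kafka's client API. It shows how two data products can consume the same stream independently, each at its own pace.

```python
from collections import defaultdict


class TopicLog:
    """Toy append-only log illustrating Kafka-style decoupling (not the Kafka API).

    Producers only append; each consumer group tracks its own offset, so groups
    read at their own pace without the producer knowing they exist.
    """

    def __init__(self) -> None:
        self._records: list[str] = []
        # Next offset to read, per consumer group (starts at 0).
        self._offsets: dict[str, int] = defaultdict(int)

    def append(self, record: str) -> int:
        """Append a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        """Return up to max_records unread records for a group and advance its offset."""
        start = self._offsets[group]
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = TopicLog()
for event in ["order-1", "order-2", "order-3"]:
    log.append(event)

print(log.poll("analytics"))              # ['order-1', 'order-2', 'order-3']
print(log.poll("billing", max_records=1))  # ['order-1']
print(log.poll("billing"))                # ['order-2', 'order-3']
```

The producer never waits for, or even knows about, the analytics and billing consumers. That independence is what lets each domain pick its own tools downstream of the shared stream.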

Microservice Architecture, Its Design Patterns And Considerations

Microservices architecture is one of the most useful architectures in the software industry. Followed properly, it can help teams build better software applications. Here you’ll get to know what microservices architecture is, the design patterns necessary for its efficient implementation, and why or why not to use this architecture for your next software project. ... Services in this pattern are easy to develop, test, deploy, and maintain individually. A small team is sufficient for, and responsible for, each service, which reduces the need for extensive communication and makes things easier to manage. This allows teams to adopt different technology stacks, upgrade the technology in existing services, and scale, change, or deploy each service independently. ... Both microservices and monoliths are architectural patterns used to develop software applications that serve business requirements. They each have their own benefits, drawbacks, and challenges. On the one hand, when a monolithic architecture serves as a large-scale system, this can make things difficult.

Discussing Backend For Front-end

Mobile clients changed this approach. The display area of mobile clients is smaller: just smaller for tablets and much smaller for phones. A possible solution would be to return all data and let each client filter out what it doesn't need. Unfortunately, phone clients also suffer from poorer bandwidth. Not every phone has 5G capabilities. Even if one did, it would be of little use in the middle of nowhere, with the nearest connection point providing only H+. Hence, over-fetching is not an option. Each client requires a different subset of the data. With monoliths, it’s manageable to offer multiple endpoints depending on the client. One could design a web app with a specific layer at the forefront. Such a layer detects the client from which the request originated and filters out irrelevant data in the response. Over-fetching within the web app itself is not an issue. Nowadays, microservices are all the rage. Everybody and their neighbours want to implement a microservice architecture. Behind microservices lies the idea of two-pizza teams.
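
The “specific layer at the forefront” can be sketched as a function that detects the client type and trims the response accordingly. The Python below is a minimal illustration that runs in-process rather than as a separate service. The User-Agent heuristic, the client categories, and the field lists are all assumptions made for the example. Because the trimming happens on the server, the phone never downloads fields it will not display.

```python
from typing import Any

# Hypothetical field sets per client type; a real layer would be tuned per front end.
FIELDS_BY_CLIENT = {
    "desktop": {"id", "name", "description", "price", "reviews", "related"},
    "tablet": {"id", "name", "description", "price"},
    "phone": {"id", "name", "price"},
}


def detect_client(user_agent: str) -> str:
    """Crude, illustrative client detection from a User-Agent string."""
    ua = user_agent.lower()
    # Check tablets first: iPad user agents can also contain "Mobile".
    if "ipad" in ua or "tablet" in ua:
        return "tablet"
    if "mobile" in ua or "iphone" in ua:
        return "phone"
    return "desktop"


def shape_response(product: dict[str, Any], user_agent: str) -> dict[str, Any]:
    """Keep only the fields relevant to the requesting client."""
    allowed = FIELDS_BY_CLIENT[detect_client(user_agent)]
    return {key: value for key, value in product.items() if key in allowed}


product = {"id": 1, "name": "Lamp", "description": "Desk lamp", "price": 20,
           "reviews": [], "related": []}
print(shape_response(product, "Mozilla/5.0 (iPhone; CPU iPhone OS 16_0 like Mac OS X) Mobile/15E148"))
# {'id': 1, 'name': 'Lamp', 'price': 20}
```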

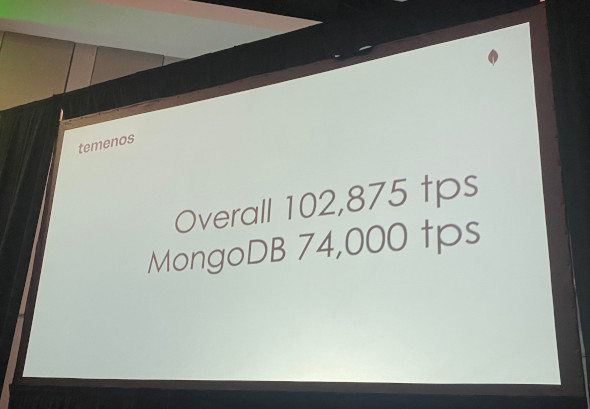

Temenos Benchmarks Show MongoDB Fit for Banking Work

In a mock scenario, Temenos’ research team created 100 million customers with 200 million accounts and pushed through 24,000 transactions and 74,000 MongoDB queries a second. Even with that considerable workload, the MongoDB database, running on an Amazon Web Services M80 instance, consistently kept response times under a millisecond, which is “exceptionally consistent,” Coleman said. (This translated into an overall response time of around 18 milliseconds for the end user’s app, which is so fast as to be essentially unnoticeable.) Coleman compared this test with an earlier one the company did in 2015 using all-Oracle gear. He admits this is not a fair comparison, given the older generation of hardware. Still, the comparison is eye-opening. In that setup, an Oracle 32-core cluster was able to push out 7,200 transactions per second. In other words, a single MongoDB instance was able to do the work of 10 Oracle 32-core clusters, using much less power.
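
For context, the ratios implied by the quoted figures are easy to compute. Against the Oracle cluster's 7,200 transactions per second, the MongoDB test's transaction rate is roughly 3.3 times higher, while its query rate is roughly 10 times higher:

```latex
\frac{24{,}000\ \text{transactions/s}}{7{,}200\ \text{transactions/s}} \approx 3.3,
\qquad
\frac{74{,}000\ \text{queries/s}}{7{,}200\ \text{transactions/s}} \approx 10.3
```

The tenfold figure therefore appears to line up with the query rate rather than the transaction rate. This is worth keeping in mind alongside Coleman's own caveat that the comparison is not a fair one.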

The role of the CPTO in creating meaningful digital products

It’s easy to see that product, design, and more traditional business analysis have a lot of crossover, so where do you draw the line, if at all? This then poses the problem of scale. Having a CPTO over a team of 100-150 is fine, but if you scale that to multiple disciplines across hundreds of people, it starts to become a genuine challenge. Again, having strong “heads of” protects you from this, but it forces the CxTO to become slightly more abstracted from reality. Creating autonomy (and thus decentralisation) in established product delivery teams helps make scaling less of a problem, but it requires both discipline and trust. Could you do this with both a CPO and a CTO? Yes, but making it one person’s explicit role to harmonise the two concerns does two things. It creates a more concrete objective, and it gives a single owner the job of resolving any conflict between the two worlds, centralising accountability. In reality, many CTOs are starting to pick up product thinking, and I’m sure the reverse is also true of CPOs. However, switching between two idioms is a big ask. It’s tiring (but rewarding), and it could mean your focus can’t be as deep in either camp.

Quote for the day:

"It is one thing to rouse the passion of a people, and quite another to lead them." -- Ron Suskind
