How legacy systems are threatening security in mergers & acquisitions

Legacy systems are far more likely to get hacked. This is especially true for companies that become involved in private equity transactions, such as mergers, acquisitions, and divestitures. These transactions often result in IT system changes and large movements of data and financial capital, which leave organizations acutely vulnerable. With details of these transactions being publicized or publicly accessible, threat actors can specifically target companies likely to be involved in such deals. We have seen two primary trends throughout 2023. First, threat groups are closely following news cycles, enabling them to quickly target entire portfolios with zero-day attacks designed to upend aging technologies, disrupting businesses and their supply chains. Second, corporate espionage cases are on the rise as threat actors embrace longer dwell times and employ greater calculation in their methods of monetizing attacks. Together, this means the number of strategically calculated attacks, which are more insidious than hasty smash-and-grabs, is on the rise.

How Frontend Devs Can Take Technical Debt out of Code

To combat technical debt, developers — even frontend developers — must see their work as a part of a greater whole, rather than in isolation, Purighalla advised. “It is important for developers to think about what they are programming as a part of a larger system, rather than just that particular part,” he said. “There’s an engineering principle, ‘Excessive focus on perfection of art compromises the integrity of the whole.’” That means developers have to think like full-stack developers, even if they’re not actually full-stack developers. For the frontend, that specifically means understanding the data that underlies your site or web application, Purighalla explained. “The system starts with obviously the frontend, which end users touch and feel, and interface with the application through, and then that talks to maybe an orchestration layer of some sort, of APIs, which then talks to a backend infrastructure, which then talks to maybe a database,” he said. “That orchestration and the frontend has to be done very, very carefully.” Frontend developers should take responsibility for the data their applications rely on, he said.

Digital Innovation: Getting the Architecture Foundations Right

While the benefits of modernization are clear, companies don’t need to be cutting edge everywhere, but they do need to apply the appropriate architectural patterns to the appropriate business processes. For example, Amazon Prime recently moved away from a microservices-based architecture for streaming media. In considering the additional complexity of service-oriented architectures, the company decided that a “modular monolith” would deliver most of the benefits for much less cost. Companies that make a successful transition to modern enterprise architectures get a few things right. ... Enterprise technology architecture isn’t something that most business leaders have had to think about, but they can’t afford to ignore it any longer. Together with the leaders of the technology function, they need to ask whether they have the right architecture to help them succeed. Building a modern architecture requires ongoing experimentation and a commitment to investment over the long term.

GenAI isn’t just eating software, it’s dining on the future of work

As we step into this transformative era, the concept of “no-collar jobs” takes center stage. Paul introduced this idea in his book “Human + Machine”: new roles are expected to emerge that don’t fit into the traditional white-collar or blue-collar categories, giving rise to what he called “no-collar jobs.” These roles defy conventional categories, relying increasingly on digital technologies, AI, and automation to enhance human capabilities. As these new roles emerge, the only threat is to those “who don’t learn to use the new tools, approaches and technologies in their work.” While this new future involves a transformation of tasks and roles, it does not necessitate jobs disappearing. ... Just as AI has become an integral part of enterprise software today, GenAI will follow suit. In the coming year, we can expect to see established software companies integrating GenAI capabilities into their products. “It will become more common for companies to use generative AI capabilities like Microsoft Dynamics Copilot, Einstein GPT from Salesforce, or GenAI capabilities from ServiceNow or other capabilities that will become natural in how they do things.”

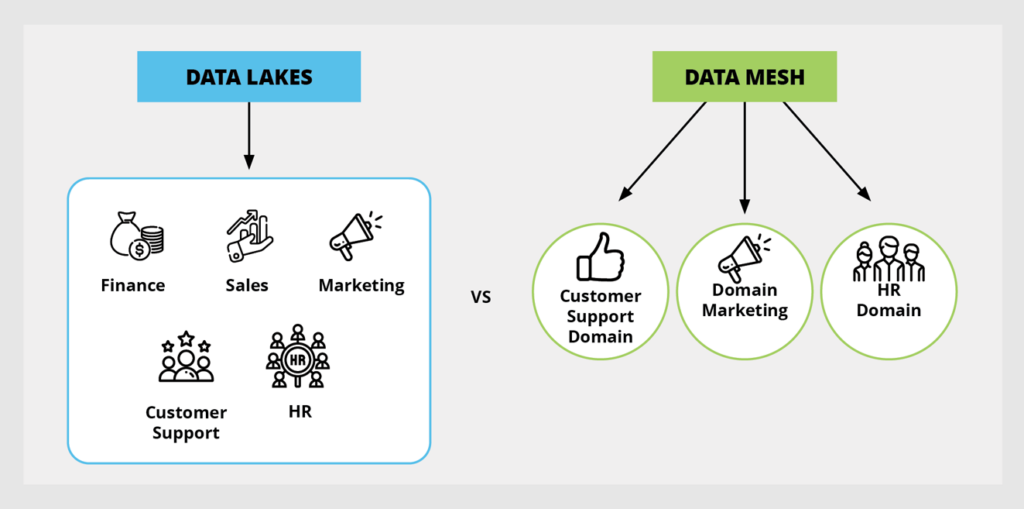

The components of a data mesh architecture

In a monolithic data management approach, technology drives ownership. A single data engineering team typically owns all the data storage, pipelines, testing, and analytics for multiple teams, such as Finance and Sales. In a data mesh architecture, business function drives ownership. The data engineering team still owns a centralized data platform that offers services such as storage, ingestion, analytics, security, and governance. But teams such as Finance and Sales would each own their data and its full lifecycle (e.g. making code changes and maintaining code in production). Moving to a data mesh architecture brings numerous benefits: it removes roadblocks to innovation by creating a self-service model for teams to create new data products; it democratizes data while retaining centralized governance and security controls; and it decreases data project development cycles, saving money and time that can be driven back into the business. Because it has evolved from previous approaches to data management, data mesh uses many of the same tools and systems that monolithic approaches use, yet exposes these tools in a self-service model combining agility, team ownership, and organizational oversight.
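
The ownership split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `DataProduct`, `DataPlatform`, and the PII rule are hypothetical names and policies invented for the example, not part of any real data mesh tool. The central platform keeps the catalog and enforces one governance check, while each domain team registers and owns its own products.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """A dataset owned end to end by one business domain (hypothetical model)."""
    name: str
    owner_team: str
    contains_pii: bool = False
    access_roles: set[str] = field(default_factory=set)


class DataPlatform:
    """Central platform: shared catalog and governance, but no data ownership."""

    def __init__(self) -> None:
        self._catalog: dict[str, DataProduct] = {}

    def register(self, product: DataProduct) -> None:
        # Illustrative governance rule: PII products must declare who may read them.
        if product.contains_pii and not product.access_roles:
            raise ValueError(f"{product.name}: PII products must declare access roles")
        if product.name in self._catalog:
            raise ValueError(f"{product.name}: already registered")
        self._catalog[product.name] = product

    def products_owned_by(self, team: str) -> list[str]:
        # Ownership is recorded per domain team, not per technology.
        return sorted(p.name for p in self._catalog.values() if p.owner_team == team)


# Self-service: each domain team registers its own products.
platform = DataPlatform()
platform.register(DataProduct("invoices", owner_team="Finance"))
platform.register(
    DataProduct("leads", owner_team="Sales", contains_pii=True,
                access_roles={"sales-analyst"})
)
print(platform.products_owned_by("Finance"))
```

The design choice to note is that the platform never edits a product; it only accepts or rejects registrations, which is one simple way to combine team ownership with centralized oversight.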

Six major trends in data engineering

Some modern data warehouse solutions, including Snowflake, allow data providers to seamlessly share data with users by making it available as a feed. This does away with the need for pipelines, as live data is shared in real time without having to move the data. In this scenario, providers do not have to create APIs or FTPs to share data and there is no need for consumers to create data pipelines to import it. This is especially useful for activities such as data monetisation or company mergers, as well as for sectors such as the supply chain. ... Organisations that use data lakes to store large sets of structured and semi-structured data are now tending to create traditional data warehouses on top of them, thus generating more value. Known as a data lakehouse, this single platform combines the benefits of data lakes and warehouses. It is able to store unstructured data while providing the functionality of a data warehouse, to create a strategic data storage/management system. In addition to providing a data structure optimised for reporting, the data lakehouse provides a governance and administration layer and captures specific domain-related business rules.

From legacy to leading: Embracing digital transformation for future-proof growth

Digital transformation without a clear vision and roadmap is identified as a major reason for failure. Many businesses adopt change because of emerging trends and rapid innovation without evaluating their existing systems or business requirements. To avoid such failure, every tech leader must develop a clear vision and a comprehensive roadmap aligned with organizational goals, ensuring each step of the transformation contributes to the overarching vision. ... The rapid pace of technological change often outpaces the availability of skilled professionals. In the meantime, tech leaders may struggle to find individuals with the right expertise to drive the transformation forward. To address this, businesses should focus on strategic upskilling using IT value propositions and hiring business-minded technologists. Furthermore, investing in individual workforce development can bridge this gap effectively. ... Many organizations grapple with legacy systems and outdated infrastructure that may not seamlessly integrate with modern digital solutions.

7 Software Testing Best Practices You Should Be Talking About

What sets the very best testers apart from the pack is that they never lose sight of why they’re conducting testing in the first place, and that means putting user interest first. These testers understand that testing best practices aren’t necessarily things to check off a list, but rather steps to take to help deliver a better end product to users. To become such a tester, you need to always consider software from the user’s perspective and take into account how the software needs to work in order to deliver on the promise of helping users do something better, faster and easier in their daily lives. ... In order to keep an eye on the bigger picture and test with the user experience in mind, you need to ask questions, and lots of them. Testers have a reputation for asking questions, and it often comes across as them trying to prove something, but there’s actually an important reason why the best testers ask so many questions.

Why Data Mesh vs. Data Lake Is a Broader Conversation

Most businesses with large volumes of data use a data lake as their central repository to store and manage data from multiple sources. However, the growing volume and varied nature of data in data lakes make data management challenging, particularly for businesses operating across various domains. This is where a data mesh approach can tie in to your data management efforts. The data mesh is a microservice-style, distributed approach to data management whereby extensive organizational data is split into multiple smaller domains and managed by domain experts. The value provided by implementing a data mesh for your organization includes simpler management and faster access to your domain data. By building a data ecosystem that implements a data lake with data mesh thinking in mind, you can grant every domain operating within your business its own product-specific data lake. This product-specific data lake helps provide cost-effective and scalable storage for housing your data and serving your needs. Additionally, with proper management by domain experts like data product owners and engineers, your business can serve independent but interoperable data products.

The Hidden Costs of Legacy Technology

Maintaining legacy tech can prove to be every bit as expensive as a digital upgrade. This is because IT staff have to spend time and money to keep the obsolete software functioning. This wastes valuable staff hours that could be channeled into improving products, services, or company systems. A report from Dell estimates that organizations currently allocate 60-80% of their IT budget to maintaining existing on-site hardware and legacy apps, which leaves only 20-40% of the budget for everything else. ... No company can defer upgrading its tech indefinitely: sooner or later, the business will fail as its rivals outpace it. Despite this urgency, many business leaders mistakenly believe that they can afford to defer their tech improvements and rely on dated systems in the meantime. However, this is a misapprehension and can lead to ‘technical debt.’ ‘Technical debt’ describes the phenomenon in which the use of legacy systems defers short-term costs in favor of long-term losses that are incurred when reworking the systems later on.
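
To make the 60-80% figure concrete, here is a small worked calculation in Python. The 10 million budget is a made-up number chosen for illustration, and `discretionary_budget` is a hypothetical helper; only the percentage range comes from the estimate quoted above.

```python
def discretionary_budget(total_budget: int, maintenance_pct: int) -> float:
    """Return what is left of an IT budget after legacy maintenance.

    maintenance_pct is a whole-number percentage (for example 60 for 60%).
    """
    if not 0 <= maintenance_pct <= 100:
        raise ValueError("maintenance_pct must be between 0 and 100")
    return total_budget * (100 - maintenance_pct) / 100


# Hypothetical annual IT budget, used only for illustration.
budget = 10_000_000

best_case = discretionary_budget(budget, 60)   # 60% spent on maintenance
worst_case = discretionary_budget(budget, 80)  # 80% spent on maintenance

print(f"Left for everything else: {worst_case:,.0f} to {best_case:,.0f}")
```

Under these assumptions, moving from the low end to the high end of the maintenance range halves the money available for new work.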

Quote for the day:

"Always remember, your focus determines your reality." -- George Lucas
