How to Future-Proof Your IT Organization

Effective future-proofing begins with strong leadership support and investments in essential technologies, such as the cloud and artificial intelligence (AI). Leaders should encourage an agile mindset across all business segments to improve processes and embrace potentially useful new technologies, says Bess Healy ... Important technology advancements frequently emerge from various expert ecosystems, utilizing the knowledge possessed by academic, entrepreneurial, and business startup organizations, Velasquez observes. “Successful IT leaders encourage team members to operate as active participants in these ecosystems, helping reveal where the business value really is while learning how new technology could play a role in their enterprises.” It’s important to educate both yourself and your teams on how technologies are evolving, says Chip Kleinheksel, a principal at business consultancy Deloitte. “Educating your organization about transformational changes while simultaneously upskilling for AI and other relevant technical skillsets, will arm team members with the correct resources and knowledge ahead of inevitable change.”

How one CSO secured his environment from generative AI risks

"We always try to stay ahead of things at Navan; it’s just the nature of our business. When the company decided to adopt this technology, as a security team we had to do a holistic risk assessment.... So I sat down with my leadership team to do that. The way my leadership team is structured is, I have a leader who runs product platform security, which is on the engineering side; then we have SecOps, which is a combination of enterprise security, DLP, detection and response; then there’s a governance, risk and compliance and trust function, and that’s responsible for risk management, compliance and all of that.

"So, we sat down and did a risk assessment for every avenue of the application of this technology. ...

"The way we do DLP here is it’s based on context. We don’t do blanket blocking. We always catch things and we run it like an incident. It could be insider risk or external; then we involve legal and HR counterparts. This is part and parcel with running a security team. We’re here to identify threats and build protections against them."
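
Context-based DLP can be illustrated with a small triage rule. This is a minimal Python sketch, not Navan's tooling. The event fields, the sensitive-label list, the severity rule and the notification list are all hypothetical. The point it shows is the one in the quote: an event is judged in context instead of being blanket-blocked, and a risky event is opened as an incident that brings in legal and HR.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Hypothetical classifier labels treated as sensitive.
SENSITIVE_LABELS = {"customer_pii", "payment_data", "source_code"}


@dataclass
class DlpEvent:
    user: str
    destination: str            # where the data was headed, e.g. a generative AI tool
    data_labels: set[str]       # labels from a (hypothetical) content classifier
    is_insider: bool            # internal user vs. external actor
    destination_approved: bool  # sanctioned tool or not


@dataclass
class Incident:
    event: DlpEvent
    risk_type: str              # "insider" or "external"
    severity: str
    notify: list[str] = field(default_factory=list)


def triage(event: DlpEvent) -> Optional[Incident]:
    """Judge an event in context instead of blanket-blocking it.

    Returns an Incident when the context looks risky, otherwise None.
    """
    sensitive = event.data_labels & SENSITIVE_LABELS
    if not sensitive or event.destination_approved:
        return None  # benign or sanctioned activity is allowed through
    return Incident(
        event=event,
        risk_type="insider" if event.is_insider else "external",
        severity="high" if "payment_data" in sensitive else "medium",
        # Either way, it is run as an incident with legal and HR involved.
        notify=["secops", "legal", "hr"],
    )


# Usage: an unsanctioned upload of customer PII becomes an incident.
event = DlpEvent("alice", "unapproved-ai.example", {"customer_pii"}, True, False)
incident = triage(event)
print(incident.risk_type, incident.severity, incident.notify)
# prints: insider medium ['secops', 'legal', 'hr']
```

Returning `None` for approved or non-sensitive traffic is what separates this from blanket blocking. Only events whose context looks risky generate work for the security team.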

Governor at Fed Cautiously Optimistic About Generative AI

The adverse impact of AI on jobs will be borne by only a small set of people, in contrast to the many workers throughout the economy who will benefit from it, Cook said. "When the world switched from horse-drawn transport to motor vehicles, jobs for stable hands disappeared, but jobs for auto mechanics took their place." And the effect goes beyond creating and eliminating positions. Economists encourage thinking about work in terms of tasks rather than jobs, she said, which means workers will need to acquire new skills to adapt. "As firms rethink their product lines and how they produce their goods and services in response to technical change, the composition of the tasks that need to be performed changes. Here, the portfolio of skills that workers have to offer is crucial." AI's benefits to society will depend on how workers adapt their skills to the changing requirements, how well their companies retrain or redeploy them, and how policymakers support those who are hardest hit by these changes, she said.

6 IT rules worth breaking — and how to get away with it

Automation, particularly when it incorporates artificial intelligence, offers many benefits, including higher productivity, greater efficiency, and cost savings. It should be, and usually is, a top IT priority. That is, unless an organization is dealing with a complex or novel task that requires a nuanced human touch, says Hamza Farooq, a startup founder and an adjunct professor at UCLA and Stanford. Breaking a blanket commitment to prioritizing automation can be justified when tasks involve creative problem-solving, ethical considerations, or situations in which AI’s understanding of a particular activity or process may be limited. “For instance, handling delicate customer complaints that demand empathy and emotional intelligence might be better suited for human interaction,” Farooq says. While sidelining automation may, in some situations, lead to more ethical outcomes and improved customer satisfaction, it also risks hampering key organizational processes. “Overreliance on manual intervention could impact scalability and efficiency in routine tasks,” Farooq warns, noting that it’s important to establish clear guidelines for identifying cases in which an automation process should be bypassed.
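
Farooq's "clear guidelines" for bypassing automation could be written down as an explicit routing rule. The Python sketch below is a hypothetical illustration. The task attributes and the confidence threshold are assumptions, not criteria Farooq specifies. Its conditions mirror the cases he names: novel or complex work, ethical considerations, a need for empathy, and limited AI understanding.

```python
from dataclasses import dataclass


@dataclass
class Task:
    kind: str                 # e.g. "password_reset", "customer_complaint"
    is_novel: bool            # complex or novel work with no established playbook
    has_ethical_impact: bool  # outcome raises ethical considerations
    needs_empathy: bool       # e.g. a delicate customer complaint
    model_confidence: float   # 0.0-1.0, as reported by the automation (hypothetical)


# Hypothetical threshold below which the AI's understanding is treated as too limited.
MIN_CONFIDENCE = 0.8


def route(task: Task) -> tuple[str, list[str]]:
    """Return ("human", reasons) when a guideline says to bypass automation,
    otherwise ("automation", [])."""
    reasons = []
    if task.is_novel:
        reasons.append("novel or complex task")
    if task.has_ethical_impact:
        reasons.append("ethical considerations")
    if task.needs_empathy:
        reasons.append("requires empathy")
    if task.model_confidence < MIN_CONFIDENCE:
        reasons.append("AI understanding may be limited")
    return ("human", reasons) if reasons else ("automation", reasons)


routine = Task("password_reset", False, False, False, 0.97)
complaint = Task("customer_complaint", False, False, True, 0.9)
print(route(routine))    # ('automation', [])
print(route(complaint))  # ('human', ['requires empathy'])
```

Returning the reasons along with the decision makes each bypass auditable. That helps keep manual handling the exception for routine tasks, which addresses Farooq's concern about scalability.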

Introduction to Azure Infrastructure as Code

One of the core benefits of infrastructure as code (IaC) is that it allows you to check infrastructure code files into source control, just as you would with application code. You can then version and manage your infrastructure code like any other codebase, which helps ensure consistency and enables collaboration among team members. In early project work, IaC allows for quick iteration on potential configuration options through automated deployments instead of a manual "hunt and peck" approach. Templates can be parameterized so that the same code assets are reused, making it easy to deploy repeatable environments such as dev, test and production. During the lifecycle of a system, IaC serves as an effective change-control mechanism. All changes to the infrastructure are first made in the code, which is then checked in to source control. The changes are then applied to each environment through the existing CI/CD processes and pipelines, ensuring consistency and reducing the risk of human error.
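
Parameterization can be made concrete by generating one parameters document per environment for a single shared template. The Python sketch below assumes an ARM-style parameter file layout (`$schema`, `contentVersion`, and `parameters` entries holding a `value`). The parameter names, SKUs, region and overrides are hypothetical. Only the values that differ between dev, test and production are written down.

```python
import json

# Values shared by every environment (hypothetical parameter names).
BASE = {"location": "eastus", "appServiceSku": "B1", "instanceCount": 1}

# Per-environment overrides; anything not listed falls back to BASE.
OVERRIDES = {
    "dev": {},
    "test": {"instanceCount": 2},
    "prod": {"appServiceSku": "P1v3", "instanceCount": 3},
}


def parameters_for(env: str) -> dict:
    """Build an ARM-style parameters document for one environment."""
    if env not in OVERRIDES:
        raise ValueError(f"unknown environment: {env}")
    values = {**BASE, **OVERRIDES[env], "environmentName": env}
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {name: {"value": value} for name, value in values.items()},
    }


if __name__ == "__main__":
    # In a real repo these documents would be saved next to the template,
    # checked in, and passed to the deployment step of the CI/CD pipeline.
    print(json.dumps(parameters_for("prod"), indent=2))
```

Keeping overrides small and explicit makes the differences between environments easy to review in a pull request, which supports the change-control role described above.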

National Cybersecurity Strategy: What Businesses Need to Know

Defending critical infrastructure, including systems and assets, is vital for national security, public safety, and economic prosperity. The National Cybersecurity Strategy (NCS) will standardize cybersecurity requirements for critical infrastructure (for example, mandatory penetration tests and formal vulnerability scans) and make it easier to report cybersecurity incidents and breaches. ... Once the national infrastructure is protected and secured, the NCS will step up efforts to neutralize threat actors that can compromise the cyber economy. This effort will rely on global cooperation and intelligence-sharing to counter rampant cybersecurity campaigns, and it will support businesses by using national resources to tactically disrupt adversaries. ... As the world’s largest economy, the U.S. has sufficient resources to lead the charge in future-proofing cybersecurity and driving confidence and resilience in the software sector. The goal is to make it possible for private firms to trust the ecosystem, build innovative systems, minimize damage, and provide stability to the market during catastrophic events.

Preparing for the post-quantum cryptography environment today

"Post-quantum cryptography is about proactively developing and building capabilities to secure critical information and systems from being compromised through the use of quantum computers," Rob Joyce, Director of NSA Cybersecurity, writes in the guide. "The transition to a secured quantum computing era is a long-term intensive community effort that will require extensive collaboration between government and industry. The key is to be on this journey today and not wait until the last minute." This aligns closely with Baloo's view that now is the time to engage, rather than waiting until the situation becomes urgent. The guide notes that the first set of post-quantum cryptographic (PQC) standards will be released in early 2024 "to protect against future, potentially adversarial, cryptanalytically-relevant quantum computer (CRQC) capabilities. A CRQC would have the potential to break public-key systems (sometimes referred to as asymmetric cryptography) that are used to protect information systems today."
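
A practical way to "be on this journey today" is to inventory where public-key cryptography is used, because those are the systems the guide says a CRQC could break. The Python sketch below is hypothetical: the inventory format and system names are assumptions. It flags entries that use public-key algorithm families vulnerable to Shor's algorithm. Symmetric ciphers such as AES-256 are generally regarded as much less exposed, so they are not flagged here.

```python
from dataclasses import dataclass

# Public-key families (upper-cased for comparison) that a CRQC running
# Shor's algorithm is expected to break.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "EDDSA", "X25519"}


@dataclass
class CryptoUse:
    system: str     # hypothetical system name
    purpose: str    # e.g. "TLS key exchange", "code signing"
    algorithm: str  # algorithm family as recorded in the inventory


def needs_pqc_migration(inventory: list[CryptoUse]) -> list[CryptoUse]:
    """Return the entries that rely on public-key algorithms a CRQC could break."""
    return [use for use in inventory if use.algorithm.upper() in QUANTUM_VULNERABLE]


inventory = [
    CryptoUse("payments-api", "TLS key exchange", "ECDH"),
    CryptoUse("build-server", "code signing", "RSA"),
    CryptoUse("backup-store", "data at rest", "AES-256"),
]
for use in needs_pqc_migration(inventory):
    print(use.system, use.purpose, use.algorithm)
```

Once the PQC standards are published, the flagged list becomes the migration backlog.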

Future of payments technology

Embedded finance uses technology to build into products and services the capability to move money in certain circumstances, such as paying a toll on a motorway. The idea is to embed finance into the consumer journey so that consumers don’t have to actively pay; instead, payment happens automatically based on a contract or agreement made in advance. Consumers pay without consciously having to dig out their debit card. One example is Uber, which many of us use without having to make an actual payment upfront. This is sometimes referred to as “contextual payments”, where the context of the situation allows the payment to be executed frictionlessly. ... Artificial intelligence is already being used in payments to improve the customer journey and how products are delivered. So far, this has mainly meant machine learning. Generative AI, where the AI itself is able to make decisions, will be the next generational jump and have a huge impact on payments, especially when it comes to protection against fraud. The problem is that artificial intelligence could be a positive or a negative, depending on who exploits it first, for good or ill.
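
The toll example can be sketched as an event-triggered charge against a pre-agreed mandate. This Python sketch is hypothetical: `Mandate`, `Ledger`, the per-charge limit and the gantry callback are illustrative names, not a real payment API. The payment is triggered by context (a car passing a toll gantry), and it succeeds only within the terms the consumer agreed to in advance.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass
class Mandate:
    """Consent given in advance, e.g. when registering a vehicle for tolls."""
    customer_id: str
    merchant: str
    max_per_charge: Decimal
    active: bool = True


@dataclass
class Ledger:
    charges: list[tuple[str, str, Decimal]] = field(default_factory=list)

    def charge(self, mandate: Mandate, amount: Decimal) -> bool:
        """Record a charge only if it falls within the agreed terms."""
        if not mandate.active or amount <= 0 or amount > mandate.max_per_charge:
            return False  # outside the agreement: an explicit payment would be needed
        self.charges.append((mandate.customer_id, mandate.merchant, amount))
        return True


def on_toll_gantry_read(plate: str, toll: Decimal,
                        mandates: dict[str, Mandate], ledger: Ledger) -> bool:
    """The context (a car passing the gantry) triggers payment; the driver does nothing."""
    mandate = mandates.get(plate)
    return mandate is not None and ledger.charge(mandate, toll)


mandates = {"AB12CDE": Mandate("cust-42", "motorway-toll", Decimal("10.00"))}
ledger = Ledger()
print(on_toll_gantry_read("AB12CDE", Decimal("2.50"), mandates, ledger))  # True
print(on_toll_gantry_read("ZZ99ZZZ", Decimal("2.50"), mandates, ledger))  # False
print(ledger.charges)  # [('cust-42', 'motorway-toll', Decimal('2.50'))]
```

The per-charge limit in the mandate keeps "frictionless" from meaning "unbounded". `Decimal` is used instead of `float` so that amounts are represented exactly.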

Hiring revolutionised: Tackling skill demands with agile recruitment

Tech-enabled smart assessment frameworks not only provide scalability and objectivity in talent assessment but also help build a perception of fairness amongst candidates and internal stakeholders. L&T uses virtual assessments at the entry level, and Venkat sees tremendous scope for them in mid-level and leadership assessments too. Apurva shared that when infusing technology, many companies make the mistake of merely making things fancy without actually creating a winning employee value proposition (EVP). The key to tech success is balancing personalised training with broader skill requirements. HR must build a strong talent funnel by cultivating thought leadership around employee quality, and must also focus on how prospective employees absorb the culture of the organisation. This is a huge change exercise that entails identifying the skill gap, restructuring job responsibilities, mapping specific roles to specific skills, assessing a person’s personality traits, and offering highly personalised onboarding so that people are productive from day one.

Designing Databases for Distributed Systems

As the name suggests, this pattern proposes that each microservices manages its

own data. This implies that no other microservices can directly access or

manipulate the data managed by another microservice. Any exchange or

manipulation of data can be done only by using a set of well-defined APIs. The

figure below shows an example of a database-per-service pattern. At face value,

this pattern seems quite simple. It can be implemented relatively easily when we

are starting with a brand-new application. However, when we are migrating an

existing monolithic application to a microservices architecture, the demarcation

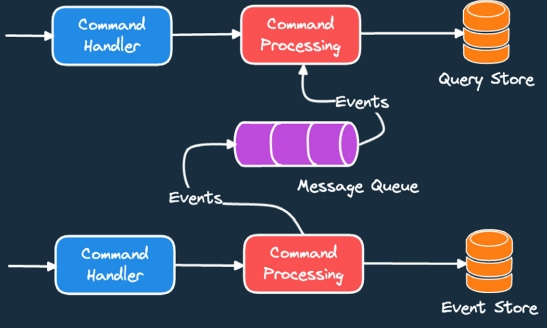

between services is not so clear. ... In the command query responsibility

segregation (CQRS) pattern, an application listens to domain events from other

microservices and updates a separate database for supporting views and queries.

We can then serve complex aggregation queries from this separate database while

optimizing the performance and scaling it up as needed.
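
Both patterns can be seen together in a small in-memory sketch. The assumptions are stated here and in the comments: plain dicts stand in for each service's separate database, and a synchronous `EventBus` stands in for a real message broker. `OrderService`, `OrderPlaced` and `CustomerSpendView` are hypothetical names. The order service owns its data and exposes it only through its methods. The CQRS read side builds its own query-optimized store from the domain events.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    customer_id: str
    amount_cents: int


class EventBus:
    """Synchronous in-process stand-in for a message broker."""

    def __init__(self) -> None:
        self._subscribers: list[Callable[[OrderPlaced], None]] = []

    def subscribe(self, handler: Callable[[OrderPlaced], None]) -> None:
        self._subscribers.append(handler)

    def publish(self, event: OrderPlaced) -> None:
        for handler in self._subscribers:
            handler(event)


class OrderService:
    """Owns its data; other services go through these methods (its API)."""

    def __init__(self, bus: EventBus) -> None:
        self._db: dict[str, OrderPlaced] = {}  # private store: database per service
        self._bus = bus

    def place_order(self, order_id: str, customer_id: str, amount_cents: int) -> None:
        event = OrderPlaced(order_id, customer_id, amount_cents)
        self._db[order_id] = event
        self._bus.publish(event)  # announce the change as a domain event

    def get_order(self, order_id: str) -> Optional[OrderPlaced]:
        return self._db.get(order_id)


class CustomerSpendView:
    """CQRS read side: a separate store built from events and shaped for queries."""

    def __init__(self, bus: EventBus) -> None:
        self._totals: defaultdict[str, int] = defaultdict(int)
        bus.subscribe(self._on_order_placed)

    def _on_order_placed(self, event: OrderPlaced) -> None:
        self._totals[event.customer_id] += event.amount_cents

    def top_customers(self, n: int) -> list[tuple[str, int]]:
        return sorted(self._totals.items(), key=lambda kv: kv[1], reverse=True)[:n]


bus = EventBus()
orders = OrderService(bus)
view = CustomerSpendView(bus)
orders.place_order("o1", "c1", 3000)
orders.place_order("o2", "c2", 5000)
orders.place_order("o3", "c1", 4000)
print(view.top_customers(2))  # [('c1', 7000), ('c2', 5000)]
```

Because this bus is synchronous, the view updates immediately. With a real broker, the read model would typically be eventually consistent with the order service's own database, which is the usual trade-off for being able to scale and tune the query side independently.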

Quote for the day:

"Wisdom equals knowledge plus courage. You have to not only know what to do and when to do it, but you have to also be brave enough to follow through." -- Jarod Kintz
