Software is eating the world–and supercharging global inequality

There’s no denying that the world is rapidly changing, with innovations such as artificial intelligence, robotics, blockchain, and the cloud. Each of the previous three industrial revolutions, including the most recent digital revolution, led to economic growth and helped eliminate mass poverty in many countries. However, these moments also concentrated wealth in the hands of those who control new technologies. VCs will play an increasing role in determining which technologies shape our daily lives over the next ten years, and we must ensure that technology is used to modernize antiquated industries and create a better standard of living worldwide. Ensuring that this new wave of technology benefits as many people as possible is the challenge of our generation, especially considering that the looming climate crisis will disproportionately impact lower-income and marginalized communities. Bitcoin farms do not benefit maize farmers in Lagos who face deadly floods, but VCs' obsession with crypto generates outsized investment, and the wealthy get wealthier.

How open source is unlocking climate-related data's value

One of the barriers to assessing the cost of risks and opportunities in climate-change research is the lack of reliable, readily accessible climate data. This data gap prevents financial-sector stakeholders and others from assessing the financial stability of mitigation and resilience efforts and from channeling global capital flows toward them. It also forces businesses into costly, improvised data ingestion and curation efforts without the benefit of shared data or open protocols. To address these problems, the Open Source Climate (OS-Climate) initiative is building an open data science platform that supports complex data ingestion, processing, and quality management requirements. It takes advantage of the latest advances in open source data platform tools and machine learning, as well as the scenario-based predictive analytics being developed by OS-Climate community members. To build a data platform that is open, auditable, and supports durable, repeatable deployments, the OS-Climate initiative leverages the Operate First program.
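How the platform implements quality management is not described here. Purely as an illustration of the kind of automated check that otherwise gets done by hand during curation, here is a minimal Python sketch of a validation gate at ingestion time. The record fields (`company_id`, `year`, `scope1_emissions_tco2e`), the plausibility window, and the function names are all hypothetical; they are not OS-Climate's schema or API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical record shape; field names are illustrative, not an OS-Climate schema.
@dataclass
class EmissionsRecord:
    company_id: str
    year: int
    scope1_emissions_tco2e: Optional[float]  # tonnes CO2e; None when not reported

def quality_issues(record: EmissionsRecord) -> list[str]:
    """Return human-readable problems found in a single record."""
    issues = []
    if not record.company_id.strip():
        issues.append("missing company_id")
    if not 1990 <= record.year <= 2100:  # assumed plausibility window
        issues.append(f"implausible year {record.year}")
    if record.scope1_emissions_tco2e is None:
        issues.append("missing scope 1 emissions")
    elif record.scope1_emissions_tco2e < 0:
        issues.append("negative emissions value")
    return issues

def ingest(records: list[EmissionsRecord]) -> tuple[list[EmissionsRecord], dict[int, list[str]]]:
    """Accept clean records; report rejected ones by their position in the batch."""
    accepted: list[EmissionsRecord] = []
    rejected: dict[int, list[str]] = {}
    for index, record in enumerate(records):
        problems = quality_issues(record)
        if problems:
            rejected[index] = problems
        else:
            accepted.append(record)
    return accepted, rejected

if __name__ == "__main__":
    batch = [
        EmissionsRecord("ACME", 2020, 1200.5),
        EmissionsRecord("", 2020, 300.0),
        EmissionsRecord("GLOBEX", 1850, -5.0),
    ]
    accepted, rejected = ingest(batch)
    print(f"{len(accepted)} accepted; rejected: {rejected}")
```

Rejected records are reported with reasons rather than silently dropped, so curators can see why a row failed. On a shared platform, checks like these could be written once and reused by every participant instead of being rebuilt by each business.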

Multicloud Strategy: How to Get Started?

Managing security in the cloud is a daunting task, especially in a multicloud environment. For this reason, the recommended approach is to establish a Cloud Security Control Framework early in any cloud migration strategy. But what does this mean? Today the best practice is to shift the security mindset from perimeter security to a more holistic approach that considers cybersecurity risks from the very design of the multicloud deployment. It starts by enabling the DevSecOps team to build and automate modular guardrails around the infrastructure and application code right from the beginning. You can think of these guardrails as cross-cloud security controls, in line with the current trend toward Zero Trust network architecture. Under this paradigm, all users and services are untrusted by default, even inside the security perimeter. This approach requires rethinking access controls, since workloads may be distributed and deployed across different cloud providers. Implementing security controls at every level is key: infrastructure, application code, services, networks, data, user access, and so on.
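As a rough sketch of what guardrails as cross-cloud security controls, plus a Zero Trust access check, could look like in code, the Python below assumes each provider's resources have already been normalized into one hypothetical `Resource` shape and then applies the same rules to all of them. The rule names, fields, and the `GRANTS` allow-list are illustrative assumptions, not any cloud provider's or policy engine's API.

```python
from dataclasses import dataclass, field
from typing import Optional

# Provider-neutral view of a resource. A real setup would map each cloud's
# native inventory into this shape; these fields are assumptions.
@dataclass
class Resource:
    provider: str  # e.g. "aws", "azure", "gcp"
    kind: str      # e.g. "storage_bucket", "vm"
    name: str
    public: bool = False
    encrypted_at_rest: bool = True
    tags: dict[str, str] = field(default_factory=dict)

# Guardrails as (rule name, predicate); a predicate returns True when compliant.
GUARDRAILS = [
    ("no-public-storage", lambda r: not (r.kind == "storage_bucket" and r.public)),
    ("encryption-at-rest", lambda r: r.encrypted_at_rest),
    ("owner-tag-required", lambda r: "owner" in r.tags),
]

def violations(resources: list[Resource]) -> list[tuple[str, str, str]]:
    """Apply the same guardrails to every resource, whichever cloud it lives in."""
    return [
        (r.provider, r.name, rule)
        for r in resources
        for rule, check in GUARDRAILS
        if not check(r)
    ]

@dataclass
class Request:
    principal: Optional[str]  # authenticated identity; None if unauthenticated
    identity_verified: bool   # e.g. valid token or certificate (assumed upstream check)
    source_internal: bool     # where the request came from; deliberately ignored
    action: str
    resource: str

# Explicit allow-list of (principal, action, resource); entries are hypothetical.
GRANTS = {("billing-service", "read", "invoices-db")}

def authorize(req: Request) -> bool:
    """Zero Trust style check: network location grants nothing on its own."""
    if req.principal is None or not req.identity_verified:
        return False
    return (req.principal, req.action, req.resource) in GRANTS
```

`authorize()` never consults `source_internal`, so a request from inside the network gets no extra trust. In practice, such rules are often expressed in a dedicated policy-as-code tool rather than hand-rolled code; the sketch only shows the shape of the idea.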

The future of enterprise: digging into data governance

Enterprise technology is always moving forward, and as more businesses move to a cloud-focused strategy, the boundaries of what that means keep evolving. New models such as serverless and multi-cloud are redefining how companies will need to manage the flow and ownership of their data, and they’ll require new ways of thinking about how data is governed. According to Syed, these new models will make the ability to decentralize data architecture while maintaining centralized governance policies even more important. “A lot of companies are going to invest in trying to figure out, ‘How do I build something that combines not just my one data source, but my data warehouse, my data lake, my low-latency data store and pretty much any data object I have?’ How do you bring it all together under one umbrella? The tooling has to be very configurable and flexible to meet all the different lines of businesses’ unique requirements, but also ensure all the central policies are being enforced while you are producing and consuming the data.”
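To make "decentralized data architecture, centralized governance" concrete, here is a minimal Python sketch with hypothetical names throughout: each team registers its own reader (warehouse, lake, low-latency store), and a single central policy masks columns classified as PII on every read. It covers only the consuming side of the quote; a fuller version would also validate data when it is produced.

```python
from collections.abc import Callable, Iterable

Row = dict[str, object]

# Central policy, defined once for the whole organization.
# Column classifications and role names are hypothetical.
PII_COLUMNS = {"email", "phone"}
ROLES_ALLOWED_PII = {"compliance"}

def enforce_policy(rows: Iterable[Row], consumer_roles: set[str]) -> list[Row]:
    """Mask PII columns unless the consumer holds an allowed role."""
    can_see_pii = bool(consumer_roles & ROLES_ALLOWED_PII)
    return [
        {col: (val if (can_see_pii or col not in PII_COLUMNS) else "***")
         for col, val in row.items()}
        for row in rows
    ]

class GovernedCatalog:
    """Sources stay decentralized; every read goes through the central policy."""

    def __init__(self) -> None:
        self._readers: dict[str, Callable[[], Iterable[Row]]] = {}

    def register(self, name: str, reader: Callable[[], Iterable[Row]]) -> None:
        # Each team plugs in its own store: warehouse, lake, low-latency store, ...
        self._readers[name] = reader

    def read(self, name: str, consumer_roles: set[str]) -> list[Row]:
        # Whatever the backing store, the same policy is applied here.
        return enforce_policy(self._readers[name](), consumer_roles)

if __name__ == "__main__":
    catalog = GovernedCatalog()
    catalog.register("warehouse.customers", lambda: [{"id": 1, "email": "a@example.com"}])
    catalog.register("lake.events", lambda: [{"id": 1, "event": "login", "phone": "555-0100"}])
    print(catalog.read("warehouse.customers", {"analyst"}))
    print(catalog.read("lake.events", {"compliance"}))
```

The design choice is that teams control their own storage and readers, while the policy lives in one place; changing `PII_COLUMNS` changes behavior for every source at once.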

Cloud security training is pivotal as demand for cloud services explodes

Organizations can be caught out by assuming that they can lift-and-shift their existing applications, services and data to the cloud, where they will be secure by default. In reality, migrating workloads to the cloud requires significant planning and due diligence, as well as adding cloud management expertise to the workforce. Workloads in the cloud rely on a shared responsibility model: in an IaaS model, the cloud provider assumes responsibility for the fabric of the cloud, and the customer assumes responsibility for the servers, services, applications and data within it. However, these boundaries can seem somewhat fuzzy, especially as there isn’t a uniform shared responsibility model across cloud providers, which can lead to misunderstandings for companies that use multi-cloud environments. With so much invested in cloud infrastructure, a general lack of awareness of cloud security issues and responsibilities, and a shortage of skills to manage and secure these environments, there is much to be done.
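One way to reduce such misunderstandings is to write the responsibility split down explicitly. The minimal Python sketch below encodes the IaaS split described in this paragraph (provider owns the cloud fabric; customer owns servers, services, applications and data) and flags any component with no agreed owner for review instead of assuming someone else covers it. The component names are illustrative, and a real map should come from each provider's own shared responsibility documentation.

```python
from enum import Enum

class Owner(Enum):
    PROVIDER = "cloud provider"
    CUSTOMER = "customer"

# IaaS split as described in the text. Other service models (PaaS, SaaS)
# and other providers would need their own maps.
IAAS_MODEL = {
    "cloud fabric": Owner.PROVIDER,
    "servers": Owner.CUSTOMER,
    "services": Owner.CUSTOMER,
    "applications": Owner.CUSTOMER,
    "data": Owner.CUSTOMER,
}

def customer_checklist(components: list[str], model: dict[str, Owner] = IAAS_MODEL) -> tuple[list[str], list[str]]:
    """Return (components the customer must secure, components with no agreed owner)."""
    ours: list[str] = []
    unassigned: list[str] = []
    for component in components:
        owner = model.get(component)
        if owner is Owner.CUSTOMER:
            ours.append(component)
        elif owner is None:
            # Fuzzy boundary: don't assume the provider covers it.
            unassigned.append(component)
    return ours, unassigned

if __name__ == "__main__":
    mine, review = customer_checklist(["cloud fabric", "servers", "data", "backups"])
    print("customer must secure:", mine)
    print("needs an agreed owner:", review)
```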

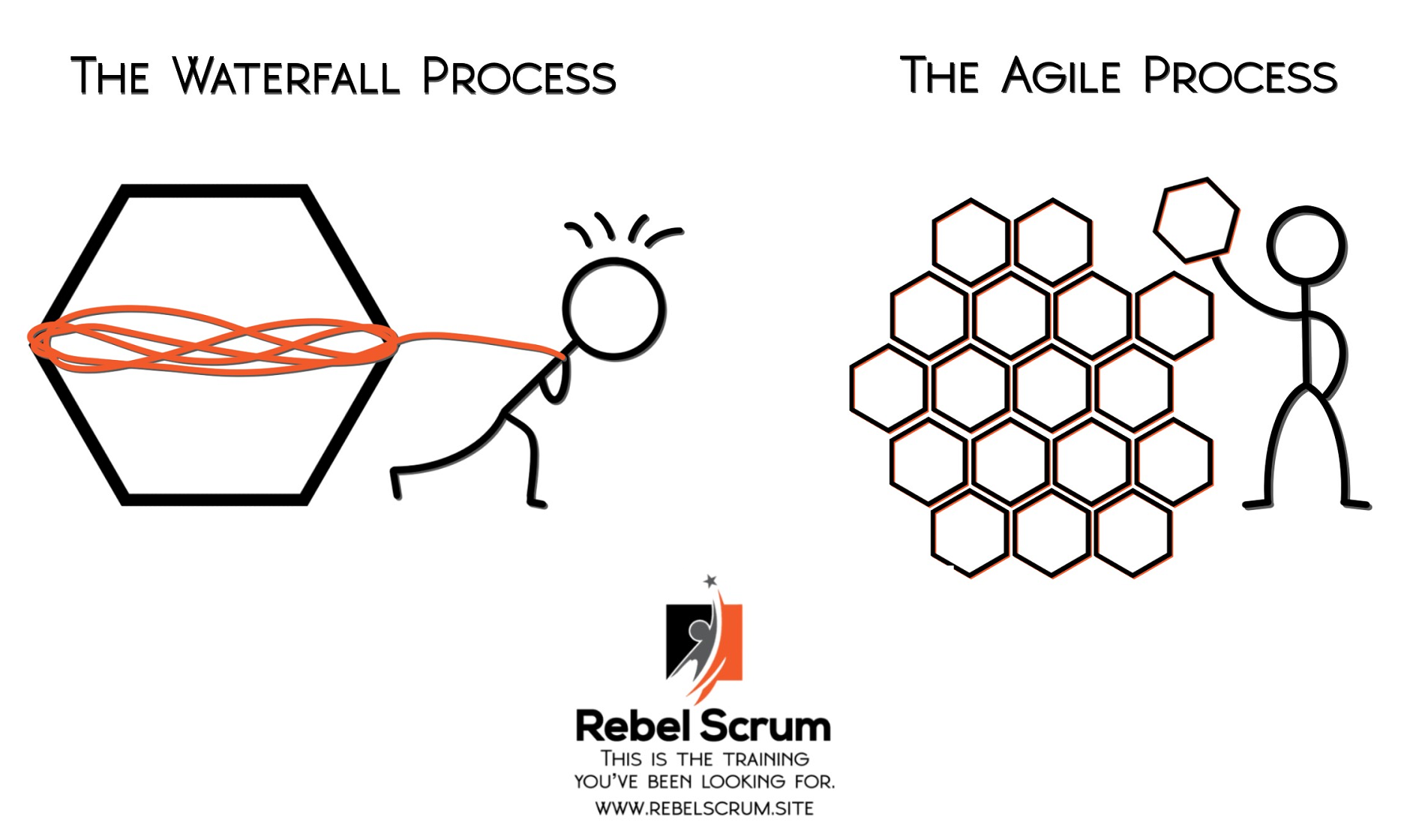

How the 12 principles in the Agile Manifesto work in real life

Leaders who work with agile teams focus on ensuring that the teams have the support (tools, access, resources) and environment (culture, people, external processes) they need, and then trust them to get the job done. This principle can scare leaders who have a more command-and-control management style. They wonder how they'll know whether their team is succeeding and focusing on the right things. My response to these concerns is to focus on the team’s outcomes. Are they delivering working product frequently? Are they making progress toward their goals? Those are the metrics that warrant attention. It is a necessary shift in perspective and mindset, and one that leaders as well as agile teams need to make to achieve the best results. To learn more about how to support agile teams, leaders should consider attending the Professional Agile Leadership - Essentials class. Successful agile leaders enable teams to deliver value by providing them with the tools they need to be successful, offering guidance when needed, embracing servant leadership, and focusing on outcomes.

How to Pick the Right Automation Project

With the beginning and end states clearly articulated, you can then specify a step-by-step journey, with projects sequenced according to which ones do the most in the early days to lay essential foundations for later initiatives. Here’s an example to illustrate how this approach can lead to better choices. A construction equipment manufacturer has three tempting areas to automate. The first is the solution a vendor is offering: a chatbot tool that can be implemented fairly simply in the internal IT help desk, with immediate impact on wait times and headcount. A second possibility is in finance, where sales forecasting could be enhanced by predictive modeling boosted by AI pattern recognition. The third idea is a big one: if the company could use intelligent automation to create a “connected equipment” environment on customer job sites, its business model could shift to new revenue streams from digital services such as monitoring and controlling machinery remotely. If you’re going for a relatively easy implementation and fast ROI, the first option is a no-brainer. If instead you’re looking for big publicity for your organization’s bold new vision, the third one’s the ticket.

Hybrid work and the Great Resignation lead to cybersecurity concerns

As many enterprises continue to feel the fallout of the Great Resignation, Code42’s report raises four main concerns. With 4.5 million employees leaving their jobs in November 2021 alone, the first big challenge for industry leaders is protecting their data. Many departing employees have accidentally or intentionally taken data with them to competitors in the same industry, and some have even leveraged their former employers’ data for ransom. According to the report, 49% of respondents said business leaders are concerned about the types of data that are leaving, and 52% said they are concerned about what information is being saved on local machines and personal hard drives. Business leaders are also more concerned with the content of exposed data than with how it is exposed. Another major concern is a disconnect over the problem of employees leaving in droves, which creates uncertainty about data ownership. Cybersecurity practitioners want more say in setting their company’s security policies and priorities, since they are the ones dealing with the risks their employers face.

Interoperability must be a priority for building Web3 in 2022

In addition to being time-consuming to build, once-off bridges are often highly centralized, acting as intermediaries between protocols. Built, owned, and operated by a single entity, these bridges become bottlenecks between different ecosystems. The controlling entity decides which tokens to support and which new networks to connect. ... Another impact of the siloed nature of the blockchain space is that developers are forced to choose between blockchain protocols and end up building dapps that can be used on only one network. This significantly shrinks the potential user base of any solution and prevents dapps from reaching mass adoption. Developers then have to spend resources deploying their apps across multiple networks, which for many means fragmenting their liquidity across network-specific applications. These struggles and drains on time and money show that a more universal solution for interoperability is the only way forward. Our industry, perhaps the most innovative in the world today and packed with the most talented minds, must prioritize the principles of universality, decentralization, security, and accessibility when it comes to interoperability.

Better Data Modeling with Lean Methodology

Lean is a methodology for organizational management based on Toyota’s 1930 manufacturing model, which has been adapted for knowledge work. Whereas Agile was developed specifically for software development, Lean was developed for organizations and focuses on continuous small improvements, combined with a sound management process, to minimize waste and maximize value. Quality standards are maintained through collaborative work and repeatable processes. ... Eliminate anything that does not add value, as well as anything blocking the ability to deliver results quickly. At the same time, empower everyone in the process to take responsibility for quality. Automate processes wherever possible, especially those prone to human error, and get constant test-driven feedback throughout development. Improvement is only possible through learning, which requires properly documenting the iterative process so that knowledge is not lost. All communication, conflict handling, and onboarding of team members should take place within a culture of respect.
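The advice to automate error-prone steps and get test-driven feedback carries over to data modeling. As a minimal sketch, assuming the model is kept as plain data (the `MODEL` dictionary, its entities, and `model_errors()` are hypothetical), the Python below checks for two easy-to-make mistakes, a primary key that is not an attribute and a foreign key that points nowhere, in a pytest-style test that could run on every change.

```python
# Hypothetical data model kept as plain data so it can be checked automatically.
MODEL = {
    "customer": {
        "primary_key": "customer_id",
        "attributes": {"customer_id", "name"},
        "foreign_keys": {},
    },
    "order": {
        "primary_key": "order_id",
        "attributes": {"order_id", "customer_id", "total"},
        "foreign_keys": {"customer_id": "customer"},  # column -> referenced entity
    },
}

def model_errors(model: dict) -> list[str]:
    """Return structural problems that are easy to introduce by hand."""
    errors = []
    for entity, spec in model.items():
        if spec["primary_key"] not in spec["attributes"]:
            errors.append(f"{entity}: primary key {spec['primary_key']!r} is not an attribute")
        for column, target in spec["foreign_keys"].items():
            if column not in spec["attributes"]:
                errors.append(f"{entity}: foreign key column {column!r} is not an attribute")
            if target not in model:
                errors.append(f"{entity}: {column!r} references unknown entity {target!r}")
    return errors

# pytest collects test_* functions automatically; run with `pytest this_file.py`.
def test_model_is_consistent():
    assert model_errors(MODEL) == []

if __name__ == "__main__":
    test_model_is_consistent()
    print("data model OK")
```

Keeping the model as data rather than only as diagrams is what makes this kind of constant, automated feedback possible.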

Quote for the day:

"Take time to deliberate; but when the time for action arrives, stop thinking and go in." -- Andrew Jackson
