These hackers are hitting victims with ransomware in an attempt to cover their tracks

Once installed on a compromised machine, PowerLess allows attackers to download

additional payloads, and steal information, while a keylogging tool sends all

the keystrokes entered by the user direct to the attacker. Analysis of PowerLess

backdoor campaigns appear to link attacks to tools, techniques and motivations

associated with Phosphorus campaigns. In addition to this, analysis of the

activity seems to link the Phosphorus threat group to ransomware attacks. One of

the IP addresses being used in the campaigns also serves as a command and

control server for the recently discovered Momento ransomware, leading

researchers to suggest there could be a link between the ransomware attacks and

state-backed activity. "A connection between Phosphorus and the Memento

ransomware was also found through mutual TTP patterns and attack infrastructure,

strengthening the connection between this previously unattributed ransomware and

the Phosphorus group," said the report. Cybereason also found a link between a

second Iranian hacking operation, named Moses Staff, and additional ransomware

attacks, which are deployed with the aid of another newly identified trojan

backdoor, dubbed StrifeWater.

Managing Technical Debt in a Microservice Architecture

Paying down technical debt while maintaining a competitive velocity delivering

features can be difficult, and it only gets worse as system architectures get

larger. Managing technical debt for dozens or hundreds of microservices is much

more complicated than for a single service, and the risks associated with not

paying it down grow faster. Every software company gets to a point where dealing

with technical debt becomes inevitable. At Optum Digital, a portfolio – also

known as a software product line – is a collection of products that, in

combination, serve a specific need. Multiple teams get assigned to each product,

typically aligned with a software client or backend service. There are also

teams for more platform-oriented services that function across several

portfolios. Each team most likely is responsible for various software

repositories. There are more than 700 engineers developing hundreds of

microservices. They take technical debt very seriously because the risks of it

getting out of control are very real.

How to approach modern data management and protection

European tech offers a serious alternative to US and Chinese models when it

comes to data. It’s also a necessary alternative and must have an evolution

towards European technologic autonomy, according to D’urso. “The loss of

economic autonomy will impact political power. In other words, data and

economic frailty will only further weaken Europe’s role at the global power

table and open the door to a variety of potential flash points (military,

cyber, industrial, social and so on). “Europe should be proud of its model,

which re-injects tax revenues into a fair and respectful social and cultural

framework. The GDPR policy is clearly at the heart of a European digital

mindset.” Luc went further and suggested that data regulation, including

management, protection and storage, is central to the upcoming French

presidential election and the current French Presidency of the Council of the

European Union. “The French Presidency of the Council of the EU will clearly

place data protection into the spotlight of political debates. It is not about

protectionism, but Europe must safeguard its data against foreign competition

to enhance its autonomy and build a prosperous future.

Edge computing strategy: 5 potential gaps to watch for

Edge strategies that depend on one-off “snowflake” patterns for their success

will cause long-term headaches. This is another area where experience with

hybrid cloud architecture will likely benefit edge thinking: If you already

understand the importance of automation and repeatability to, say, running

hundreds of containers in production, then you’ll see a similar value in terms

of edge computing. “Follow a standardized architecture and avoid fragmentation

– the nightmare of managing hundreds of different types of systems,” advises

Shahed Mazumder, global director, telecom solutions at Aerospike. “Consistency

and predictability will be key in edge deployments, just like they are key in

cloud-based deployments.” Indeed, this is an area where the cloud-edge

relationship deepens. Some of the same approaches that make hybrid cloud both

beneficial and practical will carry forward to the edge, for example. In

general, if you’ve already been solving some of the complexity involved in

hybrid cloud or multi-cloud environments, then you’re on the right path.

Top Scam-Fighting Tactics for Financial Services Firms

At its core, a scam is a situation in which the customer has been duped into

initiating a fraudulent transaction that they believe to be authentic.

Applying traditional controls for verifying or authenticating the activity may

therefore fail. But the underlying ability to detect the anomaly remains

critical. "Instead of validating the transaction or the individual, we are

going to have to place more importance on helping the customer understand that

what they believe to be legitimate is actually a lie," Mitchell of Omega

FinCrime says. He says fraud operations teams will need to become more

customer-centric, education-focused and careful in their interactions.

Mitigating a scam apocalypse will require mobilization across the market,

which includes financial institutions, solution providers, payment networks,

regulators, telecom carriers, social media companies and law enforcement

agencies. In the short term, investment priorities must expand beyond identity

controls to include orchestration controls and decision support systems that

allow financial institutions to see the interaction more holistically, Fooshee

says.

Better Integration Testing With Spring Cloud Contract

Imagine a simple microservice with a producer and a consumer. When writing

tests in the consumer project, you have to write mocks or stubs that model

the behavior of the producer project. Conversely, when you write tests in

the producer project, you have to write mocks or stubs that model the

behavior of the consumer project. As such, multiple sets of related,

redundant code have to be maintained in parallel in disconnected projects.

... “Mock” gets used in ways online that is somewhat generic, meaning any

fake object used for testing, and this can get confusing when

differentiating “mocks” from “stubs”. However, specifically, a “mock” is

an object that tests for behavior by registering expected method calls

before a test run. In contrast, a “stub” is a testable version of the

object with callable methods that return pre-set values. Thus, a mock

checks to see if the object being tested makes an expected sequence of

calls to the object being mocked, and throws an error if the behavior

deviates (that is, makes any unexpected calls). A stub does not do any

testing itself, per se, but instead will return canned responses to

pre-determined methods to allow tests to run.

Now for the hard part: Deploying AI at scale

Fortunately, AI tools and platforms have evolved to the point in which

more governable, assembly-line approaches to development are possible,

most of which are being harnessed under the still-evolving MLOps model.

MLOps is already helping to cut the development cycle for AI projects from

months, and sometimes years, down to as little as two weeks. Using

standardized components and other reusable assets, organizations are able

to create consistently reliable products with all the embedded security

and governance policies needed to scale them up quickly and easily. Full

scalability will not happen overnight, of course. Accenture’s Michael

Lyman, North American lead for strategy and consulting, says there are

three phases of AI implementation. ... To accelerate this process, he

recommends a series of steps, such as starting out with the best use cases

and then drafting a playbook to help guide managers through the training

and development process. From there, you’ll need to hone your

institutional skills around key functions like data and security analysis,

process automation and the like.

How to measure security efforts and have your ideas approved

In the world of cybersecurity, the most frequently asked question focuses

on “who” is behind a particular attack or intrusion – and may also delve

into the “why”. We want to know whom the threat actor or threat agent is,

whether it is a nation state, organized crime, an insider, or some

organization to which we can ascribe blame for what occurred and for the

damage inflicted. Those less familiar with cyberattacks may often ask,

“Why did they hack me?” As someone who has been responsible for managing

information risk and security in the enterprise for 20-plus years, I can

assure you that I have no real influence over threat actors and threat

agents – the “threat” part of the above equation. These questions are

rarely helpful, providing only psychological comfort, like a blanket for

an anxious child, and quite often distract us from asking the one question

that can really make a difference: “HOW did this happen?” But even those

who asked HOW – have answered with simple vulnerabilities – we had an

unpatched system, we lacked MFA, or the user clicked on a link.

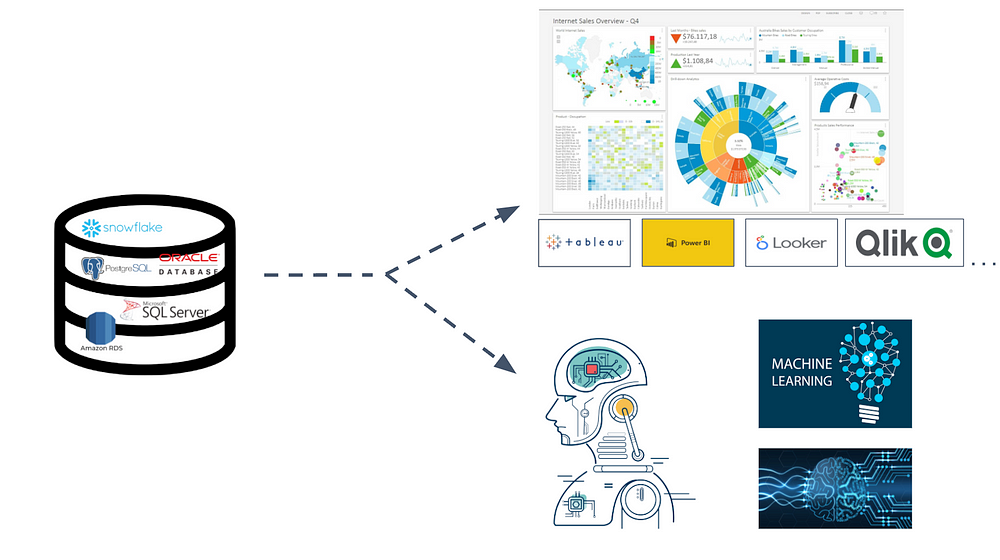

Data analysts are one of the data consumers. A data analyst answers

questions about the present such as: what is going on now? What are the

causes? Can you show me XYZ? What should we do to avoid/achieve ABC? What is

the trend in the past 3 years? Is our product doing well? ... Data

scientists are another data consumer. Instead of answering questions about

the present, they try to find patterns in the data and answer the questions

about the future, i.e prediction. This technique has actually existed for a

long time. You must have heard of it, it’s called statistics. Machine

learning and deep learning are the 2 most popular ways to utilise the power

of computers to find patterns in data. ... How do data analysts and

scientists get the data? How does the data come from user behaviour to the

database? How do we make sure the data is accountable? The answer is data

engineers. Data consumers cannot perform their work without having data

engineers set up the whole structure. They build data pipelines to ingest

data from users’ devices to the cloud then to the database.

The Value of Machine Unlearning for Businesses, Fairness, and Freedom

Another way machine unlearning could deliver value for both individuals and

organizations is the removal of biased data points that are identified after

model training. Despite laws that prohibit the use of sensitive data in

decision-making algorithms, there is a multitude of ways bias can find its

way in through the back door, leading to unfair outcomes for minority groups

and individuals. There are also similar risks in other industries, such as

healthcare. When a decision can mean the difference between life-changing

and, in some cases, life-saving outcomes, algorithmic fairness becomes a

social responsibility and often algorithms may be unfair due to the data

they are being trained on. For this reason, financial inclusion is an area

that is rightly a key focus for financial institutions, and not just for the

sake of social responsibility. Challengers and fintechs continue to innovate

solutions that are making financial services more accessible. From a model

monitoring perspective, machine unlearning could also safeguard against

model degradation.

Quote for the day:

"Good leaders make people feel that

they're at the very heart of things, not at the periphery." --

Warren G. Bennis

No comments:

Post a Comment