Reflections on Failure, Part One

There are many reasons why failures in security are inevitable. As I wrote

previously, the human minds practicing security are fatally flawed and will

therefore make mistakes over time. And even if our reasoning abilities were free

of bias, we would still not know everything there is to know about every

possible system. Security is about reasoning under uncertainty for both attacker

and defender, and sometimes our uncertainty will result in failure. None of us

know how to avoid all mistakes in our code, all configuration errors, and all

deployment issues. Further still, learning technical skills in general and

“security” in particular requires a large amount of trial and error over time.

But we can momentarily disregard our biased minds, the practically unbridgeable

gap between what we can know and what is true, and even the simple need to learn

skills and knowledge on both sides of the fence. The inevitability of failure

follows directly from our earlier observation about conservation. If failure is

conserved between red and blue, then every action in this space can be

interpreted as one.

APIs in Web3 with The Graph — How It Differs from Web 2.0

The Graph protocol is being built by a company called Edge & Node, whose CEO is

Yaniv Tal. Nader Dabit, a senior engineer whom I interviewed for a

recent post about Web3 architecture, also works for Edge & Node. The plan

for the company seems to be to build products based on The Graph, as well as

make investments in the nascent ecosystem. There’s some serious API DNA in Edge

& Node. Three of the founders (including Tal) worked together at MuleSoft,

an API developer company acquired by Salesforce in 2018. MuleSoft was founded in

2007, near the height of Web 2.0. Readers familiar with that era may also recall

that MuleSoft acquired the popular API-focused blog, ProgrammableWeb, in 2013.

Even though none of the Edge & Node founders were executives at MuleSoft,

it’s interesting that there is a thread connecting the Web 2.0 API world and

what Edge & Node hopes to build in Web3. There are a lot of technical

challenges for the team behind The Graph protocol — not least of all trying to

scale to accommodate multiple different blockchain platforms. Also, the

“off-chain” data ecosystem is complex and it’s not clear how compatible

different storage solutions are with each other.

Introducing Apache Arrow Flight SQL: Accelerating Database Access

While standards like JDBC and ODBC have served users well for decades, they fall

short for databases and clients which wish to use Apache Arrow or columnar data

in general. Row-based APIs like JDBC or PEP 249 require transposing data in this

case, and for a database which is itself columnar, this means that data has to

be transposed twice—once to present it in rows for the API, and once to get it

back into columns for the consumer. Meanwhile, although APIs like ODBC do provide

bulk access to result buffers, this data must still be copied into Arrow arrays

for use with the broader Arrow ecosystem, as implemented by projects like

Turbodbc. Flight SQL aims to get rid of these intermediate steps. Flight SQL

means database servers can implement a standard interface that is designed

around Apache Arrow and columnar data from the start. Just like how Arrow

provides a standard in-memory format, Flight SQL saves developers from having to

design and implement an entirely new wire protocol. As mentioned, Flight already

implements features like encryption on the wire and authentication of requests,

which databases do not need to re-implement.
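The double transposition described above can be sketched in plain Python. This is only an illustration of the overhead a row-based API imposes on columnar data; the sample columns and the helper names `columns_to_rows` and `rows_to_columns` are made up for the example and are not part of JDBC, PEP 249, Arrow, or Flight SQL.

```python
# Hypothetical result set as a columnar database might hold it: one list per column.
columns = {
    "id": [1, 2, 3],
    "name": ["a", "b", "c"],
}


def columns_to_rows(cols):
    """First transposition: expose columnar data as row tuples, as a row-based API would."""
    names = list(cols)
    return names, list(zip(*(cols[n] for n in names)))


def rows_to_columns(names, rows):
    """Second transposition: the columnar consumer rebuilds columns from the rows."""
    if not rows:
        return {n: [] for n in names}
    return {n: list(values) for n, values in zip(names, zip(*rows))}


names, rows = columns_to_rows(columns)
round_tripped = rows_to_columns(names, rows)
```

Both steps touch every value only to end up with the layout the data started in, which is the intermediate work a columnar interface is meant to avoid.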

The Graph (GRT) gains momentum as Web3 becomes the buzzword among techies

One of the main reasons for the recent increase in attention for The Graph is

the growing list of subgraphs offered by the network for popular decentralized

applications and blockchain protocols. Subgraphs are open application

programming interfaces (APIs) that can be built by anyone and are designed to

make data easily accessible. The Graph protocol is working on becoming a global

graph of all the world’s public information, which can then be transformed,

organized and shared across multiple applications for anyone to query. ... A

third factor helping boost the prospects for GRT is the rising popularity of

Web3, a topic and sector that has increasingly begun to make its way into

mainstream conversations. Web3 as defined by Wikipedia is an “idea of a new

iteration of the World Wide Web that is based on blockchain technology and

incorporates concepts such as decentralization and token-based economics.” The

overall goal of Web3 is to move beyond the current form of the internet where

the vast majority of data and content is controlled by big tech companies, to a

more decentralized environment where public data is more freely accessible and

personal data is controlled by individuals.
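To make the subgraph idea mentioned above a little more concrete: subgraphs are queried with GraphQL, so a client request is essentially a JSON body carrying a query string. The sketch below only builds such a body and sends nothing. The endpoint URL is a placeholder, and the `tokens` entity with its `id` and `symbol` fields is a hypothetical schema, not taken from any real subgraph.

```python
import json

# Placeholder endpoint (assumption): every real subgraph has its own query URL.
SUBGRAPH_URL = "https://example.invalid/subgraphs/name/hypothetical/subgraph"

# Hypothetical schema (assumption): a `tokens` entity exposing `id` and `symbol`.
QUERY = """
query FirstTokens($first: Int!) {
  tokens(first: $first) {
    id
    symbol
  }
}
"""


def build_request_body(first):
    """Serialize the GraphQL query and its variables into a JSON request body."""
    return json.dumps({"query": QUERY, "variables": {"first": first}})


body = build_request_body(5)
```

In practice the body would be sent as an HTTP POST to the subgraph's URL, and the response would mirror the shape of the query.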

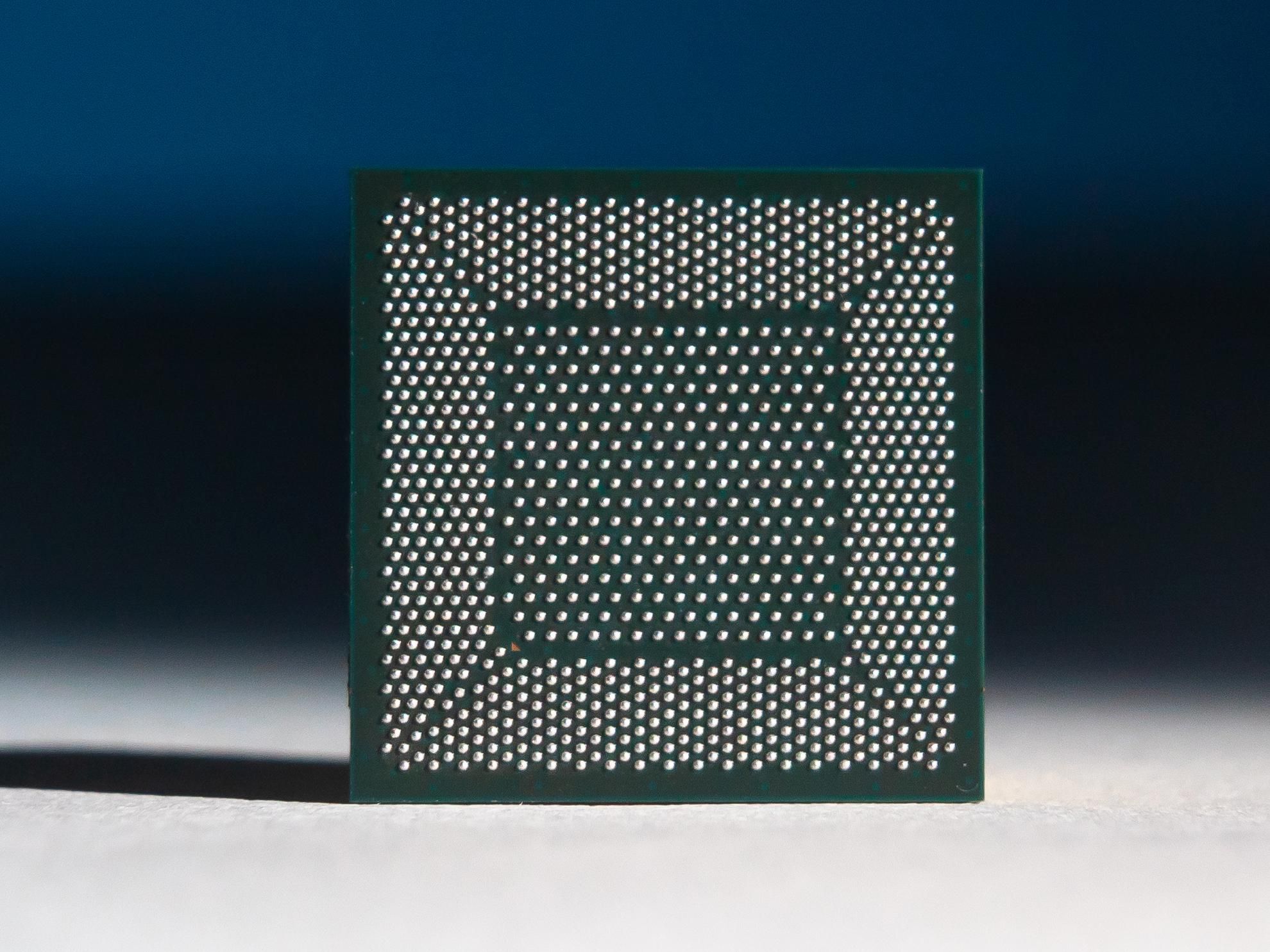

Brain-Inspired Chips Good for More than AI, Study Says

Neuromorphic chips typically imitate the workings of neurons in a number of

different ways, such as running many computations in parallel. ... Furthermore,

whereas conventional microchips use clock signals fired at regular intervals to

coordinate the actions of circuits, the activity in neuromorphic architecture

often acts in a spiking manner, triggered only when an electrical charge reaches

a specific value, much like what happens in brains like ours. Until now, the

main advantage envisioned for neuromorphic computing was in power

efficiency: Features such as spiking and the uniting of memory and processing

resulted in IBM’s TrueNorth chip, which boasted a power density four orders of

magnitude lower than conventional microprocessors of its time. “We know from a

lot of studies that neuromorphic computing is going to have power-efficiency

advantages, but in practice, people won’t care about power savings if it means

you go a lot slower,” says study senior author James Bradley Aimone, a

theoretical neuroscientist at Sandia National Laboratories in Albuquerque.
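The spiking behavior described above, where activity fires only once a charge reaches a threshold, can be illustrated with a textbook leaky integrate-and-fire unit. This is a minimal sketch under simple assumptions (discrete time steps, a fixed leak factor, a reset to zero after each spike); the parameter values are arbitrary and it does not model TrueNorth or any other specific chip.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9):
    """Return the time steps at which a leaky integrate-and-fire unit spikes.

    At each step the stored potential decays by `leak`, the input is added,
    and a spike is emitted (and the potential reset) once `threshold` is reached.
    """
    potential = 0.0
    spikes = []
    for step, current in enumerate(inputs):
        potential = potential * leak + current
        if potential >= threshold:
            spikes.append(step)
            potential = 0.0  # reset after firing
    return spikes


# Two moderate inputs accumulate into a spike; silence produces no activity.
spike_times = simulate_lif([0.6, 0.6, 0.0, 0.0, 1.2])
```

Nothing happens on the silent steps, which is the intuition behind the power-efficiency argument: work is done only when a spike occurs rather than on every clock tick.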

Could Biology Hold the Clue to Better Cybersecurity?

The framework is designed to inoculate a user from ransomware, remote code

execution, supply chain poisoning, and memory-based attacks. "If we're going to

change the way we protect assets, we need to take a completely different

approach," says Dave Furneaux, CEO of Virsec. "Companies are spending more and

more money on solutions and not seeing any improvement." Furneaux likens the

approach to the mRNA technology that vaccine makers Moderna and Pfizer have

used. "Once you determine how to adapt a cell and the way it might behave in

response to a threat, you can better protect the organism," Furneaux says. In

biology, the technique works from the inside out. In cybersecurity, the

method goes down into the lowest building blocks of software — which are like

the cells in a body — to protect the entire system. "By understanding the RNA

and DNA, we can create the equivalent of a vaccine," Furneaux adds. Other

cybersecurity vendors, including Darktrace, Vectra AI, and BlackBerry

Cybersecurity, have also developed products that rely to some degree on

biological models.

In the Web3 Age, Community-Owned Protocols Will Deliver Value to Users

It's a virtuous cycle, Oshiro told Decrypt. As adoption of 0x increases, the

protocol becomes Web3's foundational layer for tokenized value exchange. That,

in turn, drives adoption by integrators who build on 0x, generating more

economic value for themselves and users—ultimately bringing the trillions of

dollars of economic value that the Internet has already created to the users of

the next-generation decentralized Internet. ... Building exchange infrastructure

on top of rapidly evolving blockchains means the 0x Protocol will need to be

constantly tweaked and improved. Since its launch, 0x has been gradually

transitioning all decisions over infrastructure upgrades and management of the

treasury to its token holders. “The ability to upgrade comes along with an

immense amount of power and a ton of downstream externalities,” Warren said.

“And so it’s critical that the only ones who can update the infrastructure are

the stakeholders and the people who are building businesses on top of it—that is

how we’re thinking about this.”

How to Make Cybersecurity Effective and Invisible

CIOs have a balance to strike: Security should be robust, but instead of being

complicated or restrictive, it should be elegant and simple. How do CIOs achieve

that "invisible" cybersecurity posture? It requires the right teams, superior

design, and cutting-edge technology, processes, and automation.

Expertise and Design: Putting the Right Talent and Security Architecture to Work for You

Organizations hoping to achieve invisible cybersecurity must first focus on

talent and technical expertise. Security can no longer be handled only through

awareness, policy, and controls. It must be baked into everything IT does as a

fundamental design element. The IT landscape should be assessed for weaknesses,

and an action plan should then be put in place to mitigate risk through

short-term actions. Long term, organizations need to design a landscape that is

more compartmentalized and resilient, by implementing strategies like zero trust

and microsegmentation. For this, companies need the right expertise. Given

cybersecurity workforce shortages, organizations may need to identify and

onboard an IT partner with strong cyber capabilities and offerings.

Secure Code Quickly as You Write It

Most developers aren’t security experts, so tools that are optimized for the

needs of the security team are not always efficient for them. A single developer

doesn’t need to know every bug in the code; they just need to know the ones that

affect the work they’ve been assigned to fix. Too much noise is disruptive and

causes developers to avoid using security tools. Developers also need tools that

won’t disrupt their work. By the time security specialists find issues

downstream, developers have moved on. Asking them to leave the IDE to analyze

issues and determine potential fixes results in costly rework and kills

productivity. Even teams that recognize the upside of checking their code and

open source dependencies for security issues often avoid the security tools

they’ve been given because those tools drag down their productivity. What

developers need are tools that provide fast, lightweight application security

analysis of source code and open source dependencies right from the IDE. Tooling

like this enables developers to focus on issues that are relevant to their

current work without being burdened by other unrelated issues.

Data Patterns on Edge

Most data on the internet falls into this bucket. The enterprise data set

comprises many interdependent services that work hierarchically to extract the

required data sets, which may be personalized or generic in format.

Traditionally, moving this data to the edge was feasible only for static

resources, header data sets, or media files served from the edge or the CDN;

the base data set was still retrieved from the source data center or the cloud

provider. For user experiences, optimization centers on the critical rendering

path and the associated improvement in navigation timelines for web-based

experiences, and on how much of the view model is offloaded to the app binary

in device experiences. In hybrid experiences, the state model is updated

periodically through server push or polling. The use case under discussion is

how to enable data retrieval from the edge for data sets that are personalized.
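One way to picture personalized retrieval from the edge is a cache keyed by user as well as resource, with a time-to-live and a fallback to the origin. The sketch below is an assumption about how such a pattern could look, not the article's design; `EdgeCache`, `fake_origin`, and the 60-second TTL are all hypothetical names and values chosen for illustration.

```python
import time


class EdgeCache:
    """Toy per-user edge cache that falls back to the origin on a miss or expiry."""

    def __init__(self, fetch_from_origin, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}  # (user_id, resource) -> (stored_at, value)

    def get(self, user_id, resource):
        """Return (value, source), where source is "edge" or "origin"."""
        key = (user_id, resource)
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1], "edge"
        value = self._fetch(user_id, resource)
        self._entries[key] = (now, value)
        return value, "origin"


origin_calls = []


def fake_origin(user_id, resource):
    """Stand-in for the source data center or cloud provider."""
    origin_calls.append((user_id, resource))
    return f"{resource} for {user_id}"


cache = EdgeCache(fake_origin, ttl_seconds=60.0)
first = cache.get("alice", "recommendations")   # miss: served by the origin
second = cache.get("alice", "recommendations")  # hit: served from the edge
other = cache.get("bob", "recommendations")     # different user: separate entry
```

Keying on the user keeps personalized responses from leaking between users, at the cost of a lower hit rate than for generic content.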

Quote for the day:

"A leader is the one who climbs the

tallest tree, surveys the entire situation and yells wrong jungle." --

Stephen Covey
