Assessing the Value of Corporate Data

“Value can be determined in a qualitative way,” says Grasso. This can be done via “a deep analysis of what are the key data the enterprise should harness in order to get a profitable return, [and that depends] on the business model of each organization.” That’s not to say there aren’t incentives, and means, to quantify the value of digital data. “I use a structured approach [by] aggregating a few components of our data’s value – intrinsic, derivative, and algorithmic,” says Lassalle at JLS Technology USA. “I use this approach to assess the true value, placing a real dollar amount on what data is worth to help organizations manage the risk around data as their most important asset.” Qualitative or quantitative, the value of data isn’t static. As data ages, for example, its value can wane. Conversely, real-time data is often extremely valuable, as is data supplemented with complementary data from other sources. Most people tend to focus on, and put value on, the data that emerges from their own operations or other familiar sources, notes Mark Thiele

Deep Learning Architectures for Action Recognition

We have learned that deep learning has revolutionized the way we process videos for action recognition. Deep learning literature has come a long way from using improved Dense Trajectories. Many learnings from the sister problem of image classification have been used in advancing deep networks for action recognition. Specifically, convolution layers, pooling layers, batch normalization, and residual connections have been borrowed from the 2D space and applied in 3D with substantial success. Many models that use a spatial stream are pretrained on extensive image datasets. Optical flow has also had an important role in representing temporal features in early deep video architectures like two-stream networks and fusion networks. Optical flow is our mathematical definition of how we believe movement in subsequent frames can be described: as densely calculated flow vectors for all pixels. Originally, networks bolstered performance by using optical flow. However, this prevented networks from being trained end-to-end and limited their real-time capabilities. In modern deep learning, we have moved beyond optical flow, and we instead architect networks that natively learn temporal embeddings and are end-to-end trainable.

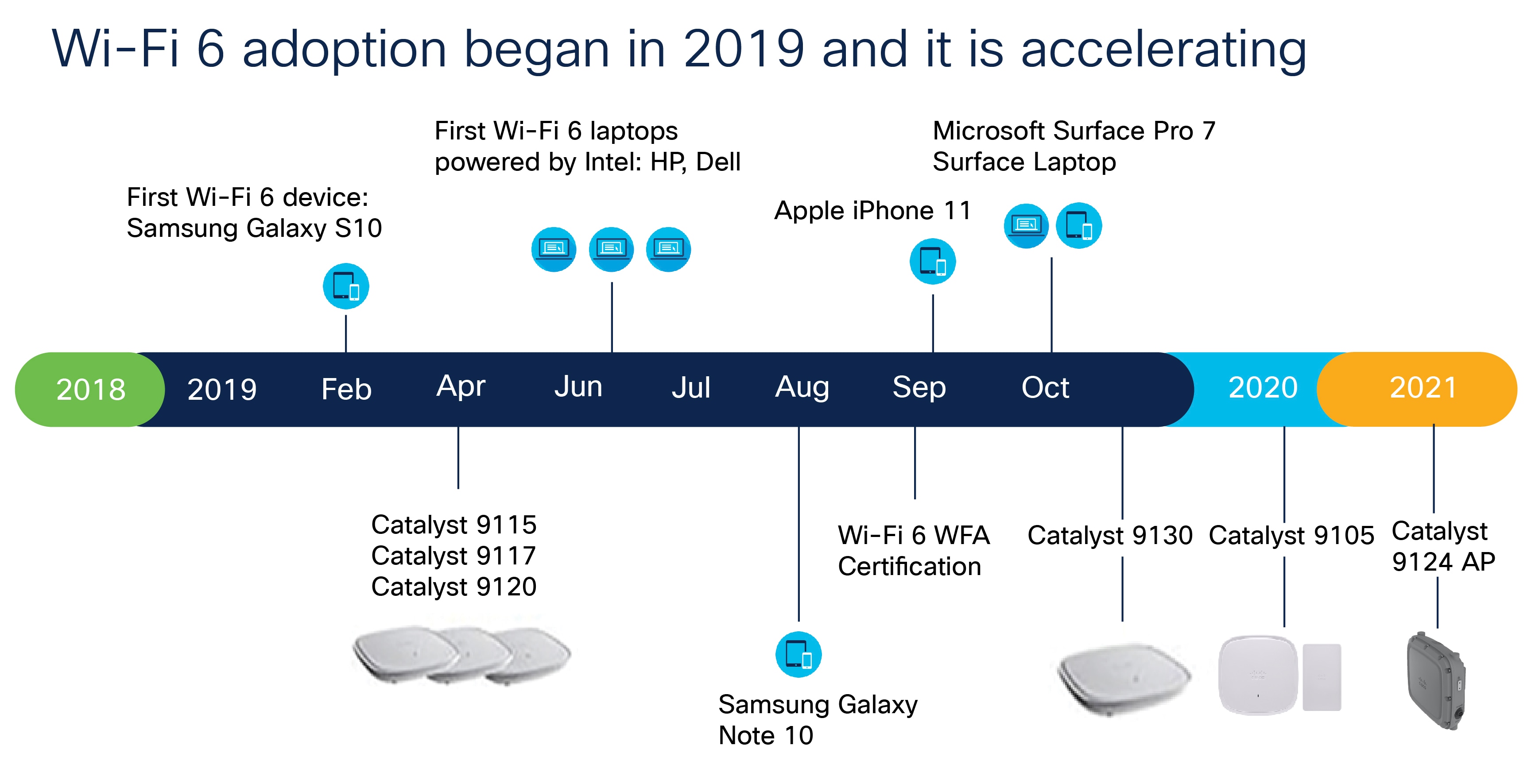

The Road to Wi-Fi 6

We are more dependent on the network than ever before, and Wi-Fi 6 gives you more of what you need. It is a more consistent and dependable network connection that will deliver speeds up to four times faster than 802.11ac Wave 2 with four times the capacity. This standard provides a seamless experience for clients and enables next-generation applications such as 4K/8K streaming HD, augmented reality (AR) and virtual reality (VR) video, and more device and IoT capacity for high-density environments such as university lecture halls, malls, stadiums, and manufacturing facilities. Wi-Fi 6 also promises reduced latency, greater reliability, and improved power efficiency. With higher performance for mobile devices and the ability to support the Internet of Things (IoT) on a massive scale (IoT use has been trending upwards lately and is now also called “the new mobile”), Wi-Fi 6 will improve experiences across the entire wireless landscape. Wi-Fi 6 also offers improved security with WPA3, and improved interference mitigation with better QoE.

Increasing Software Velocity While Maintaining Quality and Efficiency

Throughout the software lifecycle there are many opportunities for automation — from software design, to development, to build, test, deploy and, ultimately, the production state. The more of these steps that can be automated, the faster developers can work. Perhaps the biggest area for potential time savings is testing, because of all the phases of the software lifecycle, testing assumes the most manual labor. The Vanson Bourne survey found test automation to be the single most important factor in accelerating innovation, according to 90% of IT leaders. ... Integration helps software development technologies interoperate with each other. An integrated pipeline leverages existing investments and enables developers to build, test and deploy faster while providing the additional benefit of reducing errors resulting from human intervention (furthering quality). Integration also decreases the manual labor needed to execute and manage workflow within software delivery processes.

Every Data Scientist needs some SparkMagic

Spark is the data industry’s gold standard for working with data in distributed data lakes. But when Spark clusters are run cost-efficiently, or even shared among multiple tenants, it is difficult to accommodate individual requirements and dependencies. The industry trend for distributed data infrastructure is towards ephemeral clusters, which makes it even harder for data scientists to deploy and manage their Jupyter notebook environments. It’s no surprise that many data scientists work locally on high-spec laptops where they can install and persist their Jupyter notebook environments more easily. So far so understandable. How do many data scientists then connect their local development environment with the data in the production data lake? They materialise CSV files with Spark and download them from the cloud storage console. Manually downloading CSV files from cloud storage consoles is neither productive nor particularly robust. Wouldn’t it be so much better to seamlessly connect a local Jupyter Notebook with a remote cluster in an end-user friendly and transparent way? Meet SparkMagic! Sparkmagic is a project to interactively work with remote Spark clusters in Jupyter notebooks through the Livy REST API. It provides a set of Jupyter Notebook cell magics and kernels to turn Jupyter into an integrated Spark environment for remote clusters.
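
To make the Livy REST API mentioned above a little more concrete, here is a minimal sketch of the two HTTP requests that sit underneath an interactive session: one to start a session and one to submit a statement to it. This is not how Sparkmagic itself is implemented; the helper names `new_session_request` and `statement_request` and the host in `LIVY_URL` are assumptions made for illustration. The sketch only builds the requests, so it runs without a cluster.

```python
import json
from urllib.request import Request

# Assumption: a hypothetical Livy server on localhost, using Livy's default port 8998.
LIVY_URL = "http://localhost:8998"


def new_session_request(base_url: str = LIVY_URL, kind: str = "pyspark") -> Request:
    """Build the POST /sessions request that asks Livy to start an interactive session."""
    body = json.dumps({"kind": kind}).encode("utf-8")
    return Request(
        f"{base_url}/sessions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def statement_request(session_id: int, code: str, base_url: str = LIVY_URL) -> Request:
    """Build the POST /sessions/{id}/statements request that runs code in a session."""
    body = json.dumps({"code": code}).encode("utf-8")
    return Request(
        f"{base_url}/sessions/{session_id}/statements",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Inspect the requests without sending them anywhere.
    for req in (new_session_request(), statement_request(0, "spark.range(10).count()")):
        print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

Passing either request to `urllib.request.urlopen` would send it to a real Livy endpoint. In a notebook, the cell magics and kernels hide this exchange, so the code in a cell is simply executed on the remote cluster.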

ML.NET Model Builder is now a part of Visual Studio

ML.NET is a cross-platform, machine learning framework for .NET developers. Model Builder is the UI tooling in Visual Studio that uses Automated Machine Learning (AutoML) to train and consume custom ML.NET models in your .NET apps. You can use ML.NET and Model Builder to create custom machine learning models without having prior machine learning experience and without leaving the .NET ecosystem. ... Model Builder’s Scenario screen got an update with a new, modern design and with updated scenario names to make it even easier to map your own business problems to the machine learning scenarios offered. Additionally, anomaly detection, clustering, forecasting, and object detection have been added as example scenarios. These example scenarios are not yet supported by AutoML but are supported by ML.NET, so we’ve provided links to tutorials and sample code via the UI to help get you started.

Speaking of which, libraries and external dependencies are an efficient way to reuse functionality without reusing code. It’s almost like copying code, except that you aren’t responsible for the maintenance of it. Heck, most of the web today operates on a variety of frameworks and plugin libraries that simplify development. Reusing code in the form of libraries is incredibly efficient and allows each focused library to be very good at what it does and only that. And unlike in academia, many libraries don’t even require anything to indicate you’re building with or on top of someone else’s code. The JavaScript package manager npm takes this to the extreme. You can install tiny, single-function libraries (some as small as a single line of code) into your project via the command line. You can grab any of over one million open source packages and start building their functionality into your app. Of course, as with every approach to work, there’s a downside to this method. By installing a package, you give up some control over the code. Some malicious coders have created legitimately useful packages, waited until they had a decent adoption rate, then updated the code to steal bitcoin wallets.

Security & Trust Ratings Proliferate: Is That a Good Thing?

The information security world is rapidly gaining its own sets of scoring systems. Last week, NortonLifeLock announced a research project that will score whether Twitter accounts are likely to belong to a human or a bot. Security awareness companies such as KnowBe4 and Infosec assign workers grades for how well they perform on phishing simulations and the risk that they may pose to their employer. And companies such as BitSight and SecurityScorecard rate companies using external indicators of security and potential breaches. Businesses will increasingly look to scores to evaluate the risk of partnering with another firm or even employing a worker. A business's cyber-insurance security score could determine its cyber-insurance premium or whether a larger client will work with the firm, says Stephen Boyer, CEO at BitSight, a security ratings firm. "A lot of our customers will not engage with a vendor below a certain threshold because they have a certain risk tolerance," he says. "Business decisions are absolutely being made on this."

Microsoft launches Project Bonsai, an AI development platform for industrial systems

Project Bonsai is a “machine teaching” service that combines machine learning, calibration, and optimization to bring autonomy to the control systems at the heart of robotic arms, bulldozer blades, forklifts, underground drills, rescue vehicles, wind and solar farms, and more. Control systems form a core component of machinery across sectors like manufacturing, chemical processing, construction, energy, and mining, helping manage everything from electrical substations and HVAC installations to fleets of factory floor robots. But developing AI and machine learning algorithms atop them — algorithms that could tackle processes previously too challenging to automate — requires expertise. ... Project Bonsai is an outgrowth of Microsoft’s 2018 acquisition of Berkeley, California-based Bonsai, which previously received funding from the company’s venture capital arm M12. Bonsai is the brainchild of former Microsoft engineers Keen Browne and Mark Hammond, who’s now the general manager of business AI at Microsoft.

Hacked Law Firm May Have Had Unpatched Pulse Secure VPN

Mursch says that while his firm can scan open internet ports for vulnerable Pulse Secure VPN servers, he doesn't have insight into the law firm's internal network and can't say for sure whether the REvil operators used it to plant ransomware and encrypt files. Some security experts, including Kevin Beaumont, who is now with Microsoft, have previously warned that the REvil ransomware gang is known to target unpatched Pulse Secure VPN servers. When the gang attacked the London-based foreign currency exchange firm Travelex on New Year's Day, it was reported that the company used a Pulse Secure VPN server that was unpatched. Brett Callow, a threat analyst with security firm Emsisoft, also notes that REvil is known to use vulnerable Pulse Secure VPN servers to gain a foothold in a network and wait for some time before starting a ransomware attack against a target. "In other incidents, it's been established that groups have had access for months prior to finally deploying the ransomware," Callow tells ISMG.

Quote for the day:

"Becoming a leader is synonymous with becoming yourself. It is precisely that simple, and it is also that difficult." -- Warren G. Bennis
