4 Ways to Avoid Cost-Cutting Amid Economic Uncertainty

Traditional approaches to budget management simply won’t cut it in this stark new landscape. Indeed, they never did. Imprecise, tactical budget-cutting is little more than a panic-driven, high-risk response to crisis. And as you start thinking about what -- and how and when -- you need to cut, you can’t afford to think strictly in terms of reducing expenses. Dollars matter, of course: Just don’t be myopic. Focus instead on business value, on the things you retain that drive that value, and on what the business will require as you eventually shift into recovery mode. ... CIOs must now identify and focus on initiatives that help the CEO and the business ensure the organization survives and thrives during this crisis. Partnership across the board is key for IT as the department must work in lockstep with the rest of the organization to identify big-ticket items that should be kept if they result in long-term savings. This may even include cost increases as the organization doubles down on the things that matter most. But if they drive long-term value and all partners are on the same page, it’s infinitely smarter than blunt-force cutting.

Tech-Driven Next-Gen Corporate Banking

Indeed, the biggest challenge may be persuading top executives to put the priority on a comprehensive and inclusive approach to fostering organizational excellence across corporate banking operations. In many instances, it is difficult for all of the business units and support units to embrace a wholesale paradigm shift. Attachment to internal organizational silos, new team dynamics, and modifying control functions mean that making the necessary change is never easy. At the same time, by taking the lead in the shift to digital, cutting-edge corporate banking operations can establish a superior position versus other challengers and new entrants. Some pioneering banks have already carved out comparatively large customer bases and are steadily accruing expertise related to data gathering, remittance processing, conflict resolution, and payment making. Notably, some banks are already making pioneering efforts in data analysis and AI.

Why metadata is crucial in implementing a solid data strategy

Aside from the critical compliance issue, businesses can find great advantages in good metadata management. A host of misguided decisions are ordinarily made based on wrong or inaccurate information – usually due to inconsistent record labelling, duplicates, or non-explicit naming practices, which means that the latest and most accurate data might easily be lost or missed among the old or wrong ones. This is why it’s crucial to ensure all data is combined in a single source of truth which can yield accurate insights for businesses to make well-informed decisions on. Ensuring that the file metadata is kept organised and up to date – what is commonly referred to as data lineage – is important for quality control. It allows for better visibility, and so helps organisations to keep track of all data iterations and movements. Accurate metadata records play a key role in managing the rest of the data as well, helping to maintain, integrate, edit, secure and audit it as benefits the business. Correctly governed, metadata can be a vital factor in enabling innovation, future-forward initiatives and what will eventually become the new normal. One such example is AI.

Business Service vs. Product Thinking

“If by product you just mean software as a trade good, services are more attractive. If by service you mean something low level and technical, products are more attractive. The legacy of definitional disagreement between ITSM vs. SOA plays into this issue”. Hinchcliffe said, “I’d say that you can’t have a product without a service. But you can’t have a good service without it being treated as a product”. With respect to the question, Hinchcliffe said, “yes, project portfolio and service management still have value, but they are becoming much more operational and productized”. CIO David Seidl agrees with Dion when he says, “massive scaling of how we do online instruction, handling growth in conferencing, softphones, and collaboration technology. Remote support issues for people who have never worked at home. Even things like re-engineering solutions for remote work. We need to plan and run these darn things. We need to support them and their integrations. We need to understand their lifecycle, and where that intersects with all of the other things we have running. If you don’t keep a broad view…you fail”.

Sonatype Nexus vs. JFrog: Pick an open source security scanner

Both Sonatype and JFrog frame their open source security scanning strategies in the broader context of an SDLC rapid development framework. Sonatype prioritizes automation, while JFrog centers on swift code delivery. The products have similar security scanning processes. Each tool analyzes defined policies and checks code against a set of online repositories of problems. The scanning process is recursive; a vulnerable low-level element will reflect on any higher-level packages that include it, up to the application and project levels. Users see the issues the tools find, and the hierarchies those issues affect, in the GUI. Both JFrog and Sonatype also can generate alerts for violations, which in turn can trigger specific actions. Sonatype's Nexus platform enables teams to universally manage artifact libraries. Nexus harmonizes project management and code management, to accelerate development.
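To make the recursive part concrete, here is a minimal Python sketch of how an issue in a low-level element can surface on everything that includes it. It is not Sonatype's or JFrog's implementation or API: the component names, the `DEPENDS_ON` graph, the `KNOWN_VULNERABLE` set and both function names are hypothetical, and the walk is written as an iterative graph traversal rather than literal recursion.

```python
# Hypothetical dependency graph: each component maps to what it includes directly.
DEPENDS_ON = {
    "project": ["app"],
    "app": ["web-lib", "json-lib"],
    "web-lib": ["tls-lib"],
    "json-lib": [],
    "tls-lib": [],
}

# Hypothetical advisory data: components with a known issue.
KNOWN_VULNERABLE = {"tls-lib"}


def reachable_issues(root, depends_on, vulnerable):
    """Collect every vulnerable component reachable from root (root included)."""
    seen, stack, found = set(), [root], set()
    while stack:
        name = stack.pop()
        if name in seen:  # already visited; also guards against cycles
            continue
        seen.add(name)
        if name in vulnerable:
            found.add(name)
        stack.extend(depends_on.get(name, []))
    return found


def affected_components(depends_on, vulnerable):
    """Map each affected component to the vulnerable elements it pulls in."""
    report = {}
    for name in depends_on:
        found = reachable_issues(name, depends_on, vulnerable)
        if found:
            report[name] = sorted(found)
    return report


if __name__ == "__main__":
    for component, issues in affected_components(DEPENDS_ON, KNOWN_VULNERABLE).items():
        print(f"{component}: affected by {', '.join(issues)}")
```

In this toy graph the issue in `tls-lib` shows up on `web-lib`, `app` and `project`, while `json-lib` stays clean, which is the kind of hierarchy view the paragraph above describes.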

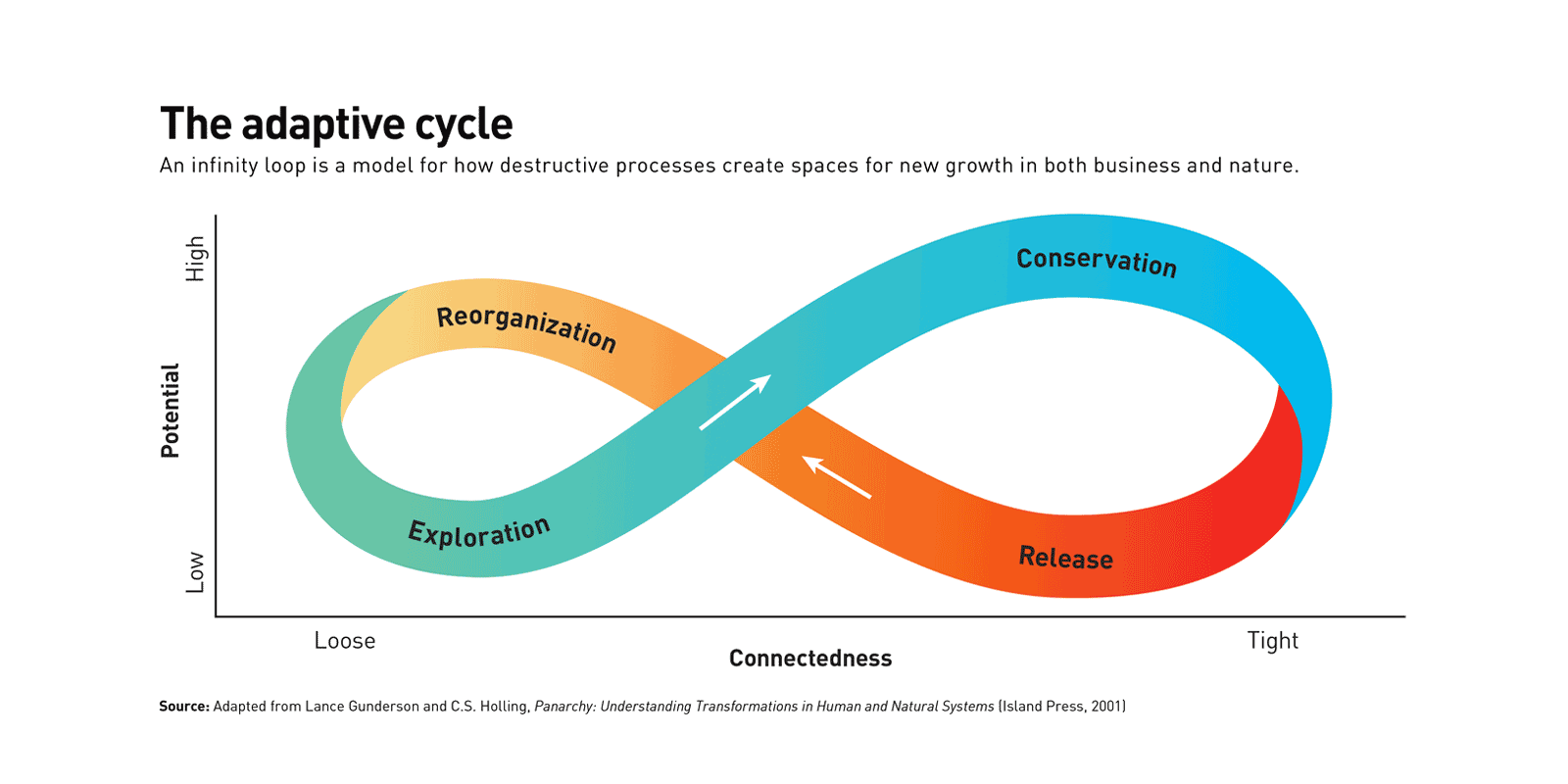

Forces of nature

Most nascent enterprises die in the early stage, because passion is not sufficient to guarantee commercial success. Those startups that survive develop a logic for their value creation process and assemble their value chain, moving into a stage of Reason. Former innovators evolve into managers. They are still free to act, but now they know what to do and their task is clear: to scale the enterprise as rapidly as possible. As companies move into the Reason part of the cycle, their priorities become raising financial resources, managing growth, recruiting people, and preserving the startup culture. But these priorities become increasingly challenging as scale and geographic dispersion grow. According to anthropologist Robin Dunbar, head of the Social and Evolutionary Neuroscience Research Group at Oxford University, the maximum number of personal relationships that human beings can comfortably maintain is about 150. So once an organization grows beyond that size, more formality is required. Managers must turn to the panoply of mainstream management methods. They do so for the very best of reasons: to embed and preserve the enterprise’s recipe for success.

Cisco spotlights new IT roles you've never heard of

Business translator: The business translator works to better turn the needs of business into service-level, security and compliance requirements that can be applied and monitored across the network. The translator also works to use network and network data for business value and innovation, and their knowledge of networking and application APIs will help them glue the business to the IT landscape. Network guardian: A network guardian works to bridge network and security architectures. They build the distributed intelligence of the network into security architecture and the SecOps process. This is where networking and security meet, and the guardian is at the center of it all, pulling in and pushing out vast amounts of data, distilling it and then taking action to identify faults or adapt to shut down attackers. Network commander: Intent-based networking builds on controller-based automation and orchestration processes. The network commander takes charge of these processes and practices that ensure the health and continuous operation of the network controller and underlying network.

Critical Metrics to Keep Delivering Software Effectively in the "New Normal" World

For organisations delivering software in an Agile way, a sensible place to start is a set of metrics that tie back to core Agile principles – so that everyone is focused on the ultimate Agile goal of increasing customer satisfaction through “the early and continuous delivery of valuable software” – despite the challenges thrown up by the ‘new normal’ world. As Reuben Sutton, Plandek’s VP Engineering, notes, “We have had to move to a fully remote working environment overnight, during one of the most intense software delivery periods our company has ever known. The Agile delivery metrics that our teams track and understand have been our ‘North star’. We know that we are still going in the right direction, as we can see it objectively in the metrics.” If Agile principles are the ‘north star’ around which you set your goals in the ‘new normal’ world, then you will need an effective framework for adopting them. In our experience, this framework needs to provide a simple hierarchy of metrics, so that they are understood and adopted by everyone.

Should you let a cloud maturity model judge you?

The issue that I have now with the many cloud computing maturity models out there—and there are many—is that people often rely on them too much. They can dilute the larger picture of the right way to do cloud adoption and how an organization should set the appropriate priorities. For instance, it never should be about using a specific cloud-based technology, such as serverless, containers, Kubernetes, or machine learning. It’s about leveraging the cloud for the right purposes that are consistent with serving the business. These maturity models do offer a beneficial measure of culture and internal processes, which are actually more important than adopting trendy cloud technology. Indeed, unless technology is employed specifically to serve the needs of the business, technology (including cloud technology) can take you back a few steps. You’re ultimately not aligning business requirements with the correct and pragmatic use of cloud and noncloud technology. Don’t get me wrong, there are some helpful and some not so helpful maturity models out there. As I practice enterprise cloud migrations, including assessment and planning, I use some of these models as foundational benchmarks at times.

Example of Writing Functional Requirements for Enterprise Systems

It is worth mentioning that while system requirements described all object types without exception, we didn't need to write use cases for all of them. Many of the object types represented lists of something (countries, months, time zones, etc.) and were used similarly. This allowed us to save our analysts’ time. An interesting question is which stakeholders and project team members use which requirement level. Future end users can read general scenarios, but use cases are too complicated for them. Because of this, our analysts just discussed them with end users and didn’t ask them to read or review use cases. Programmers usually need algorithms, checks and system requirements. You definitely can respect a programmer who reads use cases. Test engineers need all levels of requirements, since they test the system at all levels. In comparison with, for example, MS Word documents that are still widely used, Wiki allowed our requirements to be changed by several team members at the same time.

Quote for the day:

"Humility is a great quality of leadership which derives respect and not just fear or hatred." -- Yousef Munayyer
