Creating a safe path to digital with open standards

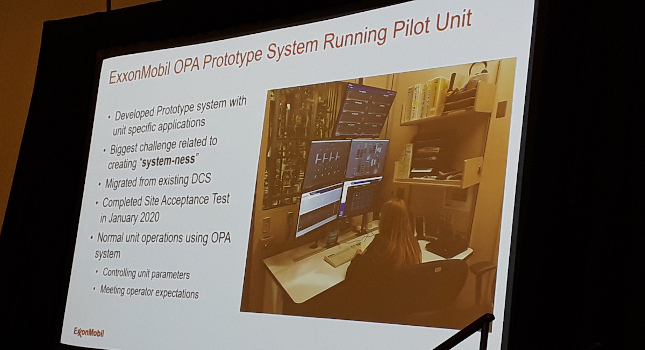

Despite the process automation industries being vastly different in their outputs, there are many commonalities in the desire for efficiency, interoperability and the ability to integrate best-in-class technologies. Recognizing the need for cross-industry collaboration, a group of companies representing a variety of verticals got together three years ago to discuss the possibility of developing an open standard for process automation. Each company in attendance was driven by the need for more flexible solutions. Shortly after, the Open Process Automation Forum (OPAF) was born under the guidance of The Open Group. Since then, the Forum has worked to lay the foundations for developing a standard to ensure the security, interoperability and scalability of new control systems. A year ago, over 90 member organizations were involved with the creation of OPAF’s O-PAS Standard, Version 1.0, which is now a full standard of The Open Group. While industry standards for process automation are already available in the marketplace and fit-for-purpose, the O-PAS Standard focuses on interoperability, using existing industry standards and adopting and adapting them to create a “standard of standards.”

Should AI assist in surgical decision-making?

Fully automated surgeries performed by robots are still a ways off. In the meantime, developers are trying to beat those grim numbers by harnessing the best of human decision making and coupling it with truly exceptional technology tools designed to assist surgeons. Artificial intelligence and machine learning are often touted as solutions for call centers and as a source of intelligent insights for companies that have reams of data to process, but leveraging AI/ML to improve medical outcomes could be one of the transformative technologies of our time. "Surgical decision-making is dominated by hypothetical-deductive reasoning, individual judgment, and heuristics," write the authors of a recent JAMA Surgery paper called Artificial Intelligence and Surgical Decision-making. "These factors can lead to bias, error, and preventable harm. Traditional predictive analytics and clinical decision-support systems are intended to augment surgical decision-making, but their clinical utility is compromised by time-consuming manual data management and suboptimal accuracy."

Home office technology will need to evolve in the new work normal

Technology will have to know our contexts. The home technology experience will have to adapt to our various modes and have the capacity to manage the compute requirements. "There is a very large innovation cycle coming to really make the world at home adaptable to all of these contexts as we look forward," said Roese. Edge computing will come to the home. As remote work evolves to include augmented and virtual reality as well as video conferencing and data-intensive applications, IT infrastructure at home will change. Roese said that edge computing devices may be deployed in homes by enterprises to beef up home infrastructure. "Early, when we were talking about edge, it was all about smart factories and smart cities and smart hospitals, but there's another class of edge compute that's really interesting in this new world," said Roese. "And that is to augment the compute capacity of the devices that attach to that edge." 5G, AR, VR and applications that need horsepower would use these edge compute devices. Edge computing in the home could provide more compute capacity and more real-time experiences. These edge devices at home would also offer scale on demand.

Grafana: The Open Observability Platform

Grafana is open-source visualization and analytics software that works with many different databases and data sources. It connects to data regardless of where it resides (in the cloud, on-premises, or somewhere else) and helps organizations build a clear picture of their data. Perhaps Grafana's most distinctive feature is that it's data-source neutral: no matter where your data is stored, Grafana can unify it. These sources can include time-series, logging, SQL and document databases, cloud data sources, enterprise plugins, and more options from community-contributed plugins. No matter the source, the data stays where it is, and you can visualize and analyze it at will. This makes Grafana a versatile tool, usable for a wide range of applications. There is one caveat to the statement above: for Grafana to be useful, your data should be time-series data, i.e., data taken at particular points in time. This describes a lot of data sources, but not all of them.

Why open source is heading for a new breakthrough

While anticipating an increase in uptake, Miller doesn't expect Apple and Microsoft fans to begin jumping ship en masse – indeed, he acknowledges the platform will likely retain its more geeky audience. But that's not to say that Fedora 32 Workstation doesn't have the technical chops to go toe-to-toe with mainstream operating systems, with Miller alluding to the huge advances that Linux as a desktop has made over the past 15 years as it has moved from the server to being the default choice for embedded everything everywhere. "It's so flexible and so able to fit into all of these different use cases," he says. "To me, it's clear that Linux is technically superior." And he adds: "It's not a money-saver option – this is something you should pick if you actually want this." Of course, the technical capability of Fedora is just one small piece of the package that forms the philosophy not just of Fedora Workstation but of Linux and the open-source community in its entirety. "The real appeal of it is that this is an operating system that we own. It belongs to the people," he says. Looking to the future, Miller sees Linux as well-positioned to capitalize on the move to hybrid-type mobile devices, particularly as more OEMs throw their support behind the platform.

Will the solo open source developer survive the pandemic?

The last several weeks have been anything but. I’m not alone in finding it rough-going. For Julia Ferraioli, this isn’t because of “WFH.” It’s because of “WDP” [working during pandemic]: “I’ve been working remotely for 2.5 years. The past 2.5 months have left me more exhausted than ever before. This is your reminder that you’re not working remotely. You’re working remotely during a global health crisis.” This same pressure applies to open source maintainers, Fischer says: Today independent maintainers are, like many people, under more time and financial pressure than they were only a month or two ago. Most of these creators work on their projects on the side, not as their main day jobs, and personal and professional obligations come before open source work for many. Even before the coronavirus pandemic hit, this was a true statement. In my interviews with a diverse range of open source maintainers, from curl’s Daniel Stenberg to SolveSpace’s Whitequark, most have contributed as a side project, not their day job.

Why a pandemic-specific BCP matters

If you have not already done so, your organisation should develop BCPs specific to a pandemic or epidemic. Most existing BCPs address business recovery and resumption after events such as extreme weather, terrorism and power outages, but do not adequately address the repercussions of a pandemic. Unlike these other risks, disease outbreaks affect people more than they do datacentres and corporate facilities, and their duration is much longer. As already seen, disease outbreaks can flare up, subside, and then flare up again. Forrester recommended a three-step process to ensure that a pandemic response plan is thorough and effective. That includes identifying an executive sponsor and building a pandemic planning team, assessing critical operations, supplier and customer relationships, as well as the impact on the workforce. According to Forrester’s data and its own direct experience, organisations still fail to exercise their plans on a regular basis.

Time is Running Out on Silverlight

This situation came about because Silverlight is not a stand-alone platform; it requires a browser to host it. And in a way, it was doomed from the start. Silverlight was first released in 2007, the very same year that Apple announced that it wouldn’t support browser plugins such as Adobe Flash for iPhone. This essentially killed the consumer market for Silverlight, though it did live on for a while thanks to streaming services such as Netflix. Currently the only browsers that continue to run Silverlight are Internet Explorer 10 and 11. “There is no longer support for Chrome, Firefox, or any browser using the Mac operating system.” While Silverlight is essentially gone from the public web, where it did gain some popularity was internal applications. For many companies this was seen as a way of quickly building line-of-business applications with better features and performance than HTML/JavaScript applications of the time. Such applications would normally be written in WinForms or WPF, but Silverlight made deployment and updating easier.

How Technologists Can Translate Cybersecurity Risks Into Business Acumen

The technology space can easily seem abstract, and therefore confusing and overwhelming. To alleviate the fear that stems from uncertainty, technologists can distill foundational principles into checkpoints that empower business people to ask the right questions in the right environment. A good place to start is by establishing the top metrics affecting an organization by answering questions such as, “Does the organization have subject matter experts leading security?” “Who is assigned to manage this specific piece of technology?” “How do we measure this space?” “What portion of the budget is invested in protecting this technology?” “How does this technology tie into our broader risk appetite statement?” You may well find that how you measure these risks is your greatest risk. Most organizations assess risk on a quarterly basis, in addition to an annual deep-dive. In general, the more time devoted to assessing and reassessing cybersecurity threats and technology, the better. One of the foundational principles of security and risk management is that the efficacy of controls degrades over time. Technology is analogous to topography in this regard; just as you would expect natural elements like water and wind to erode a stone wall over time, technology’s architecture will likewise deteriorate – only much more quickly.

Data protection and GDPR: what are my legal obligations as a business?

The GDPR requires that anyone holding or processing personal data take both ‘technical’ and ‘organisational’ measures to ensure that personal data is secure and that data subjects’ rights are maintained. Technical measures refer to firewalls, password protection, penetration testing etc., and anyone holding personal data on electronic systems should consult with IT professionals to ensure that adequate security measures are in place to protect data. Organisational measures refer to internal policies, staff training etc. Ideally businesses will have both internal data protection policies and a program of staff training (often this is done online). ... Some countries have been deemed to have an adequate data protection framework (e.g. Switzerland, Canada) and data can be transferred to these territories (but note that any processors will still need to enter into a formal processing agreement as described above). If you are transferring to a US company then they may be certified under the “Privacy Shield” framework which allows for transfers to those specific companies.

Quote for the day:

"Time is neutral and does not change things. With courage and initiative, leaders change things." -- Jesse Jackson
