The Best Approach to Help Developers Build Security into the Pipeline

DevOps culture and the drive to work faster and more efficiently affect everyone in the organization. When it comes to creating software and applications, though, the responsibility for producing code, and quality code at that, falls on developers. The pace of DevOps culture leaves no room for anything to be an afterthought. Developers need to build security into application development from the start and to operationalize it within the continuous integration/continuous deployment (CI/CD) pipeline. Unfortunately, traditional education does little to prepare them: it’s possible to get a PhD in computer science and never learn what you need to know to write secure code. As organizations embrace DevSecOps and integrate security into the development pipeline, it’s important to ensure developers have the necessary skills. Building a successful DevSecOps training program also means focusing on both the “why” and the “how”, because not all training is created equal.

Adversarial AI: Blocking the hidden backdoor in neural networks

Adversarial attacks come in different flavors. In the backdoor attack scenario, the attacker must be able to poison the deep learning model during the training phase, before it is deployed on the target system. While this might sound unlikely, it is in fact entirely feasible. But first, a short explanation of how deep learning is often done in practice. One of the problems with deep learning systems is that they require vast amounts of data and compute resources. In many cases, the people who want to use these systems don’t have access to expensive racks of GPUs or cloud servers. And in some domains, there isn’t enough data to train a deep learning system from scratch with decent accuracy. This is why many developers use pre-trained models to create new deep learning models. Tech companies such as Google and Microsoft, which have vast resources, have released many deep learning models that have already been trained on millions of examples. A developer who wants to create a new application only needs to download one of these models and retrain it on a small dataset of new examples to fine-tune it for a new task. The practice has become widely popular among deep learning experts: it’s better to build on something that has been tried and tested than to reinvent the wheel.
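To make "poisoning the model during the training phase" concrete, here is a minimal sketch of the classic data-poisoning form of a backdoor, assuming grayscale images stored as nested lists. An attacker stamps a small trigger pattern onto a fraction of the training examples and relabels them as a chosen target class; a model trained on the result can behave normally on clean inputs while mapping any input carrying the trigger to that target. The trigger shape, `stamp_trigger`, `poison_dataset` and the `rate` parameter are all hypothetical illustrations, not the method of any particular paper or library.

```python
import random
from copy import deepcopy

Image = list[list[int]]  # 2-D grayscale image, pixel values 0-255

def stamp_trigger(image: Image, size: int = 3, value: int = 255) -> Image:
    """Return a copy of `image` with a bright square stamped in the
    bottom-right corner: the (hypothetical) backdoor trigger."""
    poisoned = deepcopy(image)
    rows, cols = len(poisoned), len(poisoned[0])
    for r in range(rows - size, rows):
        for c in range(cols - size, cols):
            poisoned[r][c] = value
    return poisoned

def poison_dataset(images, labels, target_label, rate=0.1, seed=0):
    """Stamp the trigger on a random `rate` fraction of the examples and
    relabel them as `target_label`. A model trained on the result learns
    the normal task plus the hidden rule "trigger present -> target_label".
    Returns the new images, the new labels and the poisoned indices."""
    rng = random.Random(seed)
    n_poison = int(len(images) * rate)
    chosen = set(rng.sample(range(len(images)), n_poison))
    out_images, out_labels = [], []
    for i, (img, lbl) in enumerate(zip(images, labels)):
        if i in chosen:
            out_images.append(stamp_trigger(img))
            out_labels.append(target_label)
        else:
            # Clean examples pass through untouched.
            out_images.append(img)
            out_labels.append(lbl)
    return out_images, out_labels, chosen
```

Because only a small share of examples is altered and clean accuracy barely moves, such a backdoor is hard to spot. That is one reason a pre-trained model downloaded from an untrusted source, then fine-tuned, deserves scrutiny.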

Two years on: Has GDPR been taken seriously enough by companies?

Currently, not all organisations have a robust data governance, data privacy or data management strategy in place. Many see extra technology as a cost, but the technology deployed for GDPR compliance can also help organisations implement a robust data management strategy, not just achieve compliance. Treating these technologies as a trade-off between added risk and cost on one side and exposure to new business opportunities on the other has led many organisations to differentiate and innovate more slowly, taking longer than necessary to undergo digital transformation and implement a data strategy that accelerates value creation. It has never been easier to use technology to automate a good data management strategy. Five years ago, carrying out an audit of your sensitive data was often a manual, laborious and time-consuming process.
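As a rough illustration of what automating part of a sensitive-data audit can look like, the sketch below scans tabular records and reports which columns appear to contain personal data. The two detection rules (an email pattern and a simplified UK postcode pattern) and the names `PII_PATTERNS` and `audit_records` are hypothetical; real GDPR tooling relies on far richer classifiers, so treat this as a sketch of the idea only.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately simple detection rules. They only illustrate
# the automation idea; they are not complete validators.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # Simplified UK postcode shape, e.g. "SW1A 2AA".
    "uk_postcode": re.compile(r"\b[A-Z]{1,2}\d[A-Z\d]?\s*\d[A-Z]{2}\b"),
}

def audit_records(records):
    """Return {column: set of PII categories detected in that column}."""
    findings = defaultdict(set)
    for record in records:
        for column, value in record.items():
            for category, pattern in PII_PATTERNS.items():
                if pattern.search(str(value)):
                    findings[column].add(category)
    return dict(findings)

customers = [
    {"name": "A. Smith", "contact": "a.smith@example.com", "address": "10 Downing St, SW1A 2AA"},
    {"name": "B. Jones", "contact": "n/a", "address": "Unknown"},
]
print(audit_records(customers))
# {'contact': {'email'}, 'address': {'uk_postcode'}}
```

The output is a per-column inventory, which is the kind of starting point a data audit needs before deciding on retention, masking or access controls.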

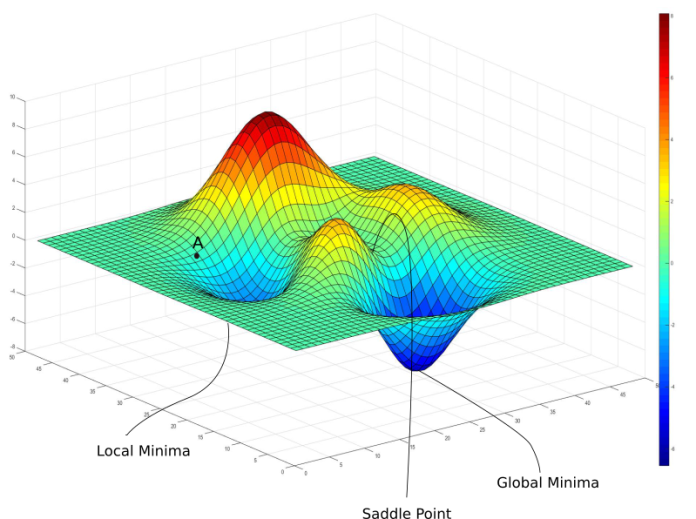

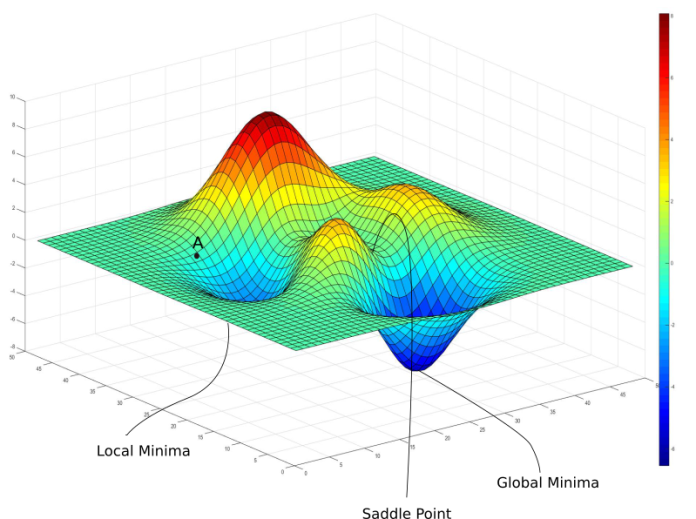

A Primer on Data Drift

Monitoring model performance drift is a crucial step in production ML; in practice, however, it proves challenging for many reasons, one of which is the delay in retrieving labels for new data. Without ground truth labels, drift detection techniques based on the model’s accuracy are off the table. ... If we have the ground truth labels of the new data, one straightforward approach is to score the new dataset and then compare the performance metrics between the original training set and the new dataset. However, in real life, acquiring ground truth labels for new datasets is usually delayed. In our case, we would have to buy and drink all the bottles available, which is a tempting choice… but probably not a wise one. Therefore, to react in a timely manner, we need to assess drift based solely on the features of the incoming data. The logic is that if the data distribution diverges between the training data and the incoming data, it is a strong signal that the model’s performance won’t be the same.
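The text does not name a specific test for comparing feature distributions, so the sketch below uses the two-sample Kolmogorov-Smirnov (KS) statistic, one common label-free option. For each feature it measures the largest gap between the empirical CDFs of the training sample and the incoming sample, then compares that gap with the standard large-sample critical value at significance level `alpha`. The feature names `alcohol` and `ph` are hypothetical stand-ins for the wine example, and `detect_drift` is an illustrative helper, not a library API.

```python
import math
from bisect import bisect_right

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest vertical gap
    between the empirical CDFs of the two samples."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    # Both ECDFs are step functions that only change at observed values,
    # so checking every observed value finds the maximum gap.
    for x in ref + cur:
        f_ref = bisect_right(ref, x) / len(ref)
        f_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(f_ref - f_cur))
    return gap

def ks_critical_value(n, m, alpha=0.05):
    """Large-sample critical value for rejecting 'same distribution'."""
    c = math.sqrt(-math.log(alpha / 2) / 2)
    return c * math.sqrt((n + m) / (n * m))

def detect_drift(train_features, live_features, alpha=0.05):
    """Flag each feature whose incoming distribution differs from training."""
    return {
        name: ks_statistic(ref, live_features[name])
        > ks_critical_value(len(ref), len(live_features[name]), alpha)
        for name, ref in train_features.items()
    }

train = {"alcohol": list(range(100)), "ph": list(range(100))}
live = {"alcohol": list(range(50, 150)), "ph": list(range(100))}
print(detect_drift(train, live))  # {'alcohol': True, 'ph': False}
```

A per-feature test like this needs no labels at all. With many features, some flags will fire by chance, so in practice the threshold or `alpha` is usually tuned rather than taken as-is.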

Why Data Science Isn't Primarily For Data Scientists Anymore

Jonny Brooks-Bartlett, data scientist at Deliveroo, puts his finger on the crux of the problem. “Now if a data scientist spends their time only learning how to write and execute machine learning algorithms, then they can only be a small (albeit necessary) part of a team that leads to the success of a project that produces a valuable product,” he says. “This means that data science teams that work in isolation will struggle to provide value! Despite this, many companies still have data science teams that come up with their own projects and write code to try and solve a problem.” Without the engineers, analysts, and other team members needed to complete your projects, you give your data scientists work that they are overqualified for and don’t enjoy. It’s not surprising that they then deliver poor results. Unfortunately, they also get in the way of the work that needs to be done by engineers or developers. Iskander, principal data scientist at DataPastry, puts it succinctly. “I have a confession to make. I hardly ever do data science,” he admits. That’s because he’s repeatedly asked to fill roles that don’t require his specialized skills.

Unleashing the power of AI with optimized architectures for H2O.ai

H2O.ai is the creator of H2O, a leading machine learning and artificial intelligence platform trusted by hundreds of thousands of data scientists and more than 18,000 enterprises around the world. H2O is a fully open‑source, distributed, in‑memory AI and machine learning platform with linear scalability. It supports some of the most widely used statistical and ML algorithms, including gradient boosted machines, generalized linear models, deep learning and more. H2O is also highly flexible. It runs on bare metal or on existing Apache Hadoop or Apache Spark clusters, and it can ingest data directly from HDFS, Spark, S3, Microsoft Azure Data Lake and other data sources into its in‑memory distributed key-value store. To further simplify AI, H2O offers AutoML functionality that automatically runs through algorithms and their hyperparameters to produce a leaderboard of the best-performing models. Under the hood, H2O uses distributed systems and in‑memory computing to accelerate ML with parallelized algorithms built on fine‑grained in‑memory MapReduce.
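The AutoML idea described above (try several algorithms across their hyperparameters, then rank the results) can be sketched in a few lines of plain Python. This does not use H2O at all; in H2O's own Python client the entry point is `H2OAutoML` in the `h2o.automl` module, whose `leaderboard` attribute holds the ranked models. The two toy "algorithms" (a mean baseline and 1-D k-nearest-neighbours regression), the search space and the RMSE metric are assumptions chosen only to show how a leaderboard is produced.

```python
import math

def fit_mean(xs, ys):
    """Baseline 'algorithm': always predict the training mean."""
    mu = sum(ys) / len(ys)
    return lambda x: mu

def fit_knn(xs, ys, k):
    """1-D k-nearest-neighbours regression; `k` is the hyperparameter."""
    points = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(points, key=lambda p: abs(p[0] - x))[:k]
        return sum(y for _, y in nearest) / len(nearest)
    return predict

def rmse(model, xs, ys):
    """Root-mean-square error of `model` on (xs, ys)."""
    return math.sqrt(sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs))

# Hypothetical search space: algorithm name -> (fit function, hyperparameter grid)
SEARCH_SPACE = {
    "mean_baseline": (fit_mean, [{}]),
    "knn": (fit_knn, [{"k": k} for k in (1, 3, 5)]),
}

def auto_ml(train, valid):
    """Train every (algorithm, hyperparameters) candidate and rank them by
    validation RMSE, lowest first, like a leaderboard."""
    leaderboard = []
    for algo, (fit, grid) in SEARCH_SPACE.items():
        for params in grid:
            model = fit(*train, **params)
            leaderboard.append((rmse(model, *valid), algo, params))
    leaderboard.sort(key=lambda row: row[0])
    return leaderboard

train = (list(range(20)), [2 * x for x in range(20)])
valid = ([2.2, 7.7, 12.1], [4.4, 15.4, 24.2])
for score, algo, params in auto_ml(train, valid):
    print(f"{score:.3f}  {algo}  {params}")
```

A production AutoML system adds far more (cross-validation, time budgets, stacked ensembles, and parallel training across a cluster), but the core loop of search, score and rank is the same shape.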

The missing link in your SOC: Secure the mainframe

Simply hiring the right person may seem the obvious answer, but hiring talent with either mainframe or cybersecurity skills is getting harder as job openings far outpace the number of knowledgeable and available people. And even if your company can compete with top-dollar salaries, finding a rare individual with both skill sets may still prove infeasible. This is why successful organizations are investing in their current staff to defend their critical systems. This often takes the form of on-the-job training, through in-house education from senior technicians or technical courses from industry experts. A good example is taking a security analyst with a strong foundation in cybersecurity and teaching them the fundamentals of the mainframe. The same security principles apply, and a talented analyst will quickly grasp the nuances of the new operating system, which in turn gives your SOC the skills to defend the entire enterprise, not just the Windows and Linux systems that are most prevalent.

3 Ways Every Company Should Prepare For The Internet Of Things

The IoT refers to the ever-growing network of smart, connected devices, objects, and appliances that surround us every day. These devices constantly gather and transmit data via the internet – think of how a fitness tracker syncs with an app on your phone – and many can carry out tasks autonomously. A smart thermostat that intelligently regulates the temperature of your home is a common example of the IoT in action. Other examples include Amazon Echo and similar smart speakers, smart lightbulbs, smart home security systems, you name it. These days, pretty much anything for the home can be made "smart," including smart toasters, smart hairbrushes and, wait for it, smart toilets. ... Wearable technology, such as fitness trackers or smart running socks (yes, these are a thing too), also falls under the umbrella of the IoT. Even cars can be connected to the internet, making them part of the IoT. Market forecasts from Business Insider Intelligence predict that there will be more than 64 billion of these connected devices globally by 2026 – a huge increase from the approximately 10 billion IoT devices in use in 2018.

Why the UK leads the global digital banking industry

The UK remains a front runner thanks to its supportive regulatory approach to innovation in financial services. In 2015, the UK was the first nation to put its own regulatory fintech sandbox into operation to enable innovation in products and services. In fact, according to the Financial Conduct Authority, the success of the UK’s fintech investment led a whole host of nations, including Singapore and Australia, to announce plans for fintech sandboxes at the end of 2016. Government policy makers and regulatory bodies in the UK have created a progressive, open-minded and internationally focused regulatory scheme. The launch of the revised Payment Services Directive (PSD2) inspired the creation of Open Banking and a new wave of innovation. A report by EY revealed that 94% of fintechs are considering open banking to enhance current services and 81% are using it to enable new services. Open APIs give third parties access to data traditionally held by incumbent banks, meaning that fintechs can use these insights to produce new products and services.

The legal conversation around facial recognition is a hot topic around the world. In the US, for example, the government dropped plans at the end of 2019 to make facial recognition scans compulsory for US citizens at airport border checkpoints. Also, last year, New York legislators updated local privacy laws to prohibit the “use of a digital replica to create sexually explicit material in an expressive audiovisual work” (otherwise known as deepfake technology) to counter this growing threat. These decisions show that despite its many benefits, facial recognition can also harm personal privacy and liberty. Consider the use of facial recognition by law enforcement, where in certain situations public spaces are monitored via CCTV and body-worn cameras without the public’s knowledge. My take on this is that the only faces stored in the databases behind this technology should be those of convicted criminals, not everyday people. The data should never be used to ‘mine’ faces (a term that refers to gathering and storing information about people’s faces), as this isn’t ethical.

Quote for the day:

"Leadership is familiar, but not well understood." -- Gerald Weinberg
