Quote for the day:

"All progress takes place outside the comfort zone." -- Michael John Bobak

What Are Deepfakes? Everything to Know About These AI Image and Video Forgeries

Deepfakes rely on deep learning, a branch of AI that mimics how humans recognize

patterns. These AI models analyze thousands of images and videos of a person,

learning their facial expressions, movements and voice patterns. Then, using

generative adversarial networks (GANs), AI creates a realistic simulation of that

person in new content. GANs are made up of two neural networks where one creates

content (the generator), and the other tries to spot if it's fake (the

discriminator). The number of images or frames needed to create a convincing

deepfake depends on the quality and length of the final output. For a single

deepfake image, as few as five to 10 clear photos of the person's face may be

enough. ... While tech-savvy people might be more vigilant about spotting

deepfakes, regular folks need to be more cautious. I asked John Sohrawardi, a

computing and information sciences Ph.D. student leading the DeFake Project,

about common ways to recognize a deepfake. He advised people to look at the

mouth to see if the teeth are garbled. "Is the video more blurry around the

mouth? Does it feel like they're talking about something very exciting but act

monotonous? That's one of the giveaways of more lazy deepfakes." ... "Too often,

the focus is on how to protect yourself, but we need to shift the conversation

to the responsibility of those who create and distribute harmful content,"

Dorota Mani tells CNET.
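
The generator-versus-discriminator loop described above can be illustrated with a deliberately tiny sketch. It is not how real deepfake tools are built: it assumes one-dimensional numbers instead of images, linear models instead of deep networks, and hand-derived gradients instead of a deep learning framework. All names here (`Generator`, `Discriminator`, `train`) and the "real" data distribution are hypothetical, chosen only for illustration.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function: maps a score to a value in (0, 1)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


class Generator:
    """Toy generator: turns random noise z into a 'fake' number a*z + b."""

    def __init__(self, a: float = 1.0, b: float = 0.0) -> None:
        self.a, self.b = a, b

    def sample(self, z: float) -> float:
        return self.a * z + self.b


class Discriminator:
    """Toy discriminator: estimates the probability that x is real."""

    def __init__(self, w: float = 0.0, c: float = 0.0) -> None:
        self.w, self.c = w, c

    def prob_real(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)


def discriminator_step(d, g, real_x, z, lr=0.05):
    """One gradient-descent step on -[log D(real) + log(1 - D(fake))]."""
    fake_x = g.sample(z)
    p_real, p_fake = d.prob_real(real_x), d.prob_real(fake_x)
    grad_w = -(1.0 - p_real) * real_x + p_fake * fake_x
    grad_c = -(1.0 - p_real) + p_fake
    d.w -= lr * grad_w
    d.c -= lr * grad_c


def generator_step(d, g, z, lr=0.05):
    """One gradient-descent step on -log D(fake): make fakes look more real."""
    fake_x = g.sample(z)
    # d(-log D(x))/dx = -(1 - D(x)) * w, then the chain rule through a*z + b.
    grad_x = -(1.0 - d.prob_real(fake_x)) * d.w
    g.a -= lr * grad_x * z
    g.b -= lr * grad_x


def train(steps=2000, seed=0):
    """Alternate discriminator and generator updates (assumed toy setup)."""
    rng = random.Random(seed)
    g, d = Generator(), Discriminator()
    for _ in range(steps):
        real_x = rng.gauss(4.0, 0.5)  # hypothetical "real" data
        discriminator_step(d, g, real_x, rng.gauss(0.0, 1.0))
        generator_step(d, g, rng.gauss(0.0, 1.0))
    return g, d
```

Calling `train()` returns the two toy models after alternating updates. The point of the sketch is the structure, where each side's update uses the other side's current output, rather than the quality of the result.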

Beyond GenAI: Why Agentic AI Was the Real Conversation at RSA 2025

In contrast to GenAI, which primarily focuses on the divergence of information,

generating new content based on specific instructions, SynthAI developments

emphasize the convergence of information, presenting less but more pertinent

content by synthesizing available data. SynthAI will enhance the quality and

speed of decision-making, potentially making decisions autonomously. The most

evident application lies in summarizing large volumes of information that

humans would be unable to thoroughly examine and comprehend independently.

SynthAI’s true value will be in aiding humans to make more informed decisions

efficiently. ... Trust in AI also needs to evolve. This isn’t a surprise: AI,

like all technologies, is going through the hype cycle, and just as cloud and

automation suffered from trust issues in the early stages of maturity, AI is

following a very similar pattern. It will be some

time before trust and confidence are in balance with AI. ... Agentic AI

encompasses tools that can understand objectives, make decisions, and act.

These tools streamline processes, automate tasks, and provide intelligent

insights to aid in quick decision making. In use cases involving repetitive

processes, such as a call center, agentic AI can have significant value.

The Privacy Challenges of Emerging Personalized AI Services

The nature of the search business will change substantially in this world of

personalized AI services. It will evolve from a service for end users to an

input into an AI service for end users. In particular, search will become a

component of chatbots and AI agents, rather than the stand-alone service it is

today. This merger has already happened to some degree. OpenAI has offered a

search service as part of its ChatGPT deployment since last October. Google

launched AI Overview in May of last year. AI Overview returns a summary of its

search results generated by Google’s Gemini AI model at the top of its search

results. When a user asks a question to ChatGPT, the chatbot will sometimes

search the internet and provide a summary of its search results in its answer.

... The best way forward would not be to invent a sector-specific privacy

regime for AI services, although this could be made to work in the same way

that the US has chosen to put financial, educational, and health information

under the control of dedicated industry privacy regulators. It might be a good

approach if policymakers were also willing to establish a digital regulator

for advanced AI chatbots and AI agents, which will be at the heart of an

emerging AI services industry. But that prospect seems remote in today’s

political climate, which seems to prioritize untrammeled innovation over

protective regulation.

What CISOs can learn from the frontlines of fintech cybersecurity

For Shetty, the idea that innovation competes with security is a false choice.

“They go hand in hand,” she says. User trust is central to her approach.

“That’s the most valuable currency,” she explains. Lose it, and it’s hard to

get back. That’s why transparency, privacy, and security are built into every

step of her team’s work, not added at the end. ... Supply chain attacks remain

one of her biggest concerns. Many organizations still assume they’re too small

to be a target. That’s a dangerous mindset. Shetty points to many recent

examples where attackers reached big companies by going through smaller

suppliers. “It’s not enough to monitor your vendors. You also have to hold

them accountable,” she says. Her team helps clients assess vendor cyber

hygiene and risk scores, and encourages them to consider that when choosing

suppliers. “It’s about making smart choices early, not reacting after the

fact.” Vendor security needs to be an active process. Static questionnaires

and one-off audits are not enough. “You need continuous monitoring. Your

supply chain isn’t standing still, and neither are attackers.” ... The speed

of change is what worries her most. Threats evolve quickly. The amount of data

to protect grows every day. At the same time, regulators and customers expect

high standards, and they should.

Tech optimism collides with public skepticism over FRT, AI in policing

Despite the growing alarm, some tech executives like OpenAI’s Sam Altman have

recently reversed course, downplaying the need for regulation after previously

warning of AI’s risks. This inconsistency, coupled with massive federal

contracts and opaque deployment practices, erodes public trust in both

corporate actors and government regulators. What’s striking is how bipartisan

the concern has become. According to the Pew survey, only 17 percent of

Americans believe AI will have a positive impact on the U.S. over the next two

decades, while 51 percent express more concern than excitement about its

expanding role. These numbers represent a significant shift from earlier years

and a rare area of consensus between liberal and conservative constituencies.

... Bias in law enforcement AI systems is not simply a product of technical

error; it reflects systemic underrepresentation and skewed priorities in AI

design. According to the Pew survey, only 44 percent of AI experts believe

women’s perspectives are adequately accounted for in AI development. The

numbers drop even further for racial and ethnic minorities. Just 27 percent

and 25 percent say the perspectives of Black and Hispanic communities,

respectively, are well represented in AI systems.

6 rising malware trends every security pro should know

Infostealers steal browser cookies, VPN credentials, MFA (multi-factor

authentication) tokens, crypto wallet data, and more. Cybercriminals sell the

data that infostealers grab through dark web markets, giving attackers easy

access to corporate systems. “This shift commoditizes initial access, enabling

nation-state goals through simple transactions rather than complex attacks,”

says Ben McCarthy, lead cyber security engineer at Immersive. ... Threat

actors are systematically compromising the software supply chain by embedding

malicious code within legitimate development tools, libraries, and frameworks

that organizations use to build applications. “These supply chain attacks

exploit the trust between developers and package repositories,” Immersive’s

McCarthy tells CSO. “Malicious packages often mimic legitimate ones while

running harmful code, evading standard code reviews.” ... “There’s been a

notable uptick in the use of cloud-based services and remote management

platforms as part of ransomware toolchains,” says Jamie Moles, senior

technical marketing manager at network detection and response provider

ExtraHop. “This aligns with a broader trend: Rather than relying solely on

traditional malware payloads, adversaries are increasingly shifting toward

abusing trusted platforms and ‘living-off-the-land’ techniques.”
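
McCarthy's point that malicious packages often mimic legitimate ones suggests one simple, partial defense: flag dependency names that closely resemble, but do not match, packages you already trust. The sketch below is a minimal illustration of that idea using Python's standard `difflib`. The allowlist, the `find_lookalikes` helper, and the 0.8 threshold are assumptions made for this example, not a recommendation from the article, and a name check like this would not catch malicious code inside a correctly named package.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist; in practice this would come from your own
# dependency policy or lockfile.
TRUSTED_PACKAGES = {"requests", "numpy", "pandas", "urllib3"}


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two package names."""
    return SequenceMatcher(None, a, b).ratio()


def find_lookalikes(name, trusted=TRUSTED_PACKAGES, threshold=0.8):
    """Return trusted names that `name` resembles without matching exactly.

    An empty list means the name is either trusted as-is or not
    suspiciously close to anything on the allowlist.
    """
    normalized = name.lower().replace("_", "-")
    if normalized in trusted:
        return []
    return sorted(t for t in trusted if similarity(normalized, t) >= threshold)


if __name__ == "__main__":
    for candidate in ["requests", "reqeusts", "flask"]:
        print(candidate, "->", find_lookalikes(candidate))
```

A check like this could run before installation in a build pipeline, with any non-empty result sent for human review rather than blocked automatically.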

How Constructive Criticism Can Improve IT Team Performance

Constructive criticism can be an excellent instrument for growth, both

individually and on the team level, says Edward Tian, CEO of AI detection

service provider GPTZero. "Many times, and with IT teams in particular, work

is very independent," he observes in an email interview. "IT workers may not

frequently collaborate with one another or get input on what they're doing,"

Tian states. ... When using constructive criticism, take an approach that

focuses on seeking improvement with the poor result, Chowning advises.

Meanwhile, use empathy to solicit ideas on how to improve on a poor result.

She adds that it's important to ask questions, listen, seek to understand,

acknowledge any difficulties or constraints, and solicit improvement ideas.

... With any IT team there are two key aspects of constructive criticism:

creating the expectation and opportunity for performance improvement, and --

often overlooked -- instilling recognition in the team that performance is

monitored and has implications, Chowning says. ... The biggest mistake IT

leaders make is treating feedback as a one-way directive rather than a dynamic

conversation, Avelange observes. "Too many IT leaders still operate in a

command-and-control mindset, dictating what needs to change rather than

co-creating solutions with their teams."

How AI will transform your Windows web browser

Google isn’t the only one sticking AI everywhere imaginable, of course.

Microsoft Edge already has plenty of AI integration — including a Copilot icon

on the toolbar. Click that, and you’ll get a Copilot sidebar where you can

talk about the current web page. But the integration runs deeper than most

people think, with more coming yet: Copilot in Edge now has access to Copilot

Vision, which means you can share your current web view with the AI model and

chat about what you see with your voice. This is already here — today.

Following Microsoft’s Build 2025 developers’ conference, the company is

starting to test a Copilot box right on Edge’s New Tab page. Rather than a

traditional Bing search box in that area, you’ll soon see a Copilot prompt box

so you can ask a question or perform a search with Copilot — not Bing. It

looks like Microsoft is calling this “Copilot Mode” for Edge. And it’s not

just a transformed New Tab page complete with suggested prompts and a

Copilot box, either: Microsoft is also experimenting with “Context Clues,”

which will let Copilot take into account your browser history and preferences

when answering questions. It’s worth noting that Copilot Mode is an optional

and experimental feature. ... Even the less AI-obsessed browsers of Mozilla

Firefox and Brave are now quietly embracing AI in an interesting way.

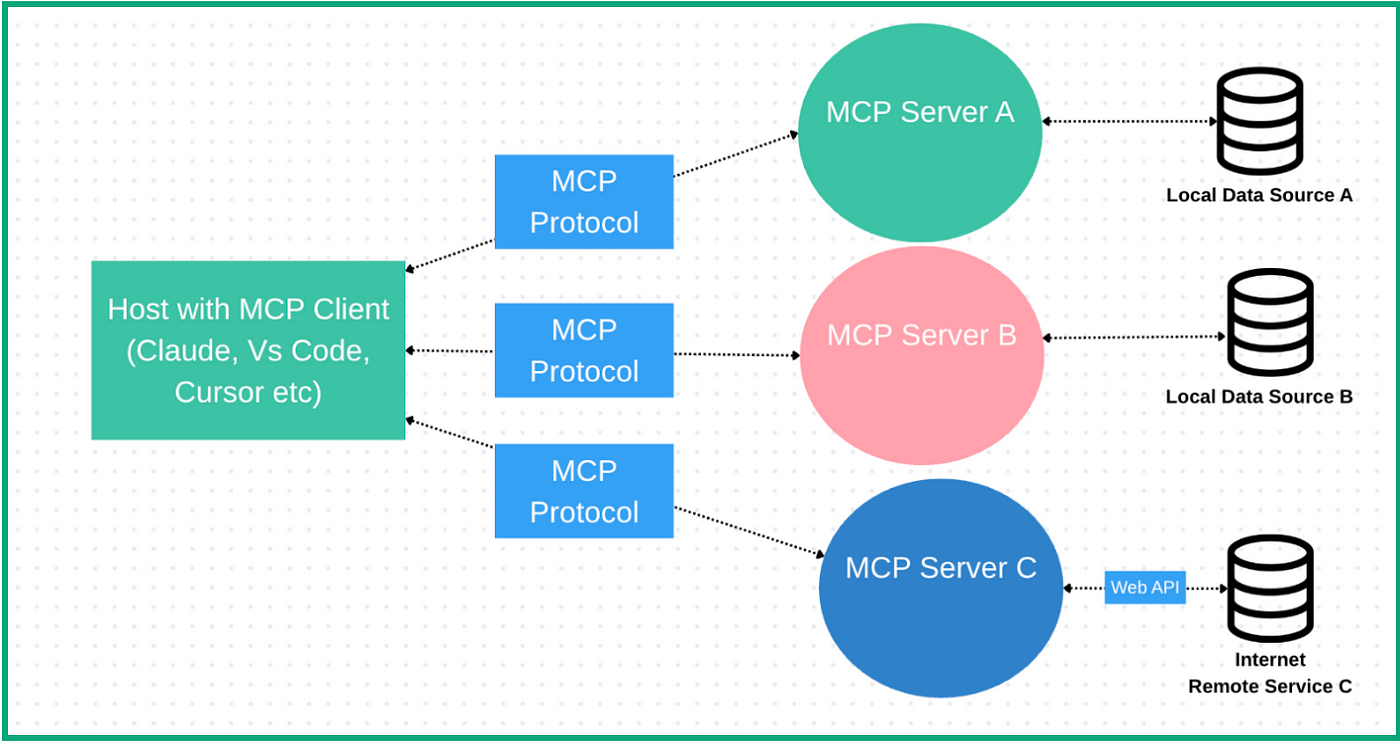

No, MCP Hasn’t Killed RAG — in Fact, They’re Complementary

Just as agentic systems are all the rage this year, so is MCP. But MCP is

sometimes talked about as if it’s a replacement for RAG. So let’s review the

definitions. In his “Is RAG dead yet?” post, Kiela defined RAG as follows: “In

simple terms, RAG extends a language model’s knowledge base by retrieving

relevant information from data sources that a language model was not trained

on and injecting it into the model’s context.” As for MCP (and the middle

letter stands for “context”), according to Anthropic’s documentation, it

“provides a standardized way to connect AI models to different data sources

and tools.” That’s the same definition, isn’t it? Not according to Kiela. In

his post, he argued that MCP complements RAG and other AI tools: “MCP

simplifies agent integrations with RAG systems (and other tools).” In our

conversation, Kiela added further (ahem) context. He explained that MCP is a

communication protocol — akin to REST or SOAP for APIs — based on JSON-RPC. It

enables different components, like a retriever and a generator, to speak the

same language. MCP doesn’t perform retrieval itself, he noted, it’s just the

channel through which components interact. “So I would say that if you have a

vector database and then you make that available through MCP, and then you let

the language model use it through MCP, that is RAG,” he continued.
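
Kiela's description, a retriever exposed through a JSON-RPC-based protocol whose results are injected into the model's context, can be sketched in a few lines. This is only an illustration under stated assumptions: it does not use a real MCP SDK or transport, the message shapes follow JSON-RPC 2.0 with a `tools/call` method as described in the MCP specification, and the `search_docs` tool, the `DOCUMENTS` list, and the word-overlap ranking are hypothetical stand-ins for a real vector database.

```python
import json

# Hypothetical in-memory "knowledge base" standing in for a vector database.
DOCUMENTS = [
    "MCP is a protocol based on JSON-RPC.",
    "RAG injects retrieved text into the model's context.",
    "Stack Overflow uses a reputation system.",
]


def retrieve(query, top_k=1):
    """Toy retriever: rank documents by how many words they share with the query."""
    q = set(query.lower().split())
    ranked = sorted(
        DOCUMENTS,
        key=lambda doc: len(q & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def error(req_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw):
    """Toy server side: answer a JSON-RPC 2.0 'tools/call' request."""
    req = json.loads(raw)
    params = req.get("params", {})
    if req.get("method") != "tools/call":
        return error(req.get("id"), -32601, "Method not found")
    if params.get("name") != "search_docs":
        return error(req.get("id"), -32602, "Unknown tool")
    args = params.get("arguments", {})
    hits = retrieve(args["query"], args.get("top_k", 1))
    content = [{"type": "text", "text": hit} for hit in hits]
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                       "result": {"content": content}})


def build_prompt(question):
    """Client side: call the tool, then inject the results into the prompt."""
    request = json.dumps({
        "jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "search_docs", "arguments": {"query": question}},
    })
    response = json.loads(handle_request(request))
    context = "\n".join(item["text"] for item in response["result"]["content"])
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Here the protocol layer (`handle_request`) only carries the request and response, while `retrieve` does the actual retrieval and `build_prompt` does the augmentation, which mirrors the division of labor Kiela describes.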

AI didn’t kill Stack Overflow

Stack Overflow’s most revolutionary aspect was its reputation system. That is

what elevated it above the crowd. The brilliance of the rep game allowed Stack

Overflow to absorb all the other user-driven sites for developers and more or

less kill them off. On Stack Overflow, users earned reputation points and

badges for asking good questions and providing helpful answers. In the

beginning, what was considered a good question or answer was not

predetermined; it was a natural byproduct of actual programmers upvoting some

exchanges and not others. ... For Stack Overflow, the new model, along with

highly subjective ideas of “quality,” opened the gates to a kind of Stanford

Prison Experiment. Rather than encouraging a wide range of interactions and

behaviors, moderators earned reputation by culling interactions they deemed

irrelevant. Suddenly, Stack Overflow wasn’t a place to go and feel like you

were part of a long-lived developer culture. Instead, it became an arena where

you had to prove yourself over and over again. ... Whether the culture of

helping each other will survive in this new age of LLMs is a real question. Is

human helping still necessary? Or can it all be reduced to inputs and outputs?

Maybe there’s a new role for humans in generating accurate data that feeds the

LLMs. Maybe we’ll evolve into gardeners of these vast new tracts of synthetic

data.
