Quote for the day:

"A true dreamer is one who knows how to navigate in the dark." -- John Paul Warren

How Microsoft wants AI agents to use your PC for you

Microsoft’s concept revolves around the Model Context Protocol (MCP), which was created by Anthropic (the company behind the Claude chatbot) last year. That’s an open-source protocol that AI apps can use to talk to other apps and web services. Soon, Microsoft says, you’ll be able to let a chatbot (or “AI agent”) connect to apps running on your PC and manipulate them on your behalf. ... Compared to what Microsoft is proposing, past “agentic” AI solutions that promised to use your computer for you aren’t quite as compelling. They’ve relied on looking at your computer’s screen and using that input to determine what to click and type. This new setup, in contrast, is neat, if it works as promised, because it lets an AI chatbot interact directly with any old traditional Windows PC app. But the Model Context Protocol solution is even more advanced and streamlined than that. Rather than a chatbot having to put together a Spotify playlist by dragging and dropping songs in the old-fashioned way, it would give the AI the ability to give instructions to the Spotify app in a more simplified form. On a more technical level, Microsoft will let application developers make their applications function as MCP servers, a fancy way of saying they’d act like a bridge between the AI models and the tasks they perform.
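
To make the "bridge" idea a little more concrete, here is a minimal sketch of the kind of message exchange involved. MCP is built on JSON-RPC 2.0, and a client asks a server to run a tool with a `tools/call` request. Everything else below is an assumption for illustration: the `create_playlist` tool, its arguments, and the in-memory handler are hypothetical, and the sketch uses neither the official MCP SDK nor any real Spotify API.

```python
import json

# Hypothetical in-memory "app state" standing in for a music app.
PLAYLISTS = {}


def create_playlist(name, tracks):
    """Hypothetical tool: store a playlist and report how many tracks it has."""
    PLAYLISTS[name] = list(tracks)
    return f"Created playlist '{name}' with {len(tracks)} tracks"


# Tools this toy server exposes (the tool name is illustrative only).
TOOLS = {"create_playlist": create_playlist}


def handle_request(raw):
    """Dispatch a JSON-RPC 2.0 'tools/call' request to the matching tool."""
    request = json.loads(raw)
    if request.get("method") != "tools/call":
        return json.dumps({
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        })
    params = request["params"]
    tool = TOOLS.get(params["name"])
    if tool is None:
        return json.dumps({
            "jsonrpc": "2.0",
            "id": request["id"],
            "error": {"code": -32602, "message": "Unknown tool"},
        })
    text = tool(**params["arguments"])
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": text}]},
    })


# What an AI client might send instead of dragging and dropping songs.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "create_playlist",
        "arguments": {"name": "Focus", "tracks": ["Track A", "Track B"]},
    },
})
response = json.loads(handle_request(request))
print(response["result"]["content"][0]["text"])
```

The point of the sketch is the shape of the interaction: the model sends one structured instruction, and the application performs the task itself rather than being driven through its user interface.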

How vulnerable are undersea cables?

The only way to effectively protect a cable against sabotage is to bury the entire cable, says Liwång, which is not economically justifiable. In the Baltic Sea, it is easier and more sensible to repair the cables when they break, and it is more important to lay more cables than to try to protect a few.

Burying all transoceanic cables is hardly feasible in practice either. ... “Cable breaks are relatively common even under normal circumstances. In terrestrial networks, they can be caused by various factors, such as excavators working near the fiber installation and accidentally cutting it. In submarine cables, cuts can occur, for example due to irresponsible use of anchors, as we have seen in recent reports,” says Furdek Prekratic. Network operators ensure that individual cable breaks do not lead to widespread disruptions, she notes: “Optical fiber networks rely on two main mechanisms to handle such events without causing a noticeable disruption to public transport. The first is called protection. The moment an optical connection is established over a physical path between two endpoints, resources are also allocated to another connection that takes a completely different path between the same endpoints. If a failure occurs on any link along the primary path, the transmission quickly switches to the secondary path. The second mechanism is called failover. Here, the secondary path is not reserved in advance, but is determined after the primary path has suffered a failure.”
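
The difference between the two mechanisms in the quote can be sketched in a few lines. This is a toy model under stated assumptions: the four-node network, the function names, and the use of a fewest-hops breadth-first search are all illustrative, and removing the primary path's links before searching again is a simple heuristic for finding a separate path, not how any particular operator computes routes.

```python
from collections import deque


def shortest_path(graph, src, dst, banned_links=frozenset()):
    """Fewest-hops path from src to dst that avoids the given undirected links."""
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for nxt in graph[node]:
            link = frozenset((node, nxt))
            if nxt not in seen and link not in banned_links:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # no usable path


def links_of(path):
    """Set of undirected links a path travels over."""
    return {frozenset(pair) for pair in zip(path, path[1:])}


# Hypothetical network: two independent routes between A and D.
graph = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "D"],
    "D": ["B", "C"],
}

# Protection: the backup path is chosen and reserved when the connection is set up.
primary = shortest_path(graph, "A", "D")
reserved_backup = shortest_path(graph, "A", "D", banned_links=links_of(primary))

# The second mechanism: nothing is reserved; a new path is computed after the failure.
failed = {frozenset(("B", "D"))}
computed_after_failure = shortest_path(graph, "A", "D", banned_links=failed)

print(primary, reserved_backup, computed_after_failure)
```

The trade-off the sketch hints at: protection switches over quickly because the backup already exists, but it ties up spare capacity, while computing a path after the failure uses no capacity in advance at the cost of a slower recovery.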

Driving business growth through effective productivity strategies

In times of economic uncertainty, it is to be expected that businesses grow more cautious with their spending. However, this can result in missed opportunities to improve productivity in favour of cost reductions. While cutting costs can seem an attractive option in light of economic doubts, it is merely a short-term solution. When businesses hold back from knee-jerk reactions and maintain a focus on sustainable productivity gains, they will find themselves reaping rewards in the long term. Strategic investments in technology solutions are essential to support businesses in driving their productivity strategies forward. With new technology constantly being introduced, there are a lot of options for business decision makers to consider. Most obviously, there are technology features in our ERP systems, and in our project management and collaboration tools, that can be used to facilitate significant flexibility or performance advantages compared to legacy approaches and processes. ... While technology is a vital part of any innovative productivity model, it’s just one piece of the puzzle. It is no use installing modern technology if internal processes remain outdated. Businesses must also look to weed out inefficient practices to improve and streamline resource management.

Synthetic data’s fine line between reward and disaster

Generating large volumes of training data on demand is appealing compared to slow, expensive gathering of real-world data, which can be fraught with privacy concerns, or just not available. Synthetic data ought to help preserve privacy, speed up development, and be more cost-effective for long-tail scenarios enterprises couldn’t otherwise tackle, she adds. It can even be used for controlled experimentation, assuming you can make it accurate enough. Purpose-built data is ideal for scenario planning and running intelligent simulations, and synthetic data detailed enough to cover entire scenarios could predict future behavior of assets, processes, and customers, which would be invaluable for business planning. ... Created properly, synthetic data mimics statistical properties and patterns of real-world data without containing actual records from the original dataset, says Jarrod Vawdrey, field chief data scientist at Domino Data Lab. And David Cox, VP of AI Models at IBM Research, suggests viewing it as amplifying rather than creating data. “Real data can be extremely expensive to produce, but if you have a little bit of it, you can multiply it,” he says. “In some cases, you can make synthetic data that’s much higher quality than the original. The real data is a sample. It doesn’t cover all the different variations and permutations you might encounter in the real world.”
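
As a rough illustration of "mimicking statistical properties without containing actual records," the sketch below fits a mean and standard deviation to a small sample and draws new values from a normal distribution with those parameters. It is deliberately naive and rests on assumptions: the order values are made up, a single roughly normal numeric column is assumed, and real generators model far richer structure. Sampling like this does not by itself guarantee privacy.

```python
import random
import statistics

# Hypothetical "real" sample: a small set of observed order values.
real_orders = [42.0, 55.5, 38.2, 61.0, 47.3, 52.8, 44.1, 58.6]


def synthesize(sample, n, seed=0):
    """Draw n synthetic values from a normal fit to the sample's mean and stdev."""
    rng = random.Random(seed)  # seeded so runs are repeatable
    mu = statistics.mean(sample)
    sigma = statistics.stdev(sample)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# "Multiply" eight real records into ten thousand synthetic ones.
synthetic_orders = synthesize(real_orders, 10_000)

print(round(statistics.mean(real_orders), 2), round(statistics.mean(synthetic_orders), 2))
print(round(statistics.stdev(real_orders), 2), round(statistics.stdev(synthetic_orders), 2))
```

The synthetic set is much larger than the sample yet has nearly the same mean and spread, which is the "amplifying rather than creating" idea in miniature. It also shows the limit: anything the small sample failed to capture is missing from the synthetic data too.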

AI Interventions to Reduce Cycle Time in Legacy Modernization

As the software becomes difficult to change, businesses may choose to tolerate conceptual drift or compensate for it through their operations. When the difficulty of modifying the software poses a significant enough business risk, a legacy modernization effort is undertaken. Legacy modernization efforts showcase the problem of concept recovery. In these circumstances, recovering a software system’s underlying concept is the labor-intensive bottleneck step to any change. Without it, the business risks a failed modernization or losing customers that depend on unknown or under-considered functionality. ... The goal of a software modernization’s design phase is to perform enough validation of the approach to be able to start planning and development while minimizing the amount of rework that could result due to missed information. Traditionally, substantial lead time is spent in the design phase inspecting legacy source code, producing a target architecture, and collecting business requirements. These activities are time-intensive, mutually interdependent, and usually the bottleneck step in modernization. While exploring how to use LLMs for concept recovery, we encountered three challenges to effectively serving teams performing legacy modernizations: which context was needed and how to obtain it, how to organize context so humans and LLMs can both make use of it, and how to support iterative improvement of requirements documents.

OWASP proposes a way for enterprises to automatically identify AI agents

“The confusion about ANS versus protocols like MCP, A2A, ACP, and Microsoft Entra is understandable, but there’s an important distinction to make: ANS is a discovery service, not a communication protocol,” Narajala said. “MCP, A2A and ACP define how agents talk to each other once connected, like HTTP for web. ANS defines how agents find and verify each other before communication, like DNS for web. Microsoft Entra provides identity services, but primarily within Microsoft’s ecosystem.” ... “We’re fast approaching the point where the need for a standard to identify AI agents becomes painfully obvious. Right now, it’s a mess. Companies are spinning up agents left and right, with no trusted way to know what they are, what they do, or who built them,” Tvrdik said. “The Wild West might feel exciting, but we all know how most of those stories end. And it’s not secure.” As for ANS, he said, “it makes sense in theory. Treat agents like domains. Give them names, credentials, and a way to verify who’s talking to what. That helps with security, sure, but also with keeping things organized. Without it, we’re heading into chaos.” But Tvrdik stressed that the deployment mechanisms will ultimately determine if ANS works.
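
The "find and verify before communicating" split can be pictured with a toy registry. This is only a sketch of the general idea in the quotes (names, credentials, and a way to check who is talking to what). It is not the ANS specification: the record fields, the agent name, the endpoint URL, and the fingerprint check are all hypothetical.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    endpoint: str          # where the agent can be reached
    key_fingerprint: str   # SHA-256 hex digest of the agent's public key


def fingerprint(public_key: bytes) -> str:
    """Hash a public key so the registry stores a short, comparable value."""
    return hashlib.sha256(public_key).hexdigest()


# Hypothetical registry: agent name -> record, loosely analogous to a DNS zone.
REGISTRY = {
    "billing.agent.example": AgentRecord(
        endpoint="https://agents.example/billing",
        key_fingerprint=fingerprint(b"billing-public-key"),
    ),
}


def resolve(name):
    """Discovery step: look up an agent by name (None if unregistered)."""
    return REGISTRY.get(name)


def verify(name, presented_key: bytes) -> bool:
    """Verification step: does the presented key match the registered one?"""
    record = resolve(name)
    if record is None:
        return False
    return hmac.compare_digest(record.key_fingerprint, fingerprint(presented_key))


print(resolve("billing.agent.example").endpoint)
print(verify("billing.agent.example", b"billing-public-key"))
print(verify("billing.agent.example", b"some-other-key"))
```

Only after both steps succeed would the agents start talking over a communication protocol such as MCP or A2A, which is the division of labor the DNS and HTTP analogy describes.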

Driving DevOps With Smart, Scalable Testing

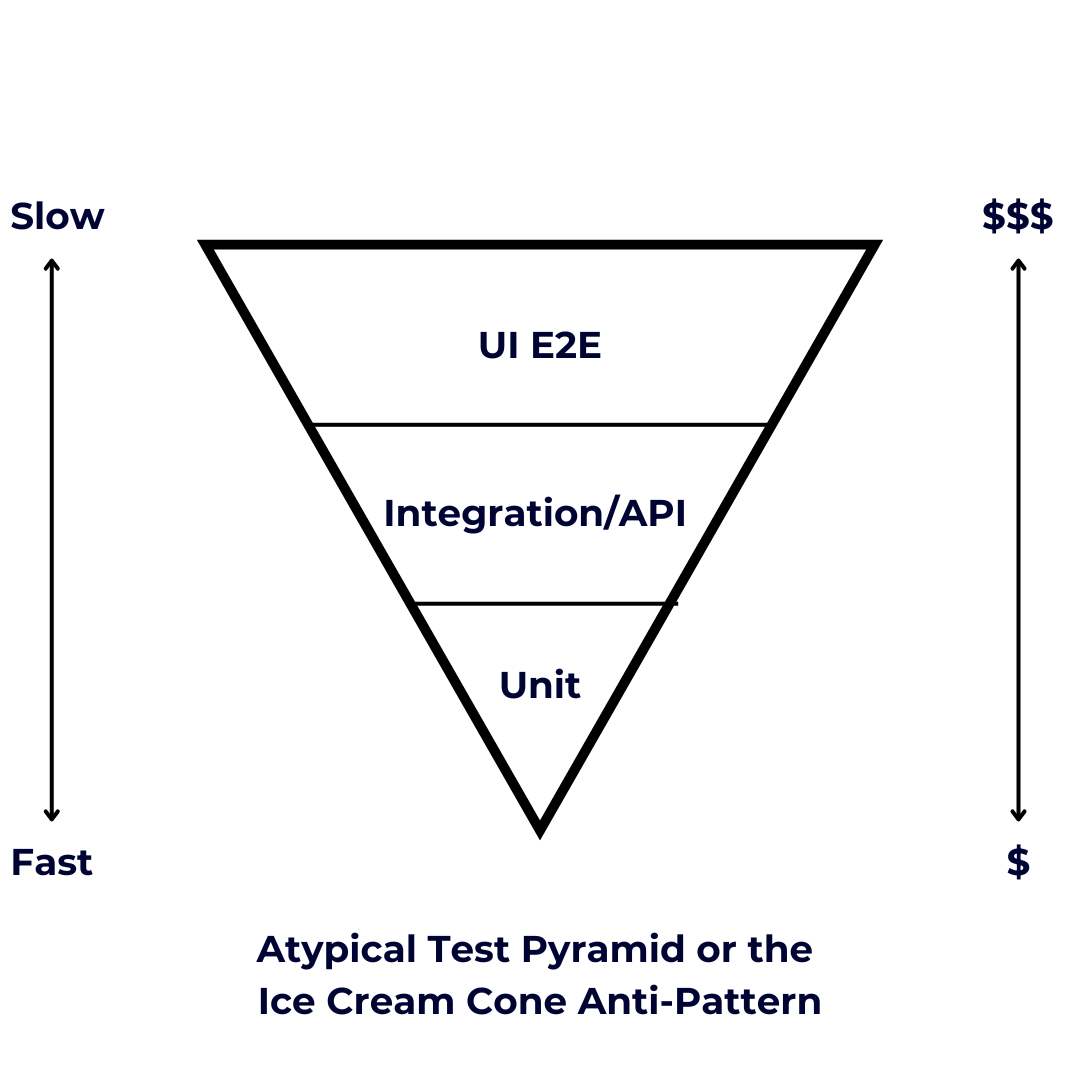

Testing apps manually isn’t easy and consumes a lot of time and money. Testing complex ones with frequent releases requires an enormous number of human hours when attempted manually. This will affect the release cycle, results will take longer to appear, and if shown to be a failure, you’ll need to conduct another round of testing. What’s more, the chances of doing it correctly, repeatedly, and without any human error are slim. Those factors have driven the development of automation throughout all phases of the testing process, ranging from infrastructure builds to actual testing of code and applications. As for who should write which tests, as a general rule of thumb, it’s a task best suited to software engineers. They should create unit and integration tests as well as UI E2E tests. QA analysts should also be tasked with writing UI E2E test scenarios together with individual product owners. QA teams collaborating with business owners enhance product quality by aligning testing scenarios with real-world user experiences and business objectives. ... AWS CodePipeline can provide completely managed continuous delivery that creates pipelines, orchestrates and updates infrastructure and apps. It also works well with other crucial AWS DevOps services, while integrating with third-party action providers like Jenkins and GitHub.

Bridging the Digital Divide: Understanding APIs

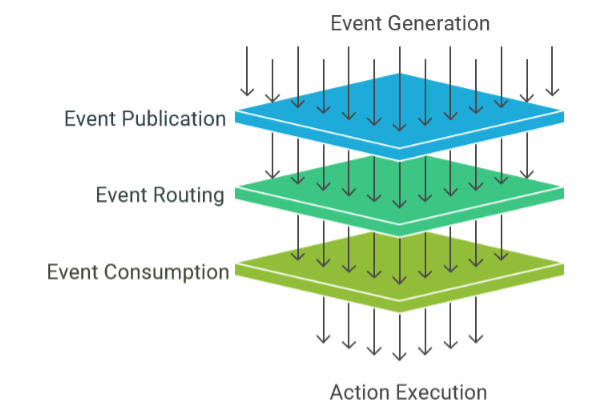

While both Event-Driven Architecture (EDA) and Data-Driven Architecture (DDA) are crucial for modern enterprises, they serve distinct purposes, operate on different core principles, and manifest through different architectural characteristics. Understanding these differences is key for enterprise architects to effectively leverage their individual strengths and potential synergies. While EDA is often highly operational and tactical, facilitating immediate responses to specific triggers, DDA can span both operational and strategic domains. A key differentiator between the two lies in the “granularity of trigger.” EDA is typically triggered by fine-grained, individual events: a single mouse click, a specific sensor reading, a new message arrival. Each event is a distinct signal that can initiate a process. DDA, on the other hand, often initiates its processes or derives its triggers from aggregated data, identified patterns, or the outcomes of analytical models that have processed numerous data points. For example, an analytical process in DDA might be triggered by the availability of a complete daily sales dataset, or an alert might be generated when a predictive model identifies an anomaly based on a complex evaluation of multiple data streams over time. This distinction in trigger granularity directly influences the design of processing logic, the selection of underlying technologies, and the expected immediacy and nature of the system’s response.
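
The "granularity of trigger" distinction can be sketched side by side. The code below is illustrative only: the tiny event bus, the sensor limit, the sales figures, and the "half of the historical average" rule are all assumptions chosen to keep the example short.

```python
import statistics
from collections import defaultdict

alerts = []


class EventBus:
    """Minimal publish/subscribe bus: each published event runs its handlers at once."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event_type, payload):
        for handler in self._handlers[event_type]:
            handler(payload)


SENSOR_LIMIT = 100  # hypothetical threshold


def on_sensor_reading(reading):
    """EDA style: one fine-grained event is enough to trigger a response."""
    if reading["value"] > SENSOR_LIMIT:
        alerts.append(f"EDA: sensor {reading['sensor']} read {reading['value']}")


def on_daily_sales_complete(todays_orders, previous_daily_totals):
    """DDA style: runs only once the complete daily dataset is available."""
    total = sum(todays_orders)
    baseline = statistics.mean(previous_daily_totals)
    if total < 0.5 * baseline:
        alerts.append(f"DDA: daily sales {total} far below baseline {baseline}")


# Event-driven: each reading is handled the moment it arrives.
bus = EventBus()
bus.subscribe("sensor_reading", on_sensor_reading)
bus.publish("sensor_reading", {"sensor": "s1", "value": 72})
bus.publish("sensor_reading", {"sensor": "s1", "value": 104})

# Data-driven: the trigger is the finished dataset, judged against aggregated history.
on_daily_sales_complete([120, 80, 50], [900, 1100, 1000])

print(alerts)
```

No single order in the data-driven half would raise an alert on its own; the signal only exists in the aggregate, which is why the two styles tend to lead to different processing logic and response times.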

What good threat intelligence looks like in practice

The biggest shortcoming is often in the last mile, connecting intelligence to real-time detection, response, and risk mitigation. Another challenge is organizational silos. In many environments, the CTI team operates separately from SecOps, incident response, or threat hunting teams. Without seamless collaboration between those functions, threat intelligence remains a standalone capability rather than a force multiplier. This is often where threat intelligence teams can be challenged to demonstrate value to security operations. ... Rather than picking one over the other, CISOs should focus on blending these sources and correlating them with internal telemetry. The goal is to reduce noise, enhance relevance, and produce enriched insights that reflect the organization’s actual threat surface. Feed selection should also consider integration capabilities, since intelligence is only as useful as the systems and people that can act on it. When threat intelligence is operationalized, a clear picture can be formed from the variety of available threat feeds. ... The threat intel team should be seen not as another security function, but as a strategic partner in risk reduction and decision support. CISOs can encourage cross-functional alignment by embedding CTI into security operations workflows, incident response playbooks, risk registers, and reporting frameworks.

4 ways to safeguard CISO communications from legal liabilities

“Words matter incredibly in any legal proceeding,” Brown agreed. “The first thing that will happen will be discovery. And in discovery, they will collect all emails, all Teams, all Slacks, all communication mechanisms, and then run queries against that information.” Speaking with professionalism is not only a good practice in building an effective cybersecurity program, but it can go a long way to warding off legal and regulatory repercussions, according to Scott Jones, senior counsel at Johnson & Johnson. “The seriousness and the impact of your words and all other aspects of how you conduct yourself as a security professional can have impacts not only on substantive cybersecurity, but also what harms might befall your company either through an enforcement action or litigation,” he said. ... CISOs also need to pay attention to what they say based on the medium in which they are communicating. Pay attention to “how we communicate, who we’re communicating with, what platforms we’re communicating on, and whether it’s oral or written,” Angela Mauceri, corporate director and assistant general counsel for cyber and privacy at Northrop Grumman, said at RSA. “There’s a lasting effect to written communications.” She added, “To that point, you need to understand the data governance and, more importantly, the data retention policy of those electronic communication platforms, whether it exists for 60 days, 90 days, or six months.”
