Quote for the day:

"Integrity is the soul of leadership! Trust is the engine of leadership!" -- Amine A. Ayad

Real-world use cases for agentic AI

There’s a wealth of public code bases on which models can be trained. And larger
companies typically have their own code repositories, with detailed change logs,
bug fixes, and other information that can be used to train or fine-tune an AI
system on a company’s internal coding methods. As AI model context windows get
larger, these tools can look through more and more code at once to identify
problems or suggest fixes. And the usefulness of AI coding tools is only
increasing as developers adopt agentic AI. According to Gartner, AI agents
enable developers to fully automate and offload more tasks, transforming how
software development is done, a change that will force 80% of the engineering
workforce to upskill by 2027. Today, there are several very popular agentic AI
systems and coding assistants built right into integrated development
environments, as well as several startups trying to break into the market with
an AI focus from the outset. ... Not every use case requires a full agentic
system, Shiebler notes. For example, the company uses ChatGPT and reasoning
models for architecture and design. “I’m consistently impressed by these
models,” he says. For software development, however, using ChatGPT or Claude and
copying and pasting the code is an inefficient option, he adds.

Rethinking AppSec: How DevOps, containers, and serverless are changing the rules

Application security and developers have not always been on friendly terms,
but practice shows that innovative security solutions are bridging the gap,
bringing developers and security closer together so that security is no longer
a hurdle in developers’ daily work. Quite the contrary: security is embedded in
CI/CD pipelines, it’s accessible and non-obstructive, and it has gone beyond
scanning that produces wave after wave of false-positive vulnerabilities. It has
become, and is poised to remain, about empowering developers to fix issues
early, in context, and without slowing delivery. ... Another considerable
battleground is identity. With reliance on distributed microservices, each
component acts as both client and server, so misconfigured identity providers
or weak token validation logic open the door to lateral movement and multiply
attack opportunities. Without naming names, there are plenty of cases
illustrating how breaches can occur through token forgery or authorization
header manipulation. Exposed APIs and shadow services are additional headaches.
Developers create new endpoints, and because of the fast pace of development,
those endpoints can easily escape scrutiny. This underscores the importance of
continuous discovery and dynamic testing that will “catch” such endpoints and
ensure they’re covered when securing the development process.

The Hidden Cost of Complexity: Managing Technical Debt Without Losing Momentum

Outdated, fragmented, or overly complex systems become the digital equivalent
of cognitive noise. They consume bandwidth, blur clarity, and slow down both
decision-making and delivery. What should be a smooth flow from idea to
outcome becomes a slog. ... In short, technical debt introduces a constant,
low-grade drag on agility. It limits responsiveness. It multiplies cost. And
like visual clutter, it contributes to fatigue, especially for architects,
engineers, and teams tasked with keeping transformation moving. So what can we
do?

Assess System Health: Inventory your landscape and identify outdated systems,
high-maintenance assets, and unnecessary complexity. Use KPIs like total cost
of ownership, incident rates, and integration overhead.

Prioritize for Renewal or Retirement: Not everything needs to be modernized.
Some systems need replacement; others need thoughtful containment. The key is
intentionality.

... Technical debt is a measure of how much operational risk and complexity is
lurking beneath the surface. It’s not just code held together by duct tape, or
documentation gaps; it’s how those issues accumulate and affect business
outcomes. But not all technical debt is created equal. In fact, some debt is
strategic: it enables agility, unlocks short-term wins, and helps
organizations experiment quickly.
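
As a rough illustration of the assess-then-prioritize steps, here is a minimal
Python sketch that scores a hypothetical inventory on the three KPIs named above
and sorts each system into retire, renew (modernize or replace), contain, or
keep. The system names, the `business_value` field, the equal KPI weighting, and
the thresholds are all assumptions made for the example, not a method from the
article.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class System:
    name: str
    annual_tco: float            # total cost of ownership per year
    incidents_per_quarter: float
    integrations: int            # rough proxy for integration overhead
    business_value: float        # 0.0 (none) to 1.0 (critical); a business judgment


def burden(system: System, inventory: list[System]) -> float:
    """Average of the three KPIs, each scaled against the worst value in the inventory."""
    max_tco = max(s.annual_tco for s in inventory) or 1
    max_inc = max(s.incidents_per_quarter for s in inventory) or 1
    max_int = max(s.integrations for s in inventory) or 1
    return (
        system.annual_tco / max_tco
        + system.incidents_per_quarter / max_inc
        + system.integrations / max_int
    ) / 3


def triage(inventory: list[System], retire_below: float = 0.3,
           renew_above: float = 0.6, contain_above: float = 0.3) -> dict[str, str]:
    """Hypothetical thresholds: low-value systems retire, heavy ones get renewed."""
    decisions = {}
    for s in inventory:
        b = burden(s, inventory)
        if s.business_value < retire_below:
            decisions[s.name] = "retire"
        elif b >= renew_above:
            decisions[s.name] = "renew"
        elif b >= contain_above:
            decisions[s.name] = "contain"
        else:
            decisions[s.name] = "keep"
    return decisions
```

With an invented four-system inventory, a costly, incident-prone billing system
would come out as "renew", a low-value portal as "retire", and a moderately
burdensome CRM as "contain". The point is the intentionality the article asks
for: each outcome follows from stated criteria rather than from habit.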

The Cost Conundrum of Cloud Computing

When exploring cloud pricing structures, the initial costs may seem quite
attractive, but on closer examination of the details, certain aspects may
become cloudy. The pricing tiers add a layer of complexity, which means there
isn’t a single recurring cost to add to the balance sheet. Rather, cloud fees
vary depending on the provider, the features, and several usage factors, such
as on-demand use, data transfer volumes, technical support, bandwidth, disk
performance, and other core metrics, all of which can influence the overall
solution’s price. The good news, however, is that there are ways to gain
control of and manage these costs. ... Whilst understanding the costs
associated with using a public cloud solution is critical, it is important to
emphasise that modern cloud platforms provide robust, comprehensive and
cutting-edge technologies and solutions to help drive businesses forward. Cloud
platforms provide a strong foundation of physical infrastructure, robust
platform-level services, and a wide array of resilient connectivity and data
solutions. In addition, cloud providers continually invest in the security of
their solutions, physically and logically securing the hardware and software
layers with access control, monitoring tools, and stringent data security
measures to keep data safe.
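
To make the point about usage-based, tiered pricing concrete, here is a minimal
Python sketch of a monthly estimate. Every rate and tier boundary below is a
made-up placeholder, not any provider’s actual price list, and billing support
as a fraction of spend is likewise an assumption. The sketch only shows how
compute hours, tiered data transfer, storage, and support combine into one bill.

```python
# All prices are hypothetical placeholders, not any provider's real rates.
# Egress is tiered: (GB covered by this tier, price per GB).
EGRESS_TIERS = [(10_000, 0.09), (40_000, 0.085), (float("inf"), 0.07)]


def tiered_cost(quantity: float, tiers) -> float:
    """Charge each slice of usage at the price of the tier it falls into."""
    cost = 0.0
    remaining = quantity
    for tier_size, price in tiers:
        if remaining <= 0:
            break
        used = min(remaining, tier_size)
        cost += used * price
        remaining -= used
    return cost


def monthly_estimate(compute_hours, hourly_rate, egress_gb, storage_gb,
                     storage_rate_per_gb, support_fraction):
    """Combine several usage-driven line items into one monthly figure."""
    compute = compute_hours * hourly_rate
    egress = tiered_cost(egress_gb, EGRESS_TIERS)
    storage = storage_gb * storage_rate_per_gb
    subtotal = compute + egress + storage
    # Assumption: support is billed as a fraction of usage spend.
    support = subtotal * support_fraction
    return {"compute": compute, "egress": egress, "storage": storage,
            "support": support, "total": subtotal + support}


bill = monthly_estimate(720, 0.10, 15_000, 500, 0.02, 0.10)
print(round(bill["total"], 2))
```

Because the egress charge is tiered, doubling data transfer from 15,000 GB to
30,000 GB raises that line from 1,325 to 2,600 rather than 2,650 under these
placeholder rates, one small reason a single recurring figure is hard to pin
down.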

Operating in the light, and in the dark (net)

While the takedown of sites hosting CSA cannot be directly described in the
same light, the issue is ramping up. The Internet continues to expand, like the
universe, and attempting to monitor it is a never-ending challenge. As IWF’s
Sexton puts it: “Right now, the Internet is so big that it’s sort of anonymity
with obscurity.” Some emerging (and already emerged) technologies such as AI
can assist those working on the side of the light: for example, the IWF has
tested using AI for triage when assessing websites with thousands of images,
and AI can be trained for content moderation by industry and others. Yet the
proliferation of AI has also added to the problem. AI-generated content has now
entered the scene. From a legality standpoint, it is treated the same as CSA
content. Just because an AI created it does not mean that it’s permitted, at
least in the UK, where the IWF primarily operates. “The legislation in the UK is
robust enough to cover both real material, photo-realistic synthetic content,
or sheerly synthetic content. The problem it does create is one of quantity.
Previously, to create CSA, it would require someone to have access to a child
and conduct abuse.

“Then with the rise of the Internet we also saw an increase in self-generated
content. Now, AI has the ability to create it without any contact with a child
at all. People now have effectively an infinite ability to generate this
content.”

Why LLM applications need better memory management

Developers assume generative AI-powered tools are improving dynamically:
learning from mistakes, refining their knowledge, adapting. But that’s not how
it works. Large language models (LLMs) are stateless by design. Each request is
processed in isolation unless an external system supplies prior context. That
means “memory” isn’t actually built into the model; it’s layered on top, often
imperfectly. ... Some LLM applications have the opposite problem: not
forgetting too much, but remembering the wrong things. Have you ever told
ChatGPT to “ignore that last part,” only for it to bring it up later anyway?
That’s what I call “traumatic memory,” when an LLM stubbornly holds onto
outdated or irrelevant details, actively degrading its usefulness. ... To build
better LLM memory, applications need three things.

Contextual working memory: actively managed session context, with message
summarization and selective recall to prevent token overflow.

Persistent memory systems: long-term storage that retrieves based on relevance,
not raw transcripts. Many teams use vector-based search (e.g., semantic
similarity on past messages), but relevance filtering is still weak.

Attentional memory controls: a system that prioritizes useful information while
fading outdated details. Without this, models will either cling to old data or
forget essential corrections.
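
The memory layers can be sketched in a few dozen lines of Python. This is a
minimal illustration under loud assumptions: token counts are approximated by
word counts, “summarization” just keeps the first few words of a message (a
real system would call a model), and relevance is keyword overlap standing in
for the vector-based semantic similarity mentioned above. All class and
function names are hypothetical. Memories decay with a configurable half-life,
and storing a fact under an existing key replaces it, so a correction
supersedes the outdated version instead of lingering.

```python
from __future__ import annotations

from dataclasses import dataclass


def count_tokens(text: str) -> int:
    # Assumption: a whitespace word count stands in for a real tokenizer.
    return len(text.split())


def gist(message: str, words: int = 5) -> str:
    # Placeholder for LLM summarization: keep only the first few words.
    return " ".join(message.split()[:words])


class WorkingMemory:
    """Session context kept under a token budget by folding old turns into gists."""

    def __init__(self, budget: int):
        self.budget = budget
        self.gists: list[str] = []
        self.turns: list[str] = []

    def tokens(self) -> int:
        return sum(count_tokens(t) for t in self.gists + self.turns)

    def add(self, message: str) -> None:
        self.turns.append(message)
        # Summarize the oldest turns first, keeping the newest turn verbatim.
        while self.tokens() > self.budget and len(self.turns) > 1:
            self.gists.append(gist(self.turns.pop(0)))
        # If the gists alone still overflow, let the oldest ones fade out entirely.
        while self.tokens() > self.budget and self.gists:
            self.gists.pop(0)

    def context(self) -> list[str]:
        prefix = ["Earlier: " + "; ".join(self.gists)] if self.gists else []
        return prefix + self.turns


@dataclass
class Memory:
    text: str
    created: float  # timestamp in seconds


class LongTermMemory:
    """Keyed long-term store; recall ranks by relevance times a recency decay."""

    def __init__(self, half_life: float):
        self.half_life = half_life
        self.items: dict[str, Memory] = {}

    def remember(self, key: str, text: str, now: float) -> None:
        # Storing under an existing key replaces the old fact, so corrections win.
        self.items[key] = Memory(text, now)

    def forget(self, key: str) -> None:
        # An explicit "ignore that" removes the memory instead of letting it resurface.
        self.items.pop(key, None)

    def recall(self, query: str, now: float, k: int = 2) -> list[str]:
        query_words = set(query.lower().split())
        scored = []
        for memory in self.items.values():
            words = set(memory.text.lower().split())
            union = query_words | words
            # Jaccard keyword overlap, a crude stand-in for semantic similarity.
            relevance = len(query_words & words) / len(union) if union else 0.0
            # Halve a memory's weight every half_life seconds.
            decay = 0.5 ** ((now - memory.created) / self.half_life)
            if relevance > 0:
                scored.append((relevance * decay, memory.text))
        scored.sort(reverse=True)
        return [text for _, text in scored[:k]]
```

Persisting the long-term store and swapping keyword overlap for embedding
similarity would be natural next steps; both are left out to keep the sketch
self-contained.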

DARPA’s Quantum Benchmarking Initiative: A Make-or-Break for Quantum Computing

While the hype around quantum computing is certainly warranted, it is often
blown out of proportion. Occasionally, this stems from a lack of fundamental
understanding of the field. More often, however, it is a consequence of
corporations obfuscating or misrepresenting facts to influence the stock
market and raise capital. ... If it becomes practically applicable, quantum
computing will bring a seismic shift in society, completely transforming areas
such as medicine, finance, agriculture, energy, and the military, to name a
few. Nonetheless, this enormous potential has fueled rampant hype, while also
drawing bad actors seeking to take advantage of a technology not necessarily
well understood by the general public. On the other hand, negativity around the
technology can also swing the pendulum in the other direction. ... Quantum
computing is at a critical juncture. Whether it reaches its promised potential
or disappears into the annals of history, like many technologies before it,
will be decided in the coming years. As such, a transparent and sincere
approach to quantum computing research that leads to practically useful
applications will inspire public confidence, while false and half-baked claims
will deter investment in the field, eventually leading to its demise.

The reality check every CIO needs before seeking a board seat

“CIOs think technology will get them to the boardroom,” says Shurts, who has
served on multiple public- and private-company boards. “Yes, more boards want
tech expertise, but you have to provide the right knowledge, breadth, and
depth on topics that matter to their businesses.” ... Herein lies another
conundrum for CIOs seeking spots on boards. Many see those findings and think
they can help with that. But the context matters more. “In your operational
role as a CIO, you’re very much involved in the details, solving problems every
day,” Zarmi says. “On the board, you don’t solve the problems. You help, coach,
mentor, ask questions, make suggestions, and impart wisdom, but you’re not
responsible for execution.” That’s another shift IT leaders need to make to
position themselves for board seats. Luckily, there are tools that can help
them make the leap. Quinlan, for example, earned a certification from the
National Association of Corporate Directors (NACD), which offers a variety of
resources for aspiring board members. And he went a few steps further by
earning a financial certification. Sure, he’d been involved in P&L management,
but the certification helped him understand finance at the board’s altitude.
He also added a cybersecurity certification, even though he runs
multi-hundred-million-dollar cyber programs. “Right, but I haven’t run it at
the board, and I wanted to do that,” he says.

Applying the OODA Loop to Solve the Shadow AI Problem

Organizations should have complete visibility of their AI model inventory.
Inconsistent network visibility, arising from siloed networks, a lack of
communication between security and IT teams, and point solutions, encourages
shadow AI. Complete network visibility must therefore become a priority, so
that organizations can clearly see the extent and nature of shadow AI in their
systems, thereby supporting compliance, reducing risk, and encouraging
responsible AI use without hindering innovation. ... Once shadow AI has been
discovered, organizations need to identify its effect, including the risks and
advantages of such shadow software. ... Organizations must set clearly defined
yet flexible policies on the acceptable use of AI so that employees can use AI
responsibly. Such policies need to allow granular control, ranging from binary
approval to more sophisticated levels, such as granting access based on users’
roles and responsibilities, limiting or enabling certain functionalities within
an AI tool, or specifying data-level approvals so that sensitive data can be
processed only in approved environments. ... Organizations must evaluate shadow
AI tools and formally incorporate those offering substantial value, so that
they are used in secure and compliant environments. Access controls need to be
tightened to prevent unapproved installations; zero trust and privilege
management policies can help in this regard.
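
The granular policy levels described above (binary approval, role-based access,
per-feature limits, and data-level approvals) can be expressed as a small rule
check. The following Python sketch is hypothetical: the tool names, roles,
feature names, and environment labels are invented for illustration, and
unknown tools are denied and flagged by default, in keeping with the zero trust
approach mentioned above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToolPolicy:
    approved: bool                                              # binary approval
    allowed_roles: set[str] = field(default_factory=set)        # empty means any role
    blocked_features: set[str] = field(default_factory=set)     # per-feature limits
    sensitive_data_envs: set[str] = field(default_factory=set)  # data-level approvals


@dataclass
class Request:
    tool: str
    role: str
    feature: str
    environment: str
    sensitive_data: bool


def evaluate(request: Request, policies: dict[str, ToolPolicy]) -> tuple[bool, str]:
    """Apply the policy levels from coarsest to finest; the first failing check wins."""
    policy = policies.get(request.tool)
    if policy is None:
        # Default deny: a tool nobody registered is treated as shadow AI.
        return False, "unknown tool: flag as shadow AI for review"
    if not policy.approved:
        return False, "tool not approved"
    if policy.allowed_roles and request.role not in policy.allowed_roles:
        return False, "role not permitted"
    if request.feature in policy.blocked_features:
        return False, "feature disabled"
    if request.sensitive_data and request.environment not in policy.sensitive_data_envs:
        return False, "sensitive data outside approved environment"
    return True, "allowed"


# Hypothetical policy table; every name here is invented for the example.
POLICIES = {
    "chat-assistant": ToolPolicy(
        approved=True,
        allowed_roles={"engineer", "analyst"},
        blocked_features={"file_upload"},
        sensitive_data_envs={"private-tenant"},
    ),
    "image-generator": ToolPolicy(approved=False),
}
```

Ordering the checks from coarse to fine keeps the reason string meaningful: a
request is reported as blocked at the broadest level that rejects it.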

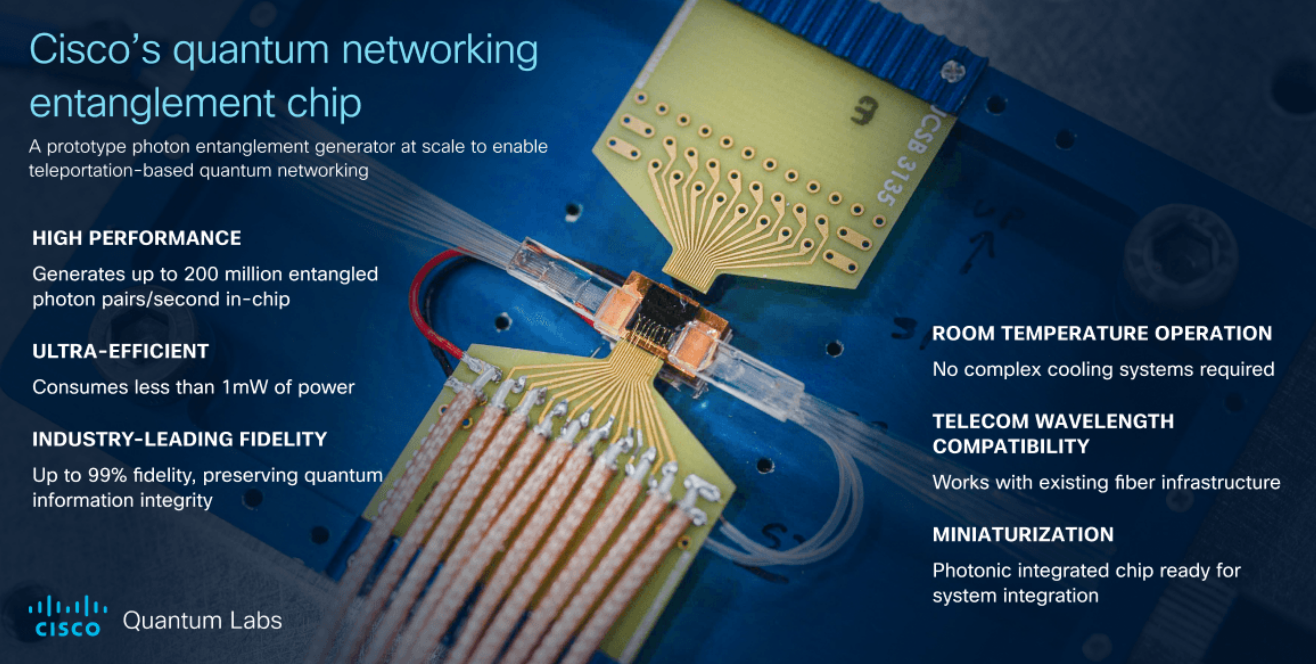

Cisco Pulls Together A Quantum Network Architecture

It will take a quantum network infrastructure to make a distributed quantum
computing environment possible and to allow it to scale more quickly beyond the
relatively small number of qubits found in current and near-future systems,
Cisco scientists wrote in a research paper. Such quantum datacenters involve
“multiple QPUs [quantum processing units] … networked together, enabling a
distributed architecture that can scale to meet the demands of large-scale
quantum computing,” they wrote. “Ultimately, these quantum data centers will
form the backbone of a global quantum network, or quantum internet,
facilitating seamless interconnectivity on a planetary scale.” ... The
entanglement chip will be central to an entire quantum datacenter the vendor is
working toward, including new versions of components found in current
classical networks, such as switches and NICs. “A quantum network requires
fundamentally new components that work at the quantum mechanics level,” they
wrote. “When building a quantum network, we can’t digitize information as in
classical networks – we must preserve quantum properties throughout the entire
transmission path. This requires specialized hardware, software, and protocols
unlike anything in classical networking.”
