How AI is strengthening XDR to consolidate tech stacks

XDR platforms need AI/ML technologies to identify malware-free breach attempts while also looking for signals of attackers relying on legitimate system tools and living-off-the-land (LOTL) techniques to breach endpoints undetected. ...
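To make "signals of attackers relying on legitimate system tools" concrete, here is a minimal rule-based sketch in Python. It is not an AI/ML detector and not any XDR vendor's logic. The event fields (`host`, `image`, `cmdline`) and the three rules are assumptions chosen for illustration. They cover well-known LOTL patterns such as `certutil -urlcache` downloads and PowerShell encoded commands.

```python
import re

# Hypothetical, deliberately non-exhaustive rules: (binary, command-line pattern, reason).
LOTL_RULES = [
    ("certutil.exe", re.compile(r"-urlcache", re.IGNORECASE),
     "certutil used to download a file"),
    ("powershell.exe", re.compile(r"\s-(?:e|enc|encodedcommand)\b", re.IGNORECASE),
     "PowerShell run with an encoded command"),
    ("bitsadmin.exe", re.compile(r"/transfer", re.IGNORECASE),
     "BITS job used to transfer a file"),
]


def lotl_signals(events):
    """Return (host, reason) for each process event matching a LOTL rule.

    Each event is assumed to be a dict with 'host', 'image' (full binary path)
    and 'cmdline' keys, e.g. as normalized from endpoint telemetry.
    """
    hits = []
    for event in events:
        image = event["image"].lower()
        for binary, pattern, reason in LOTL_RULES:
            if image.endswith(binary) and pattern.search(event["cmdline"]):
                hits.append((event["host"], reason))
    return hits


events = [
    {"host": "ws-01", "image": r"C:\Windows\System32\certutil.exe",
     "cmdline": "certutil.exe -urlcache -split -f http://example.com/a.txt a.txt"},
    {"host": "ws-02", "image": r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
     "cmdline": "powershell.exe -ExecutionPolicy Bypass -File backup.ps1"},
    {"host": "ws-03", "image": r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
     "cmdline": "powershell.exe -enc SQBFAFgA"},
]
print(lotl_signals(events))
```

Static rules like these are brittle and noisy: attackers rename binaries and vary arguments. That gap is why the excerpt argues for AI/ML-based detection on top of such signals.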

VentureBeat spoke with several CEOs at RSAC 2023 to learn how each perceives the value of AI in their product strategies today and in the future. Connie Stack, CEO of NextDLP, told VentureBeat, “AI and machine learning can significantly enhance data loss prevention by adding intelligence and automation to detecting and preventing data loss. AI and machine learning algorithms can analyze patterns in data and detect anomalies that may indicate a security breach or unauthorized access to sensitive information well before any policy violation occurs.” XDR providers tell VentureBeat that their immediate architectural requirements are parsing an exponentially growing volume of telemetry data, enriching that telemetry and mapping data to a schema. They also need real-time cross-collaboration, analytics and alert prioritization. XDR’s current and future ecosystem depends on AI’s continued growth.
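As a rough illustration of the anomaly detection Stack describes, the Python sketch below flags users whose daily outbound data volume sits far above their own historical baseline. It uses a plain z-score rather than machine learning. The per-user byte counts and the threshold of 3 standard deviations are assumptions for illustration, not NextDLP's method.

```python
from statistics import mean, stdev


def flag_anomalies(baseline, observed, threshold=3.0):
    """Flag users whose observed value is more than `threshold` standard
    deviations above the mean of their own history.

    baseline: dict of user -> list of past daily outbound byte counts (hypothetical metric)
    observed: dict of user -> today's outbound byte count
    Returns a list of (user, value, z_score) tuples.
    """
    alerts = []
    for user, value in observed.items():
        history = baseline.get(user, [])
        if len(history) < 2:
            continue  # not enough history to estimate spread
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any increase is unusual.
            if value > mu:
                alerts.append((user, value, float("inf")))
            continue
        z = (value - mu) / sigma
        if z > threshold:
            alerts.append((user, value, round(z, 1)))
    return alerts


baseline = {
    "alice": [100, 120, 110, 90, 105],
    "bob": [200, 220, 210, 190, 205],
}
observed = {"alice": 115, "bob": 5000}
print(flag_anomalies(baseline, observed))
```

A z-score assumes fairly stable behavior per user. Production DLP and XDR systems layer richer models and context on top, which is the point the quote makes.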

10 ways generative AI will transform software development

The ability to prompt for code adds risk if the generated code has security issues or defects, or introduces performance problems. The hope is that if coding becomes easier and faster, developers will have more time, responsibility, and better tools for validating code before it gets embedded in applications. But will that happen? “As developers adopt AI for productivity benefits, there’s a required responsibility to gut-check what it produces,” says Peter McKee, head of developer relations at Sonar. “Clean as you code ensures that by performing checks and continuous monitoring during the delivery process, developers can spend more time on new tasks rather than remediating bugs in human-created or AI-generated code.” CIOs and CISOs will expect developers to perform more code validation, especially if AI-generated code introduces significant vulnerabilities. ... Another implication of code developed with genAI concerns how enterprise leaders develop policies and monitor the supply chain of code embedded in enterprise applications. Until now, organizations were most concerned about tracking open source and commercial software components, but genAI adds new dimensions.
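McKee's "clean as you code" idea is that checks concentrate on the code being changed. New problems, whether human-written or AI-generated, are caught before merge without blocking on legacy debt. The Python sketch below illustrates that idea under stated assumptions. The `Issue` record, the severity labels and the `changed_lines` map are hypothetical stand-ins for what a static analyzer and a diff would supply. This is not Sonar's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Issue:
    """A finding from some static analyzer (hypothetical shape)."""
    file: str
    line: int
    severity: str  # illustrative labels: "high", "medium", "low"
    message: str


def new_code_gate(issues, changed_lines, blocking=frozenset({"high"})):
    """Pass/fail a change based only on issues in lines it touched.

    changed_lines maps file path -> set of line numbers added or modified
    in this change (e.g. derived from a diff). Issues elsewhere are
    pre-existing and do not block the merge.
    Returns (passed, blocking_issues).
    """
    in_new_code = [
        issue for issue in issues
        if issue.line in changed_lines.get(issue.file, set())
    ]
    failures = [issue for issue in in_new_code if issue.severity in blocking]
    return (not failures, failures)


issues = [
    Issue("app/db.py", 42, "high", "SQL query built by string concatenation"),
    Issue("legacy/auth.py", 7, "high", "hard-coded credential"),
    Issue("app/db.py", 43, "low", "unused variable"),
]
changed = {"app/db.py": {41, 42, 43}}
passed, failures = new_code_gate(issues, changed)
print(passed, [f.message for f in failures])
```

The legacy finding still needs fixing eventually. The gate only stops a change from adding to the pile, whether the new lines were typed or prompted.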

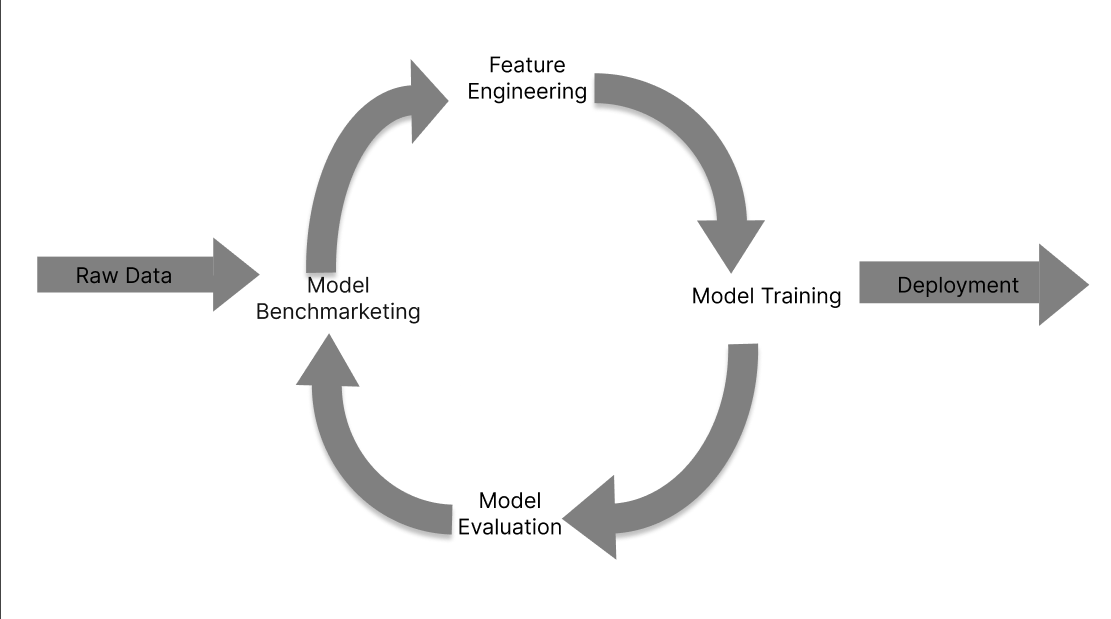

Agile Methodologies In The Era Of Machine Learning Development

Both emphasize adaptability and continuous improvement, providing a solid foundation for building robust ML models. The iterative cycles of Agile resonate with the constant refinement required in ML algorithms, fostering an environment conducive to experimentation and learning. Bringing together Agile and Machine Learning (ML) is like mixing the best of teamwork with smart strategies for computer programs. Agile is a way of working that’s flexible and can adapt quickly, and ML is all about machines learning from data. When they come together, it’s like using a super-smart, flexible approach to make really cool and smart computer programs ... Everyone has a special skill: some friends are good at building, and others are good at deciding what the robot dog should do. This teamwork also helps if you discover something new, like a better way for the robot dog to move. Agile allows you to quickly change and improve, just like trying a new game. ... Unlike traditional software, ML projects grapple with inherent uncertainties in data and model outcomes, requiring a more adaptive approach. Navigating these uncertainties is paramount when incorporating Agile principles.

The AI data-poisoning cat-and-mouse game — this time, IT will win

The offensive technique works in one of two ways. One, it tries to target a specific company by making educated guesses about the kinds of sites and material that company would want to train its LLMs on. The attackers then target not that specific company but the many places it is likely to go for training data. If the target is, say, Nike or Adidas, the attackers might try to poison the databases at various university sports departments with high-profile sports teams. If the target were Citi or Chase, the bad guys might target databases at key Federal Reserve sites. The problem is that both ends of that attack plan could easily be thwarted. The university sites might detect and block the manipulation efforts. To make the attack work, the inserted data would likely have to include malware executables, which are relatively easy to detect. Even if the bad actors’ goal was simply to feed incorrect data into the target systems, which would in theory make their analysis flawed, most LLM training absorbs such a massive number of datasets that the attack is unlikely to work well.

What Is API Sprawl and Why Is It Important?

Inconsistencies between APIs can stunt the developer experience around integration. For example, many different design paradigms are used in modern API development, including SOAP, REST, gRPC and more asynchronous formats like webhooks or Kafka streams. An organization might adopt various styles simultaneously. Mixing API styles lets teams pick the best-of-breed option for the task at hand. That said, style inconsistencies can make it challenging for a single developer to navigate disparate components without guidance. ... As cybersecurity experts often say, you can’t secure what you don’t know. Amid technology sprawl, you likely won’t be aware of the hundreds, if not thousands, of APIs being developed and consumed daily. Without inventory management, APIs can fall out of sight and rot. API sprawl can also lead to insecure coding practices. Security researchers at Escape recently found 18,000 high-risk API-related secrets and tokens after performing a scan of the web. ... Lifecycle management can also suffer with sprawl. If API versioning and retirement schedules aren’t communicated effectively, the result can easily be breaking changes on the client side.
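The point that "you can’t secure what you don’t know" usually translates into reconciling a documented API inventory against what is actually observed in traffic. The Python sketch below assumes both sides can be reduced to (HTTP method, path) pairs, for example from an API catalog and from gateway logs. The data and the category names are hypothetical.

```python
def reconcile_inventory(documented, observed):
    """Compare documented API endpoints with endpoints seen in live traffic.

    Both arguments are iterables of (method, path) tuples.
    Returns three sorted lists:
      shadow  - seen in traffic but missing from the inventory (unknown to security)
      unused  - documented but never seen (candidates for review or retirement)
      tracked - present in both
    """
    documented, observed = set(documented), set(observed)
    return {
        "shadow": sorted(observed - documented),
        "unused": sorted(documented - observed),
        "tracked": sorted(documented & observed),
    }


catalog = [("GET", "/v1/orders"), ("POST", "/v1/orders"), ("GET", "/v1/legacy-report")]
gateway_log = [("GET", "/v1/orders"), ("POST", "/v1/orders"), ("GET", "/internal/debug/users")]
report = reconcile_inventory(catalog, gateway_log)
for category, endpoints in report.items():
    print(category, endpoints)
```

In practice, paths need normalizing before comparison. For example, `/v1/orders/123` and `/v1/orders/456` would map to one templated route. That step is omitted here.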

Rise in cyberwarfare tactics fueled by geopolitical tensions

There are a number of ways in which public-private partnerships can be effective in addressing cybersecurity threats. First, governments and private companies can share information about cyber threats and vulnerabilities, which can help improve the overall security posture of both the public and private sectors. Second, governments and private companies can develop joint cybersecurity initiatives. These initiatives can focus on a variety of areas, such as developing new security technologies, improving incident response capabilities, or providing cybersecurity training to employees. Third, governments and private companies can collaborate on research and development efforts, which can help identify new cybersecurity threats and develop new ways to protect against them. One caveat when talking about public-private partnerships: what is needed is real, operational and ongoing collaboration. That kind of collaboration is essential for sharing information, developing best practices and mitigating risks, and for building a more secure and resilient cyber ecosystem.

New media could bring fresh competition to tape archive market

Glass is becoming another alternative to tape. Microsoft's Project Silica uses femtosecond lasers to write data to quartz glass and "polarization-sensitive microscopy using regular light to read," according to Microsoft. Another company, Cerabyte, uses lasers to etch patterns into ceramic nanocoatings on glass. Ceramic is resistant to heat, moisture, corrosion, UV light, radiation and electromagnetic pulse blasts. Ceramic also has another advantage over tape: its high durability leads to fewer refresh cycles, according to Martin Kunze, chief marketing officer and co-founder of Cerabyte, a startup headquartered in Munich. "Tape has limited durability and needs to be either refreshed or all migrated onto new formats," Kunze said. This undertaking is expensive and time-consuming, he said. Kunze added that tape is vulnerable to vertical market failure: Western Digital is the only company manufacturing the reading and writing heads for tape. "Assume there is a decision on the board: 'We don't [want to] run this company anymore because it doesn't bring in as much revenue,'" he said. That single point of failure could leave enterprises in the lurch. He sees another problem with tape: it's stodgy.

Apache Pekko: Simplifying Concurrent Development With the Actor Model


In the actor model, actors communicate by sending messages to each other without transferring the thread of execution. This non-blocking communication enables actors to accomplish more in the same amount of time than they could with traditional method calls. Actors behave similarly to objects in that they react to messages and return execution when they finish processing the current message. Upon receiving a message, an actor can take three fundamental actions: send a finite number of messages to actors it knows; create a finite number of new actors; and designate the behavior to be applied to the next message. ... Pekko has a modular design, with different modules providing extensibility. The main components are: Pekko Persistence, which enables actors to persist events for recovery on failure or during migration within a cluster and provides abstractions for developing event-sourced applications; and the Pekko Streams module, which provides a solution for stream processing, incorporating back-pressure handling seamlessly and ensuring interoperability with other Reactive Streams implementations...
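To illustrate the three actions an actor can take on each message (send, create, and designate the next behavior), here is a toy single-threaded actor runtime in Python. It is not Pekko's API, which targets Scala and Java. Names such as `tell` and `spawn` loosely echo actor-system vocabulary, and everything here is hypothetical. Real actor systems give each actor its own mailbox and run actors concurrently. This sketch uses one shared queue so the example stays deterministic.

```python
from collections import deque


class ActorSystem:
    """Toy runtime: a single queue of (actor, message) deliveries."""

    def __init__(self):
        self._queue = deque()

    def spawn(self, behavior):
        """Create an actor whose first behavior is `behavior`."""
        return ActorRef(self, behavior)

    def run(self):
        """Deliver messages one at a time until there is no work left."""
        while self._queue:
            actor, message = self._queue.popleft()
            actor._receive(message)


class ActorRef:
    def __init__(self, system, behavior):
        self._system = system
        self._behavior = behavior

    def tell(self, message):
        # Non-blocking send: enqueue and return; the sender keeps its thread.
        self._system._queue.append((self, message))

    def spawn(self, behavior):
        # Create a new actor from inside a behavior.
        return self._system.spawn(behavior)

    def _receive(self, message):
        # A behavior returns the behavior for the next message (or None to keep it).
        next_behavior = self._behavior(self, message)
        if next_behavior is not None:
            self._behavior = next_behavior


log = []


def printer(ctx, message):
    log.append(message)


def counting(count):
    def behavior(ctx, message):
        if message == "inc":
            return counting(count + 1)    # designate the next behavior
        if message == "report":
            child = ctx.spawn(printer)    # create a new actor
            child.tell(f"count={count}")  # send it a message
        return None
    return behavior


system = ActorSystem()
counter = system.spawn(counting(0))
for msg in ("inc", "inc", "report"):
    counter.tell(msg)
system.run()
print(log)  # ['count=2']
```

State lives in the current behavior (`counting(2)` after two increments) rather than in mutable fields. This mirrors the "designate the behavior for the next message" action described above.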

How Can Synthetic Data Impact Data Privacy in the New World of AI

Data from the real world is often inherently biased. This is because the data used to train models is largely gathered from across the internet, reflecting biases present in society and in the socio-economic groups prevalent in the social media spaces used to gather this data. Data scientists have turned to synthetic data and ‘Digital Humans’ to combat these biases. With Digital Humans, data scientists can vary elements of ‘Digital DNA,’ such as ethnicity, size, and clothing, and mix the results with real-world data to create more representative and diverse datasets. This also avoids the image-rights issues and PII exposure that could come from using images and footage of real people. Mindtech worked with a construction company that wanted to develop autonomous site vehicles. The company wanted to enhance these vehicles’ safety and gather a broader range of data to train them. It therefore used synthetic data to create diverse datasets that trained the vehicles to identify people on site regardless of size, shape, sex, ethnicity or clothing, so the vehicles could stop their journey if someone were blocking their way.
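One way to read "vary elements of Digital DNA" is as systematic coverage of attribute combinations, so that no combination is under-represented. The Python sketch below generates render specifications that cycle evenly through every combination. The attribute names and values are hypothetical, and this is not Mindtech's pipeline. Mixing the resulting synthetic samples with real-world data, as the excerpt describes, would be a separate step.

```python
from itertools import cycle, islice, product


def balanced_specs(attributes, n):
    """Return n specs that cycle evenly through every attribute combination.

    attributes maps an attribute name to its list of possible values.
    When n is a multiple of the number of combinations, each combination
    appears exactly n / combinations times.
    """
    names = sorted(attributes)
    combos = [
        dict(zip(names, values))
        for values in product(*(attributes[name] for name in names))
    ]
    # Copy each dict so repeated combinations are independent objects.
    return [dict(spec) for spec in islice(cycle(combos), n)]


# Hypothetical "Digital DNA" attributes for people rendered on a construction site.
attributes = {
    "body_size": ["small", "medium", "large"],
    "clothing": ["hi-vis vest", "jacket"],
    "pose": ["standing", "crouching"],
}
specs = balanced_specs(attributes, 24)
print(len(specs), specs[0])
```

Even coverage is only a starting point. A team might weight rare but safety-critical combinations more heavily, such as a crouching person in dark clothing.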

The Great Superapp Dilemma: Business Ambitions vs User Privacy

If we put privacy aside for a moment, the benefits of a possible superapp cannot be denied. We could say goodbye to the hundreds of online accounts, each operating as an isolated silo managed by unrelated services and domains, and to the chore of updating account details across them all, one by one. As well as promising a much simpler user experience through a single application, a superapp would unlock convenient new services using a broader set of data and allow for increased innovation that adds value for users, such as unified health metrics, consolidated banking services, cohesive government-related accounts, integrated social networks, or unified marketplaces. However, managing vast volumes of accessible data (which has grown excessively since the era of big data and will no doubt keep growing with the advent of AI) is operationally challenging, to say the least. ... With these concerns in mind, companies working on superapp development must address issues including managing and recovering from identity theft, securing data against breaches, and ensuring that data access aligns with the user’s consented sharing policy.
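The last requirement, data access that follows the user’s consented sharing policy, can be sketched as a deny-by-default check. In the Python below, the `ConsentRegistry` class, the service names and the data categories are hypothetical. A real superapp would also need audit logging, consent expiry and identity verification, which are omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Tracks which (service, data category) pairs each user has agreed to share."""
    grants: dict[str, set[tuple[str, str]]] = field(default_factory=dict)

    def grant(self, user_id: str, service: str, category: str) -> None:
        self.grants.setdefault(user_id, set()).add((service, category))

    def revoke(self, user_id: str, service: str, category: str) -> None:
        self.grants.get(user_id, set()).discard((service, category))

    def can_access(self, user_id: str, service: str, category: str) -> bool:
        # Deny by default: only an explicit, unrevoked grant allows access.
        return (service, category) in self.grants.get(user_id, set())


registry = ConsentRegistry()
registry.grant("user-1", "banking", "transactions")
print(registry.can_access("user-1", "banking", "transactions"))  # True
print(registry.can_access("user-1", "health", "heart_rate"))     # False
registry.revoke("user-1", "banking", "transactions")
print(registry.can_access("user-1", "banking", "transactions"))  # False
```

Keeping the check in one place means every service in the superapp asks the same question. Otherwise, each service would re-implement its own interpretation of consent.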

Quote for the day:

"Effective questioning brings insight, which fuels curiosity, which cultivates wisdom." -- Chip Bell
