How Banks Can Turn Risk Into Reward Through Data Governance

To understand why data governance is critical for banks, we must understand the

underlying challenges facing financial services organizations as they modernize.

Rolling out new cloud applications or Internet of Things (IoT) devices into an

environment where legacy on-premises systems are already in place means more

data silos and data sets to manage. Often, this results in data volumes,

variety, and velocity increasing much too quickly for banks. This gives rise to

IT complexity—driven by technical debt or the reliance on systems cobbled

together and one-off connections. Not only that, it also raises the specter of

'shadow IT' as employees look for workarounds to friction in executing tasks.

This can create difficulties for banks trying to identify and manage their data

assets in a consistent, enterprise-wide way that is aligned with business

strategy. Ultimately, barely controlled data leads to errant financial

reporting, data privacy breaches, and non-compliance with consumer data

regulations. Failing to counter these risks can lead to fines, hurt brand image,

and trigger lost sales.

Key Considerations for Developing Organizational Generative AI Policies

It's crucial to ensure that all relevant stakeholders have a voice in the

process, both to make the policy comprehensive and actionable and to ensure

adherence to legal and ethical standards. The breadth and depth of

stakeholders involved will depend on the organizational context, such as

regulatory/legal requirements, the scope of AI usage and the potential risks

associated (e.g., ethics, bias, misinformation). Stakeholders offer technical

expertise, ensure ethical alignment, provide legal compliance checks, offer

practical operational feedback, collaboratively assess risks, and jointly

define and enforce guiding principles for AI use within the organization. Key

stakeholders—ranging from executive leadership, legal teams and technical

experts to communication teams, risk management/compliance and business group

representatives—play crucial roles in shaping, refining and implementing the

policy. Their contributions ensure legal compliance, technical feasibility and

alignment with business and societal values.

CIOs sharpen cloud cost strategies — just as gen AI spikes loom

One key skill CIOs are honing to lower costs is their ability to negotiate

with cloud providers, said one CIO who declined to be named. “People better

understand the charges, and [they] better negotiate costs. After being in

cloud and leveraging it better, we are able to manage compute and storage

better ourselves,” said the CIO, who notes that vendors are not cutting costs

on licenses or capacity but are offering more guidance and tools. “After some

time, people have understood the storage needs better based on usage and

preventing data extract fees.” Thomas Phelps, CIO and SVP of corporate

strategy at Laserfiche, says cloud contracts typically include several

“gotchas” that IT leaders and procurement chiefs should be aware of, and he

stresses the importance of studying terms of use before signing. ... CIOs may

also fall into the trap of misunderstanding product mixes and the downside of

auto-renewals, he adds. “I often ask vendors to walk me through their product

quote and explain what each product SKU or line item is, such as the cost for

an application with the microservices and containerization,” Phelps

says.

Misdirection for a Price: Malicious Link-Shortening Services

Security researchers gave the service the codename "Prolific Puma." They

discovered it by identifying patterns in links being used by some scammers and

phishers that appeared to trace to a common source. The service appears to

have been active since at least 2020 and is regularly used to route victims to

malicious domains, sometimes first via other link-shortening service URLs.

"Prolific Puma is not the only illicit link shortening service that we have

discovered, but it is the largest and the most dynamic," said Renee Burton,

senior director of threat intelligence for Infoblox, in a new report on the

cybercrime service. "We have not found any legitimate content served through

their shortener." Infoblox, a Santa Clara, California-based IT automation and

security company, published a list of 60 URLs it has tied to Prolific Puma's

attacks. The URLs employ such domains as hygmi.com, yyds.is, 0cq.us, 4cu.us

and regz.information. Infoblox said many domains registered by the group are

parked for several weeks before being used, since many reputation-based

security defenses will treat freshly registered domains as more likely to be

malicious.
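
The parking tactic described above can be illustrated with a minimal sketch of a domain-age check. The 30-day window and the function name are assumptions made for illustration; they are not taken from Infoblox's report or from any specific security product.

```python
from datetime import date, timedelta

# Hypothetical cutoff: domains younger than this count as "freshly registered".
NEW_DOMAIN_WINDOW = timedelta(days=30)


def is_freshly_registered(registered_on: date, first_seen_on: date,
                          window: timedelta = NEW_DOMAIN_WINDOW) -> bool:
    """Return True when a domain is first seen in traffic soon after registration."""
    return first_seen_on - registered_on < window


registered = date(2023, 1, 1)

# Used two days after registration: the age check raises suspicion.
used_immediately = is_freshly_registered(registered, date(2023, 1, 3))

# Parked for about six weeks first: the same check no longer fires.
used_after_parking = is_freshly_registered(registered, date(2023, 2, 15))
```

Under these assumptions, a domain that sits idle past the window looks no different from an established one, which is why an age signal alone is easy to wait out.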

DNS security poses problems for enterprise IT

EMA asked research participants to identify the DNS security challenges that

cause them the most pain. The top response (28% of all respondents) is DNS

hijacking. Also known as DNS redirection, this process involves intercepting

DNS queries from client devices so that connection attempts go to the wrong IP

address. Hackers often achieve this by infecting clients with malware so that

queries go to a rogue DNS server, or they hack a legitimate DNS server and

hijack queries at a more massive scale. The latter method can have a large

blast radius, making it critical for enterprises to protect DNS infrastructure

from hackers. The second most concerning DNS security issue is DNS tunneling

and exfiltration (20%). Hackers typically exploit this issue once they have

already penetrated a network. DNS tunneling is used to evade detection while

extracting data from a compromised network. Hackers hide extracted data in outgoing

DNS queries. Thus, it’s important for security monitoring tools to closely

watch DNS traffic for anomalies, like abnormally large packet sizes. The third

most pressing security concern is a DNS amplification attack (20%).
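
As a rough illustration of watching DNS traffic for tunneling anomalies, the sketch below flags query names that are unusually long or that contain a long, random-looking label. It uses name length as a stand-in for the packet-size signal mentioned above. The thresholds and function names are assumptions for illustration, not values from the EMA research, and a real monitor would tune them against its own traffic.

```python
import math
from collections import Counter

# Hypothetical thresholds for illustration only.
MAX_QNAME_LENGTH = 100      # characters in the full query name
MIN_SUSPECT_LABEL = 20      # only inspect labels at least this long
MAX_LABEL_ENTROPY = 3.8     # bits per character


def shannon_entropy(text: str) -> float:
    """Return the Shannon entropy of a string in bits per character."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_like_tunneling(qname: str) -> bool:
    """Flag a query name that is very long or carries a high-entropy label."""
    name = qname.rstrip(".").lower()
    if len(name) > MAX_QNAME_LENGTH:
        return True
    # Skip the last two labels, which in the simple case are the
    # registered domain and the top-level domain.
    subdomain_labels = name.split(".")[:-2]
    return any(
        len(label) >= MIN_SUSPECT_LABEL
        and shannon_entropy(label) > MAX_LABEL_ENTROPY
        for label in subdomain_labels
    )
```

For example, `looks_like_tunneling("www.example.com")` is not flagged, while a name whose leftmost label is a 32-character string of distinct characters is. A heuristic like this produces false positives (some CDNs use long hashed hostnames), so it is better suited to raising alerts for review than to blocking.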

Data governance that works

Once we've found our targeted business initiatives and the data is ready to

meet the needs of those initiatives, there are three major governance pillars

we want to address for that data: understand, curate, and protect. First, we

want to understand the data. That means having a catalog of data that we can

analyze and explain. We need to be able to profile the data, to look for

anomalies, to understand the lineage of that data, and so on. We also want to

curate the data, or make it ready for our particular initiatives. We want to

be able to manage the quality of the data, integrate it from a variety of

sources across domains, and so on. And we want to protect the data, making

sure we comply with regulations and manage the life cycle of the data as it

ages. More importantly, we need to enable the right people to get to the right

data when they need it. AWS has tools, including Amazon DataZone and AWS Glue,

to help companies do all of this. It's really tempting to attack these issues

one by one and to support each individually. But in each pillar, there are so

many possible actions that we can take. This is why it's better to work

backwards from business initiatives.
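
To make the "understand" pillar more concrete, here is a minimal sketch of profiling a single numeric column and looking for anomalies. It uses only the Python standard library and does not represent Amazon DataZone or AWS Glue. The function names and the z-score threshold are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def profile_column(values):
    """Summarize a numeric column; None marks a missing value."""
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": mean(present) if present else None,
    }


def find_outliers(values, z_threshold=2.0):
    """Return values whose z-score exceeds a (hypothetical) threshold."""
    present = [v for v in values if v is not None]
    if len(present) < 2:
        return []
    mu = mean(present)
    sigma = pstdev(present)
    if sigma == 0:
        return []
    return [v for v in present if abs(v - mu) / sigma > z_threshold]


# Hypothetical sample: daily transaction amounts with one gap and one spike.
amounts = [100, 102, 98, 101, None, 99, 100, 5000]
summary = profile_column(amounts)
suspects = find_outliers(amounts)
```

With this sample, the profile reports one missing value and the outlier check returns only the 5000 entry. Note that with very small samples a z-score can never get large, so the threshold here is deliberately low; a production check would more likely use a robust statistic or domain rules.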

EU digital ID reforms should be ‘actively resisted’, say experts

The group’s concerns over the amendments largely centre on Article 45 of the

reformed eIDAS, where it says the text “radically expands the ability of

governments to surveil both their own citizens and residents across the EU by

providing them with the technical means to intercept encrypted web traffic, as

well as undermining the existing oversight mechanisms relied on by European

citizens”. “This clause came as a surprise because it wasn’t about governing

identities and legally binding contracts, it was about web browsers, and that

was what triggered our concern,” explained Murdoch. ... All websites today are

authenticated by root certificates controlled by certificate authorities,

which assure the user that the cryptographic keys used to authenticate the

website content belong to the website. The certificate owner can intercept a

user’s web traffic by replacing these cryptographic keys with ones they

control, even if the website has chosen to use a different certificate

authority with a different certificate. There are multiple cases of this

mechanism having been abused in reality, and legislation to govern certificate

authorities does exist and, by and large, has worked well.

The key to success is to think beyond the obvious, to innovate and look for solutions

AI systems, including machine learning models, make critical decisions and

recommendations. Ensuring the accuracy and reliability of these AI models is

paramount. AI relies heavily on data, and ensuring data quality, integrity, and

consistency is a crucial task. Data pre-processing and validation are

necessary steps to make AI models work effectively. Integration of software

testing in the software development life cycle helps identify and rectify

issues that could lead to incorrect predictions or decisions, minimizing the

risks associated with AI tools. AI models are susceptible to adversarial

attacks, and robust security testing helps identify vulnerabilities and

weaknesses in AI systems, protecting them from cyber threats and ensuring the

safety of automated processes. Testing is not a one-time effort; it’s an

ongoing process. Regular testing and monitoring are necessary to identify

issues that may arise as AI models and automated systems evolve. High-quality,

well-tested AI-driven automation can provide a competitive advantage.

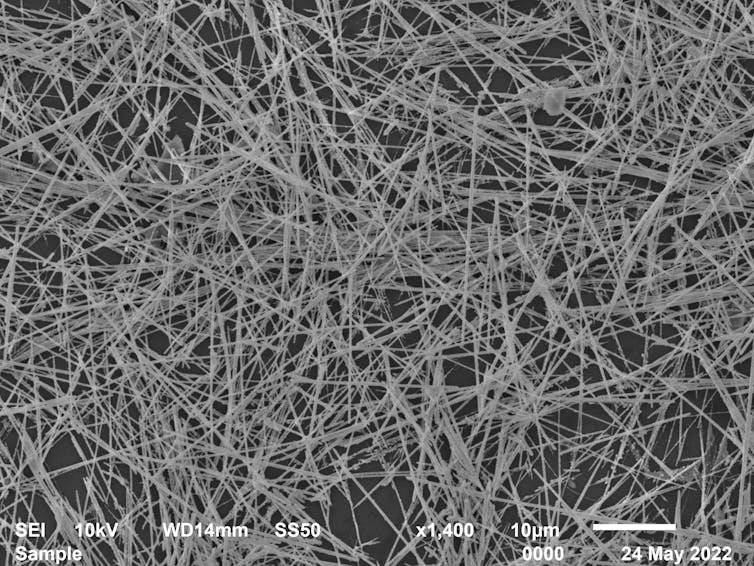

We built a ‘brain’ from tiny silver wires.

We are working on a completely new approach to “machine intelligence”. Instead

of using artificial neural network software, we have developed a physical

neural network in hardware that operates much more efficiently. ... Using

nanotechnology, we made networks of silver nanowires about one thousandth the

width of a human hair. These nanowires naturally form a random network, much

like the pile of sticks in a game of pick-up sticks. The nanowires’ network

structure looks a lot like the network of neurons in our brains. Our research

is part of a field called neuromorphic computing, which aims to emulate the

brain-like functionality of neurons and synapses in hardware. Our nanowire

networks display brain-like behaviours in response to electrical signals.

External electrical signals cause changes in how electricity is transmitted at

the points where nanowires intersect, which is similar to how biological

synapses work. There can be tens of thousands of synapse-like intersections in

a typical nanowire network, which means the network can efficiently process

and transmit information carried by electrical signals.
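
The pick-up sticks picture can be explored with a toy geometric model: scatter straight line segments at random and count where they cross. This is only an illustrative sketch and not the researchers' method. It ignores all electrical behaviour, and the wire length, wire count and function names are assumptions.

```python
import math
import random
from itertools import combinations


def random_wire(rng, length):
    """Return a segment of the given length with a random centre and angle."""
    cx, cy = rng.random(), rng.random()
    angle = rng.uniform(0.0, math.pi)
    dx = 0.5 * length * math.cos(angle)
    dy = 0.5 * length * math.sin(angle)
    return ((cx - dx, cy - dy), (cx + dx, cy + dy))


def _side(p, q, r):
    """Sign of the cross product (q - p) x (r - p): -1, 0 or 1."""
    value = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    return (value > 0) - (value < 0)


def wires_cross(a, b):
    """True when two segments properly cross; touching endpoints are ignored."""
    (p1, p2), (p3, p4) = a, b
    return (_side(p1, p2, p3) * _side(p1, p2, p4) < 0
            and _side(p3, p4, p1) * _side(p3, p4, p2) < 0)


def count_junctions(wires):
    """Count pairwise crossings, the toy stand-in for synapse-like junctions."""
    return sum(1 for a, b in combinations(wires, 2) if wires_cross(a, b))


rng = random.Random(0)  # fixed seed so repeated runs give the same network
wires = [random_wire(rng, 0.3) for _ in range(200)]
junctions = count_junctions(wires)
```

Because every pair of wires is a potential crossing, the number of junctions in this model grows roughly with the square of the number of wires, which gives some intuition for how a modest number of nanowires can yield a very large number of intersections.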

Why public/private cooperation is the best bet to protect people on the internet

Neither the FTC nor the SEC was empowered by Congress with responsibility for

cyberspace, and both have relied on pre-existing authorities related to

corporate representations to bring actions against individuals who did not

have corporate duties managing legal or external communications. They are

using the tools at their disposal to change expectations, even if it means

bringing a bazooka to a knife fight. These cases make CISOs worried that in

addition to being technical experts they also need to personally become

experts on data breach disclosure laws and experts on SEC reporting

requirements rather than trusting their peers in the legal and communications

departments of their organizations. What we need is a real partnership between

the public and the private sector, clear rules and expectations for IT

professionals and law enforcement, and an executive branch that will attempt

regulation through rulemaking rather than through ugly and costly enforcement

actions that target IT professionals for doing their jobs and further deepen

the adversarial public-private divide.

Quote for the day:

"Leadership is working with goals and

vision; management is working with objectives." --

Russel Honore
