OpenAI jettisons CEO Sam Altman for dishonesty

Board Chairman Greg Brockman will also leave his role as a result of the shakeup. OpenAI initially stated that Brockman would remain in his role as president, but shortly after the announcement of the reshuffling, he announced that he would quit the company entirely. The company’s CTO, Mira Murati, will take over as interim CEO, according to OpenAI, and the board will begin to conduct a formal search for a permanent replacement. Brockman will report to Murati, the company noted.

Ritu Jyoti, group vice president for worldwide AI and automation research at IDC, called the shakeup “astonishing,” noting that Altman had been present at many public events, even in the days before his ouster, and that there was no sense of trouble in the offing for the CEO. “I’m sure the board has taken the right decision,” she said. “But it’s quite unexpected.”

Brockman and Murati were both promoted to their former roles in May 2023. Brockman has been closely involved in both broad strategy for the company and personal coding contributions, and has focused on training “flagship AI systems” since assuming the role of president.

Microsoft and Meta quizzed on AI copyright

The Lords were keen to hear what the two experts thought about open and closed

data models and how to balance risk with innovation. Sherman said: “Our company

has been very focused on [this]. We’ve been very supportive of open source along

with lots of other companies, researchers, academics and nonprofits as a viable

and an important component of the AI ecosystem.” Along with the work a wider

community can provide in tuning data models and identifying security risks,

Sherman pointed out that open models lower the barrier to entry. This means the

development of LLMs is not restricted to the largest businesses – small and

mid-sized firms are also able to innovate. In spite of Microsoft’s commitment to

open source, Larter appeared more cautious. He told the committee that there

needs to be a conversation about some of the trade-offs between openness and

safety and security, especially around what he described as “highly capable

frontier models”. “I think we need to take a risk-based approach there,” he

said.

The deepfake dilemma: Detection and decree

Deepfake technology poses significant challenges in legal proceedings,

particularly in criminal cases, with potential repercussions on individuals'

personal and professional lives. The absence of mechanisms to authenticate

evidence in most legal systems puts the onus on the defendant or opposing party

to contest manipulation, potentially privatizing a pervasive problem. To address

this, a proposed rule could mandate the authentication of evidence, possibly

through entities like the Directorate of Forensic Science Services, before court

admission, albeit with associated economic costs. In India, existing laws offer

some recourse against deepfake issues, but the lack of a clear legal definition

hampers targeted prosecution. The evolving nature of deepfake technology

compounds the challenges for automated detection systems, leading to increased

difficulty, particularly in the face of contextual complexities. This poses a

significant threat to legal proceedings, potentially prolonging trials and

heightening the risk of false assumptions.

The data skills gap keeps getting bigger. Here's how one company is filling it

The key message, says Moore, is that Bentley's apprenticeship program is

adding data talent to the IT skills pool and it's helping to foster a

community of like-minded professionals. "We've got a group of people who've

gone through the program. It's not just one school leaver going to a corner of

an office and not being sure how to fit in," he says. "I've now got people on

first, second, third, and fourth years of degrees. That depth of talent means

there's always someone to ask for help and support. There's a great

community." Moore says Bentley recruits the students and then places them with

a university. "I'm keen first and foremost that they're coming to Bentley and

they're adding value. They're connecting with the brand, sustainability, and

inclusivity that we offer -- and a lot of people are resonating with that

idea." When it comes to recruiting talent for the program, Moore says he's put

a lot of effort into going to schools. He typically looks for a solid grade in

math, as both the degree and the work at Bentley are "stats-heavy".

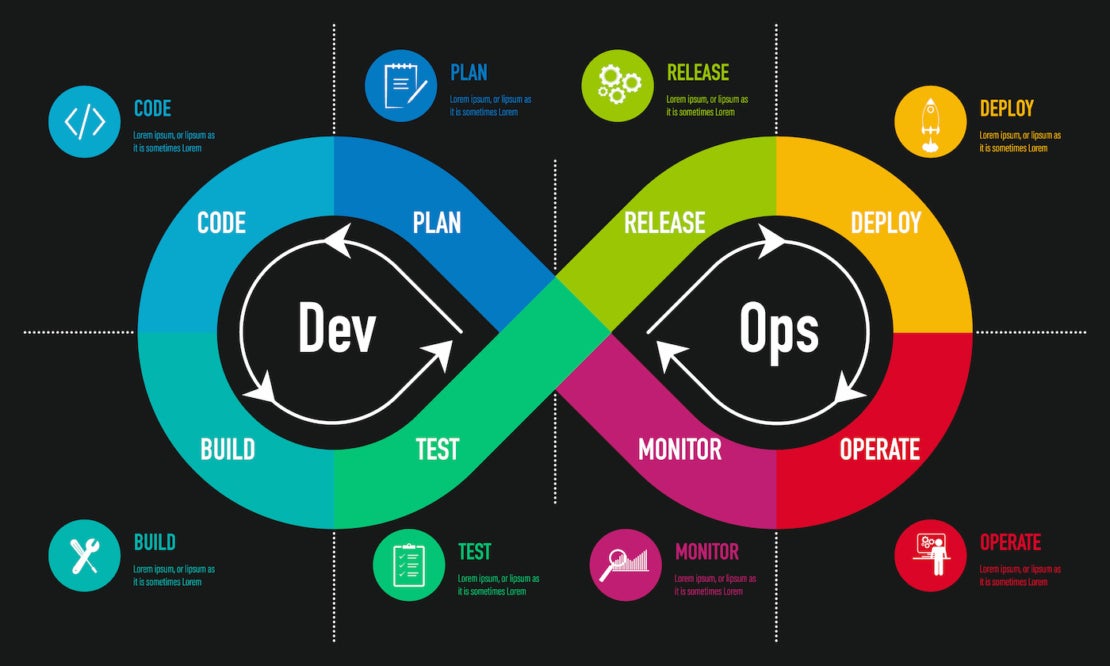

What are the Benefits of DevOps?

CI/CD is a cornerstone of DevOps. Continuous integration involves frequently

integrating code changes into a shared repository, where automated tests are

run to catch integration issues early. Continuous Deployment takes this a step

further by automating the deployment of code changes to production

environments. This automated pipeline reduces the risk of human error and

ensures that the latest code is always available to users. ... DevOps

encourages a focus on quality and reliability throughout the development

process. Automated testing, part of the CI/CD pipeline, ensures that new code

changes do not introduce regressions or bugs. This leads to more stable and

reliable software, as issues are caught and resolved before they reach

production. ... DevOps heavily relies on automation tools to streamline

processes. Infrastructure as Code (IaC) treats infrastructure setup as code,

allowing developers to define and manage infrastructure using

version-controlled files. This approach eliminates manual setup, reduces

inconsistencies and accelerates environment provisioning.
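As a rough illustration of the gating idea described above, here is a minimal Python sketch of a pipeline in which the deploy stage runs only if the automated tests pass. The names `Stage`, `run_pipeline`, `add`, `unit_tests_pass`, and `deploy` are hypothetical and invented for this example; real CI/CD systems express the same idea in their own configuration formats.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Stage:
    """One step of a hypothetical pipeline."""
    name: str
    run: Callable[[], bool]  # returns True on success, False on failure


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Run stages in order and stop at the first failure.

    Returns the names of the stages that completed successfully.
    """
    completed = []
    for stage in stages:
        if not stage.run():
            break  # a failed stage blocks everything after it
        completed.append(stage.name)
    return completed


def add(a: int, b: int) -> int:
    """Stand-in for the application code under test."""
    return a + b


def unit_tests_pass() -> bool:
    """Stand-in for an automated test suite."""
    return add(2, 3) == 5 and add(-1, 1) == 0


deployed = []  # records each (simulated) release


def deploy() -> bool:
    """Stand-in for a deployment step."""
    deployed.append("release")
    return True


pipeline = [
    Stage("build", lambda: True),
    Stage("test", unit_tests_pass),
    Stage("deploy", deploy),
]
result = run_pipeline(pipeline)
```

Because the stages run strictly in order, a failing test stage means the deploy function is never called, which is the property that keeps regressions from reaching production.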

A Roadmap to True Observability

Ultimately, true observability empowers teams to deliver more reliable,

responsive, and efficient applications that elevate the overall user

experience. In order to achieve "true" observability, it's important to

understand the Observability Maturity Model. This model outlines the stages

through which organizations evolve in their observability practices, acting as

a roadmap. Here, we'll describe each maturity stage, highlight its

advantages and disadvantages, and offer some practical tips for moving from

one stage to the next. ... After understanding the Observability Maturity

Model, it's essential to explore the multifaceted approach companies must

embrace for a successful observability transition. Beyond the need to adopt

advanced tools and practices, the path to "true" observability can demand

significant cultural and organizational shifts. Companies must develop

strategies that align with the observability maturity model, nurture a

collaborative culture, and make cross-team communication a priority. The

rewards are quite substantial — faster issue resolution and improved user

experience, making "true" observability a transformative journey for IT

businesses.

Make Your Dev Life Easier by Generating Tests with CodiumAI

Many developers still claim they don’t like writing tests, so the idea of

generating them using AI was always going to appeal. Of course, tests are not

necessarily a secondary issue — with Test-driven development (TDD) you write

the tests first. While it is a good thing every team should try, common

practice sees the creation of a minimum viable product (MVP) first, and when

there is some evidence this has a future, to then continue the project with

full unit testing. I had not seen CodiumAI before, but as it has generated an

easy-to-understand elevator pitch — “Generating meaningful tests for busy

devs” — right on its front page, I’m immediately ready to give it a go.

Clearly, they are trying to jump into the tool space opened up by OpenAI’s GPT

models — indeed, it is “powered by GPT-3.5&4 & TestGPT-1”. CodiumAI’s

implementation only works with Visual Studio Code and JetBrains for now; I’ll

use the former. I find VSC a bit awkward, but at least it should be happy with

C#, my preferred tipple. But I know JetBrains tools are extremely popular

too.

Meta disbanded its Responsible AI team

According to the report, most RAI members will move to the company’s

generative AI product team, while others will work on Meta’s AI

infrastructure. The company regularly says it wants to develop AI responsibly

and even has a page devoted to the promise, where the company lists its

“pillars of responsible AI,” including accountability, transparency, safety,

privacy, and more. ... He added that although the company is splitting the

team up, those members will “continue to support relevant cross-Meta efforts

on responsible AI development and use.” ... RAI was created to identify

problems with its AI training approaches, including whether the company’s

models are trained with adequately diverse information, with an eye toward

preventing things like moderation issues on its platforms. Automated systems

on Meta’s social platforms have led to problems like a Facebook translation

issue that caused a false arrest, WhatsApp AI sticker generation that results

in biased images when given certain prompts, and Instagram’s algorithms

helping people find child sexual abuse materials.

Ransomware gang files SEC complaint against company that refused to negotiate

It will be interesting to see how the SEC reacts to the possibility of

ransomware gangs taking advantage of its rules and complaint facility to

blackmail victims and whether the agency will be more lenient with how it

enforces the new disclosure requirements in the beginning. “This puts added

pressure on publicly traded MeridianLink after claiming to have breached its

network and stolen unencrypted data,” Ferhat Dikbiyik said. “This move has

blindsided the industry and raised questions about the effectiveness of the

new SEC rules in the fight against cybercrime. It also begs the question: Does

ALPHV have affiliates within the US?” ... “While shocking to many, the reports

that BlackCat tattled on one of their victims to the SEC isn't surprising in

the ever-evolving ransomware economy,” Jim Doggett, CISO of cybersecurity firm

Semperis, tells CSO. “Some will argue that BlackCat's move is opportunistic at

best, and they are motivated only by greed to force quicker payments by

victims. Others will say that this aggressive move could leave the group in

the crosshairs of US law enforcement agencies. At the end of the day, the

ransomware gangs are criminal organizations, and their only motive is

profits.”

The Next Leap in Battery Tech: Lithium-Ion Batteries Are No Longer the Gold Standard

Unfortunately, the natural SEI is brittle and fragile, resulting in poor

lifespan and performance. Here, the researchers have looked into a substitute

for natural SEI, which could effectively mitigate the side reactions within

the battery system. The answer is ASEI: artificial solid electrolyte

interphase. ASEI corrects some of the issues plaguing the bare lithium metal

anode to make a safer, more reliable, and even more powerful energy source

that can be used with more confidence in electric vehicles and other similar

applications. ... The future of the ASEI layers is bright but calls for some

improvements. Researchers mainly would like to see improvement in the adhesion

of the ASEI layers on the surface of the metal, which overall improves the

function and longevity of the battery. Additional areas that require some

attention are stability in the structure and chemistry within the layers, as

well as minimizing the thickness of the layers to improve the energy density

of the metal electrodes. Once these issues are worked out, the road ahead for

an improved lithium metal battery should be well-paved.

Quote for the day:

"When you expect the best from people, you will often see more in them than they see in themselves." -- Mark Miller
