What CIOs need to become better enablers of sustainability

Key to this is a greater understanding of business operations and their

production of CO2, or use of unsustainable practices and resources. As with most

business challenges, data is instrumental. “Like anything, the hard work is the

initial assessment,” says CGI director of business consulting and CIO advisor

Sean Sadler. “From a technology perspective, you need to look at the

infrastructure, where it’s applied, how much energy it draws, and then how it

fits into the overall sustainability scheme.” CIOs who create data cultures

across organizations not only enable sustainable business processes but also

reduce reliance on consultancies, according to IDC. “Organizations with the most

mature environmental, social, and governance (ESG) strategies are increasingly

turning to software platforms to meet their data management and reporting

needs,” says Amy Cravens, IDC research manager, ESG Reporting and Management

Technologies.

How to implement observability in your IT architecture

Although it has grown out of the APM market, observability is more than just APM

with a new name and marketing approach. The most crucial factor differentiating

observability from APM is that observability includes three distinct monitoring

approaches—tracing, metrics, and logs—while APM provides tracing alone. By

collecting and aggregating these various types of data from multiple sources,

observability offers a much broader view of the overall system and application

health and performance, with the ability to gain much deeper insights into

potential performance issues. Another important distinction is that open source

tools are the foundation of observability, but not APM. While some APM vendors

have recently open-sourced the client side of their stack, the server side of

all the popular commercial APM solutions is still proprietary. These

distinctions do not mean that observability and APM are unconnected. Application

performance management can still be an important component of an observability

implementation.
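
To make the three signal types concrete, here is a minimal sketch in Python that uses only the standard library. It is an illustration, not how any particular observability or APM product works: the service name `checkout`, the `handle_request` function, the `span` helper, and the in-memory `metrics` and `spans` stores are all hypothetical stand-ins for a real collector. The point it shows is that one request emits a log line, a metric update, and trace spans that share a trace ID, so the three can be correlated later.

```python
import logging
import time
import uuid
from collections import defaultdict
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("checkout")  # hypothetical service name

metrics = defaultdict(float)  # metric name -> running total (stand-in for a metrics backend)
spans = []                    # finished trace spans (stand-in for a trace backend)


@contextmanager
def span(name, trace_id):
    """Record how long a named step took as part of one trace."""
    start = time.perf_counter()
    try:
        yield
    finally:
        spans.append({
            "trace_id": trace_id,
            "name": name,
            "seconds": time.perf_counter() - start,
        })


def handle_request(order_total):
    """Hypothetical request handler that emits all three signal types."""
    trace_id = uuid.uuid4().hex  # shared ID that ties the signals together
    with span("handle_request", trace_id):        # trace: outer span
        metrics["requests_total"] += 1            # metric: request counter
        with span("charge_card", trace_id):       # trace: nested span
            if order_total <= 0:
                metrics["errors_total"] += 1      # metric: error counter
                log.error("trace=%s invalid order total %s", trace_id, order_total)
                return False
        log.info("trace=%s order accepted", trace_id)  # log: event detail
    return True
```

Calling `handle_request(10)` and then `handle_request(0)` leaves two request counts, one error count, and four spans across two trace IDs. In a real deployment these would be exported to a backend rather than kept in process memory.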

How Conversational Programming Will Democratize Computing

The scope of a conversation must mirror a human “mental stack”, not that of a

computer. When I use a conventional Windows interface on my laptop, I am

confronted with the computer’s file system which is presented as folders and

files. That effort is reversed in conversational programming — the LLM system

has to work with my limited human cognition facilities. This means creating

things in response to requests, and reporting outcomes at the same level that

I asked for them. Returning arcane error codes in response to requests will

immediately break the conversation. We have already seen ChatGPT reflect on

its errors, which means a conversation should retain its value for the user.

... The industrialization of LLMs is the only thing we can be reasonably sure

about, because the investment has already been made. However, the rapid

advancement of GPT systems will likely run aground in the same areas that

other large-scale projects have in the past. The lack of collaboration between

large competitors has eroded countless good ideas that depended on

interoperability.

Dark Side of DevOps - the Price of Shifting Left and Ways to Make it Affordable

On the one hand, not having a gatekeeper feels great. Developers don’t have to

wait for somebody’s approval - they can iterate faster and write better code

because their feedback loop is shorter, and it is easier to catch and fix

bugs. On the other hand, the added cognitive load is measurable - all the

tools and techniques that developers have to learn now require time and mental

effort. Some developers don’t want that - they just want to concentrate on

writing their own code, on solving business problems. ... However, as

companies grow, so does the complexity of their IT infrastructure. Maintaining

dozens of interconnected services is not a trivial task anymore. Even locating

their respective owners is not so easy. At this point, companies face a choice

- either reintroduce the gatekeeping practices that negatively affect

productivity, or provide a paved path - a set of predefined solutions that

codifies the best practices, and takes away mental toil, allowing developers

to concentrate on solving business problems.

Why generative AI will turbocharge low-code and no-code development

Generative AI's integration into low-code and no-code platforms will lower the

barriers to adoption of these development environments in enterprises, agreed

John Bratincevic, principal analyst at Forrester. “The integration of

generative AI will see adoption of low-code by business users, since the

learning curve for getting started on developing applications will be even

lower,” Bratincevic said. The marriage of generative AI with low-code and

no-code platforms will aid professional developers as well, analysts said. ...

“These generative AI coding capabilities will be most helpful for developers

working on larger projects that are looking for shortcuts to support

commoditized or common sense requests,” said Hyoun Park, principal analyst at

Amalgam Insights. “Rather than searching for the right library or getting

stuck on trying to remember a specific command or term, GPT and other similar

generative AI tools will be able to provide a sample of code that developers

can then use, edit, and augment,” Park said.

Start with Sound Policies, Then Customize with Required Exceptions

Number one is our culture of security, not just within the cybersecurity

organization, but broader than the cybersecurity organization looking at the

entire Providence org – instilling security practices into our business

practices, or business processes, instilling security mindset into our

caregivers, because our caregivers truly are on the front lines of the

cybersecurity battlefield. They’re the ones that are receiving phishing

emails, they’re the ones that are making decisions on what they click on, what

they don’t click on, interactions with our clinical device vendors, or

clinical application vendors. They’re making risk choices every day. So

informing them about security, training them on security, and instilling

security culture – broader than just the security organization – has been a

real focus of ours this year. Another focus of ours has been on implementing

or continuing the journey, I should say, toward a zero trust approach here at

Providence. And when I say zero trust, a lot of people use the term, “never

trust, always verify.”

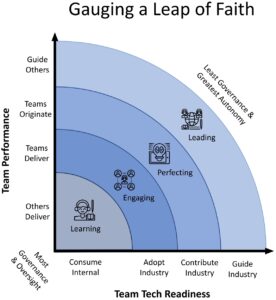

Leap of Faith: Building Trust and Commitment with Engineering

Leaping before you’re ready will result in disappointment, if not outright disaster. It is important to understand what knowledge and muscle are required along the various stages that lead to full Engineering Trust & Autonomy. Each organization must determine these trust criteria for itself; however, it is imperative to recognize that starting from the future end-state goal and working backwards promotes the greatest benefit (e.g., innovative inspirational differentiation). To be most effective, seek out Leading teams already doing this in your organization. They do exist, but they are most likely considered one-offs, rogue, and exceptions to the internal norm. Good. That’s what you’re looking for! ... Once trust criteria are shared and definitive trust boundaries are in place, the hardest piece of this puzzle must be executed: Executive Leadership and Individual Commitment. Putting your strategy into play takes time, and during that time doubts will creep in. This is normal; however, there are a few tricks to leverage that ensure you stay the course

Used Routers Often Come Loaded With Corporate Secrets

The big danger is that the wealth of information on the devices would be

valuable to cybercriminals and even state-backed hackers. Corporate

application logins, network credentials, and encryption keys have high value

on dark web markets and criminal forums. Attackers can also sell information

about individuals for use in identity theft and other scamming. Details

about how a corporate network operates and the digital structure of an

organization are also extremely valuable, whether you're doing

reconnaissance to launch a ransomware attack or plotting an espionage

campaign. For example, routers may reveal that a particular organization is

running outdated versions of applications or operating systems that contain

exploitable vulnerabilities, essentially giving hackers a road map of

possible attack strategies. ... Since secondhand equipment is discounted, it

would potentially be feasible for cybercriminals to invest in purchasing

used devices to mine them for information and network access and then use

the information themselves or resell it.

ChatGPT may hinder the cybersecurity industry

ChatGPT’s AI technology is readily available to most of the world.

Therefore, as with any other battle, it’s simply a race to see which side

will make better use of the technology. Cybersecurity companies will need to

continuously combat nefarious users who will figure out ways to use ChatGPT

to cause harm in ways that cybersecurity businesses haven’t yet fathomed.

And yet this fact hasn’t deterred investors, and the future of ChatGPT looks

very bright. With Microsoft investing $10 billion in OpenAI, it’s clear

that ChatGPT’s knowledge and abilities will continue to expand. For future

versions of this technology, software developers need to pay attention to

its lack of safety measures, and the devil will be in the details. ChatGPT

probably won’t be able to thwart this problem to a large degree. It can have

mechanisms in place to evaluate users’ habits and home in on individuals who

use obvious prompts like, “write me a phishing email as if I’m someone’s

boss,” or try to validate individuals’ identities. OpenAI could even work

with researchers to train its datasets to evaluate when their text has been

used in attacks elsewhere.

A New Era of Natural Language Search Emerges for the Enterprise

Due to the statistical nature of their underlying technology, chatbots can

hallucinate incorrect information, as they do not actually understand the

language but are simply predicting the next best word. Often, the training

data is so broad that explaining how a chatbot arrived at the answer it gave

is nearly impossible. This “black box” approach to AI with its lack of

explainability simply will not fly for many enterprise use cases. Welsh

gives the example of a pharmaceutical company that is delivering answers to

a healthcare provider or a patient who visits its drug website. The company

is required to know and explain each search result that could be given to

those asking questions. So, despite the recent spike in demand for systems

like ChatGPT, adapting them for these stringent enterprise requirements is

not an easy task, and this demand is often unmet, according to Welsh. ...

Welsh predicts the companies that will win during this new era of the

enterprise search space are those that had the foresight to have a product

on the market now, and though the competition is currently heating up, some

of these newer companies are already behind the curve.

Quote for the day:

"Leaders must be good listeners.

It's rule number one, and it's the most powerful thing they can do to

build trusted relationships." -- Lee Ellis
