AI might not steal your job, but it could change it

People whose jobs deal with language could, unsurprisingly, be particularly

affected by large language models like ChatGPT and GPT-4. Let’s take one

example: lawyers. I’ve spent time over the past two weeks looking at the legal

industry and how it’s likely to be affected by new AI models, and what I found

is as much cause for optimism as for concern. The antiquated, slow-moving legal

industry has been a candidate for technological disruption for some time. In an

industry with a labor shortage and a need to deal with reams of complex

documents, a technology that can quickly understand and summarize texts could be

immensely useful. So how should we think about the impact these AI models might

have on the legal industry? ... AI in law isn’t a new trend, though. It has

already been used to review contracts and predict legal outcomes, and

researchers have recently explored how AI might help get laws passed. Recently,

consumer rights company DoNotPay considered arguing a case in court using an

argument written by AI, known as the “robot lawyer,” delivered through an

earpiece.

Microservice Architecture Key Concepts

Generally, you can use a message broker for asynchronous communication between

services, though it’s important to choose one that doesn’t add complexity to your

system, or latency if it fails to scale as message volume grows. Version

your APIs: Keep an ongoing record of the attributes and changes you make to each

of your services. “Whether you’re using REST API, gRPC, messaging…” wrote Sylvia

Fronczak for OpsLevel, “the schema will change, and you need to be ready for

that change.” A typical pattern is to embed the API (application programming

interface) version into your data/schema and gracefully deprecate older data

models. For example, for your service product information, the requestor can ask

for a specific version, and that version will be indicated in the data returned

as well. Less chit-chat, more performance: Synchronous communications create a

lot of back and forth between services. If synchronous communication is really

needed, this will work okay for a handful of microservices, but when dozens or

even hundreds of microservices are in play, synchronous communication can bring

scaling to a grinding halt.
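
To make the versioning pattern above concrete, here is a minimal sketch in Python. It assumes a hypothetical product-information service; the `Product` fields, the `schema_version` key, and the `get_product_info` function are illustrative names that do not come from the article. The requestor asks for a version, the payload echoes that version back, and the older model is flagged as deprecated rather than removed outright.

```python
from dataclasses import dataclass


@dataclass
class Product:
    # Hypothetical product record; field names are assumptions for illustration.
    sku: str
    name: str
    price_cents: int


def to_v1(p: Product) -> dict:
    # Older data model: price as a plain number of cents.
    return {"schema_version": 1, "sku": p.sku, "name": p.name,
            "price_cents": p.price_cents}


def to_v2(p: Product) -> dict:
    # Newer data model: price as a structured object with a currency.
    return {"schema_version": 2, "sku": p.sku, "name": p.name,
            "price": {"amount_cents": p.price_cents, "currency": "USD"}}


SERIALIZERS = {1: to_v1, 2: to_v2}
DEPRECATED = {1}   # still served, but callers are told to migrate
LATEST = 2


def get_product_info(product: Product, version: int = LATEST) -> dict:
    """Return the product in the requested schema version."""
    if version not in SERIALIZERS:
        raise ValueError(f"unsupported schema version: {version}")
    payload = SERIALIZERS[version](product)
    if version in DEPRECATED:
        payload["deprecation_notice"] = (
            f"schema v{version} is deprecated; migrate to v{LATEST}"
        )
    return payload
```

A caller that still depends on the old shape can request `get_product_info(product, version=1)` and keep working, while the deprecation notice in the response gives it a signal to move to the newer schema.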

'Silent Success': How to master the art of quiet hiring for your business

“Ironically, quiet hiring is neither ‘quiet’ nor is any ‘hiring’ involved in it

in the traditional sense,” says Bensely Zachariah, Global Head of Human

Resources at Fulcrum Digital, a business platform and digital engineering

services company. Quiet hiring entails companies upskilling their existing

employees and moving them to new roles or new sets of responsibilities, on a

temporary or, in some cases, permanent basis to meet the ever-evolving demands of

the business environment. Zachariah says: “Quiet hiring is essentially the

opposite of ‘quiet quitting’, a buzzword during 2022, which, in simple words,

means doing the bare minimum for what it takes to keep your job. The concept

behind quiet hiring is rewarding high-performing individuals with more

challenging roles, pay rises, bonuses, or promotions. This is not a new concept

per se, in fact it is an age-old practice which was referred to as ‘facilitated

talent mobility’ or ‘career advancement’ where organisations have spent

considerable time and resources to facilitate upskilling/cross-skilling

employees to give them new roles/avenues for work.”

AI and privacy concerns go hand in hand

Whether personal information is publicly available or not, its collection and

use is still subject to the Privacy Act. While it’s on businesses to operate

within the law, it pays for the public to upskill themselves and be savvy about

what information they’re posting, and where. We know that criminals are becoming

an even greater threat online because cybersecurity breaches are increasing and

result in costly hacks of personal information. AI can be used to supercharge

these criminals, leading to more privacy breaches, and making it even harder for

cybersecurity systems to protect your information or for post-breach measures

such as injunctions to protect stolen data that criminals may make available

online. Powerful AI can aggregate data to a much greater degree, much more

swiftly than humans can, meaning AI can potentially identify people that would

otherwise not be identifiable through more time-intensive methods. Even

seemingly benign online interactions could reveal more about you than you ever

intended.

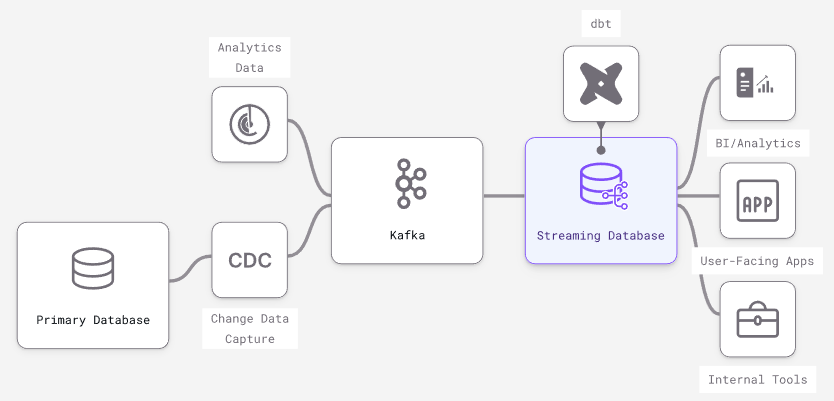

The Benefits of a Streaming Database

Experienced engineers understand that no software stack or tooling is perfect

and that each comes with a series of trade-offs for each specific use case. With that in

mind, let’s examine the particular trade-offs inherent to streaming databases to

understand better the use cases they align best with. Incrementally updated

materialized views – Streaming databases build on different dataflow paradigms

that shift limitations elsewhere and efficiently handle incremental view

maintenance on a broader SQL vocabulary. Other databases like Oracle, SQL Server

and Redshift have varying levels of support for incrementally updating a

materialized view. They could expand support, but will hit walls on fundamental

issues of consistency and throughput. True streaming inputs – Because they are

built on stream processors, streaming databases are optimized to individually

process continuous streams of input data (e.g., messages from Kafka). In

traditional databases (especially OLAP data warehouses), larger, less frequent

batch updates are more performant, so scaling streaming inputs there involves

batching them into larger transactions, slowing down data and losing granularity.
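
As a rough illustration of what "incrementally updated" means, the Python sketch below keeps a grouped sum up to date one event at a time instead of recomputing it from the full table. It is a toy, not how any particular streaming database is implemented; the `RunningTotals` class and its `apply` and `snapshot` methods are hypothetical names, and the `diff` convention (+1 for an insert, -1 for a retraction) is an assumption made for this example.

```python
from collections import defaultdict


class RunningTotals:
    """Toy stand-in for a view like: SELECT key, SUM(amount) ... GROUP BY key."""

    def __init__(self):
        self._sums = defaultdict(int)
        self._counts = defaultdict(int)

    def apply(self, key, amount, diff=1):
        # diff=+1 adds a row to the view's input, diff=-1 retracts one.
        # Only the affected group is touched; nothing is recomputed from scratch.
        self._sums[key] += diff * amount
        self._counts[key] += diff
        if self._counts[key] == 0:
            # The group has no rows left, so it disappears from the view.
            del self._sums[key]
            del self._counts[key]

    def snapshot(self):
        # Current contents of the view.
        return dict(self._sums)


view = RunningTotals()
view.apply("a", 10)           # each event updates the view immediately
view.apply("b", 5)
view.apply("a", 7)
view.apply("b", 5, diff=-1)   # retract the earlier "b" row
```

Each call does a constant amount of work per event, which is the property that lets results stay fresh without waiting for a large batch.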

6 steps to measure the business value of IT

A challenge for determining the value contribution is the selection of suitable

key figures. According to the study, IT departments today primarily use

technical and IT-related metrics. That is legitimate, but in this way, there’s

no direct connection to the business. Plus, there’s often a lack of affinity for

meaningful KPIs, both in IT and in the specialist departments, says Jürgen

Stoffel, CIO at global reinsurer Hannover Re. Therefore, in practice, only a few

metrics suitable for both sides are found, and as a result the IT value

proposition often goes unseen. “A consistent portfolio of metrics coordinated with

the business would be helpful,” says Thomas Kleine, CIO of Pfizer Germany, and

Held from the University of Regensburg adds: “Companies have to get away from

purely technical key figures and develop both quantitative and qualitative

metrics with a business connection.” In order to make progress along this path,

the consultants developed a process model with several development and

evaluation phases, using current scientific findings and speaking to CIOs.

Strategic risk analysis is key to ensure customer trust in product, customer-facing app security

Assessing risk requires identifying baseline security criteria around key

elements such as customer contracts and regulatory requirements, Neil Lappage,

partner at LeadingEdgeCyber and ISACA member, tells CSO. “From the start, you've

got things you’re committed to such as requirements in customer contracts and

regulatory requirements and you have to work within those parameters. And you

need to understand who your interested parties are, the stakes they've got in

the game, and the security objectives.” The process of defining the risk profile

of an organization also requires strong collaboration among IT, cybersecurity,

and risk professionals. “How the organization knows the risk profile of the

organization involves the cybersecurity team working with the IT and reporting

to the business so these three things — cyber, IT and risk — work in unison,” he

says. “If cyber sits isolated from the rest of the business, if it doesn't

understand the business, the risk is not optimized.”

FBI Seizes Genesis Cybercriminal Marketplace in 'Operation Cookie Monster'

The seizure of Genesis was a collaborative effort between international law

enforcement agencies and the private sector, according to the notice, which

included the logos of European law enforcement agency Europol; Guardia Civil in

Spain; Polisen, the police force in Sweden; and the Canadian government. The FBI

is also seeking to speak to those who've been active on the Genesis Market or who

are in touch with administrators of the forum, offering an email address for

people to contact the agency. ... Indeed, Genesis demonstrated the "growing

professionalization and specialization of the cybercrime sphere," with the site

earning money by gaining and maintaining access to victim systems until

administrators could sell that access to other criminals, according to Sophos.

The various tasks that the Genesis Market bots could undertake included

large-scale infection of consumer devices to steal digital fingerprints,

cookies, saved logins, and autofill-form data stored on them. The marketplace

would package up that data and list it for sale, with prices ranging from less

than $1 to $370, depending on the amount of embedded data that the packages

contained.

Beyond Hype: How Quantum Computing Will Change Enterprise IT

“If you have a problem that can be put into an algorithm that leverages the

parallelism of quantum computers, that’s where you can get a very dramatic

potential speed up,” Lucero says. “If you have a problem where every

additional variable you add to the problem doubles the computational

complexity -- that is probably a good candidate to be adapted into a quantum

computational problem.” The so-called “traveling salesperson problem,” for

example, would be a fitting problem for a quantum computer. The problem asks

the following: “Given a list of cities and the distances between each pair of

cities, what is the shortest possible route that visits each city exactly once

and returns to the origin city?” This and other combinatorial optimization

problems are important to theoretical computer science because of the complexity

of variations involved. Used as a benchmark, the problem can be applied to

planning, logistics, microchip manufacturing and even DNA sequencing. In theory,

a quantum computer could make quick work of this complicated problem and

provide greater efficiency for programming.
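
To show why this problem gets hard so quickly, here is a small classical brute-force sketch in Python. It is not a quantum algorithm and is not taken from the article; the function name `shortest_tour` and the sample distance matrix are assumptions for illustration. It simply tries every ordering of the cities, so the number of routes checked grows factorially as cities are added.

```python
from itertools import permutations


def shortest_tour(dist):
    """Return (length, route) of the shortest round trip starting at city 0.

    dist[a][b] is the distance from city a to city b.
    """
    n = len(dist)
    best_len, best_route = None, None
    # Fix city 0 as the origin and try every ordering of the remaining cities.
    for perm in permutations(range(1, n)):
        route = (0, *perm, 0)
        length = sum(dist[a][b] for a, b in zip(route, route[1:]))
        if best_len is None or length < best_len:
            best_len, best_route = length, route
    return best_len, best_route


# Hypothetical distances between four cities (symmetric).
distances = [
    [0, 10, 15, 20],
    [10, 0, 35, 25],
    [15, 35, 0, 30],
    [20, 25, 30, 0],
]
length, route = shortest_tour(distances)
```

With four cities there are only six orderings to check, but twenty cities would already require examining more than 10^17 of them, which is why exhaustive search stops being practical almost immediately.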

How to build next-gen applications with serverless infrastructure

When explaining the benefits of serverless infrastructure and containers, I'm

often asked why you need containers at all. Don't instances already provide

isolation from underlying hardware? Yes, but containers provide other important

benefits. Containers allow users to fully utilize virtual machine (VM) resources

by hosting multiple applications (on distinct ports) on the same instance. As a

result, engineering teams get portable runtime application environments with

platform-independent capabilities. This allows engineers to build an application

once and then deploy it anywhere, regardless of the underlying operating system.

... Implementing event-driven architecture (EDA) can work for serverless

infrastructure through either a publisher/subscriber (pub/sub) model or an

event-stream model. With the pub/sub model, notifications go out to all

subscribers when events are published. Each subscriber can respond according to

whatever data processing requirements are in place. On the event-stream model

side, engineers set up consumers to read from a continuous flow of events from a

producer.
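
The difference between the two models can be sketched in a few lines of in-memory Python. This is only an illustration under stated assumptions: the `PubSub` and `EventStream` classes and their methods are hypothetical and do not correspond to any particular cloud service or broker API.

```python
from collections import defaultdict


class PubSub:
    """Pub/sub: every subscriber to a topic is notified when an event is published."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Push the event to each subscriber; each one processes it its own way.
        for handler in self._subscribers[topic]:
            handler(event)


class EventStream:
    """Event stream: the producer appends to a log, consumers read from an offset."""

    def __init__(self):
        self._log = []

    def append(self, event):
        self._log.append(event)

    def read(self, offset):
        # Return the events from `offset` onward plus the next offset to resume from.
        return self._log[offset:], len(self._log)


# Pub/sub usage: two subscribers react to the same published event.
bus = PubSub()
audit_log, emails = [], []
bus.subscribe("order.created", audit_log.append)
bus.subscribe("order.created", lambda e: emails.append(f"receipt for {e}"))
bus.publish("order.created", "order-1")

# Event-stream usage: a consumer tracks its own position in the flow.
stream = EventStream()
stream.append("order-1")
stream.append("order-2")
batch, next_offset = stream.read(0)
```

In the pub/sub sketch the publisher drives delivery, while in the stream sketch the consumer pulls at its own pace and can resume later from `next_offset`.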

Quote for the day:

"When I finally got a management position, I found out how hard it is to lead and manage people." -- Guy Kawasaki
