When will the fascination with cloud computing fade?

This is a fair question given the IT industry's earlier technology trends: client/server, enterprise application integration, business-to-business, distributed objects, service-oriented architecture, and then cloud computing itself. I gladly rode most of these waves. All these concepts still exist, perhaps at a larger scale than when public interest was at its peak. However, they are discussed less these days because other technology trends grab more headlines, such as cloud computing and artificial intelligence. So, will the future hold more interest in cloud computing, less, or about the same? On one hand, cloud computing is becoming more standardized and, dare I say, commoditized, with most cloud providers offering services and capabilities similar to their competitors'. This means businesses no longer need to spend as much time and as many resources evaluating different providers. Back in the day, I spent a good deal of time talking about the advantages of one provider's cloud storage over another's.

Gen Z mental health: The impact of tech and social media

Younger generations tend to engage with social media regularly, in both active and passive ways. Almost half of both millennial and Gen Z respondents check social media multiple times a day. Over one-third of Gen Z respondents say they spend more than two hours each day on social media sites; however, millennials are the most active social media users, with 32 percent stating they post either daily or multiple times a day. Whether Gen Z respondents' less active use is related to greater caution and self-awareness among youth, reluctance to commit, or more comfort with passive social media use remains up for debate. ... Across generations, respondents reported more positive than negative impacts; however, the reported negative impact is higher for Gen Z. Respondents from high-income countries (as defined by the World Bank) were twice as likely as respondents from lower-middle-income countries to report a negative impact of social media on their lives.

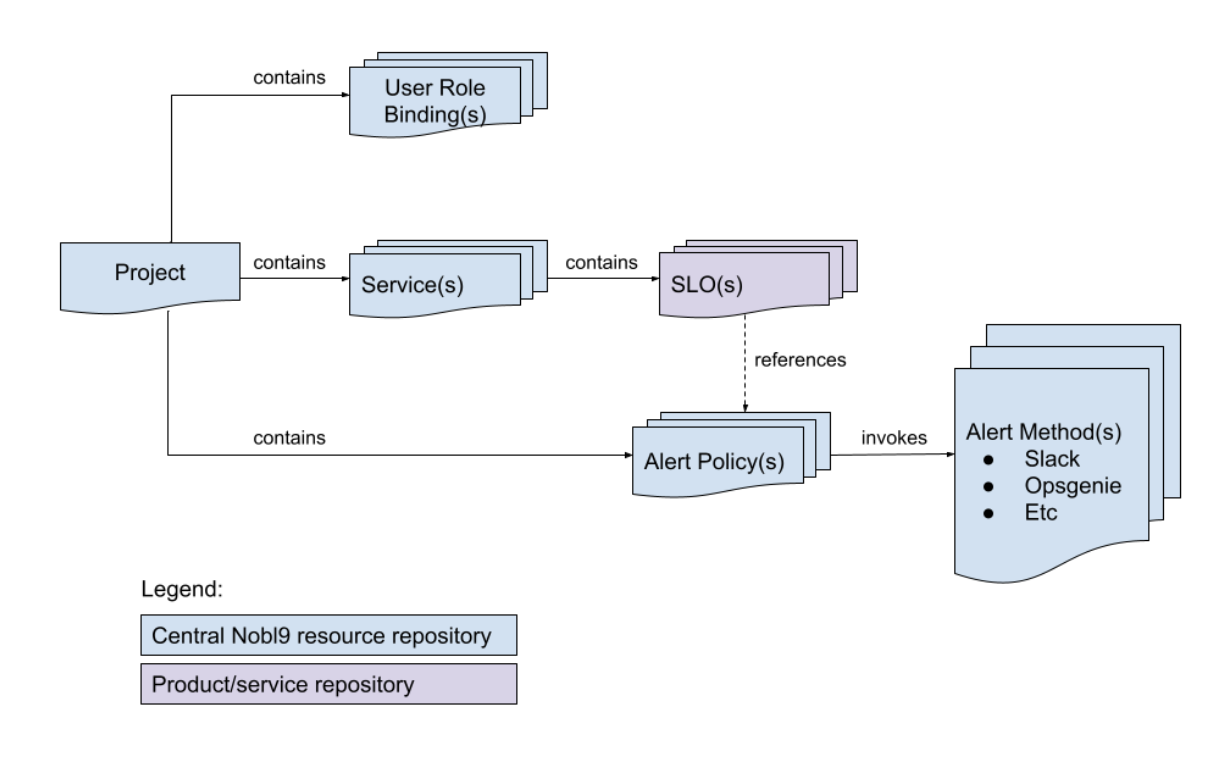

Implementing SLOs-as-Code: A Case Study

At Procore, the Observability team designed our SLOs-as-code approach to scale with Procore’s growing number of teams and services. Choosing YAML as the source of truth gives Procore a scalable approach through centralized automation. Following the examples put forth by openslo.com and embracing a ubiquitous language like YAML spares development teams the complexities of Terraform and is easier to embed in every team’s directories. We used a GitOps approach to infrastructure as code (IaC) to create and maintain our Nobl9 resources. Nobl9 resources can be defined as YAML configuration (config) files. In particular, one can declaratively define a resource’s properties in the config file and have a tool read and process that into a live, running resource. It’s important to draw a distinction between the resource and its configuration, as we’ll be discussing both throughout this article. All resources, from projects (the primary grouping of resources in Nobl9) to SLOs, can be defined through YAML.
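
To make the distinction between a resource’s configuration and the live resource concrete, here is a minimal Python sketch of the "plan" step a GitOps pipeline might run: it compares configs (as they might look once parsed from YAML) against live resources and lists what to create, update, or delete. The field names only loosely echo the OpenSLO style, and `resource_key`, `plan`, and the sample projects and SLOs are hypothetical; this is not Nobl9’s tooling or API.

```python
# Hypothetical desired state, as it might look after parsing YAML config files
# from a team's repository. Field names are illustrative, not a real schema.
DESIRED = [
    {"kind": "Project", "metadata": {"name": "checkout"},
     "spec": {"description": "Checkout services"}},
    {"kind": "SLO", "metadata": {"name": "checkout-latency", "project": "checkout"},
     "spec": {"target": 0.99, "timeWindow": "28d"}},
]

# Hypothetical live state as reported by the SLO platform.
LIVE = [
    {"kind": "Project", "metadata": {"name": "checkout"},
     "spec": {"description": "Checkout services"}},
    {"kind": "SLO", "metadata": {"name": "checkout-latency", "project": "checkout"},
     "spec": {"target": 0.95, "timeWindow": "28d"}},
    {"kind": "SLO", "metadata": {"name": "legacy-errors", "project": "checkout"},
     "spec": {"target": 0.9, "timeWindow": "7d"}},
]


def resource_key(resource):
    """Identify a resource by its kind, owning project, and name."""
    meta = resource["metadata"]
    return (resource["kind"], meta.get("project", ""), meta["name"])


def plan(desired, live):
    """Return the actions needed to make the live state match the config."""
    desired_by_key = {resource_key(r): r for r in desired}
    live_by_key = {resource_key(r): r for r in live}
    actions = []
    for key, resource in desired_by_key.items():
        if key not in live_by_key:
            actions.append(("create", key))
        elif live_by_key[key]["spec"] != resource["spec"]:
            actions.append(("update", key))
    # Anything live that is no longer declared in Git gets deleted.
    for key in live_by_key:
        if key not in desired_by_key:
            actions.append(("delete", key))
    return actions


for action, key in plan(DESIRED, LIVE):
    print(action, key)
```

Because the config in Git is the source of truth, the SLO target that drifted to 0.95 shows up as an update and the undeclared `legacy-errors` SLO as a delete; running the same plan against an empty live state would yield two creates.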

Data Management: Light At The End Of The Tunnel?

Bansal explains that the answer to the question of which technologies firms can use to manage their data better, from inception to final consumption and analysis, should be prefaced by a clear understanding of the type of operating model they are looking to establish: centralized, regional or local. It should also be answered, he says, in the context of the data value chain, the first step of which entails cataloging data with its business definitions at inception to ensure it is centrally located and discoverable to anyone across the business. Firms also need to use the right data standards to ensure consistency across the firm and its business units. Then, with respect to distribution, they need to establish a clear understanding of how they will send that data to the various market participants they interact with, as well as to internal consumers or even regulators. “That’s where application programming interfaces [APIs] come in, but it’s not a one-size-fits-all,” he says. “It’s a common understanding that APIs are the most feasible way of transferring data, but APIs without common data standards do not work.”
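
Bansal’s closing point, that APIs without common data standards do not work, can be illustrated with a small Python sketch: one agreed standard is checked on every record before it crosses an API boundary, so producers and consumers reject the same malformed data. The field names, formats, and `validate` function are hypothetical, chosen only for illustration.

```python
from datetime import date
from typing import Dict, List

# Hypothetical shared data standard: field names, types, and formats that every
# business unit agrees on before exchanging records over an API.
STANDARD = {
    "instrument_id": str,
    "trade_date": str,   # ISO 8601 date, e.g. "2023-06-30"
    "quantity": int,
    "currency": str,     # three-letter ISO 4217 code, e.g. "USD"
}


def validate(record: Dict[str, object]) -> List[str]:
    """Return a list of violations of the shared standard (empty if valid)."""
    errors = []
    for name, expected_type in STANDARD.items():
        if name not in record:
            errors.append(f"missing field: {name}")
        elif not isinstance(record[name], expected_type):
            errors.append(f"{name}: expected {expected_type.__name__}")
    for name in sorted(set(record) - set(STANDARD)):
        errors.append(f"unknown field: {name}")
    # Format rules that types alone cannot express.
    trade_date = record.get("trade_date")
    if isinstance(trade_date, str):
        try:
            date.fromisoformat(trade_date)
        except ValueError:
            errors.append("trade_date: not an ISO 8601 date")
    currency = record.get("currency")
    if isinstance(currency, str) and not (
        len(currency) == 3 and currency.isalpha() and currency.isupper()
    ):
        errors.append("currency: expected a three-letter ISO 4217 code")
    return errors


# One record that follows the standard and one that does not.
good = {"instrument_id": "ABC123", "trade_date": "2023-06-30",
        "quantity": 100, "currency": "USD"}
bad = {"instrument_id": "ABC123", "trade_date": "30/06/2023",
       "qty": 100, "currency": "usd"}
print(validate(good))
print(validate(bad))
```

The second record might be perfectly meaningful to the unit that produced it, but without the shared standard a downstream consumer has no reliable way to interpret `qty` or a day-first date.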

Three Soft Skills Leaders Should Look For When They're Recruiting

Adaptability and flexibility have always been valuable soft skills. But given the experiences we’ve all lived through over the last two-odd years, I think it’s safe to say they’ve never been more important. The continued uncertainty of today’s economic environment makes them two competencies you definitely want your employees to possess. Given what the business world can dish out, you should assess whether a candidate has what it takes to adapt to changing situations before you hire them. Adaptability and flexibility are essential components of problem-solving, time management, critical thinking and leadership. To gauge whether an interviewee has these two qualities, have them tell you about changes they made during the pandemic. What kind of direction and support did they receive from their employers? What did they think they needed but didn’t get? And how did they continue to work without whatever they lacked? Explore the candidate’s response to learning new technologies, handling last-minute client changes and sticking to a project timeline when something’s handed off to them late.

5 Benefits of Enterprise Architecture Tools

Enterprise architecture tools provide valuable insights for strategic planning and decision-making. By capturing and analyzing data on IT assets, processes, and interdependencies, these tools enable organizations to assess the impact of proposed changes or investments on their IT landscape. This helps them identify potential risks and opportunities, evaluate different scenarios, and make informed decisions about IT investments, initiatives, and resource allocation. With improved strategic planning, organizations can prioritize IT projects, optimize their technology investments, and align their IT roadmap with their business objectives.

Collaboration is crucial for effective IT landscape management, and enterprise architecture tools facilitate collaboration among different stakeholders, including IT teams, business units, and executives. These tools provide a centralized platform for sharing and accessing IT-related information, documentation, and visualizations. This promotes cross-functional collaboration, enables effective communication, and ensures that all stakeholders are on the same page about the organization’s IT landscape.
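
Assessing the impact of a proposed change largely comes down to walking the dependency graph backwards from the asset being changed. A minimal Python sketch, assuming a hypothetical landscape in which each asset simply lists what it depends on (real enterprise architecture tools model far richer metadata, and the asset names and `impacted_by` function here are made up):

```python
from collections import deque

# Hypothetical IT landscape: each asset maps to the assets it depends on.
DEPENDS_ON = {
    "customer-portal": ["auth-service", "orders-api"],
    "orders-api": ["orders-db", "auth-service"],
    "reporting": ["orders-db"],
    "auth-service": ["user-db"],
    "orders-db": [],
    "user-db": [],
}


def impacted_by(changed, depends_on):
    """Return every asset that directly or transitively depends on `changed`."""
    # Invert the graph so we can follow edges from a dependency to its dependents.
    dependents = {asset: [] for asset in depends_on}
    for asset, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(asset)
    # Breadth-first search over dependents, visiting each asset once.
    seen = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for parent in dependents.get(current, []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen


print(sorted(impacted_by("orders-db", DEPENDS_ON)))
```

Migrating `orders-db` here would touch `orders-api` and `reporting` directly and `customer-portal` transitively, which is the kind of risk a planning review would want surfaced before approving the change.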

Underutilized SaaS Apps Costing Businesses Millions

Managing a SaaS portfolio is truly a team sport that requires stakeholders from across the organization, but IT and finance teams are the most directly involved and drive the charge, Pippenger said. "For both of these teams, having a complete picture of all SaaS applications in use is crucial," he said. "It provides IT the information they need to mitigate risks, strengthen the organization's security posture, and maximize adoption." Improved visibility also gives finance teams the information they need to forecast and budget for software properly and to identify opportunities for cost savings. "Both of these groups need to partner with business units and employees who are purchasing software to understand use cases, ensure that the software being purchased is necessary, and align to the organization's holistic application strategy," he said. ... Another proven method to reduce software bloat is to rationalize the SaaS portfolio, Pippenger said. "We see a lot of redundancy, especially in categories like online training, team collaboration, project management, file storage and sharing," he said.
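
The visibility Pippenger describes can start with something as simple as comparing purchased seats with active users and grouping apps by category. A minimal Python sketch, assuming hypothetical license data, a 90-day activity window, and a 50% utilization threshold (all illustrative, not figures from the article):

```python
from collections import defaultdict

# Hypothetical license data:
# (app, category, seats purchased, seats active in the last 90 days)
APPS = [
    ("chat-a", "team collaboration", 500, 460),
    ("chat-b", "team collaboration", 200, 35),
    ("tasks-x", "project management", 150, 120),
    ("drive-y", "file storage and sharing", 300, 90),
    ("drive-z", "file storage and sharing", 100, 80),
]


def underutilized(apps, threshold=0.5):
    """Apps whose share of active seats falls below `threshold`."""
    return [name for name, _, purchased, active in apps
            if active / purchased < threshold]


def redundant_categories(apps):
    """Categories served by more than one app: candidates for rationalization."""
    by_category = defaultdict(list)
    for name, category, _, _ in apps:
        by_category[category].append(name)
    return {cat: sorted(names) for cat, names in by_category.items()
            if len(names) > 1}


def idle_seats(apps):
    """Total paid-for seats with no recent activity."""
    return sum(purchased - active for _, _, purchased, active in apps)


print(underutilized(APPS))
print(redundant_categories(APPS))
print(idle_seats(APPS))
```

In this made-up portfolio, `chat-b` and `drive-y` are both underused and sit in categories that already have a well-adopted alternative, which is exactly the overlap rationalization targets.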

Elevate Your Decision-Making: The Impact of Observability on Business Success

In the business world, finding the answer to “why” becomes more important as complexity grows. In computing, observability is about answering “why is something happening this way?” Advanced observability tools act as the heart of your environment: used according to best practices, they give you enough context to debug issues and keep your systems from causing business outages. But how can observability serve as a catalyst for your organization’s growth? According to The Observability Market and Gartner’s report, enterprises will increase their adoption rate of observability tools by 30% by 2024. In recent years, the emergence of technologies such as big data, artificial intelligence, and machine learning has accelerated the adoption of observability practices in organizations. Harnessing these tools (top open-source options include Prometheus, Grafana, and OpenTelemetry) empowers organizations to become more agile and responsive in their decision-making.

How Cybersecurity Leaders Can Capitalize on Cloud and Data Governance Synergy

In today’s organizations, explosive amounts of digital information are being used to drive business decisions and activities. However, both organizations and individuals may lack the tools and resources to carry out data governance effectively at scale. I’ve experienced this scenario in both large private- and public-sector organizations: trying to wrangle data in complex environments with multiple stakeholders, systems, and settings. It often leads to incomplete inventories of systems and their data, along with who has access to it and why. Cloud-native services, automation, and innovation enable organizations to address these challenges as part of their broader data governance strategies and under the auspices of cloud governance and security. Many hyperscale IaaS providers offer native services to enable activities such as data loss prevention (DLP). For example, AWS Macie automates the discovery of sensitive data, provides cost-efficient visibility, and helps mitigate the threats of unauthorized data access and exfiltration.
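
The general idea behind automated sensitive-data discovery is to scan content for values that look like personal or financial data and report what was found. The toy Python sketch below is not how AWS Macie works internally; the three patterns, the Luhn checksum used to cut false positives on card numbers, and the sample text are assumptions chosen for illustration, and a production scanner needs far more robust detection.

```python
import re

# Illustrative patterns only; real services use many more, and smarter, detectors.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


def luhn_ok(digits):
    """Luhn checksum: double every second digit from the right, sum, mod 10."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan(text):
    """Return {label: [matches]} for every pattern found in `text`."""
    findings = {}
    for label, pattern in PATTERNS.items():
        hits = []
        for match in pattern.finditer(text):
            value = match.group().strip(" -")
            # Long digit runs are only reported if they pass the card checksum.
            if label == "card_number" and not luhn_ok(re.sub(r"\D", "", value)):
                continue
            hits.append(value)
        if hits:
            findings[label] = hits
    return findings


sample = ("Contact jane.doe@example.com, SSN 123-45-6789, "
          "card 4111 1111 1111 1111, order 1234567890123.")
print(scan(sample))
```

The order number is a 13-digit run that fails the checksum, so only the test card number is flagged; results like these are what feed an inventory of where sensitive data lives and who can reach it.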

How Not to Use the DORA Metrics to Measure DevOps Performance

Part of the beauty of DevOps is that it doesn’t pit velocity and resilience against each other but makes them mutually beneficial. For example, frequent small releases with incremental improvements can be rolled back more easily if there’s an error. Or, if a bug is easy to identify and fix, your team can roll forward and remediate it quickly. Yet again, we can see that the DORA metrics are complementary: success in one area typically correlates with success in the others. However, driving success with mean time to recover alone can be an anti-pattern, because it can unhelpfully conceal other problems. For example, if your strategy to recover a service is always to roll back, then you’ll be taking the value of your latest release away from your users, even those who never encounter your newfound issue. While your mean time to recover will be low, your lead time figure may now be skewed because it doesn’t account for this rollback strategy, giving you a false sense of agility. Perhaps working out what it would take to always be able to roll forward is the next step on your journey to refine your software delivery process.
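
To see how a rollback-first strategy can flatter the numbers, here is a minimal Python sketch with made-up timestamps. `lead_time` measures commit to first production deploy, while `delivered_lead_time` is a hypothetical adjustment (not part of the DORA definitions) that, for a rolled-back change, measures until the change actually reached users again.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Iterable, List, Optional


@dataclass
class Change:
    committed: datetime
    deployed: datetime
    # If the change was rolled back: when it finally reached users again
    # (e.g. via a later fix). None means it was never rolled back.
    redelivered: Optional[datetime] = None


@dataclass
class Incident:
    started: datetime
    restored: datetime


def _mean(deltas: Iterable[timedelta]) -> timedelta:
    return timedelta(seconds=mean(d.total_seconds() for d in deltas))


def mean_time_to_recover(incidents: List[Incident]) -> timedelta:
    return _mean(i.restored - i.started for i in incidents)


def lead_time(changes: List[Change]) -> timedelta:
    """Naive lead time: commit to first production deploy."""
    return _mean(c.deployed - c.committed for c in changes)


def delivered_lead_time(changes: List[Change]) -> timedelta:
    """Hypothetical variant: commit to the point the change stayed delivered."""
    return _mean((c.redelivered or c.deployed) - c.committed for c in changes)


t0 = datetime(2023, 6, 1, 9, 0)
changes = [
    Change(t0, t0 + timedelta(hours=2)),
    # Rolled back after an incident, then shipped again two days later.
    Change(t0, t0 + timedelta(hours=4), redelivered=t0 + timedelta(hours=52)),
]
incidents = [Incident(t0 + timedelta(hours=5), t0 + timedelta(hours=5, minutes=15))]

print(mean_time_to_recover(incidents))  # quick rollback: 15 minutes
print(lead_time(changes))               # looks like 3 hours
print(delivered_lead_time(changes))     # closer to 27 hours of real delay
```

The rollback keeps mean time to recover at 15 minutes, but the naive lead time hides two days during which the rolled-back change delivered no value to users.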

Quote for the day:

"A little more persistence, a little more effort, and what seemed hopeless failure may turn to glorious success."

-- Elbert Hubbard
