CEOs need to start caring about the cybersecurity talent gap crisis, new report shows

The focus on cybersecurity needs to start in the boardroom, Morgan argues. CEOs at every Fortune 500 company and every midsize-to-large organization should push to have people with cybersecurity experience on their board, he says. “That could be the [chief information security officer (CISO)] or an outside executive with real-world cybersecurity experience,” he says. “Do it now to protect your organization, not after a breach or hack to protect your reputation.” According to the Cybersecurity Ventures report, 35% of Fortune 500 companies will have board members with cybersecurity experience by 2025, and that share will climb to more than 50% by 2031. By comparison, last year just 17% of Fortune 500 companies had board members with this type of background. The idea is that if cybersecurity is a regular boardroom discussion, its importance will trickle down to the rest of the organization and become part of the company’s DNA, Morgan says. He encourages executives to take cybersecurity as seriously as profit-and-loss discussions.

5 elements of a successful digital platform

“Data is everything for us,” Rotenberg said. Making sure you have high-quality data, and that you can constantly iterate on it and improve it, should be a priority when building a platform. “That’s something that we spend a lot of time on because it’s such an important foundation,” she said. One way the company uses data is to personalize the experience for clients; for example, this might mean using digital credentials. It may sound simple, but having a client’s correct mobile phone number means that Fidelity can interact with clients in the way they want. “Sometimes it’s the most basic things that actually make the biggest difference,” she said. ... There are many ways that fintechs and Fidelity could work with or against each other. “A fintech could be our competitor, our vendor, [or] we could be a client as well, and vice versa,” she said. Successful fintechs, in particular, have usually gotten one thing right: understanding a “customer friction” that other firms haven’t figured out. “They go deep in understanding the friction, they create success, and then they scale outward,” Rotenberg said.

Top cybersecurity products unveiled at Black Hat 2022

Software composition analysis (SCA), static application security testing (SAST), and container scanning are the latest capabilities added to the Cycode supply chain security management platform. All of the new components feed into Cycode’s knowledge graph, which structures and correlates data from the tools and phases of the software development life cycle so that programmers and security professionals can understand risks and coordinate responses to threats. A key function of the knowledge graph is coordinating the platform’s security tools to perform tasks such as identifying when leaked code contains secrets like API keys or passwords, which reduces risk.

Dynatrace has added support for vulnerability detection and protection across runtime environments, including the Java Virtual Machine (JVM), Node.js, and the .NET CLR, to the Application Security Module of its software and infrastructure monitoring platform. Dynatrace has also extended its support to applications running in Go, a fast-growing, open-source programming language developed at Google.
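
To make the secret-detection idea concrete, here is a minimal sketch of the kind of line-by-line check a scanner might run over leaked code. It is not Cycode’s implementation: the rule names and regular expressions in `SECRET_PATTERNS` are hypothetical, and production scanners typically combine much larger rule sets with entropy checks to reduce false positives.

```python
import re
from typing import NamedTuple

# Hypothetical, illustrative rules only; not Cycode's detection logic.
SECRET_PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # password = "..." or password: '...' with a value of 6+ characters.
    "generic_password": re.compile(r"""(?i)\bpassword\s*[:=]\s*['"][^'"]{6,}['"]"""),
    # api_key / api-key / apikey assignments with a long token-like value.
    "generic_api_key": re.compile(r"""(?i)\bapi[_-]?key\s*[:=]\s*['"][A-Za-z0-9_\-]{16,}['"]"""),
}


class Finding(NamedTuple):
    rule: str
    line_no: int  # 1-based; the matched secret itself is deliberately not stored


def scan_for_secrets(source: str) -> list[Finding]:
    """Return a Finding for every (line, rule) pair that matches."""
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append(Finding(rule, line_no))
    return findings


leaked = (
    'db_host = "10.0.0.5"\n'
    'password = "hunter2hunter2"\n'
    'AWS_KEY = "AKIAABCDEFGHIJKLMNOP"\n'
)
for finding in scan_for_secrets(leaked):
    print(finding.rule, finding.line_no)
```

Recording only the rule and line number, rather than the matched text, keeps the scanner’s own output from becoming a second leak.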

Google Cloud and Apollo24|7: Building a Clinical Decision Support System (CDSS) together

For any health organization that wants to build a CDSS, one key building block is locating and extracting the medical entities present in clinical notes, medical journals, discharge summaries, and similar documents. Along with entity extraction, the other key components of a CDSS are capturing temporal relationships, subjects, and certainty assessments. ... The advantage of AutoML Entity Extraction is that it can be trained on a new dataset. One prerequisite to keep in mind, however, is that the input data needs a little pre-processing to put it into the required JSONL format. And since this is an AutoML model built only for entity extraction, it does not extract relationships, certainty assessments, and so on. ... The major advantage of these BERT-based models is that they can be fine-tuned on any entity recognition task with minimal effort. However, because this is a custom approach, it requires some technical expertise. These models also do not extract relationships, certainty assessments, and so on, which is one of the main limitations of using them.
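
The JSONL pre-processing step for AutoML Entity Extraction can be sketched as follows. This sketch assumes the Vertex AI text entity extraction import schema (a `textContent` string plus a list of `textSegmentAnnotations`, each with `startOffset`, `endOffset`, and `displayName`) and assumes character offsets with an exclusive end; check both against the current Google Cloud documentation before relying on them. The helper names, the clinical note, and the `PROBLEM`/`MEDICATION` labels are hypothetical.

```python
import json

# Field names assume the Vertex AI text entity extraction import schema;
# verify against current Google Cloud docs. Offsets are character offsets,
# assumed here to use an exclusive end (Python slice convention).


def to_jsonl_record(text: str, entities: list[tuple[str, str]]) -> dict:
    """Annotate the first occurrence of each (surface_text, label) pair in text."""
    annotations = []
    for surface, label in entities:
        start = text.find(surface)
        if start == -1:
            raise ValueError(f"entity {surface!r} not found in note")
        annotations.append(
            {"startOffset": start, "endOffset": start + len(surface), "displayName": label}
        )
    return {"textContent": text, "textSegmentAnnotations": annotations}


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(record) + "\n" for record in records)


# Hypothetical clinical note and labels, for illustration only.
note = "Patient reports chest pain; started aspirin 75 mg."
record = to_jsonl_record(note, [("chest pain", "PROBLEM"), ("aspirin", "MEDICATION")])
print(to_jsonl([record]), end="")
```

Annotating only the first occurrence of each string is a simplification; real notes that mention a drug twice would need every span labeled, usually by an annotation tool rather than string search.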

In a hybrid workforce world, what happens to all that office space?

Amy Loomis, a research director for IDC's worldwide Future of Work market research service, said her research isn't showing an overall reduction in square footage, but more companies may be subleasing unused space or reconfiguring it to better suit hybrid work. The key phrase is "space optimization," which companies are pursuing both to attract new employees and for environmental sustainability. In North America, 34% of companies surveyed by IDC said that was a key driver of their real estate investments. “What we’re seeing is repurposing of office space,” Loomis said. “Organizations are investing in office spaces and making them as dynamic, reconfigurable, and sustainable as possible. So, yes, they left that building during the pandemic and predominantly went remote and hybrid, but as people are going forward into the new office space, it’s more likely to be multi-purpose, multifunction, multi-tenant,” Loomis added. Many real estate developers now see the value in repurposing spaces to include not only room for commercial use, but also space for retail and even residential housing.

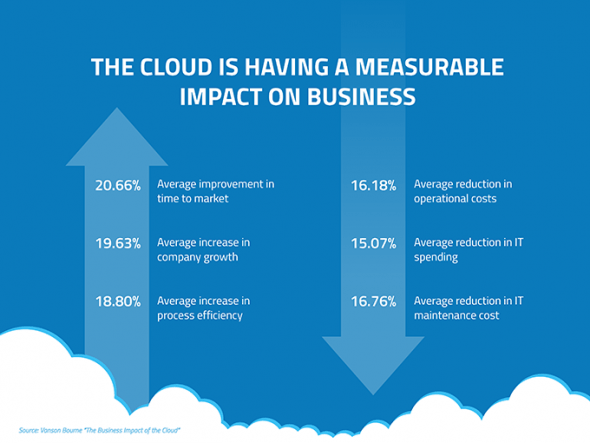

6 Myths About the Cloud That You Should Stop Believing

Cloud migration is an enticing prospect, but you’ve probably heard what happens when you have too much of a good thing. Moving to the cloud and integrating cloud data doesn’t have to mean migrating your entire business at once. Despite the recognized short- and long-term benefits, the expense of doing everything at once would be too daunting for many organizations. Cloud migration can take many forms. A hybrid approach to cloud technology is considerably more common, with many organizations starting with a particular area or application (such as email) and working their way up. ... True, virtualization is a vital technology for cloud computing, but virtualization doesn’t equal cloud computing. While virtualization is mainly concerned with workload and server consolidation to reduce infrastructure costs, cloud computing encompasses much more. Consider that, according to an IOUG (Independent Oracle User Group) study of its members, cloud clients are embracing Platform as a Service (PaaS) faster than Infrastructure as a Service (IaaS).

Department of Health investigates bias in medical devices and algorithms

As part of an independent review into equity in medical devices, led by Margaret Whitehead, WH Duncan chair of public health in the Department of Public Health and Policy, the government is seeking to tackle disparities in healthcare by gathering evidence on how medical devices and technologies may be biased against patients of different ethnicities, genders, and other socio-demographic groups. For instance, some devices that use infrared light or imaging may not perform as well on patients with darker skin pigmentation, a factor that has not been accounted for in the development and testing of those devices. Experts are being asked to provide as much information as possible about biases in medical devices. Along with the device type, name, brand, or manufacturer, the review is seeking as much detail as possible about the intended use of potentially discriminatory devices, the patient populations on which they are used, and how and why they may not be equally effective or safe for all intended patient groups.

Event-Driven Architectures & the Security Implications

It’s never easy to crush a rock, but it is far from impossible. Moving an existing application from a traditional architecture to EDA requires extensive resources and development time. And while building something new can be exciting, reworking the old can feel unrewarding, especially when it still seems to work. As a result, such a drastic transition is sometimes postponed. However, the transformation can be quite enlightening from both a technical and an operational viewpoint. Developers perceive EDA as inherently complex, especially for businesses with intricate processes. There is concern that EDA does not effectively capture critical aspects of a company, and that the lack of a centralized structure makes monitoring and debugging the system more challenging. However, this complexity does not simply disappear by opting for a different architecture. Monitoring and debugging become easier with tracing tools tailor-made for distributed systems, proper encapsulation of individual services, and an in-depth understanding of what each service does and which events should trigger it.
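
As a rough illustration of why tracing helps in an event-driven system, the sketch below uses an in-process event bus in which every follow-on event inherits the correlation ID of the event that caused it, so one business flow can be reconstructed from the trace log even though no central component orchestrates it. The `EventBus` and `Event` classes and the event names are hypothetical; in a real distributed system the ID would travel in message headers and be collected by a tracing tool rather than kept in a Python list.

```python
import uuid
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class Event:
    name: str
    payload: dict
    # Shared by every event in one business flow, so the flow can be traced
    # end to end even though no central component orchestrates it.
    correlation_id: str = field(default_factory=lambda: uuid.uuid4().hex)


class EventBus:
    """Hypothetical in-process bus; real systems would use a broker and a tracer."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Callable[[Event], None]]] = defaultdict(list)
        self.trace: list[tuple[str, str]] = []  # (correlation_id, event name), in publish order

    def subscribe(self, name: str, handler: Callable[[Event], None]) -> None:
        self._handlers[name].append(handler)

    def publish(self, event: Event) -> None:
        self.trace.append((event.correlation_id, event.name))
        for handler in self._handlers[event.name]:
            handler(event)

    def emit_child(self, parent: Event, name: str, payload: dict) -> None:
        """Publish a follow-on event that inherits the parent's correlation ID."""
        self.publish(Event(name, payload, parent.correlation_id))


bus = EventBus()
# Each service reacts only to the event that should trigger it.
bus.subscribe("OrderPlaced", lambda e: bus.emit_child(e, "StockReserved", {"sku": e.payload["sku"]}))
bus.subscribe("StockReserved", lambda e: bus.emit_child(e, "PaymentCaptured", {}))
bus.publish(Event("OrderPlaced", {"sku": "A-1"}))

for correlation_id, name in bus.trace:
    print(correlation_id[:8], name)
```

Filtering the trace by one correlation ID yields the full OrderPlaced → StockReserved → PaymentCaptured chain, which is the "centralized view" that critics of EDA say is missing.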

Composing the future of banks

The biggest challenge for any bank is how to reach such a vision of composable banking when, after decades of investment in technology automation, it has hundreds or thousands of systems: some sharing data through extraction, some integrated through technical bridges, and perhaps a few more modern ones connected through APIs. Integration is one of the biggest headaches a bank has; composable banking would be simpler if every system had APIs, but that just isn’t the real world. In addition, not every process is based on system-to-system interaction. Some processes require human intervention, often managed by business process automation software. Sometimes these manual steps exist because the systems could not be integrated otherwise: the “swivel chair” problem of keying data from one system into another. In the last few years, artificial intelligence (AI) has been added to the mix to make the routing of these flows smarter. As always, technologists are great at solving individual processes, but business tends to be more complex, and it is only much later that we start to see the bigger picture.
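
One common way to approach this integration problem is the adapter pattern: hide each system’s integration style behind a single interface, so composed services never need to know whether data arrives through an API or a batch extract. The sketch below assumes two hypothetical systems, a core banking platform with an API (stubbed as a dict) and a card system that only produces a CSV extract; all class, field, and account names are invented for illustration.

```python
import csv
import io
from abc import ABC, abstractmethod


class AccountSource(ABC):
    """Uniform interface that composed banking services program against."""

    @abstractmethod
    def balance(self, account_id: str) -> int:
        """Return the balance in minor units (e.g. cents)."""


class ApiCoreBanking(AccountSource):
    """Modern system that already exposes an API; stubbed here with a dict."""

    def __init__(self, api_responses: dict[str, dict]) -> None:
        self._api = api_responses

    def balance(self, account_id: str) -> int:
        return self._api[account_id]["balance_minor"]


class BatchExtractAdapter(AccountSource):
    """Legacy system that only shares data through a periodic CSV extract."""

    def __init__(self, csv_text: str) -> None:
        reader = csv.DictReader(io.StringIO(csv_text))
        self._balances = {row["acct"]: int(row["bal_cents"]) for row in reader}

    def balance(self, account_id: str) -> int:
        return self._balances[account_id]


def customer_total(holdings: list[tuple[AccountSource, str]]) -> int:
    """Sum balances across systems without knowing how each one is integrated."""
    return sum(source.balance(account_id) for source, account_id in holdings)


core = ApiCoreBanking({"CHK-1": {"balance_minor": 12_500}})
cards = BatchExtractAdapter("acct,bal_cents\nCARD-9,-3000\n")
print(customer_total([(core, "CHK-1"), (cards, "CARD-9")]))  # 9500
```

The adapter hides the integration style but not its limits: balances served by `BatchExtractAdapter` are only as fresh as the last extract.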

How to Hire the Best AI & Machine Learning Consultants

AI and machine learning consultants are qualified, experienced AI designers, developers, and other experts who help design, implement, and integrate AI solutions into a company’s business environment. They can provide, develop, and advise on a wide range of AI capabilities, such as predictive analytics, data science, natural language processing (NLP), computer vision, process automation, voice-enabled technology, and much more. These consultants can evaluate the potential of a company’s data, software infrastructure, and technology to deploy AI systems and workflows effectively. When bringing on the best AI and machine learning consultants, look for specialists who go beyond data science, because most AI and machine learning projects involve far more than that. For example, they involve engineering and aggregating data and formatting it to train an AI system. These projects also often involve hardware, wireless, and networking, so the consultant should also have expertise in the cloud and the Internet of Things (IoT).

Quote for the day:

"The great leaders are like best conductors. They reach beyond the notes to reach the magic in the players."

-- Blaine Lee
