Intel Hopes To Accelerate Data Center & Edge With A Slew Of Chips

McVeigh noted that Intel’s integrated accelerators will be complemented by the

upcoming discrete GPUs. He called the Flex Series GPUs “HPC on the edge,” with

their low power envelopes, and pointed to Ponte Vecchio – complete with 100

billion transistors in 47 chiplets that leverage both Intel 7 manufacturing

processes as well as 5 nanometer and 7 nanometer processes from Taiwan

Semiconductor Manufacturing Co – and then Rialto Bridge. Both Ponte Vecchio and

Sapphire Rapids will be key components in Argonne National Laboratory’s Aurora exascale

supercomputer, which is due to power on later this year and will deliver more

than 2 exaflops of peak performance. ... “Another part of the value of the

brand here is around the software unification across Xeon, where we leverage the

massive amount of capabilities that are already established through decades

throughout that ecosystem and bring that forward onto our GPU rapidly with

oneAPI, really allowing for both the sharing of workloads across CPU and GPU

effectively and to ramp the codes onto the GPU faster than if we were starting

from scratch,” he said.

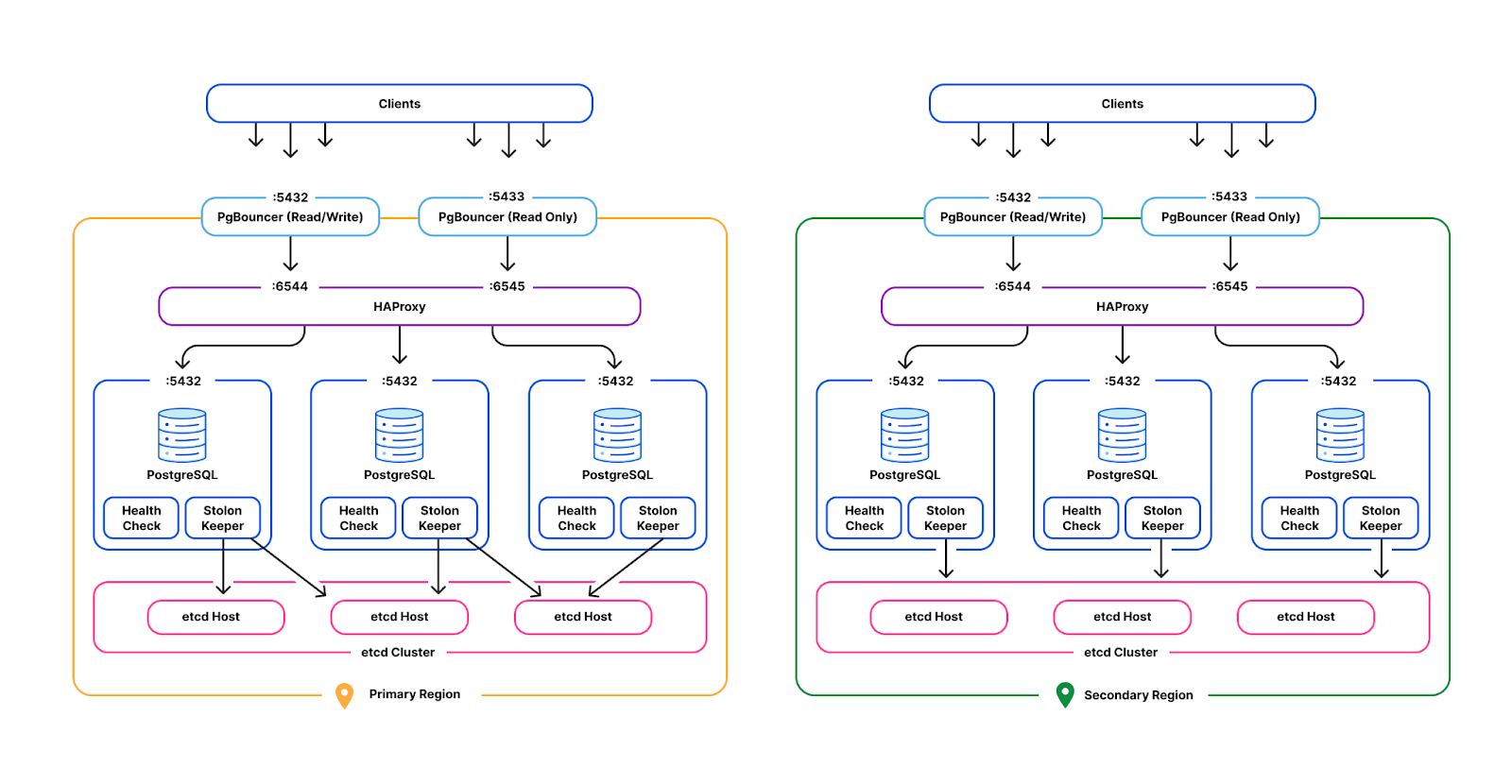

Performance isolation in a multi-tenant database environment

Our multi-tenant Postgres instances operate on bare metal servers in

non-containerized environments. Each backend application service is considered a

single tenant, and may use one of multiple Postgres roles. Because each

cluster serves multiple tenants, all tenants share and contend for available

system resources such as CPU time, memory, disk IO on each cluster machine, as

well as finite database resources such as server-side Postgres connections and

table locks. Each tenant has a unique workload that varies in system level

resource consumption, making it impossible to enforce throttling using a global

value. This has become problematic in production, affecting neighboring

tenants in two ways. Throughput: A tenant may issue a burst of transactions, starving

other tenants of shared resources and degrading their performance. Latency: A single

tenant may issue very long or expensive queries, often concurrently, such as

large table scans for ETL extraction or queries with lengthy table locks. Both

of these scenarios can result in degraded query execution for neighboring

tenants. Their transactions may hang or take significantly longer to execute due

to either reduced CPU share time, or slower disk IO operations due to many seeks

from misbehaving tenant(s).
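
Because a single global value cannot fit every workload, one option is to throttle each tenant against its own limit. The sketch below is a minimal illustration of that idea using a per-tenant token bucket. It is not the approach described in the excerpted article; the tenant names, rates, and the `TenantThrottle` class are hypothetical.

```python
import time


class TokenBucket:
    """Holds at most `capacity` tokens, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, now=0.0):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so short bursts are allowed
        self.last = now

    def allow(self, now, cost=1.0):
        # Refill in proportion to the time elapsed since the last call.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class TenantThrottle:
    """One bucket per tenant, so a noisy tenant only exhausts its own budget."""

    def __init__(self, limits, clock=time.monotonic):
        # `limits` maps tenant name -> (rate per second, burst capacity).
        self.clock = clock
        start = clock()
        self.buckets = {
            tenant: TokenBucket(rate, capacity, now=start)
            for tenant, (rate, capacity) in limits.items()
        }

    def allow(self, tenant, cost=1.0):
        return self.buckets[tenant].allow(self.clock(), cost)


# Hypothetical limits: an ETL tenant gets a small budget, an API tenant a larger one.
throttle = TenantThrottle({"etl": (1.0, 2.0), "api": (100.0, 5.0)})
if throttle.allow("etl"):
    pass  # run the tenant's query here
```

The `cost` argument lets an expensive query (for example, a large table scan) consume more of a tenant's budget than a cheap one, which addresses the latency case as well as the throughput case.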

Quantum Encryption Is No More A Sci-Fi! Real-World Consequences Await

Quantum will enable enterprise customers to perform complex simulations in

significantly less time than traditional software using quantum computers.

Quantum algorithms are very challenging to develop, implement, and test on

current quantum computers. Quantum techniques are also being used to improve the

randomness of computer-based random number generators. The world’s leading

quantum scientists in the field of quantum information engineering are working to

turn what was once in the realm of science fiction into reality. Businesses need to deploy

next-generation data security solutions with equally powerful protection based

on the laws of quantum physics, literally fighting quantum computers with

quantum encryption. Quantum computers today are no longer considered to be

science fiction. The main difference is that quantum encryption uses quantum

bits, or qubits, composed of optical photons, rather than electrical binary digits,

or bits. Qubits can also be inextricably linked together using a phenomenon

called quantum entanglement.

What Is The Difference Between Computer Vision & Image processing?

We are constantly exposed to and engaged with various visually similar objects

around us. By using machine learning techniques, the discipline of AI known as

computer vision enables machines to see, comprehend, and interpret the visual

environment around us. It uses machine learning approaches to extract useful

information from digital photos, videos, or other visual inputs by

identifying patterns. Although computer vision and image processing look and feel similar, they

differ in a few ways. Computer vision aims to distinguish between, classify, and

arrange images according to their distinguishing characteristics, such as size,

color, etc. This is similar to how people perceive and interpret images. ...

Digital image processing uses a digital computer to process digital and optical

images. A computer views an image as a two-dimensional signal composed of pixels

arranged in rows and columns. A digital image comprises a finite number of

elements, each located in a specific place with a particular value. These

elements are known as pixels, picture elements, or image elements.
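
To make the rows-and-columns description concrete, here is a minimal sketch of a grayscale image stored as a grid of pixel values, with a simple thresholding step as an example of image processing (an image goes in, a transformed image comes out). The pixel values, the cutoff of 128, and the `threshold` function are illustrative assumptions, not taken from the article.

```python
# A tiny 3x4 grayscale image: each inner list is a row, each number a pixel
# intensity from 0 (black) to 255 (white).
image = [
    [0, 50, 100, 150],
    [25, 75, 125, 175],
    [200, 220, 240, 255],
]


def threshold(img, cutoff):
    """Return a binary image: 255 where the pixel is >= cutoff, otherwise 0."""
    return [[255 if pixel >= cutoff else 0 for pixel in row] for row in img]


rows, cols = len(image), len(image[0])
binary = threshold(image, 128)

# Each pixel is addressed by its (row, column) position.
print(rows, cols, image[1][2], binary[1][2])
```

A computer vision system would go one step further and interpret the result, for example by labeling the bright region as an object.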

Lessons in mismanagement

In the decades since the movie’s release, the world has become a different place

in some important ways. Women are now everywhere in the world of business, which

has changed irrevocably as a result. Unemployment is quite low in the United

States and, by Continental standards, in Europe. Recent downturns have been

greeted by large-scale stimuli from central banks, which have blunted the impact

of stock market slides and even a pandemic. But it would be foolish to think

that the horrendous managers and desperate salesmen of Glengarry Glen Ross exist

only as historical artifacts. Mismanagement and desperation go hand in hand and

are most apparent during hard times, which always come around sooner or later.

By immersing us in the commercial and workplace culture of the past, movies such

as Glengarry can help us understand our own business culture. But they can also

help prepare us for hard times to come—and remind us how not to manage, no

matter what the circumstances. ... Everyone, in every organization, has to

perform.

How the energy sector can mitigate rising cyber threats

As energy sector organisations continue expanding their connectivity to improve

efficiency, they must ensure that the perimeters of their security processes

keep up. Without properly secured infrastructure, no digital transformation will

ever be successful, and not only internal operations, but also the data of

energy users are bound to become vulnerable. But by following the above

recommendations, energy companies can go a long way in keeping their

infrastructure protected in the long run. This endeavour can be strengthened

further by partnering with cyber security specialists like Dragos, which

provides an all-in-one platform that enables real-time visualisation, protection

and response against ever present threats to the organisation. These

capabilities, combined with threat intelligence insights and supporting services

across the industrial control system (ICS) journey, are sure to provide peace of

mind and added confidence in the organisation’s security strategy. For more

information on Dragos’s research around cyber threat activity targeting the

European energy sector, download the Dragos European Industrial Infrastructure

Cyber Threat Perspective report.

How to hire (and retain) Gen Z talent

The global pandemic has forever changed the way we work. The remote work model

has been successful, and we’ve learned that productivity does not necessarily

decrease when managers and their team members are not physically together. This

has been a boon for Gen Z – a generation that grew up surrounded by technology.

Creating an environment that gives IT employees the flexibility to conduct their

work remotely has opened the door to a truly global workforce. Combined with the

advances in digital technologies, we’ve seen a rapid and seamless transition in

how employment is viewed. Digital transformation has leveled the playing field

for many companies by changing requirements around where employees need to work.

Innovative new technologies, from videoconferencing to IoT, have shifted the

focus from an employee’s location to their ability. Because accessing

information and managing vast computer networks can be done remotely, the

location of workers has become a minor issue.

'Sliver' Emerges as Cobalt Strike Alternative for Malicious C2

Enterprise security teams, which over the years have honed their ability to

detect the use of Cobalt Strike by adversaries, may also want to keep an eye out

for "Sliver." It's an open source command-and-control (C2) framework that

adversaries have increasingly begun integrating into their attack chains. "What

we think is driving the trend is increased knowledge of Sliver within offensive

security communities, coupled with the massive focus on Cobalt Strike [by

defenders]," says Josh Hopkins, research lead at Team Cymru. "Defenders are now

having more and more successes in detecting and mitigating against Cobalt

Strike. So, the transition away from Cobalt Strike to frameworks like Sliver is

to be expected," he says. Security researchers from Microsoft this week warned

about observing nation-state actors, ransomware and extortion groups, and other

threat actors using Sliver along with — or often as a replacement for — Cobalt

Strike in various campaigns. Among them are DEV-0237, a financially motivated

threat actor associated with the Ryuk, Conti, and Hive ransomware families, and

several groups engaged in human-operated ransomware attacks, Microsoft said.

Data Management in the Era of Data Intensity

When your data is spread across multiple clouds and systems, it can introduce

latency, performance, and quality problems. And bringing together data from

different silos and getting those data sets to speak the same language is a

time- and budget-intensive endeavor. Your existing data platforms also may

prevent you from managing hybrid data processing, which, as Ventana Research

explains, “enable[s] analysis of data in an operational data platform without

impacting operational application performance or requiring data to be extracted

to an external analytic data platform.” The firm adds that: “Hybrid data

processing functionality is becoming increasingly attractive to aid the

development of intelligent applications infused with personalization and

artificial intelligence-driven recommendations.” Such applications are clearly

important because they can be key business differentiators and enable you to

disrupt a sector. However, if you are grappling with siloed systems and data and

legacy technology that is unable to ingest high volumes of complex data fast so

that you can act in the moment, you may believe that it is impossible for your

business to benefit from the data synergies that you and your customers might

otherwise enjoy.

How to Achieve Data Quality in the Cloud

Everybody knows data quality is essential. Most companies spend significant

money and resources trying to improve data quality. However, despite these

investments, companies still lose between $9.7 million and $14.2 million

annually because of poor-quality data. Traditional data quality programs

do not work well for identifying data errors in cloud environments for several reasons. Most

organizations only look at the data risks they know, which is likely only the

tip of an iceberg. Usually, data quality programs focus on completeness,

integrity, duplicates and range checks. However, these checks only represent 30

to 40 percent of all data risks. Many data quality teams do not check for data

drift, anomalies or inconsistencies across sources, which account for over 50

percent of data risks. The number of data sources, processes and applications

has exploded because of the rapid adoption of cloud technology, big data

applications and analytics. These data assets and processes require careful data

quality control to prevent errors in downstream processes. The data engineering

team can add hundreds of new data assets to the system in a short

period.
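
The checks named above can be sketched in a few lines. The example below is a rough illustration, assuming records arrive as dictionaries: it covers completeness, duplicates, and range checks, plus a very simple drift test that compares a new batch's mean with a baseline. All function names, sample records, and the drift threshold of 3.0 are hypothetical, and a production drift check would likely use a more robust statistic.

```python
from statistics import mean, stdev


def completeness(rows, column):
    """Fraction of rows where `column` is present and not None."""
    return sum(r.get(column) is not None for r in rows) / len(rows)


def duplicate_keys(rows, key):
    """Set of key values that appear more than once."""
    seen, dups = set(), set()
    for r in rows:
        if r[key] in seen:
            dups.add(r[key])
        seen.add(r[key])
    return dups


def out_of_range(rows, column, low, high):
    """Rows whose non-missing value falls outside [low, high]."""
    return [
        r for r in rows
        if r.get(column) is not None and not (low <= r[column] <= high)
    ]


def mean_drift(baseline, current, threshold=3.0):
    """True if the current mean is more than `threshold` baseline standard
    deviations away from the baseline mean (a crude drift signal)."""
    z = abs(mean(current) - mean(baseline)) / stdev(baseline)
    return z > threshold


# Hypothetical sample records.
records = [
    {"id": 1, "amount": 10},
    {"id": 2, "amount": None},
    {"id": 2, "amount": -5},
    {"id": 3, "amount": 20},
]
print(completeness(records, "amount"))
print(duplicate_keys(records, "id"))
print(out_of_range(records, "amount", 0, 100))
print(mean_drift([10, 11, 9, 10, 10], [20, 21, 19]))
```

The first three functions correspond to the traditional checks; `mean_drift` stands in for the drift and anomaly checks that many teams skip.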

Quote for the day:

"Problem-solving leaders have one thing in common: a faith that there's always a better way." -- Gerald M. Weinberg
