6 key board questions CIOs must be prepared to answer

The board wants assurances that the CIO has command of tech investments tied to

corporate strategy. “Demystify that connection,” Ferro says. “Show how those

investments tie to the bigger picture and show immediate return as much as you

can.” Global CIO and CDO Anupam Khare tries to educate the board of manufacturer

Oshkosh Corp. in his presentations. “My slide deck is largely in the context of

the business so you can see the benefit first and the technology later. That

creates curiosity about how this technology creates value,” Khare says. “When we

say, ‘This project or technology has created this operating income impact on the

business,’ that’s the hook. Then I explain the driver for that impact, and that

leads to a better understanding of how the technology works.” Board members may

also come in with technology suggestions of their own that they hear about from

competitors or from other boards they’re on. ... Avoid the urge to break out

technical jargon to explain the merits of new cloud platforms, customer-facing

apps, or Slack as a communication tool, and “answer that question from a

business context, not from a technology context,” Holley says.

From applied AI to edge computing: 14 tech trends to watch

Mobility has arrived at a “great inflection” point — a shift towards autonomous,

connected, electric and smart (ACES) technologies. This shift aims to disrupt markets

while improving efficiency and sustainability of land and air transportation of

people and goods. ACES technologies for road mobility saw significant adoption

during the past decade, and the pace could accelerate because of sustainability

pressures, McKinsey said. Advanced air-mobility technologies, on the other hand,

are either in pilot phase — for example, airborne-drone delivery — or remain in

the early stages of development — for example, air taxis — and face some

concerns about safety and other issues. Overall, mobility technologies, which

attracted $236bn last year, aim to improve the efficiency and sustainability

of land and air transportation of people and goods. ... It focuses on the use of

goods and services that are produced with minimal environmental impact by using

low carbon technologies and sustainable materials. At a macro level, sustainable

consumption is critical to mitigating environmental risks, including climate

change.

Why Memory Enclaves Are The Foundation Of Confidential Computing

Data encryption has been around for a long time. It was first made available for

data at rest on storage devices like disk and flash drives as well as data in

transit as it passed through the NIC and out across the network. But data in use

– literally data in the memory of a system within which it is being processed –

has not, until fairly recently, been protected by encryption. With the addition

of memory encryption and enclaves, it is now possible to actually deliver a

Confidential Computing platform with a TEE that provides data confidentiality.

This stops unauthorized entities, either people or applications, from

viewing data while it is in use, in transit, or at rest. ... It effectively

allows enterprises in regulated industries as well as government agencies and

multi-tenant cloud service providers to better secure their environments.

Importantly, Confidential Computing means that any organization running

applications on the cloud can be sure that any other users of the cloud capacity

and even the cloud service providers themselves cannot access the data or

applications residing within a memory enclave.

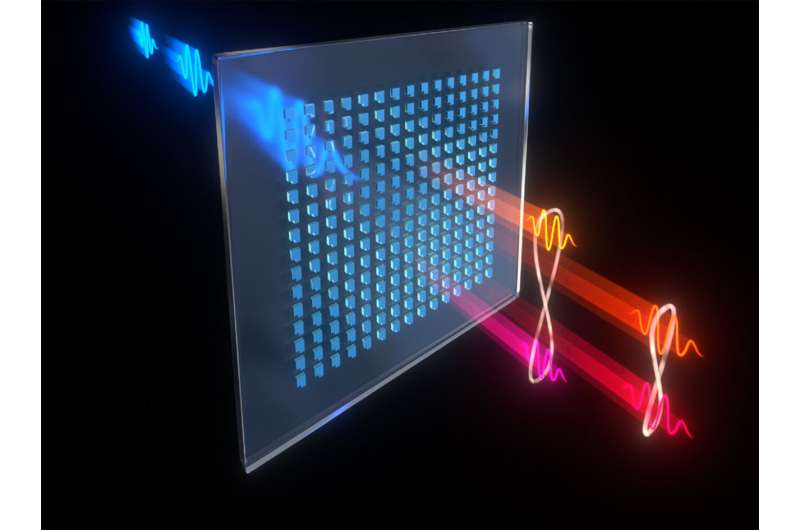

Metasurfaces offer new possibilities for quantum research

Metasurfaces are ultrathin planar optical devices made up of arrays of

nanoresonators. Their subwavelength thickness (a few hundred nanometers)

renders them effectively two-dimensional. That makes them much easier to

handle than traditional bulky optical devices. Even more importantly, due to

their reduced thickness, the momentum conservation of the photons is relaxed

because the photons have to travel through far less material than with

traditional optical devices: according to the uncertainty principle,

confinement in space leads to undefined momentum. This allows for multiple

nonlinear and quantum processes to happen with comparable efficiencies and

opens the door for the usage of many new materials that would not work in

traditional optical elements. For this reason, and also because they are

compact and more practical to handle than bulky optical elements, metasurfaces

are coming into focus as sources of photon pairs for quantum experiments. In

addition, metasurfaces could simultaneously transform photons in several

degrees of freedom, such as polarization, frequency, and path.
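
One way to make the momentum argument above concrete is the textbook phase-matching factor for a nonlinear process in a medium of thickness L, sinc²(Δk·L/2), where Δk is the momentum (wave-vector) mismatch. The sketch below is only an illustration of that general formula, not the method used in the research described; the function name and the 5 µm coherence length are assumptions chosen for the example.

```python
import math


def phase_matching_factor(thickness_m: float, delta_k: float) -> float:
    """Relative efficiency sinc^2(delta_k * L / 2) for a mismatch delta_k in rad/m."""
    x = delta_k * thickness_m / 2.0
    if x == 0.0:
        return 1.0
    return (math.sin(x) / x) ** 2


# Assumed, illustrative mismatch: coherence length L_c = pi / delta_k = 5 micrometres.
COHERENCE_LENGTH_M = 5e-6
DELTA_K = math.pi / COHERENCE_LENGTH_M

# A layer a few hundred nanometres thick barely notices the mismatch...
metasurface = phase_matching_factor(300e-9, DELTA_K)
# ...while a 1 mm crystal with the same mismatch is strongly suppressed
# (the factor can never exceed 1 / x^2).
bulk_crystal = phase_matching_factor(1e-3, DELTA_K)

print(f"metasurface factor:  {metasurface:.3f}")
print(f"bulk crystal factor: {bulk_crystal:.2e}")
```

With zero mismatch a thick crystal still produces more signal, since efficiency also grows with thickness; the point of the sketch is only that a very thin layer tolerates a mismatch that a bulk crystal does not.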

Agile: Starting at the top

Having strong support was key to this change in beliefs among the leadership

team. Aisha Mir, IT Agile Operations Director for Thales North America, has a

track record of successful agile transformations under her belt and was eager

to help the leadership team overcome any initial hurdles. “The best thing I

saw out of previous transformations I’ve been a part of was the way that the

team started working together and the way they were empowered. I really wanted

that for our team,” says Mir. “In those first few sprints, we saw that there

were ways for all of us to help each other, and that’s when the rest of the

team began believing. I had seen that happen before – where the team really

becomes one unit and they see what tasks are in front of them – and they scrum

together to finish it.” While the support was essential, one motivating factor

helped them work through any challenge in their way: How could they ask other

parts of the IT organization to adopt agile methodologies if they couldn’t do

it themselves? “When we started, we all had some level of skepticism but were

willing to try it because we knew this was going to be the life our

organization was going to live,” says Daniel Baldwin

AutoML: The Promise vs. Reality According to Practitioners

The data collection, data tagging, and data wrangling of pre-processing are

still tedious, manual processes. There are utilities that provide some time

savings and aid in simple feature engineering, but overall, most practitioners

do not make use of AutoML as they prepare data. In post-processing, AutoML

offerings have some deployment capabilities. But deployment is famously a

problematic interaction between MLOps and DevOps in need of automation. Take

for example one of the most common post-processing tasks: generating reports

and sharing results. While cloud-hosted AutoML tools are able to auto-generate

reports and visualizations, our findings show that users are still adopting

manual approaches to modify default reports. The second most common

post-processing task is deploying models. Automated deployment was only

afforded to users of hosted AutoML tools and limitations still existed for

security or end user experience considerations. The failure of AutoML to be

end-to-end can actually cut into the efficiency improvements.

Best Practices for Building Serverless Microservices

There are two schools of thought when it comes to structuring your

repositories for an application: monorepo vs multiple repos. A monorepo is a

single repository that has logical separations for distinct services. In other

words, all microservices would live in the same repo but would be separated by

different folders. Benefits of a monorepo include easier discoverability and

governance. Drawbacks include the size of the repository as the application

scales, large blast radius if the master branch is broken, and ambiguity of

ownership. On the flip side, having a repository per microservice has its ups

and downs. Benefits of multiple repos include distinct domain boundaries,

clear code ownership, and succinct and minimal repo sizes. Drawbacks include

the overhead of creating and maintaining multiple repositories and applying

consistent governance rules across all of them. In the case of serverless, I

opt for a repository per microservice. It draws clear lines for what the

microservice is responsible for and keeps the code lightweight and

focused.

5 Super Fast Ways To Improve Core Web Vitals

High-quality images consume more space. When the image size is big, your

loading time will increase. If the loading time increases, the user experience

will be affected. So, keeping the image size as small as possible is best.

Compress the image size. If you have created your website using WordPress, you

can use plugins like ShortPixel to compress the image size. If not, many

online sites are available to compress image size. However, you might

wonder: does compression affect the quality of the image? To some extent, yes.

It will reduce the quality, but the loss is usually visible only when zooming in on

the image. Moreover, use JPEG format for images and SVG format for logos and

icons. Even better, use the WebP format if you can. ... One of the important

metrics of the Core Web Vitals is Cumulative Layout Shift (CLS). Imagine that

you're scrolling through a website on your phone. You think the page is ready

for you to interact with. Now, you see some text with a hyperlink that has caught

your interest, and you're about to click it. When you click it, all of a

sudden, the text disappears, and there is an image in the place of the

text.
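
The kind of shift described above is what Cumulative Layout Shift quantifies. As a rough sketch, each individual layout shift is scored as its impact fraction multiplied by its distance fraction, and the page's CLS is the largest total score within one session window (shifts less than 1 second apart, with the window capped at 5 seconds). The class and function names below are hypothetical, and the sketch leaves out details such as excluding shifts that follow recent user input.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class LayoutShift:
    time_ms: float            # when the shift happened
    impact_fraction: float    # share of the viewport affected by the shift
    distance_fraction: float  # how far content moved, relative to the viewport

    @property
    def score(self) -> float:
        return self.impact_fraction * self.distance_fraction


def cumulative_layout_shift(
    shifts: Iterable[LayoutShift],
    gap_ms: float = 1000.0,
    max_window_ms: float = 5000.0,
) -> float:
    """Return the largest session-window sum of layout shift scores."""
    best = 0.0
    window_sum = 0.0
    window_start = None
    previous = None
    for shift in sorted(shifts, key=lambda s: s.time_ms):
        starts_new_window = (
            previous is None
            or shift.time_ms - previous >= gap_ms
            or shift.time_ms - window_start >= max_window_ms
        )
        if starts_new_window:
            window_start = shift.time_ms
            window_sum = 0.0
        window_sum += shift.score
        previous = shift.time_ms
        best = max(best, window_sum)
    return best


# Two shifts close together (one session window), then a small isolated one.
example = [
    LayoutShift(time_ms=0, impact_fraction=0.5, distance_fraction=0.2),
    LayoutShift(time_ms=500, impact_fraction=0.4, distance_fraction=0.25),
    LayoutShift(time_ms=3000, impact_fraction=0.2, distance_fraction=0.1),
]
print(round(cumulative_layout_shift(example), 3))
```

Reserving space for images and embeds before they load keeps both fractions, and therefore the score, low.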

Cyber-Insurance Firms Limit Payouts, Risk Obsolescence

While the insurers' position is understandable, businesses — which have

already seen their premiums skyrocket over the past three years — should

question whether insurance still mitigates risk effectively, says Pankaj

Goyal, senior vice president of data science and cyber insurance at Safe

Security, a cyber-risk analysis firm. "Insurance works on trust, [so answer

the question,] 'will an insurance policy keep me whole when a bad event

happens?' " he says. "Today, the answer might be 'I don't know.' When

customers lose trust, everyone loses, including the insurance companies." ...

Indeed, the exclusion will likely result in fewer companies relying on cyber

insurance as a way to mitigate catastrophic risk. Instead, companies need to

make sure that their cybersecurity controls and measures can mitigate the cost

of any catastrophic attack, says David Lindner, chief information security

officer at Contrast Security, an application security firm. Creating data

redundancies, such as backups, expanding visibility of network events, using a

trusted forensics firm, and training all employees in cybersecurity can all

help harden a business against cyberattacks and reduce damages.

Data security hinges on clear policies and automated enforcement

The key is to establish policy guardrails for internal use to minimize cyber

risk and maximize the value of the data. Once policies are established, the

next consideration is establishing continuous oversight. This component is

difficult if the aim is to build human oversight teams, because combining

people, processes, and technology is cumbersome, expensive, and not 100%

reliable. Training people to manually combat all these issues is not only hard

but requires a significant investment over time. As a result, organizations

are looking to technology to provide long-term, scalable, and automated

policies to govern data access and adhere to compliance and regulatory

requirements. They are also leveraging these modern software approaches to

ensure privacy without forcing analysts or data scientists to “take a number”

and wait for IT when they need access to data for a specific project or even

everyday business use. With a focus on establishing policies and deciding who

gets to see/access what data and how it is used, organizations gain visibility

into and control over appropriate data access without the risk of

overexposure.
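
To illustrate what automated policy enforcement can look like in practice, here is a minimal sketch of policies expressed as data and checked in code on every access request. It is not taken from the article or from any particular product; the `Policy` fields, the `evaluate` and `apply_masking` helpers, and the sample dataset, role, and purpose names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Iterable, Tuple


@dataclass(frozen=True)
class Policy:
    dataset: str
    allowed_roles: FrozenSet[str]
    allowed_purposes: FrozenSet[str]
    masked_columns: FrozenSet[str] = frozenset()


def evaluate(
    policies: Iterable[Policy], dataset: str, role: str, purpose: str
) -> Tuple[bool, FrozenSet[str]]:
    """Return (allowed, columns to mask) for the first matching policy."""
    for policy in policies:
        if (
            policy.dataset == dataset
            and role in policy.allowed_roles
            and purpose in policy.allowed_purposes
        ):
            return True, policy.masked_columns
    # Deny by default when no policy grants access.
    return False, frozenset()


def apply_masking(row: Dict[str, object], masked: FrozenSet[str]) -> Dict[str, object]:
    """Replace the values of masked columns so analysts never see them."""
    return {key: ("***" if key in masked else value) for key, value in row.items()}


# Hypothetical policy: analysts may use customer data for churn modelling,
# but the email column is masked.
POLICIES = [
    Policy(
        dataset="customers",
        allowed_roles=frozenset({"analyst"}),
        allowed_purposes=frozenset({"churn-model"}),
        masked_columns=frozenset({"email"}),
    )
]

allowed, masked = evaluate(POLICIES, "customers", "analyst", "churn-model")
if allowed:
    print(apply_masking({"id": 1, "email": "a@example.com", "plan": "pro"}, masked))
```

Because the decision is made in code at request time, an analyst gets an immediate answer instead of waiting for a manual review, and every decision can be logged for oversight.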

Quote for the day:

"Leadership is a journey, not a

destination. It is a marathon, not a sprint. It is a process, not an

outcome." -- John Donahoe
