Automation Is Becoming A C-Suite Priority

While automation maturity is at its highest in the US, with over 60% of organizations making extensive use of automation, there are some interesting findings from India. The country shows the highest level of enthusiasm about automation among CIOs and other senior executives. There, 84% believe RPA is a high or essential priority for meeting strategic business objectives, against a global average of 76%. Also, 90% of C-level executives expect automation to improve their company’s financial results, namely profitability, operating costs and revenue growth. Sector-wise, IT and manufacturing have outpaced other industries in automating business processes. By contrast, government and public sector institutions have made the least headway among surveyed sectors. Of CIOs who have implemented automation, most have automated highly repetitive back-office functions. Automation of functions is most extensive in IT, operations and production, customer service and finance.

FTC data privacy enforcement will threaten corporate bottom lines

Despite mounting concerns over data security and privacy practices that put consumers’ data at risk, the U.S. Congress has yet to adopt national legislation to address cybersecurity, and security spending will see only a nominal increase given the current administration’s recent budget proposal. Consequently, organizations are subject to a patchwork of laws and regulations relevant to cybersecurity and privacy practices, including differing laws and regulations in each state and the District of Columbia, as well as from multiple federal administrative agencies. Therefore, the FTC has taken a comprehensive directive to extend its supervision over all companies operating in the United States. In fact, the FTC has assumed a leading role in policing corporate cybersecurity practices since 2002. Since that time, it has brought more than 200 cases against companies for unfair or deceptive practices that endangered the personal data of consumers.

Huge jump in cyber incidents reported by finance sector

Overall, Snaith said there remain serious vulnerabilities across some financial services businesses when it comes to the effectiveness of their cyber controls. “More needs to be done to embed a cyber resilient culture and ensure effective incident reporting processes are in place,” he said. UK law enforcement is also calling for improvements in cyber crime reporting. “It is crucial that businesses report cyber crime to us because every incident is an investigative opportunity,” Rob Jones, director of threat leadership at the UK National Crime Agency (NCA), told Computer Weekly. “Failure to report creates an unpoliced space and a situation where incident response companies just sweep up the glass, but don’t deal with the underlying issue, which emboldens criminals,” he said. “As a result, the problem will continue and prevalence, severity and sophistication of attacks will increase.” Nigel Hawthorn, data privacy expert at security firm McAfee, said it is widely recognised that cyber incidents were previously under-reported.

How does the CVE scoring system work?

The first thing to understand is that there are three types of metrics used in this system. Base Score Metrics depend on sub-formulas for Impact Sub-Score (ISS), Impact, and Exploitability. Temporal Score Metrics are equal to a roundup of BaseScore × ExploitCodeMaturity × RemediationLevel × ReportConfidence. Environmental Score Metrics depend on sub-formulas for Modified Impact Sub-Score (MISS), ModifiedImpact, and ModifiedExploitability. The formula for MISS is Minimum(1 - [(1 - ConfidentialityRequirement × ModifiedConfidentiality) × (1 - IntegrityRequirement × ModifiedIntegrity) × (1 - AvailabilityRequirement × ModifiedAvailability)], 0.915). Within each set of metrics are the following sub-categories: Base Score Metrics: Attack Vector, Attack Complexity, Privileges Required, User Interaction, Scope, Confidentiality Impact, Integrity Impact, Availability Impact; Temporal Score Metrics: Exploit Code Maturity, Remediation Level, Report Confidence; and Environmental Score Metrics: Attack Vector, Attack Complexity, Privileges Required, User Interaction, Scope, Confidentiality Impact, Integrity Impact, Availability Impact, ...
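
As a rough illustration, the two formulas quoted above can be sketched in Python. This is a minimal sketch, not a full scoring calculator: the function names are made up for this example, the `roundup` helper follows the Roundup definition in the CVSS v3.1 specification (an assumption, since the excerpt does not say which version it describes), and the numeric weights in the usage lines are the v3.x values for Functional exploit code (0.97), Official Fix (0.95), Confirmed report (1.0), High impact (0.56) and a High/Medium requirement (1.5/1.0).

```python
import math


def roundup(value: float) -> float:
    """Round up to one decimal place (CVSS v3.1 Roundup definition)."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def temporal_score(base_score: float, exploit_code_maturity: float,
                   remediation_level: float, report_confidence: float) -> float:
    """Roundup(BaseScore x ExploitCodeMaturity x RemediationLevel x ReportConfidence)."""
    return roundup(base_score * exploit_code_maturity
                   * remediation_level * report_confidence)


def modified_impact_sub_score(cr: float, mc: float, ir: float, mi: float,
                              ar: float, ma: float) -> float:
    """MISS: the combined, requirement-weighted impact, capped at 0.915."""
    combined = 1 - (1 - cr * mc) * (1 - ir * mi) * (1 - ar * ma)
    return min(combined, 0.915)


# Base score 7.5 with Functional exploit code, an Official Fix, Confirmed report.
print(temporal_score(7.5, 0.97, 0.95, 1.0))  # 7.0

# High impact on all three properties, with a High confidentiality requirement:
# the raw value exceeds the cap, so the result is 0.915.
print(modified_impact_sub_score(1.5, 0.56, 1.0, 0.56, 1.0, 0.56))  # 0.915
```

Note that the temporal score can only stay equal to or fall below the base score, because each temporal weight is at most 1.0.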

AI is changing the entire nature of compute

"Hardware capabilities and software tools both motivate and limit the type of ideas that AI researchers will imagine and will allow themselves to pursue," said LeCun. "The tools at our disposal fashion our thoughts more than we care to admit." It's not hard to see how that's already been the case. The rise of deep learning, starting in 2006, came about not only because of tons of data, and new techniques in machine learning, such as "dropout," but also because of greater and greater compute power. In particular, the increasing use of graphics processing units, or "GPUs," from Nvidia, led to greater parallelization of compute. That made possible training of vastly larger networks than in past. The premise offered in the 1980s of "parallel distributed processing," where nodes of an artificial network are trained simultaneously, finally became a reality. Machine learning is now poised to take over the majority of the world's computing activity, some believe. During that ISSCC in February, LeCun spoke to ZDNet about the shifting landscape of computing.

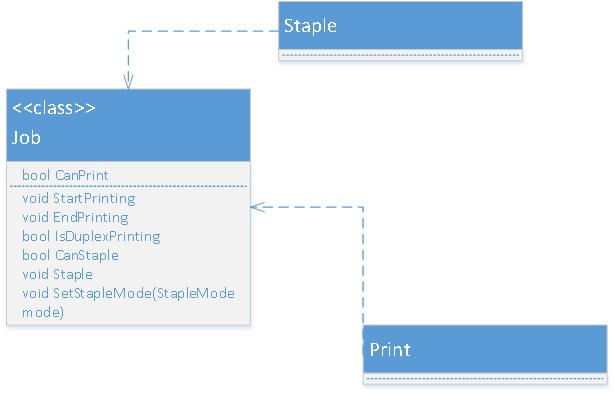

SOLID Principles: Interface Segregation Principle (ISP)

A great, simple definition of the Interface Segregation Principle is given in the book you have already heard of, “Agile Principles, Patterns, and Practices in C#”: “The Interface Segregation Principle states that Clients should not be forced to depend on methods they do not use.” ... Here is an interesting historical note about the ISP. I’m pretty sure the idea was in use long before Robert C. Martin, but the first public formulation belongs to him. He first applied the ISP while consulting for Xerox. Xerox had created a new printer system that could perform a variety of tasks such as stapling and faxing. The software for this system was created from the ground up. As the software grew, making modifications became more and more difficult, so that even the smallest change would take a redeployment cycle of an hour, which made development nearly impossible. The redeployment cycle took so long because C# and Java, which compile very fast, did not exist at that time. The same cannot be said of C++, for example: bad design of a C++ program can lead to significant compilation time.
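
To make the principle concrete, here is a minimal Python sketch loosely modelled on the printer scenario above. All class and function names are hypothetical and chosen for illustration only; this is not the Xerox design or code from the book. The point is that each capability gets its own small interface, so a client that only prints depends on `Printer` alone and is unaffected by changes to stapling or faxing.

```python
from abc import ABC, abstractmethod


class Printer(ABC):
    @abstractmethod
    def print_document(self, document: str) -> str: ...


class Stapler(ABC):
    @abstractmethod
    def staple(self, document: str) -> str: ...


class Fax(ABC):
    @abstractmethod
    def fax(self, document: str, number: str) -> str: ...


class DeskPrinter(Printer):
    """A simple device: it implements only the interface it can honour."""

    def print_document(self, document: str) -> str:
        return f"printed: {document}"


class MultiFunctionDevice(Printer, Stapler, Fax):
    """A richer device composes several small interfaces."""

    def print_document(self, document: str) -> str:
        return f"printed: {document}"

    def staple(self, document: str) -> str:
        return f"stapled: {document}"

    def fax(self, document: str, number: str) -> str:
        return f"faxed {document} to {number}"


def run_print_job(printer: Printer, document: str) -> str:
    """Client code that needs printing depends on Printer and nothing else."""
    return printer.print_document(document)


print(run_print_job(DeskPrinter(), "report"))          # printed: report
print(run_print_job(MultiFunctionDevice(), "report"))  # printed: report
```

Had the three capabilities been declared in a single fat interface, `DeskPrinter` would have been forced to provide stub `staple` and `fax` methods it cannot meaningfully implement.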

Mist Wi-Fi no longer just cloud

The Mist Edge hardware appliance avoids having access points (APs) in each office on a campus communicate directly with Mist's WxLAN technology in the cloud. Instead, WxLAN policies created through Mist's cloud-based dashboard are stored in the on-premises appliance. WxLAN policies assign resources, such as servers and printers, to groups of users. Network managers can also create a service set identifier for a select group of users and assign services or devices that only they can access. Mist Edge is available only as a stand-alone appliance. Mist plans to ship the appliance's software on a virtual machine this year. Mist Edge reflects the preference of some enterprises to split management technology between the cloud and on premises. Companies more comfortable with an on-site WLAN controller, for example, could switch to Mist Edge, said Brandon Butler, an analyst at IDC. "Overall, we see more and more enterprises gaining comfort with managing their WLAN environments from the cloud but giving customers a choice in how to manage their environments is always good," he said.

Beyond Limits: Rethinking the next generation of AI

Beyond Limits evolved out of work with NASA's Jet Propulsion Laboratory (JPL) for remote rovers used to explore places like the moon and Mars. Due to the communications lag in space, real-time control is virtually impossible. Any AI solution must not only be fully autonomous but also be able to train and, ideally, correct itself. When there is a problem it can’t correct, the bandwidth limitations for communication make full reprogramming problematic…but point patches are certainly possible. This resulted in an AI platform uniquely able to be updated, modified and, to a certain and initially limited extent, able to both teach itself and make corrections while disconnected. This unusual requirement has likely made the resulting AI nearly ideal for areas where the AI must often act independently of oversight, and/or where problems can escalate very rapidly, and where it must be able to deal with a diversity of known and unknown issues. ... Although still in its infancy, Beyond Limits represents a new class of AI. It’s better enabled to operate fully autonomously, it can both learn on the fly and increasingly make corrections to its own programming

HPE promises 100% reliability with its new storage system

Primera was announced last week at HPE’s Discover event in Las Vegas. Phil Davis, chief sales officer for HPE, said in the announcement keynote, “If you think about traditional storage, it’s full of compromises and complexity. Do I want fast or reliable? Do I want agility or simplicity? But not any more. We’re going to combine the simplicity of Nimble with the intelligence of InfoSight and mission-critical heritage of 3PAR and we’ve created a new class of storage that eliminates the traditional compromises and truly redefines what is possible with storage.” Davis said Primera will run out of the box with just a few cable connections and can be autoprovisioning storage within 20 minutes. That means no need for IT consultants to install and configure the hardware. The more workloads you add to a storage system, the more unpredictable latency becomes. Using InfoSight’s parallelism, Primera improved throughput and latency of an Oracle database by 122% over the prior storage system, which HPE did not identify.

Using AI-powered intelligent automation for digital transformation success

A maturity model assessment begins with evaluating automation readiness from a technology and process perspective. IT should be involved in the discussion early on because they understand how automation technologies will fit within the larger IT framework. They’re also responsible for managing the environments that these technologies operate in and for ensuring proper security protocols are followed throughout the deployment process. From a business process and operations standpoint, organizations should assess how well-documented current processes are during this stage. If there’s room to improve prior to automation, this presents an opportunity to make upfront investments in this respect. Automation is most powerful when deployed against processes that are already running properly; it isn’t intended to ‘fix’ or alleviate the pain points around broken processes. In other words, optimize first and then automate for the best results.

Quote for the day:

"One of the sad truths about leadership is that, the higher up the ladder you travel, the less you know." -- Margaret Heffernan
