Understanding Blockchain Fundamentals, Part 3: Delegated Proof of Stake

The gist is that PoW provides the most proven security to date, but at the cost of consuming an enormous amount of energy. PoS, the primary alternative, removes the energy requirements of PoW, and replaces miners with “validators”, who are given the chance to validate (“mine”) the next block with a probability proportional to their stake. Another consensus algorithm that is often discussed is Delegated Proof of Stake (DPoS) — a variant of PoS that provides a high level of scalability at the cost of limiting the number of validators on the network. ... DPoS is a system in which a fixed number of elected entities (called block producers or witnesses) are selected to create blocks in a round-robin order. Block producers are voted into power by the users of the network, who each get a number of votes proportional to the number of tokens they own on the network (their stake). Alternatively, voters can choose to delegate their stake to another voter, who will vote in the block producer election on their behalf.
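
To make the election and scheduling described above concrete, here is a minimal sketch in Python. It is not taken from any particular DPoS chain: the function names (`tally_votes`, `elect_producers`, `producer_for_slot`), the sample stakes, and the single-level delegation rule (a delegate's own vote carries the delegated stake, with no delegation chains) are all assumptions made for illustration.

```python
from collections import defaultdict

def tally_votes(stakes, votes, delegations):
    """Return the total stake backing each candidate.

    stakes:      token holder -> number of tokens held
    votes:       token holder -> candidate they vote for
    delegations: token holder -> holder who votes on their behalf
                 (assumed single-level: no chains of delegation)
    """
    # Each holder's stake counts toward whoever casts the vote:
    # the holder themselves, or their chosen delegate.
    power = defaultdict(int)
    for holder, stake in stakes.items():
        voter = delegations.get(holder, holder)
        power[voter] += stake

    # Votes are weighted by the voting power accumulated above.
    totals = defaultdict(int)
    for voter, weight in power.items():
        candidate = votes.get(voter)
        if candidate is not None:
            totals[candidate] += weight
    return dict(totals)

def elect_producers(totals, num_producers):
    """Pick the fixed-size producer set: the top candidates by backing stake."""
    ranked = sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:num_producers]]

def producer_for_slot(producers, slot):
    """Round-robin schedule: slot n belongs to producer n mod set size."""
    return producers[slot % len(producers)]

# Hypothetical sample data.
stakes = {"alice": 50, "bob": 30, "carol": 15, "dave": 5}
votes = {"alice": "P1", "bob": "P2", "carol": "P3"}
delegations = {"dave": "carol"}  # dave lets carol vote with his 5 tokens

totals = tally_votes(stakes, votes, delegations)
producers = elect_producers(totals, num_producers=2)
schedule = [producer_for_slot(producers, slot) for slot in range(4)]
print(totals)     # {'P1': 50, 'P2': 30, 'P3': 20}
print(producers)  # ['P1', 'P2']
print(schedule)   # ['P1', 'P2', 'P1', 'P2']
```

Capping `num_producers` at a small number is the trade-off the excerpt mentions: fewer block producers make scheduling simple and fast, but concentrate validation in fewer hands.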

Cryptocurrency Theft Drives 3x Increase in Money Laundering

"We're now seeing, in the last probably eight to 12 months, a real influx of new criminals that are highly technically sophisticated," he explains. There's a major difference between seasoned threat actors and those who have been dabbling in cybercrime for less than 12 months: operational security. It isn't a question of technical prowess so much as lack of experience, Jevans continues. Cybercrime's newest threat actors can craft advanced malware designed to target cryptocurrency addresses and inject similar addresses, under their control, to receive funds. Their malware is designed to target digital funds in a way traditional malware isn't, created by people who grew up learning about virtual currencies and can exploit them in new ways. The problems start when they secure the money. ... "It's clear these people really understand cryptocurrency and crypto assets really, really well," he explains. "What they don't understand is old-school operational security … they're just not sophisticated that way. Legacy folks, they definitely have better operational security. They're better at how they interface with it, how they distribute malicious code, how they manage user handles on different forums."

Dell New XPS 13 vs. HP Spectre x360 13t: Which laptop is better

With completely refreshed models at hand, we're putting these two dream machines through an old-fashioned smackdown. We're comparing them on everything from design and features to price and performance, declaring a winner in each category. Keep reading to see who comes out ahead. ... Both laptops are extremely portable for what they offer in capability and performance. In pure weight contests, our scale put the New XPS 13 at 2 pounds, 10.5 ounces, and the Spectre x360 13t at 2 pounds, 11.7 ounces. Unless you’re looking for a true featherweight-class device that's closer to two pounds, it’s going to be hard to beat these two. Where it might matter to some is how large the actual body is, which can affect the size of your laptop bag or your comfort on a cramped airplane. While we think this is a pretty close battle, the nod obviously goes to the New XPS 13, which is just incredibly small despite having a 13.3-inch screen. ... It’s interesting that both the XPS 13 and Spectre x360 13t are the last refuge of “good keyboards.” There's no marketing to make you believe that less key travel is better.

4 reasons why CISOs must think like developers

Developers are constantly looking for ways to extend services and share data using APIs and microservices. Microservices help weave a digital fabric through a set of loosely coupled services stitched together as a platform. Platform-centric architectures provide for extensibility with the ability to plug-and-play new tools and services using APIs with open data formats like JSON. CISOs similarly must start thinking of ways to break down data silos and integrate the data from various tools and sub-systems. The list of “sensors” generating security data is endless and keeps growing every day. Anti-virus scan reports, firewall logs, vulnerability scan data, server access logs, authentication logs and threat profiles are just some of the sources of critical security information. All this data only makes sense when integrated into one single view and analyzed using AI models. The volume, velocity and variety of data make it impossible for human beings to analyze and react. AI-driven models help discern anomalous behavior from regular patterns and are the only scalable approach for detecting threats in near real-time. Security operations, automation, analytics and incident response as an integrated platform is the way to go.

Network professionals should think SD-Branch, not just SD-WAN

Doyle defines the SD-Branch as having SD-WAN, routing, network security, and LAN/Wi-Fi functions all in one platform with integrated, centralized management. An SD-Branch can be thought of as the next step after SD-WAN, as the latter transforms the transport and the former focuses on things in the branch, such as optimizing user experience and improving security. ... Most SD-WAN solutions focus on WAN transport, but apps continue on inside the branch. Aruba’s SD-Branch provides fine-grained contextual awareness and QoS across the WAN, but also inside the branch, and can be extended to mobile users. This is an important step in breaking down the management silos between remote, in-office, and WAN networks. Network engineers should think of the end-to-end network instead of discrete places. Apps don’t care about network boundaries, and it’s time for network operations to think that way, as well. From an operations perspective, Aruba’s SD-Branch would enable IT organizations to manage more branches with fewer people. The automated capabilities and ZTP take care of many of the tasks that were historically done manually.

Open source isn’t the community you think it is

The interesting thing is just how strongly the central “rules” of open source engagement have persisted, even as open source has become standard operating procedure for a huge swath of software development, whether done by vendors or enterprises building software to suit their internal needs. While it may seem that such an open source contribution model that depends on just a few core contributors for so much of the code wouldn’t be sustainable, the opposite is true. Each vendor can take particular interest in just a few projects, committing code to those, while “free riding” on other projects for which it derives less strategic value. In this way, open source persists, even if it’s not nearly as “open” as proponents sometimes suggest. Is open source then any different from a proprietary product? After all, both can be categorized by contributions by very few, or even just one, vendor. Yes, open source is different. Indeed, the difference is profound. In a proprietary product, all the engagement is dictated by one vendor.

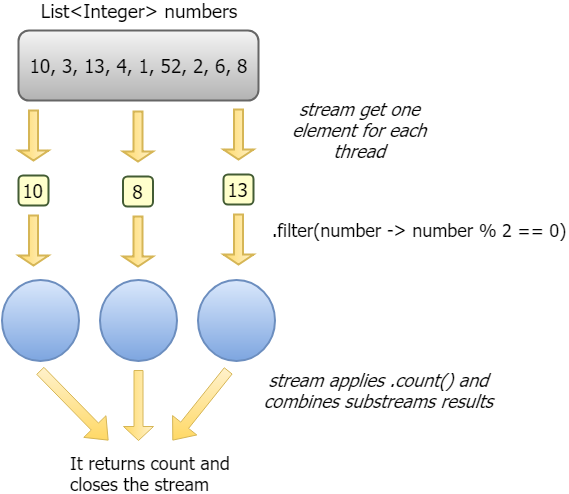

A stream is a sequence of elements. An array is a data structure that stores a sequence of values. So, is a stream an array? Well, not really. Let's look at what a stream really is and see how it works. First of all, streams don't store elements; arrays do. So, no, a stream is not an array. Also, while collections and arrays have a finite size, streams don't. But if a stream doesn't store elements, how can it be a sequence of elements? Streams are actually a sequence of data being moved from one point to another, but they're computed on demand. So, they have at least one source, like arrays, lists, I/O resources, and so on. Let's take a file as an example: when a file is opened for editing, all or part of it remains in memory, thus allowing for changes, so only when it is closed is there a guarantee that no data will be lost or damaged. Fortunately, a stream can read/write data chunk by chunk, without buffering the whole file at once. Just so you know, a buffer is a region of physical memory (usually RAM) used to temporarily store data while it is being moved from one place to another.
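
The excerpt does not name a language, so the following is only a rough Python sketch of the two ideas above: a stream is computed on demand and need not be finite, and a stream can move data chunk by chunk through a small buffer. The helper name `read_chunks`, the 4-byte chunk size, and the in-memory `io.BytesIO` source standing in for a file are assumptions chosen to keep the example self-contained.

```python
import io
import itertools
from typing import BinaryIO, Iterator

def read_chunks(source: BinaryIO, chunk_size: int = 4) -> Iterator[bytes]:
    """Yield the source's data one chunk at a time.

    Only `chunk_size` bytes are held in the buffer at once; the next
    chunk is read only when the consumer asks for it.
    """
    while True:
        chunk = source.read(chunk_size)
        if not chunk:  # an empty read means the source is exhausted
            break
        yield chunk

# An in-memory byte source standing in for a file on disk.
source = io.BytesIO(b"hello stream")
chunks = list(read_chunks(source))
print(chunks)  # [b'hell', b'o st', b'ream']

# A stream has no fixed size: this counter is unbounded, and values
# are produced only as they are requested.
naturals = itertools.count(1)
first_three = list(itertools.islice(naturals, 3))
print(first_three)  # [1, 2, 3]
```

Unlike an array, neither `read_chunks(...)` nor `naturals` holds its elements; each one only knows how to produce the next value from its source.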

The marketplace consists of suppliers and consumers that either rent out or purchase computing power to perform their tasks. Consumers who connect to the virtual space can either select a rental time or buy available power for their projects, and then calculate the cost accordingly. When the power resource is theirs, consumers can then take advantage of SONM’s capabilities to render videos, host apps and websites, make scientific calculations, manage data storage, or work with machine learning. Suppliers — the computing power owners — earn SNM tokens when they sell computer resources to consumers. SONM is completely decentralized, which means the platform is transparent and free from ownership, and the company claims it is less expensive than centralized competitors. “Blockchain enables the creation of a genuinely open decentralized system without a single control center,” Antonio said. “Additionally, using blockchain to manage settlements on-platform with the help of the SNM cryptocurrency allows the interests of participants to be protected.”

Economic viability is important, says Sharma, because of the public policy imperative to find cost-effective solutions to the problems facing urban areas. “In general, cities are stretched in terms of their budgets,” he says. “They are thinking about how to efficiently utilize all of the assets they have. For example, better traffic management can be an economic alternative to building a new highway. The ultimate goal is not necessarily to build roads, it’s to improve mobility, and do a better job of getting people from point A to point B.” Sharma says that social media and awareness of new technology are increasing the motivation of urban planners and politicians to implement smarter solutions to problems such as traffic congestion, parking shortages, security, and first-responder response times. “Citizens are demanding more from their leaders,” he says. “I think this will motivate policymakers, and result in the right decisions when it comes to using digital technology.” A recently released report from Juniper Research, sponsored by Intel, looks at the evolution of smart cities in the context of mobility, healthcare, public safety and productivity.

Facial Recognition: Big Trouble With Big Data Biometrics

Amazon Web Services, for example, in 2016 began to offer biometric capabilities via Amazon Rekognition, and it's ready to highlight positive use cases. "We have seen customers use the image and video analysis capabilities of Amazon Rekognition in ways that materially benefit both society (e.g. preventing human trafficking, inhibiting child exploitation, reuniting missing children with their families, and building educational apps for children), and organizations (enhancing security through multi-factor authentication, finding images more easily, or preventing package theft)," Matt Wood, general manager for deep learning and artificial intelligence at Amazon Web Services, said in a blog post last month. ... As data breach expert Troy Hunt has written as well as extensively documented: "Sooner or later, big repositories of data will be abused. Period." Hunt was specifically writing about India's Aadhaar implementation, which is the world's largest biometric system, storing about 1.2 billion individuals' details, and which has not been a security success story.

Quote for the day:

"The essence of leadership is the willingness to make the tough decisions. Prepared to be lonely." -- Colin Powell
