Red Hat announced the general availability of .NET Core 2.1 for its Red Hat Enterprise Linux and OpenShift container platforms. While .NET Core is a modular, open source, cross-platform (Windows, Linux and macOS) .NET implementation for creating console, Web and other apps, the Red Hat version focuses on microservice and container projects. Its .NET Core 2.1 efforts primarily target Red Hat Enterprise Linux (RHEL) and OpenShift, the company's Kubernetes container application platform. ... "With .NET Core you have the flexibility of building and deploying applications on Red Hat Enterprise Linux or in containers," the company said in a blog post last week. "Your container-based applications and microservices can easily be deployed to your choice of public or private clouds using Red Hat OpenShift. All of the features of OpenShift and Kubernetes for cloud deployments are available to you." Red Hat said developers can use .NET Core 2.1 to develop and deploy applications

Why banks like Barclays are testing quantum computing

“Quantum computing will become increasingly important over time,” he said. “In 20 years, quantum computing will not be just an option. It may be our only option, from an energy perspective, let alone from a computational standpoint.” Quantum computing got its start in 1981, but it still feels like science fiction. Quantum chips have to be kept at temperatures near absolute zero in an isolated environment. They promise performance gains of a billion times and more, through the processors’ ability to exist in multiple states simultaneously, and therefore to perform tasks using all possible permutations in parallel. Currently, the chief use case that banks and other financial firms see for it is investment. “Banks and financial institutions like hedge funds now appear to be mostly interested in quantum computing to help minimize risk and maximize gains from dynamic portfolios of instruments,” said Dr. Bob Sutor, vice president, IBM Q Strategy and Ecosystem. “The most advanced organizations are looking at how early development of proprietary mixed classical-quantum algorithms will provide competitive advantage.”
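The idea of processors that "exist in multiple states simultaneously" can be made concrete with a tiny statevector simulation: applying a Hadamard gate to each of n qubits that start in |00...0> produces an equal superposition over all 2^n basis states. This is a minimal pure-Python sketch for illustration only; the function names are hypothetical, and it is not how IBM Q or any real quantum hardware is programmed.

```python
import math

def apply_hadamard(state, target):
    """Apply a Hadamard gate to qubit `target` of a real-valued statevector."""
    h = 1 / math.sqrt(2)
    new = state[:]
    bit = 1 << target
    for i in range(len(state)):
        if i & bit == 0:  # visit each (|..0..>, |..1..>) amplitude pair once
            a, b = state[i], state[i | bit]
            new[i] = h * (a + b)
            new[i | bit] = h * (a - b)
    return new

def hadamard_all(n_qubits):
    """Start in |00...0> and apply a Hadamard to every qubit."""
    state = [0.0] * (2 ** n_qubits)  # one amplitude per basis state
    state[0] = 1.0
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state
```

For example, `hadamard_all(3)` returns eight equal amplitudes of about 0.354, whose squares sum to 1. The list doubles in length with every added qubit, which is one reason large quantum systems are hard to simulate classically. A measurement, however, returns only one basis state, so turning superposition into a speedup takes purpose-built algorithms rather than simply trying every permutation at once.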

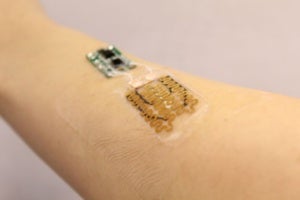

Taking the temperature of IoT for healthcare

Developed by researchers at Tufts University using flexible electronics, these smart bandages not only monitor the condition of chronic skin wounds but also use a microprocessor to analyze that information and electronically deliver the right drugs to promote healing. By tracking the temperature and pH of chronic skin wounds, the 3mm-thick smart bandages are designed to deliver tailored treatments (typically antibiotics) to help ward off persistent infections and even amputations, which too often result from non-healing wounds associated with burns, diabetes, and other medical conditions. Sameer Sonkusale, Ph.D., professor of electrical and computer engineering at Tufts University’s School of Engineering and a co-author of the paper “Smart Bandages for Monitoring and Treatment of Chronic Wounds,” said in a statement: “Bandages have changed little since the beginnings of medicine. We are simply applying modern technology to an ancient art in the hopes of improving outcomes for an intractable problem.” It’s unclear whether Tufts’ smart bandages will be internet connected, but the potential benefits of an IoT connection here seem obvious.
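The monitor, analyze, and deliver cycle described above can be sketched as a simple threshold controller. Everything here is an assumption for illustration: the thresholds, the notion of "dose units", and the cumulative safety cap are hypothetical values, not parameters from the Tufts paper, and a real device would need clinically validated logic.

```python
from dataclasses import dataclass

# Hypothetical alert thresholds, for illustration only (not from the Tufts work).
TEMP_THRESHOLD_C = 37.5
PH_THRESHOLD = 7.5

@dataclass
class Reading:
    temperature_c: float
    ph: float

def decide_dose(reading):
    """Return one (hypothetical) dose unit per sensor reading above its threshold."""
    dose = 0
    if reading.temperature_c > TEMP_THRESHOLD_C:
        dose += 1
    if reading.ph > PH_THRESHOLD:
        dose += 1
    return dose

def control_loop(readings, max_total_units=5):
    """Run the monitor -> analyze -> deliver cycle, never exceeding a cumulative cap."""
    delivered = []
    total = 0
    for r in readings:
        dose = min(decide_dose(r), max_total_units - total)  # enforce the safety cap
        total += dose
        delivered.append(dose)
    return delivered
```

With readings `Reading(36.8, 7.0)`, `Reading(38.1, 7.9)`, `Reading(38.0, 8.0)`, and `Reading(38.2, 8.1)`, the loop delivers 0, 2, 2, and then only 1 unit, because the cumulative cap of 5 is reached.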

Google Announces Firestore Security Rules Simulator

Developers can write rule tests for operations including document reads, writes, and deletes, all of which can be tested against an organization’s actual Firestore database. There is also the option to simulate a particular user being signed in, which is useful for testing permissions assigned to different user accounts. In addition to releasing the Firestore Security Rules Simulator, Google increased the number of calls that can be made per security rule from three to ten for single-document requests. For batched requests and other multi-document requests, a total of 20 combined calls is allowed across all of the documents included in the request. Google also said it has improved its reference documentation for Firebase Security Rules and the language used to write them. Security cannot be taken lightly, especially given the amount of data stored in the cloud today. While there are many advantages to storing data in cloud or hybrid environments, security has to be one of the top priorities for the developers and administrators who work with that data daily.
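The new call limits can be illustrated with a toy model that counts the document lookups (such as `get()` or `exists()` calls) a rule makes while evaluating a request. This is not the simulator and not Firestore's implementation; Firestore enforces these limits itself. The class and function names are hypothetical, and treating an over-budget request as denied is a modeling assumption.

```python
# Toy model of the per-request call limits described above: 10 for a
# single-document request, 20 combined for multi-document requests.
SINGLE_DOC_LIMIT = 10
MULTI_DOC_LIMIT = 20

class CallBudgetExceeded(Exception):
    """Raised when rule evaluation makes more document-access calls than allowed."""

class RuleEvaluation:
    def __init__(self, num_documents):
        # One document gets the single-document limit; batched and other
        # multi-document requests share one combined limit.
        self.limit = SINGLE_DOC_LIMIT if num_documents == 1 else MULTI_DOC_LIMIT
        self.calls = 0

    def access_call(self):
        """Count one lookup made by a rule on another document."""
        self.calls += 1
        if self.calls > self.limit:
            raise CallBudgetExceeded(f"{self.calls} calls exceeds limit of {self.limit}")

def request_allowed(calls_per_document):
    """Simulate a request whose rules make calls_per_document[i] lookups for document i.

    Returns False (modeled as a denied request) if the call budget is exceeded.
    """
    evaluation = RuleEvaluation(len(calls_per_document))
    try:
        for n in calls_per_document:
            for _ in range(n):
                evaluation.access_call()
    except CallBudgetExceeded:
        return False
    return True
```

Under this model a single-document request whose rule makes ten lookups passes, while a three-document batch whose rules make seven lookups each (21 in total) fails, even though no single document exceeds ten.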

No blank checks: The value of cloud cost governance

Although you can make a case for the cloud’s value around agility and compressing time to market, that case will fall on deaf ears among your business leaders if you’re 20 to 30 percent over budget on ongoing cloud costs. There’s no reason not to know your ongoing cloud costs. In the planning phase, it’s just a matter of doing simple math to figure out the likely costs month to month. In the operational phase, it’s about putting cost monitoring and cost controls in place. This is called cloud cost governance. Cloud cost governance uses a tool to monitor usage and produce cost reports showing who used which cloud resources, when, and how. Having this information also means that you can do chargebacks to the departments that incurred the costs, including overruns. But the most important aspect of cloud cost governance is not monitoring but the ability to estimate. Cloud cost governance tools can tell you not just about current use but also about likely future costs, and you can use that information for budgeting.
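The "simple math" behind chargebacks, overrun reports, and estimates can be sketched in a few lines. This is a minimal illustration, not how any particular governance tool works: the hourly rates and resource names are hypothetical, and the forecast is a plain least-squares trend line, one of many possible estimation methods.

```python
from collections import defaultdict

# Hypothetical hourly rates in US dollars; real prices vary by provider, region, and service.
HOURLY_RATES = {"vm.small": 0.05, "vm.large": 0.40, "db.managed": 0.25}

def chargeback(usage):
    """Sum cost per department from (department, resource_type, hours) usage records."""
    costs = defaultdict(float)
    for department, resource_type, hours in usage:
        costs[department] += HOURLY_RATES[resource_type] * hours
    return dict(costs)

def overruns(costs, budgets):
    """Return how far each over-budget department exceeds its monthly budget."""
    return {
        dept: round(cost - budgets.get(dept, 0.0), 2)
        for dept, cost in costs.items()
        if cost > budgets.get(dept, 0.0)
    }

def forecast(monthly_totals, months_ahead=1):
    """Project spend with a least-squares straight line through past monthly totals."""
    n = len(monthly_totals)
    if n < 2:
        raise ValueError("need at least two months of history")
    mean_x = (n - 1) / 2
    mean_y = sum(monthly_totals) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(monthly_totals))
    den = sum((x - mean_x) ** 2 for x in range(n))
    slope = num / den
    intercept = mean_y - slope * mean_x
    return intercept + slope * (n - 1 + months_ahead)
```

For example, with monthly totals of 1,000, 1,100, and 1,200, `forecast` projects 1,300 for the following month, a figure that can feed directly into the next budget.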

The 5 factors driving workers away from the gig economy

The success doesn't come as a huge surprise, as gig economy jobs have many advantages. For one, workers set their own schedules, simply logging onto the app when they feel like working. That means no more requesting time off for doctor's appointments or vacations: you are able to work on your own time. Gig economies provide more opportunity for more people, Forrester researchers Marc Cecere and Matthew Guarini wrote in a January 2018 report. Between students, retirees, underemployed workers, remote employees, and other non-traditional professionals, the gig economy opens its doors to people of all backgrounds, the report said. Companies also benefit from the gig economy through significantly lower costs. Saving money is the number one reason companies form or adopt the contingent workforce concept, according to the Forrester report. Since freelance workers have to provide their own devices, vehicles, and work spaces, companies save big. And because workers are free to opt in or out at any time, companies can eliminate the expense of recruiting firms, the report found.

Blockchain and bluster: Why politicians always get tech wrong

Part of the problem is that few politicians really understand or care about technology. Government technology projects regularly run over time and over budget, and still fail to deliver their design goals -- often because ministers fail to grasp the complexities involved. Twenty years ago such an attitude towards technology on the part of our elected representatives might have been understandable, if unwise. Now it's positively dangerous: technology is one of the biggest drivers of change in society, because it underpins almost everything we do. It's one of the biggest sources of both threats and opportunities. Issues from artificial intelligence to fake news to mass surveillance -- and yes, perhaps even blockchain -- require an informed and engaged political class, able to steer societies around potential risks and make the right decisions about how we should best employ these innovations. For politicians, bashing tech companies to score cheap points and then hoping that immature technology will save them from self-inflicted problems is no longer an option.

Getting to Know Graal, the New Java JIT Compiler

It must be clearly understood that, despite the enormous promise of Graal and GraalVM, the technology is still at an early, experimental stage. It is not yet optimized or productionized for general-purpose use cases, and it will take time to reach parity with HotSpot's C2 compiler. Microbenchmarks are also often misleading: they can point the way in some circumstances, but in the end only user-level benchmarks of entire production applications matter for performance analysis. One way to think about this is that C2 is essentially a local maximum of performance and is at the end of its design lifetime. Graal gives us the opportunity to break out of that local maximum and move to a new, better region, and potentially to rewrite a lot of what we thought we knew about VM design and compilers along the way. It's still immature tech, though, and it is very unlikely to be fully mainstream for several more years. Any performance tests undertaken today should therefore be analysed with real caution.

Mobile devices lost in London underline security risk

Mobile phones represent the greatest risk of identity theft for individuals and of important data loss for businesses, the report said. Laptops are the next most commonly lost device, with a total of 1,155 lost, followed by tablet computers, with 1,082 lost. Barry Scott, CTO for Europe at identity and access management firm Centrify, said that with tens of thousands of electronic devices going missing every year, businesses need to wake up to the fact that fraudsters will attempt to gain access to critical information through lost or stolen devices. “With cyber attacks increasing at an alarming rate, simple password-based security measures are no longer fit for purpose,” he said. Instead, Scott said, businesses need to adopt a zero-trust approach: verifying users and their devices and limiting the volume of data they can access. “Failure to take action acts as an open invitation to cyber criminals and hackers, who see lost devices as an easy way into a corporate enterprise,” he said.
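The three zero-trust checks Scott describes (verify the user, verify the device, limit data access) can be sketched as a per-request authorization function. This is a minimal illustration with hypothetical field names and limits; it is not Centrify's product or any specific vendor's API.

```python
from dataclasses import dataclass, field

@dataclass
class Device:
    device_id: str
    managed: bool                # enrolled in company device management (hypothetical field)
    reported_lost: bool = False  # set once the owner reports the device missing

@dataclass
class User:
    name: str
    mfa_verified: bool                                  # passed a second factor this session
    allowed_datasets: set = field(default_factory=set)  # data the user may touch at all
    max_records: int = 100                              # hypothetical per-request volume cap

def authorize(user, device, dataset, records_requested):
    """Evaluate one request from scratch: user, device, scope, and volume are all re-checked."""
    if not user.mfa_verified:
        return False, "user not verified"
    if not device.managed or device.reported_lost:
        return False, "device not trusted"
    if dataset not in user.allowed_datasets:
        return False, "dataset outside user's scope"
    if records_requested > user.max_records:
        return False, "request exceeds data volume limit"
    return True, "ok"
```

The design point is that a lost phone is cut off as soon as it is flagged, and even a still-trusted device can only pull a bounded slice of data per request.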

Data Quality Evolution with Big Data and Machine Learning

With limited data sets and structured data, data quality issues are relatively clear. The processes creating the data are generally transparent and subject to known errors: data input errors, poorly filled forms, address issues, duplication, etc. The range of possibilities is fairly limited, and the data format for processing is rigidly defined. With machine learning and big data, the mechanics of data cleansing must change. In addition to more and faster data, there is a great increase in uncertainty from unstructured data. Data cleansing must interpret the data and put it into a format suitable for processing without introducing new biases. The quality process, moreover, will differ according to specific use. Data quality is now more relative than absolute. Queries need to be better matched to data sets depending on research objectives and business goals. Data cleansing tools can reduce some of the common errors in the data stream, but the potential for unexpected bias will always exist. At the same time, queries need to be timely and affordable. There has never been a greater need for a careful data quality approach.
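For the "limited data sets and structured data" case, the known error types listed above (input errors, address issues, duplication) can be handled with deterministic rules. The sketch below assumes records with hypothetical `name`, `address`, and `email` fields and uses a single sample address rule; it does nothing about the bias problems the paragraph raises for unstructured data, which is exactly why rule-based cleansing alone no longer suffices.

```python
import re

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # rough shape check, not full RFC validation

def normalize_record(rec):
    """Trim and collapse whitespace, standardize case, and apply one sample address rule."""
    name = " ".join(rec["name"].split()).title()
    address = " ".join(rec["address"].split()).upper()
    address = re.sub(r"\bSTREET\b", "ST", address)  # example standardization: STREET -> ST
    return {"name": name, "address": address, "email": rec["email"].strip().lower()}

def cleanse(records):
    """Normalize records, reject invalid ones, and drop duplicates (first occurrence wins)."""
    seen = set()
    clean, rejected = [], []
    for rec in records:
        r = normalize_record(rec)
        if not r["name"] or not EMAIL_RE.match(r["email"]):
            rejected.append(rec)  # input error: keep the original for review
            continue
        key = (r["name"], r["address"])  # duplicate = same person at same address
        if key in seen:
            continue
        seen.add(key)
        clean.append(r)
    return clean, rejected
```

Given `" ada  lovelace "` at `"12 Main Street"` and `"Ada Lovelace"` at `"12 main st"`, both normalize to `("Ada Lovelace", "12 MAIN ST")`, so only the first is kept.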

Quote for the day:

"Change the changeable. Accept the unchangeable. And remove yourself from the unacceptable." -- Denis Waitley
