Quote for the day:

“When we give ourselves permission to fail, we, at the same time, give ourselves permission to excel.” -- Eloise Ristad

6 tips for consolidating your vendor portfolio without killing operations

Behind every sprawling vendor relationship is a series of small extensions that compound over time, creating complex entanglements. To improve flexibility when reviewing partners, Dovico is wary of vendor entanglements that complicate the ability to retire suppliers. Her aim is to clearly define the service required and the vendor’s capabilities. “You’ve got to be conscious of not muddying how you feel about the performance of one vendor, or your relationship with them. You need to have some competitive tension and align core competencies with your problem space,” she says. Klein prefers to adopt a cross-functional approach with finance and engineering input to identify redundancies and sprawl. Engineers with industry knowledge cross-reference vendor services, while IT checks against industry benchmarks, such as Gartner’s Magic Quadrant, to identify vendors providing similar services or tools. ... Vendor sprawl also lurks in the blind spot of cloud-based services that can be adopted without IT oversight, fueling shadow purchasing habits. “With the proliferation of SaaS and cloud models, departments can now make a few phone calls or sign up online to get applications installed or services procured,” says Klein. This shadow IT ecosystem increases security risks and vendor entanglement, undermining consolidation efforts. This needs to be tackled through changes to IT governance.
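
The cross-referencing step described above, finding vendors that provide similar services, can be approximated in a few lines. This is a minimal sketch rather than a method from the article: the vendor names, the service categories, and the `find_overlaps` helper are all hypothetical, and a real inventory would come from procurement or finance records.

```python
from collections import defaultdict


def find_overlaps(vendor_services):
    """Return {service: sorted vendor names} for services supplied by 2+ vendors."""
    by_service = defaultdict(set)
    for vendor, services in vendor_services.items():
        for service in services:
            by_service[service].add(vendor)
    # Keep only the services where more than one vendor is engaged.
    return {s: sorted(v) for s, v in by_service.items() if len(v) > 1}


# Hypothetical inventory, for illustration only.
inventory = {
    "VendorA": ["endpoint-security", "backup"],
    "VendorB": ["backup", "monitoring"],
    "VendorC": ["monitoring"],
    "VendorD": ["payroll"],
}
overlaps = find_overlaps(inventory)
print(overlaps)
```

Each entry in `overlaps` is a candidate for review, not an automatic consolidation decision; overlapping categories can still hide different capabilities.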

Should I stay or should I go? Rethinking IT support contracts before auto-renewal bites

Contract inertia, which is the tendency to stick with what you know, even when it may no longer be the best option, is a common phenomenon in business technology. There are several reasons for it, such as familiarity with an existing provider, fear of disruption, the administrative effort involved in reviewing and comparing alternatives, and sometimes just a simple lack of awareness that the renewal date is approaching. The problem is that inertia can quietly erode value. As organisations grow, shift priorities or adopt new technologies, the IT support they once chose may no longer be fit for purpose. ... A proactive approach begins with accountability. IT leaders need to know what their current provider delivers and how those services are being used by the company. Are remote software tools performing as expected? Are updates, patches and monitoring processes being applied consistently across all platforms? Are issues being resolved efficiently by our internal IT team, or are inefficiencies building up? Is this the correct set-up and structure for our business, or could we be making better use of existing internal capacity by leveraging better remote management tools? Gathering this information allows organisations to have an honest conversation with their provider (and themselves) about whether the contract still aligns with their objectives.

AI Data Security: Core Concepts, Risks, and Proven Practices

Morgan Stanley Open Sources CALM: The Architecture as Code Solution Transforming Enterprise DevOps

CALM enables software architects to define, validate, and visualize system architectures in a standardized, machine-readable format, bridging the gap between architectural intent and implementation. Built on a JSON Meta Schema, CALM transforms architectural designs into executable specifications that both humans and machines can understand. ... The framework structures architecture into three primary components: nodes, relationships, and metadata. This modular approach allows architects to model everything from high-level system overviews to detailed microservices architectures. ... CALM’s true power emerges in its seamless integration with modern DevOps workflows. The framework treats architectural definitions like any other code asset: version-controlled, testable, and automatable. Teams can validate architectural compliance in their CI/CD pipelines, catching design issues before they reach production. The CALM CLI provides immediate feedback on architectural decisions, enabling real-time validation during development. This shifts compliance left in the development lifecycle, transforming potential deployment roadblocks into preventable design issues. Key benefits for DevOps teams include machine-readable architecture definitions that eliminate manual interpretation errors, version control for architectural changes that provides clear change history, and real-time feedback on compliance violations that prevent downstream issues.
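
To make the idea of a machine-checkable architecture concrete, here is a minimal sketch of a document with nodes, relationships, and metadata, plus a check a pipeline could run. It does not use CALM's actual schema or CLI: the field names (`id`, `source`, `target`), the example system, and the `validate_architecture` helper are assumptions made purely for illustration.

```python
def validate_architecture(doc):
    """Return a list of error strings; an empty list means the document passed."""
    errors = []
    seen = set()
    for node in doc.get("nodes", []):
        node_id = node.get("id")
        if node_id in seen:
            errors.append(f"duplicate node id: {node_id}")
        seen.add(node_id)
    # Every relationship must connect two nodes that are actually defined.
    for rel in doc.get("relationships", []):
        for end in ("source", "target"):
            if rel.get(end) not in seen:
                errors.append(
                    f"relationship {rel.get('id')} has unknown {end}: {rel.get(end)}"
                )
    return errors


# Hypothetical architecture document (not the real CALM format).
architecture = {
    "metadata": {"owner": "payments-team"},
    "nodes": [
        {"id": "web", "type": "service"},
        {"id": "db", "type": "database"},
    ],
    "relationships": [
        {"id": "web-to-db", "source": "web", "target": "db"},
        {"id": "web-to-cache", "source": "web", "target": "cache"},
    ],
}
errors = validate_architecture(architecture)
print(errors)
```

In a CI job, a non-empty `errors` list would typically fail the build, which is the "shift left" effect the excerpt describes: the undefined `cache` node is reported at review time rather than discovered at deployment.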

Shadow AI is surging — getting AI adoption right is your best defense

Despite the clarity of this progression, many organizations struggle to begin. One of the most common reasons is poor platform selection. Either no tool is made available, or the wrong class of tool is introduced. Sometimes what is offered is too narrow, designed for one function or team. Sometimes it is too technical, requiring configuration or training that most users aren’t prepared for. In other cases, the tool is so heavily restricted that users cannot complete meaningful work. Any of these mistakes can derail adoption. A tool that is not trusted or useful will not be used. And without usage, there is no feedback, value, or justification for scale. ... The best entry point is a general-purpose AI assistant designed for enterprise use. It must be simple to access, require no setup, and provide immediate value across a range of roles. It must also meet enterprise requirements for data security, identity management, policy enforcement, and model transparency. This is not a niche solution. It is a foundation layer. It should allow employees to experiment, complete tasks, and build fluency in a way that is observable, governable, and safe. Several platforms meet these needs. ChatGPT Enterprise provides a secure, hosted version of GPT-5 with zero data retention, administrative oversight, and SSO integration. It is simple to deploy and easy to use.

AI and the impact on our skills – the Precautionary Principle must apply

There is much public comment about AI replacing jobs or specific tasks within roles, and this is often cited as a source of productivity improvement. Often we hear about how junior legal professionals can be easily replaced since much of their work is related to the production of standard contracts and other documents, and these tasks can be performed by LLMs. We hear much of the same narrative from the accounting and consulting worlds. ... The greatest learning experiences come from making mistakes. Problem-solving skills come from experience. Intuition is a skill that is developed from repeatedly working in real-world environments. AI systems do make mistakes and these can be caught and corrected by a human, but it is not the same as the human making the mistake. Correcting the mistakes made by AI systems is in itself a skill, but a different one. ... In a rapidly evolving world in which AI has the potential to play a major role, it is appropriate that we apply the Precautionary Principle in determining how to automate with AI. The scientific evidence of the impact of AI-enabled automation is still incomplete, but more is being learned every day. However, skill loss is a serious, and possibly irreversible, risk. The integrity of education systems, the reputations of organisations and individuals, and our own ability to trust in complex decision-making processes, are at stake.

Ransomware-Resilient Storage: The New Frontline Defense in a High-Stakes Cyber Battle

The cornerstone of ransomware resilience is immutability: data written to storage cannot be altered or deleted until its retention period expires. This write-once-read-many capability means backup snapshots or data blobs are locked for prescribed retention periods, impervious to tampering even by attackers or system administrators with elevated privileges. Hardware and software enforce this immutability by preventing any writes or deletes on designated volumes, snapshots, or objects once committed, creating a "logical air gap" of protection without the need for physical media isolation. ... Moving deeper, efforts are underway to harden storage hardware directly. Technologies such as FlashGuard, explored experimentally by IBM and Intel collaborations, embed rollback capabilities within SSD controllers. By preserving prior versions of data pages on-device, FlashGuard can quickly revert files corrupted or encrypted by ransomware without network or host dependency. ... Though not widespread in production, these capabilities signal a future where storage devices autonomously resist ransomware impact, a powerful complement to immutable snapshotting. While these cutting-edge hardware-level protections offer rapid recovery and autonomous resilience, organizations also consider complementary isolation strategies like air-gapping to create robust multi-layered defense boundaries against ransomware threats.
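
The retention-lock rule behind write-once-read-many storage can be illustrated with a small in-memory model. This is only a sketch of the rule itself: the `WormStore` class, its method names, and the injected clock are hypothetical, and real products enforce the lock in storage hardware or software beneath the application, which is what keeps privileged users from bypassing it.

```python
from datetime import datetime, timedelta, timezone


class RetentionLockError(Exception):
    """Raised when a write or delete targets an object still under retention."""


class WormStore:
    """Toy write-once-read-many store: objects are locked until retention expires."""

    def __init__(self, clock=None):
        self._objects = {}  # key -> (data, locked_until)
        # The clock is injectable so the example can simulate time passing.
        self._clock = clock or (lambda: datetime.now(timezone.utc))

    def _is_locked(self, key):
        return key in self._objects and self._clock() < self._objects[key][1]

    def put(self, key, data, retention):
        if self._is_locked(key):
            raise RetentionLockError(f"{key} is locked; overwrite refused")
        self._objects[key] = (data, self._clock() + retention)

    def get(self, key):
        return self._objects[key][0]

    def delete(self, key):
        if self._is_locked(key):
            raise RetentionLockError(f"{key} is locked; delete refused")
        del self._objects[key]


# Simulated clock so the retention period can be stepped through.
now = [datetime(2025, 1, 1, tzinfo=timezone.utc)]
store = WormStore(clock=lambda: now[0])
store.put("backup-001", b"snapshot", retention=timedelta(days=30))

try:
    store.delete("backup-001")  # inside the retention window
    blocked = False
except RetentionLockError:
    blocked = True

now[0] += timedelta(days=31)
store.delete("backup-001")  # permitted once the retention period has passed
```

The point of the model is that neither `put` nor `delete` offers a bypass path during the retention window, which mirrors the "logical air gap" idea: there is no privileged operation an attacker could borrow.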

How an Internal AI Governance Council Drives Responsible Innovation

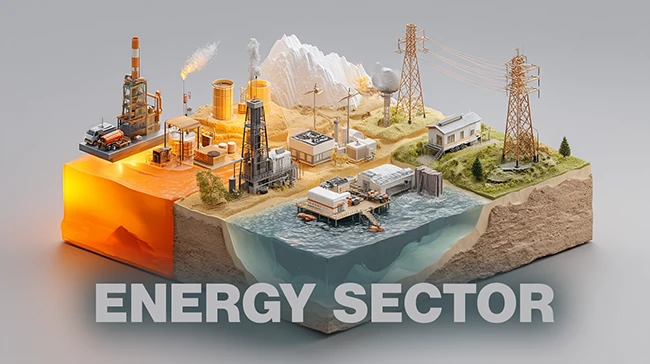

The efficacy of AI governance hinges on the council’s composition and operational approach. An optimal governance council typically includes cross-functional representation from executive leadership, IT, compliance and legal teams, human resources, product management, and frontline employees. This diversified representation ensures comprehensive coverage of ethical considerations, compliance requirements, and operational realities. Initial steps in operationalizing a council involve creating strong AI usage policies, establishing approved tools, and developing clear monitoring and validation protocols. ... While initial governance frameworks often focus on strict risk management and regulatory compliance, the long-term goal shifts toward empowerment and innovation. Mature governance practices balance caution with enablement, providing organizations with a dynamic, iterative approach to AI implementation. This involves reassessing and adapting governance strategies, aligning them with evolving technologies, organizational objectives, and regulatory expectations. The non-deterministic, probabilistic nature of AI, particularly of generative models, necessitates continuous human oversight. Effective governance strategies embed this human-in-the-loop approach, ensuring AI enhances decision-making without fully automating critical processes.

The energy sector has no time to wait for the next cyberattack

Recent findings have raised concerns about solar infrastructure. Some Chinese-made solar inverters were found to have built-in communication equipment that isn’t fully explained. In theory, these devices could be triggered remotely to shut down inverters, potentially causing widespread power disruptions. The discovery has raised fears that covert malware may have been installed in critical energy infrastructure across the U.S. and Europe, which could enable remote attacks during conflicts. ... Many OT systems were built decades ago and weren’t designed with cyber threats in mind. They often lack updates, patches, and support, and older software and hardware don’t always work with new security solutions. Upgrading them without disrupting operations is a complex task. OT systems used to be kept separate from the Internet to prevent remote attacks. Now, the push for real-time data, remote monitoring, and automation has connected these systems to IT networks. That makes operations more efficient, but it also gives cybercriminals new ways to exploit weaknesses that were once isolated. Energy companies are cautious about overhauling old systems because it’s expensive and can interrupt service. But keeping legacy systems in play creates security gaps, especially when connected to networks or IoT devices. Protecting these systems while moving to newer, more secure tech takes planning, investment, and IT-OT collaboration.

Agentic AI Browser an Easy Mark for Online Scammers

In a Wednesday blog post, researchers from Guardio wrote that Comet - one of the first AI browsers to reach consumers - clicked through fake storefronts, submitted sensitive data to phishing sites and failed to recognize malicious prompts designed to hijack its behavior. The Tel Aviv-based security firm calls the problem "scamlexity," a messy intersection of human-like automation and old-fashioned social engineering that creates "a new, invisible scam surface" that scales to millions of potential victims at once. In a clash between the sophistication of generative models built into browsers and the simplicity of phishing tricks that have trapped users for decades, "even the oldest tricks in the scammer's playbook become more dangerous in the hands of AI browsing." One of the headline features of AI browsers is one-click shopping. Researchers spun up a fake "Walmart" storefront complete with polished design, realistic listings and a seamless checkout flow. ... Rather than fooling a user into downloading malicious code to putatively fix a computer problem - as in ClickFix - a PromptFix attack hides a malicious instruction inside what looks like a CAPTCHA. The AI treated the bogus challenge as routine, obeyed the hidden command and continued execution. AI agents are expected to ingest unstructured logs, alerts or even attacker-generated content during incident response.
