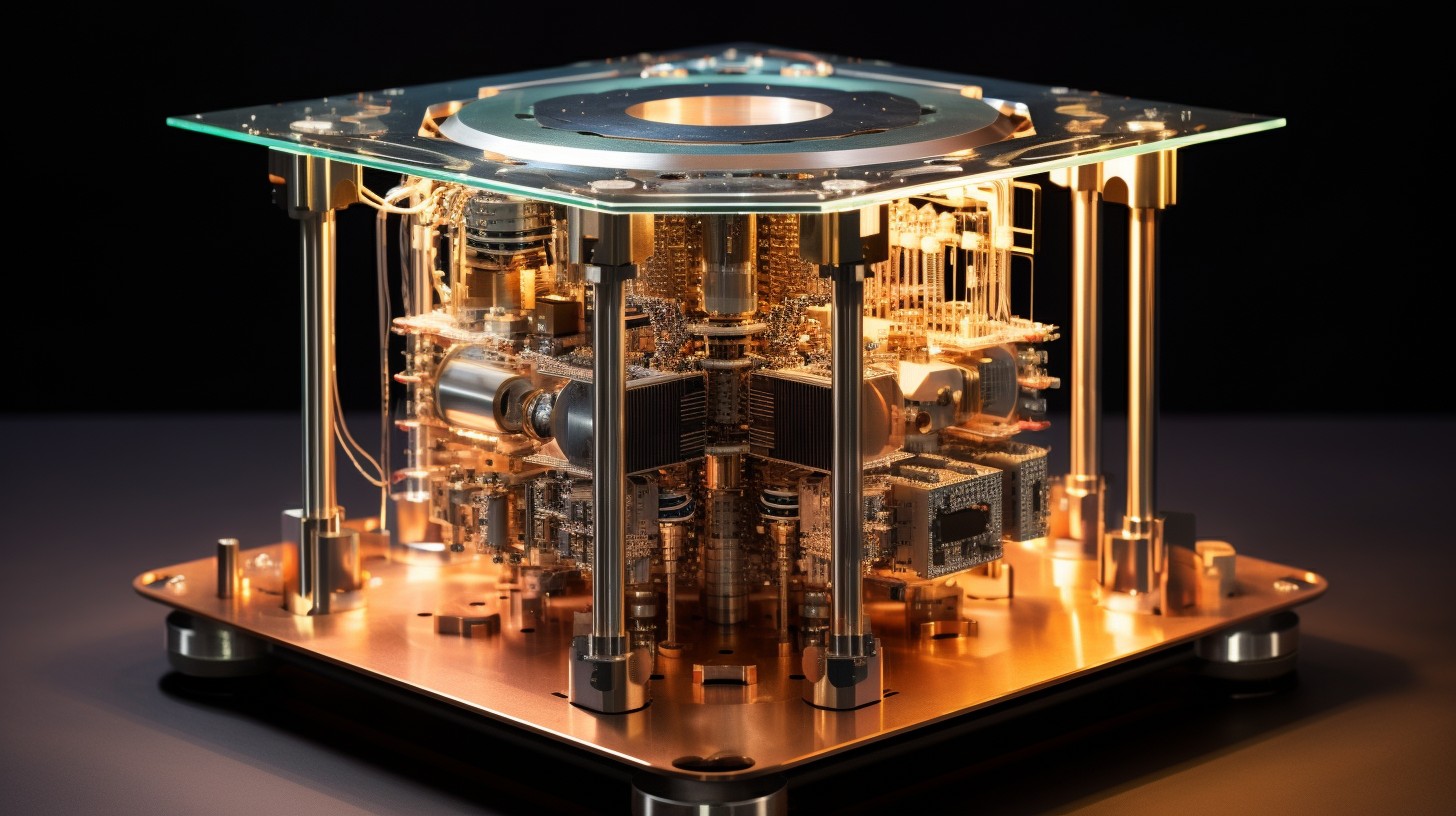

Can anyone buy a quantum computer?

One of the primary reasons quantum computers are not readily available to the

general public is their extraordinary technical requirements. These machines

require an extremely controlled environment with temperatures close to

absolute zero to prevent interference from external factors. Additionally, the

delicate nature of qubits makes them susceptible to errors caused by even the

slightest disturbances, necessitating advanced error correction techniques.

Moreover, the cost of building and operating quantum computers is exorbitant.

The infrastructure required to house and maintain these machines, along with

the specialized equipment and expertise needed to handle them, makes them

financially unattainable for most individuals or even small businesses.

However, despite the current limitations, efforts are being made to

democratize access to quantum computing. Some companies are exploring

cloud-based quantum computing services, allowing users to access quantum

computers remotely through the internet. This approach eliminates the need for

users to have their own quantum hardware, making the technology more

accessible to a wider audience.

The real impact of the cybersecurity poverty line on small organizations

The ‘cybersecurity poverty line’ is real! That said, I don’t believe people,

processes, or technology are limiting factors because significant risk

reduction is simple (technology), easy (people/process), and cheap. Bluntly,

many organizations aren’t ‘brushing their teeth’ in cybersecurity. China isn’t

targeting 99.9% of organizations, and ransomware isn’t advanced – things like

‘100% of people use strong MFA’ is the most cost-effective thing most

organizations can do to reduce their cyber risk dramatically. ... Appreciate

that Maslow’s hierarchy of needs applied to cybersecurity dictates that

revenue trumps security. We have a responsibility to steward finite resources,

and the fact is that most organizations can be adequately secured with a very

modest budget. The limiting factor is knowledge/leadership – what to do, when,

and why. ... ‘Everyone knows’ that when you are a CISO, you first do a risk

assessment against a framework. This takes X months, costs Y dollars, and

involves many discussions with the IT and security folks. I’d rather take a

few days to talk to the various executives to understand the business and see

where I can massively reduce risk while enabling the business.

Lost and Stolen Devices: A Gateway to Data Breaches and Leaks

When a computer is lost or stolen, the data it contains becomes vulnerable to

unauthorized access. Despite substantial investments in endpoint security

controls, devices are often not as secure as organizations would hope. This

vulnerability has led to numerous high-profile data breaches over the years.

... When a computer falls into the wrong hands, unauthorized access to

sensitive data becomes a real threat. Even if the device is

password-protected, threat actors can employ various techniques to bypass

security measures and gain access to files, emails, and other confidential

information. ... Without encryption, thieves can easily access and misuse

sensitive data, putting both individuals and organizations at risk. Having

encryption enabled is often a legally required control, and not being able to

prove its efficacy can expose an organization to liability. ... In some cases,

lost or stolen computers are used as a means to gain physical access to

corporate networks. If an employee’s laptop is stolen, and it contains access

credentials or VPN configurations, the thief may use this information to

infiltrate the organization’s network.

Cracking the Code: Secure Software Architecture in a Generative AI World

Code vulnerabilities serve as entry points for attackers. Given the complexity

of GAI models, these vulnerabilities can be nuanced. We are in the early days

of using code generation to inject vulnerabilities. Now is the time to take

action by keeping humans in the loop with static code analysis and code

reviews. Static Code Analysis: Conducting static code analysis (SCA) can help

identify vulnerabilities in the code without running the program. This is

crucial as running a program with vulnerabilities could compromise the entire

system. SCA also enables compliance monitoring to standards such as Federal

Information Processing Standards (FIPS) and other NIST guidelines. Code

Reviews: Peer-reviewed coding practices allow for a second set of eyes to

catch potential vulnerabilities, reducing the likelihood of a security breach.

Make this a mandatory step in your DevSecOps process to catch and fix issues

before they escalate. The intricate nature of GAI models amplifies the risks

associated with code-level vulnerabilities.
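
To make the static analysis idea concrete, here is a minimal sketch in Python that inspects source code without ever running it. It is not a replacement for a real analyzer: the rule set (`RISKY_CALLS`), the function name `find_risky_calls`, and the sample snippet are all assumptions made for illustration, and it only flags direct calls to `eval` and `exec`.

```python
import ast
from typing import List, Tuple

# Hypothetical rule set for this sketch: builtins that execute arbitrary code.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source: str) -> List[Tuple[int, str]]:
    """Return (line number, function name) for each risky call found.

    The source text is only parsed into a syntax tree; it is never executed.
    """
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Illustrative input, e.g. a snippet produced by a code generator.
SAMPLE = '''
def load(expr):
    return eval(expr)

def safe(x):
    return x + 1
'''

if __name__ == "__main__":
    for line, name in find_risky_calls(SAMPLE):
        print(f"line {line}: call to {name}()")
```

A check like this can run in a pipeline before a human code review, so that reviewers start from a list of flagged lines rather than from scratch.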

Global Chip Shortage: Everything You Need to Know

Supplies of chips began to improve in 2022, due in part to additional capacity

with the slowdown in sales of PCs, smartphones and consumer electronics.

Foundries in Taiwan reallocated some of this capacity to the automobile and

industrial end markets, according to JP Morgan. However, automakers are

increasingly requiring chips with higher computing power, especially as the

industry transitions to electric and autonomous vehicles, and these chips are

significantly different from the ones used in PCs and smartphones. Other

issues include tensions between the U.S. and China, which continue to impact

the global supply chain. This is “… spurring new government controls on sales

of chips to China,” the world’s largest semiconductor market, the

Semiconductor Industry Association noted in its State of the Industry report.

There are other significant policy challenges as well, such as the ability to

strengthen the U.S. semiconductor workforce by reforming the country’s

high-skilled immigration and STEM education systems to increase the number of

workers and help contain the talent shortage, according to the SIA.

CDO interview: Carter Cousineau, vice-president of data and model governance, Thomson Reuters

Cousineau says a key part of the work she’s undertaking at Thomson Reuters

involves building the foundational elements for effective data governance.

“That’s anything around applying policies and standards, and then moving those

approaches into action, which involves the implementation of any controls and

tools that can help, support and validate the work we’re doing in practice,”

she says. ... “My approach to governance and ethics was not to build

different frameworks and tools that wouldn’t be able to fit into everyone’s

everyday workflows. These workflows differ greatly around the business. The

way finance, for example, uses AI machine learning models is very different

than product or sales,” she says. “We spent a lot of time understanding the

workflows. The last thing I want to do is to make data scientists, model

developers and product owners have another list of things to do. If you can

make governance and ethics part of their workflows automatically, it becomes a

lot easier – and we’ve done that.”

Open Source Development Threatened in Europe

European developers would stop contributing upstream to open source software

projects in the event of the passage of the CRA, said Greg Kroah-Hartman, a

fellow at the Linux Foundation and the maintainer of the stable branch for

Linux. Furthermore, it may mean the use of Linux in Europe is untenable. ...

As it stands now, the CRA burdens open source developers. It makes them liable

for the open source code they share. Technologies considered “critical” face

the most significant scrutiny. These critical technologies include operating

systems, container runtimes, networking interfaces, password managers,

microcontrollers, etc. The language may change, but it will go into the CRA

unless some last-minute changes are made. The CRA calls for standards that

still need to be developed. High-risk critical products like an OS would

require mandatory third-party assessments. Developers must perform a

cybersecurity risk assessment to ensure the product delivers without

vulnerabilities.

How to use structured concurrency in C#

Structured concurrency is a strategy for handling concurrent operations in

asynchronous programming. It relies on task scopes and proper resource cleanup

to provide several benefits including cleaner code, simpler error handling,

and prevention of resource leaks. Structured concurrency emphasizes the idea

that all asynchronous tasks should be structured within a specific context,

allowing developers to effectively compose and control the flow of these

tasks. To better manage the execution of async operations, structured

concurrency introduces the concept of task scopes. Task scopes provide a

logical unit that sets boundaries for concurrent tasks. All tasks executed

within a task scope are closely monitored and their lifecycle is carefully

managed. If any task within the scope encounters failure or cancellation, all

other tasks within that scope are automatically canceled as well. This ensures

proper cleanup and prevents resource leaks. ... In C#, we can implement

structured concurrency by using the features available in the

System.Threading.Channels namespace. This namespace offers helpful

constructs like Channel and ChannelReader that make implementing structured

concurrency easier.
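
The task-scope behavior described above (one failure cancels every sibling, and nothing outlives the scope) can be sketched in a few lines. This example is in Python rather than C#, purely to show the concept; it is not the article's Channel-based approach. The names `run_in_scope`, `worker`, and `demo` are hypothetical, and Python 3.11 and later ship a built-in equivalent in `asyncio.TaskGroup`.

```python
import asyncio


async def run_in_scope(*coros):
    """Run coroutines as one scope: if any fails, cancel the siblings.

    Returns the results in order, or re-raises the first failure once every
    task in the scope has finished or been cancelled.
    """
    tasks = [asyncio.ensure_future(c) for c in coros]
    try:
        # gather propagates the first exception raised by any task.
        return await asyncio.gather(*tasks)
    finally:
        # On failure or outer cancellation, cancel whatever is still running
        # and wait for it, so no task outlives the scope.
        for task in tasks:
            task.cancel()
        await asyncio.gather(*tasks, return_exceptions=True)


async def worker(name, delay, log, fail=False):
    """Illustrative task that records how it ended."""
    try:
        await asyncio.sleep(delay)
        if fail:
            raise RuntimeError(f"{name} failed")
        log.append(f"{name} finished")
        return name
    except asyncio.CancelledError:
        log.append(f"{name} cancelled")  # cleanup would go here
        raise


async def demo():
    log = []
    try:
        await run_in_scope(
            worker("fast", 0.01, log, fail=True),
            worker("slow", 1.0, log),
        )
        error = None
    except RuntimeError as exc:
        error = str(exc)
    return error, log


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In the demo, the failing task ends the scope early and the slow task is cancelled instead of being left running in the background, which is the resource-leak prevention the excerpt refers to.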

CIOs press ahead for gen AI edge — despite misgivings

Power supply giant Generac is one company that’s all in on gen AI, says CIO

Tim Dickson. “We are now fully embracing generative AI, with three innovative

pilots that are live,” he says. “First, we launched a private instance of

GPT-3.5 for internal enterprise exploration. Next, we launched a customer

service chatbot to answer customer call questions for our customer service

reps. Lastly, we tapped into our data lake to enrich and tailor specific

customer emails to drive the conviction of our products and ultimately

increased sales. These three programs are already delivering value for the

business.” And doing so requires taking risks, he says, something he believes

IT leaders must embrace to succeed today. “We are indoctrinating a culture of

gen AI within the company,” he adds. Still, the widening availability of gen

AI to the public at large keeps many CIOs awake at night. Few enterprises have

slammed the brakes, but no doubt it has led to a high emphasis on corporate

guardrails, frameworks, and shared responsibility in the C-suite.

Data Governance vs. Data Management

Data Management covers implementations of policies and procedures that do not

fall under the mantle of Data Governance. Mainly, focusing on specific

technologies and tools and their applications lies outside Data Governance. To

understand why these Data Management activities and discussions happen outside

of Data Governance, consider that Data Governance meetings mainly comprise

businesspeople, councils, subject matter experts (SMEs), stewards, and

partners without specialized IT knowledge. While Data Governance members want

to remain informed about Data Management at a high level, they do not need the

technical details. For example, Data Governance discussions may center around

protecting data and creating standards around encrypting data. However, IT

staff may take conversations deeper, outside of Data Governance, by discussing

what encryption algorithm to use and when, how to customize it through

ENCRYPT-CSA, or how big to make the key size. By moving the technical

details outside of Data Governance, organizations can focus on data-driven

culture initiatives, change an organization’s approach towards data, and

address other human behaviors without getting bogged down in minutiae.

Quote for the day:

“The manager asks how and when, the leader asks what and why.” --

Warren Bennis
