How to Become an IT Thought Leader

Being overly tech-centric is a common mistake aspiring thought leaders make. Such individuals start with a technology, then look for problems to solve. “Instead, it's important to remember that an IT thought leader drives digital change,” Zhao says. “Understanding the technology is only one aspect of IT thought leadership.” Ross concurs. “I’ve seen several troubling examples of large technology purchases occurring before key business requirements were fully understood,” he says. “Seek first to understand the desired business outcomes and remember that technology is a potential enabler of those outcomes, but never a cure-all.” A strong business case is essential for any proposed new technology, Bethavandu says. “If your company is not ready for, say, DevOps or containerization, be self-aware and don’t push for those projects until your organization is ready,” he states. On the other hand, excessive caution can also be dangerous. “If you want to be a thought leader, you have to be bold and you cannot be afraid of failing,” Bethavandu says.

Most Attackers Need Less Than 10 Hours to Find Weaknesses

Overall, nearly three-quarters of ethical hackers think most organizations lack the necessary detection and response capabilities to stop attacks, according to the Bishop Fox-SANS survey. The data should convince organizations to not just focus on preventing attacks, but aim to quickly detect and respond to attacks as a way to limit damage, Bishop Fox's Eston says. "Everyone eventually is going to be hacked, so it comes down to incident response and how you respond to an attack, as opposed to protecting against every attack vector," he says. "It is almost impossible to stop one person from clicking on a link." In addition, companies are struggling to secure many parts of their attack surface, the report stated. Third parties, remote work, the adoption of cloud infrastructure, and the increased pace of application development all contributed significantly to expanding organizations' attack surfaces, penetration testers said. Yet the human element continues to be the most critical vulnerability, by far.

Discover how technology helps manage the growth in digital evidence

With limited resources, even the most skilled law-enforcement personnel are hard-pressed to comb through terabytes of data that may include hours of videos, tens of thousands of images, and hundreds of thousands of words in the form of text, email, and other sources. One possible solution is to augment skilled investigators and forensic examiners with technology. Some of the key technological capabilities that can be applied to this problem are AI and machine learning. AI and machine learning models and applications create processes that read, watch, extract, index, sort, filter, translate, and transcribe information from text, images, and video. By utilizing technology to carve through and analyze data, it’s possible to reduce the data mountain to a series of small hills of related content and add tags that make it searchable. That allows people to spend their time and energy on work that is most valuable in the investigation. The good news is that help is available. Microsoft has multiple AI and machine learning processes within our Microsoft Azure Cognitive Services.

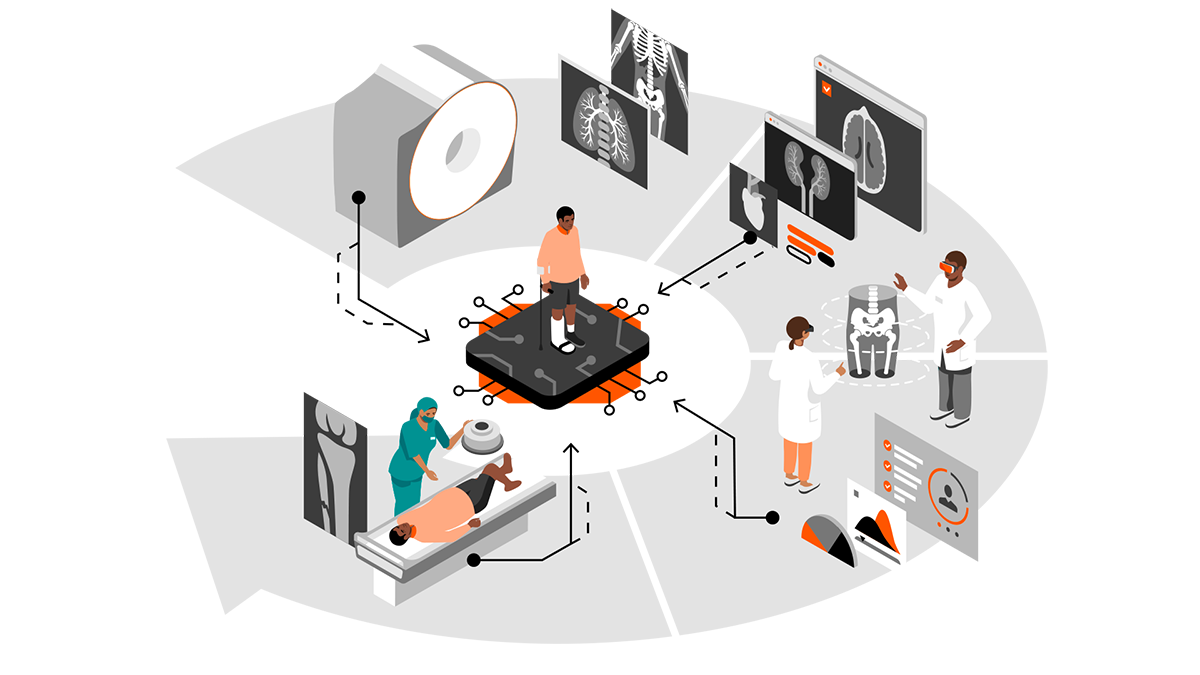

The modern enterprise imaging and data value chain

The costs and consequences of the current fragmented state of health care data are far-reaching: operational inefficiencies and unnecessary duplication, treatment errors, and missed opportunities for basic research. Recent medical literature is filled with examples of missed opportunities and patients put at risk because of a lack of data sharing. More than four million Medicare patients are discharged to skilled nursing facilities (SNFs) every year. Most of them are elderly patients with complex conditions, and the transition can be hazardous. ... “Weak transitional care practices between hospitals and SNFs compromise quality and safety outcomes for this population,” researchers noted. Even within hospitals, sharing data remains a major problem. ... Data silos and incompatible data sets remain another roadblock. In a 2019 article in the journal JCO Clinical Cancer Informatics, researchers analyzed data from the Cancer Imaging Archive (TCIA), looking specifically at nine lung and brain research data sets containing 659 data fields in order to understand what would be required to harmonize data for cross-study access.

Cloud’s key role in the emerging hybrid workforce

One key to the mistakes may be the overuse of cloud computing. Public clouds provide more scalable and accessible systems on demand, but they are not always cost-effective. I fear that much like when any technology becomes what the cool kids are using, cloud is being picked for emotional reasons and not business reasons. On-premises hardware costs have fallen a great deal during the past 10 years. Using these more traditional methods of storage and compute may be way more cost-effective than the cloud in some instances and may be just as accessible, depending on the location of the workforce. My hope is that moving to the cloud, which was accelerated by the pandemic, does not make us lose sight of making business cases for the use of any technology. Another core mistake that may bring down companies is not having security plans and technology to support the new hybrid workforce. Although few numbers have emerged, I suspect that this is going to be an issue for about 50% of companies supporting a remote workforce.

Why zero trust should be the foundation of your cybersecurity ecosystem

Recently, zero trust has developed a large following due to a surge in insider attacks and an increase in remote work – both of which challenge the effectiveness of traditional perimeter-based security approaches. A 2021 global enterprise survey found that 72% of respondents had adopted zero trust or planned to in the near future. Gartner predicts that spending on zero trust solutions will more than double to $1.674 billion between now and 2025. Governments are also mandating zero trust architectures for federal organizations. These endorsements from the largest organizations have accelerated zero trust adoption across every sector. Moreover, these developments suggest that zero trust will soon become the default security approach for every organization. Zero trust enables organizations to protect their assets by reducing the chance and impact of a breach. It also reduces the average breach cost by at least $1.76 million, can prevent five cyber disasters per year, and can save an average of $20.1 million in application downtime costs.

Walls between technology pros and customers are coming down at mainstream companies

Tools assisting with this engagement include "prediction, automation, smart job sites and digital twins," he says. "We have resources in each of our geographic regions where we scale new technology from project to project to ensure the 'why' is understood, provide necessary training and support, and educate teams on how that technology solution makes sense in current processes and day-to-day operations." At the same time, getting technology professionals up to speed with crucial pieces of this customer collaboration -- user experience (UX) and design thinking -- is a challenge, McFarland adds. "There is a widely recognized expectation to create seamless and positive customer experiences. That said, specific training and technological capabilities are a headwind that professionals are experiencing. While legacy employees may be fully immersed and knowledgeable about a certain program and its technical capabilities, it is more unusual to have both the technical and UX design expertise. The construction industry is working to find the right balance of technology expertise and awareness with UX and design proficiencies."

Why Is the Future of Cloud Computing Distributed Cloud?

Distributed cloud redefines cloud computing: it is a public cloud architecture that handles data processing and storage in a distributed manner. Simply put, a business using distributed cloud computing can store and process its data in various data centers, some of which may be physically situated in other regions. A content delivery network (CDN), a geographically distributed network architecture, is an example of a distributed cloud. It is designed to deliver content (most frequently video or music) quickly and efficiently to viewers in various places, significantly reducing download times. Distributed clouds, however, offer advantages to more than just content producers and artists. They can be utilized in multiple business contexts, including transportation and sales. It is possible to use a distributed cloud even in particular geographical regions. For instance, a supplier of file transfer services can format video and store content on CDNs spread out globally using centralized cloud resources.

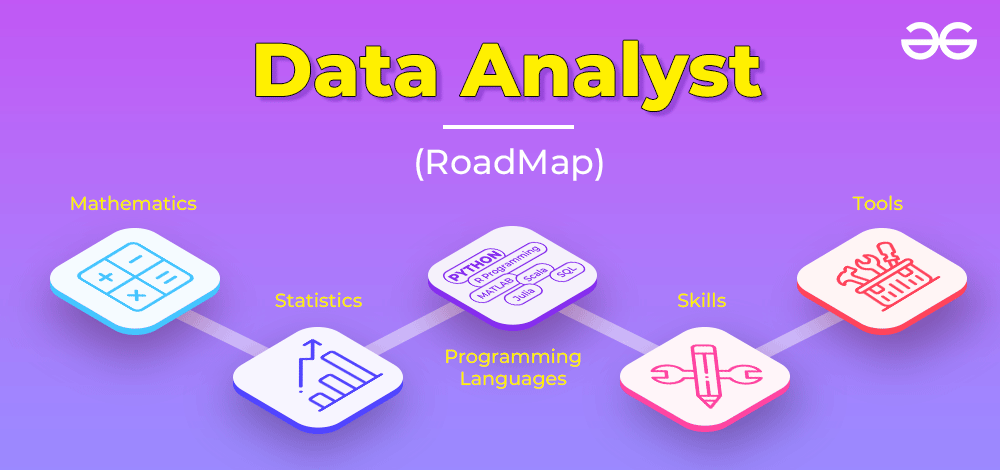

How to Become a Data Analyst – Complete Roadmap

First, understand that the field of data analysis is not about computer science but about applying computation, analysis, and statistics. This field focuses on working with large datasets and producing useful insights that help solve real-life problems. The whole process starts with a hypothesis that needs to be tested, followed by gathering new data to test it. There are two major categories of data analyst: tech and non-tech. They work with different tools, and tech-domain professionals are also required to know the relevant programming languages (such as R or Python). A working professional should be fluent in statistics so that they can turn any given amount of raw data into a well-organized structure. ... Today, a vast number of companies generate data on a daily basis and use it to make crucial business decisions. It helps them decide their future goals and set new milestones. We’re living in a world where data is the new fuel, and to make it useful, data analysts are required in every sector.

Software developer: A day in the life

An analytics role will require you to learn new skills continuously, look at things in new ways, and embrace new perspectives. In technology and business, things happen quickly. It is important to always keep up with what is happening in the industries in which you are involved. Never forget that at its core, technology is about problem-solving. Don’t get too attached to any coding language; just be aware that you probably won’t be able to use the language you like, do the refactor you want, or perform the update you expect all the time. The end focus is always on the client, and their needs take priority over developer preferences. Be prepared to use English every day. To keep your skills sharp, read documentation, talk to others often, and watch videos. ... Any analytics professional who is interested in elevating their career should always be attentive to new technologies and updates, become an expert in some specific language or technology, and understand low-level programming in a variety of languages. Finally, if you enjoy logic, math, and problem-solving, consider a career in software development. The world needs your skills to solve big challenges.

Quote for the day:

"Leadership Principle: As hunger increases, excuses decrease." -- Orrin Woodward
