The finance industry's strict regulatory regime makes it an ideal sector for AI investment, because the technology can automate tasks that follow specific systems, set rules and procedures. We are likely to see an increasing presence of artificial intelligence in the financial services sector in the next few years. Tasks such as solicitation or financial advice could easily be handled through a bot interface, using existing artificial intelligence and automation technologies. Some companies are already using more advanced AI applications, such as IBM’s Watson. However, most of these companies are using the technology experimentally, rather than completely overhauling their existing procedures in favour of artificial intelligence. Despite its potential, artificial intelligence is still in its infancy. Until it is fully developed, there will still be arguments about the accuracy and ability of AI compared with a well-trained human representative.

An AI system might be just as good at translating the document, but a human can probably also perform related tasks such as understanding Chinese speech, answering questions about Chinese culture and recommending a good Chinese restaurant. “Very different AI systems would be needed for each of these tasks,” the report said. “Machine performance may degrade dramatically if the original task is modified even slightly.” Alan Mackworth, a professor at the University of British Columbia who holds the Canada research chair in artificial intelligence, said he thinks it will be at least a decade or two before researchers make any real progress in artificial general intelligence. Some experts don’t think general AI is possible at all, but Mackworth cautioned against dismissing anything as impossible with a long enough time horizon. “It’s very risky to say AI won’t be able to do this, that and the other thing,” he said. “Almost every time that prediction has been made, it’s eventually been proven false.”

RegTech Companies in the US Driving Down Compliance Costs to Enable Innovation

Regulatory and compliance issues are some of the most important, complex and resource-consuming problems for any organization to solve, especially for startups with limited resources. Over decades of development, regulatory requirements and documentation have grown to the point where decoding them takes special expertise and skills. Globally, roughly $80 billion is spent on governance, risk and compliance, and the market is only expected to grow, reaching $120 billion in the next five years. The costly and complex procedures imposed on every financial institution around the world have driven the growth and development of solutions addressing the issue. RegTech companies now offer advanced, AI-powered solutions, and each hub has its own set of market leaders. Below, we review some of the RegTech solution providers unleashing innovative capacity for financial institutions in the United States.

This is probably the most non-value-add activity a data scientist performs, and something they are not trained for in the first place. The foundation of clean, connected data is critical for a successful outcome. Data cleansing, connecting keys across data sources, and managing the data set add up to a significant amount of work that requires special skills. For example, in the customer analytics world, the ability to draw signals from customer data in one channel and predict behavior in another channel is the holy grail. People want to know the value of a positive review on their brand page. If the social marketer could quantify that a customer who leaves a positive review is twice as likely to become a high-value customer, they would be able to justify the value of social marketing. The data scientist can do this, but they need a single view of the customer to start their work. Today the data scientist is spending 70 to 80% of their effort getting the data and not doing their real job.
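To make the "twice as likely" claim concrete, here is a minimal sketch of how such a figure could be computed once two channels share a customer key. The records, field layout and function names are all hypothetical and made up for illustration; real data would first need the cleansing and key-matching work described above.

```python
# Hypothetical records from two channels, keyed by a shared customer ID.
reviews = [  # social channel: (customer_id, left_positive_review)
    ("c1", True), ("c2", True), ("c3", False),
    ("c4", False), ("c5", True), ("c6", False),
]
purchases = {  # sales channel: customer_id -> became a high-value customer
    "c1": True, "c2": True, "c3": False,
    "c4": True, "c5": False, "c6": False,
}


def high_value_rate(flags):
    """Share of True values in a list of high-value flags."""
    return sum(flags) / len(flags) if flags else 0.0


def review_lift(reviews, purchases):
    """Ratio of high-value rates: positive reviewers vs. everyone else."""
    # Join the two sources on customer_id (the "single view" step).
    joined = [(positive, purchases[cid])
              for cid, positive in reviews if cid in purchases]
    rate_positive = high_value_rate([high for pos, high in joined if pos])
    rate_other = high_value_rate([high for pos, high in joined if not pos])
    return rate_positive / rate_other if rate_other else float("inf")


lift = review_lift(reviews, purchases)
print(f"Positive reviewers are {lift:.1f}x as likely to be high value")
```

With this toy data, two of three positive reviewers become high value against one of three others, so the ratio comes out at 2. The arithmetic is trivial; the join on a trustworthy customer key is the part that consumes the effort.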

The Difference Between Clarity and Focus, and Why You Need Both

Focus is knowing which actions to take every single day to get there. When you get into your car, you have a starting place and a destination. The map or GPS gets you from where you are to where you want to be. Clarity is where you want to be. Focus is doing what you need to do to get the car to its destination. Every choice you make behind the wheel will either get you closer to your destination, or further away. To further the analogy, the GPS or map is your mentor or guide who will shorten the learning curve, so you're not driving around in circles. And the fuel is your why. Your why is the energy behind every action you take. My why was to get my mother out of debt. It's what kept me motivated every day I was trying to grow my business, and championed me through the inevitable setbacks.

What Is The Hidden RoI Of Mentoring? What You Need To Know

The limits you place upon yourself are far greater than the limits that others could ever place on you. A mentor can help you remove the limits you place upon yourself, and help you use more of the potential that is within you. This ROI seems hidden because it is not within what you do or even what you think; it is often within your subconscious beliefs… the part of you that frames the opportunities you will be able to see or not see. The benefits of seeing more opportunities and taking action on them can be limitless. ... You can only achieve to the level of the potential you see within you. So, when a mentor helps you see more of your potential, it opens a door to more ways of using that potential too. A mentor often helps you see more of what you already know and do, and helps you see that not using the potential inside you also limits you from using the potential that is within your team.

Nissan Canada Finance Issues Data Breach Alert

Nissan Canada Finance, which provides financing for vehicle buyers and leasers, is warning 1.13 million current and former customers that their personal information may have been stolen. NCF, headquartered in Ontario, says in a security alert that it is "a victim of a data breach that may have involved unauthorized person(s) gaining access to the personal information of some customers that have financed their vehicles through Nissan Canada Finance and Infiniti Financial Services Canada." NCF is a subsidiary of carmaker Nissan Canada, which builds 60 models of vehicles under the Nissan, Infiniti and Datsun brand names. "At this time, we have no indication that Nissan or Infiniti customers in Canada who did not obtain financing through NCF are affected," it says in the notification, issued Dec. 21. The company says it is informing customers by letter and, where possible, also by email.

Business Process Mining Promises Big Data Payoff

“Honestly, what we’re doing is removing the need to pay a Deloitte or McKinsey to come in and do all this discovery and find the issue,” says Celonis Chief Marketing Officer Sam Werner. “We can replace the expensive consultants that get paid today to go do this work, which by the way can be very disruptive for your organization.” Process mining starts with data discovery. With its product, called Proactive Insights (or PI), Celonis scans mainstream ERP systems from the likes of SAP, Oracle, and Microsoft, identifies the business processes, and then constructs a visual representation of how each process works. This gives architects a low-level view of, for example, how many different places each individual purchase order waits for approval. If you’ve ever seen a BPM tool, you know that visualizations of business processes can get quite messy. The so-called “process spaghetti” demonstrates that, while there typically is a standard way to do things, variations inevitably creep into the process.
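The general idea behind process discovery can be sketched in a few lines. This is a generic illustration of grouping an event log into per-case paths, not a description of how Celonis or any ERP system works; the log rows, activity names and function names are assumptions invented for the example.

```python
from collections import Counter, defaultdict

# Hypothetical event log rows: (case_id, timestamp, activity).
event_log = [
    ("PO-1", 1, "Create PO"), ("PO-1", 2, "Approve"), ("PO-1", 3, "Pay"),
    ("PO-2", 1, "Create PO"), ("PO-2", 2, "Approve"),
    ("PO-2", 3, "Approve"), ("PO-2", 4, "Pay"),
    ("PO-3", 1, "Create PO"), ("PO-3", 2, "Approve"), ("PO-3", 3, "Pay"),
]


def build_traces(log):
    """Group events by case and order each case's activities by timestamp."""
    traces = defaultdict(list)
    for case_id, _, activity in sorted(log, key=lambda row: (row[0], row[1])):
        traces[case_id].append(activity)
    return dict(traces)


def count_variants(traces):
    """Count how many cases follow each distinct path through the process."""
    return Counter(tuple(path) for path in traces.values())


def count_directly_follows(traces):
    """Count how often one activity is immediately followed by another."""
    pairs = Counter()
    for path in traces.values():
        pairs.update(zip(path, path[1:]))
    return pairs


traces = build_traces(event_log)
variants = count_variants(traces)
follows = count_directly_follows(traces)

for path, cases in variants.most_common():
    print(cases, "case(s):", " -> ".join(path))
```

In this toy log the standard path covers two purchase orders, while a third passes through approval twice. The directly-follows counts are the edges a visualization would draw, and a real log with thousands of cases and rare edges is what produces the "spaghetti" effect.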

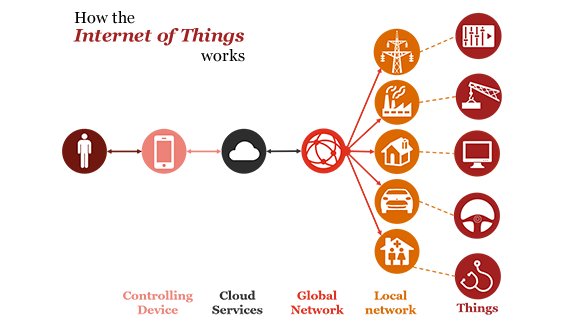

IoT is an invitation to ransomware

With IoT ransomware, the attacker’s goal is to prevent the victim from controlling a device and the function it provides. Imagine that it’s winter and you’re locked out of your home thermostat when it’s 10 degrees Fahrenheit outside. A homeowner’s instinct will be to figure out how to pay the ransom and get back control before they freeze. Now imagine that this same scenario plays out on a larger scale, such as with the HVAC system of a corporate data center. What damage could an air conditioning shutdown do to the data center’s servers? Similarly, widespread lockouts of IoT-based medical devices, such as pacemakers or drug infusion pumps, could have dire consequences. All of these IoT devices have an embedded data-gathering application, which communicates with other cloud-based applications and storage facilities. A cybercriminal can corrupt or encrypt data being sent to the cloud application in the same way that a computer system can be subverted to lock the device.

The countdown begins: Top fintech trends that India can expect in 2018

It has cemented the significance of the fintech sector in India. From increased momentum behind digital payments to product innovation in digital loan disbursement platforms, the entire sector is leaving no stone unturned to ensure that the nation functions the way it does and achieves the double-digit growth rate that it has persistently been longing for. 2018 promises to be a great year for the fintech industry, with increasing digitization and data availability. Here's what else we can expect from the fintech sector in the year to come and the top trends that will dominate this rapidly evolving space. Fast-paced digitization has unlocked fresh avenues for fintech players, especially those associated with SME-based lending. With increased digital adoption by businesses owing to demonetization and the rollout of GST, both of which have exponentially increased business-centric data, 2018 will be the year of action for SME lending-based fintech players.

Quote for the day:

"Formal education will make you a living. Self education will make you a fortune." -- Jim Rohn