Is your CISO stressed? According to Nominet, they are

Overworked CISOs would sacrifice their salary for a better work-life balance, according to the research. Investigating the causes of CISO stress, the research found that almost all CISOs are working beyond their contracted hours, on average by 10 hours per week. The report also suggests that even when they are not at work, many CISOs feel unable to switch off. As a result, CISOs reported missing family birthdays, holidays, weddings and even funerals. They’re also not taking their annual leave, sick days or time for doctor appointments, which contributes to physical and mental health problems. The key findings: 71% of CISOs said their work-life balance is too heavily weighted towards work; 95% work more than their contracted hours (on average, 10 hours longer a week), which means CISOs are giving organisations $30,319 (£23,503) worth of extra time per year; and only 2% of CISOs said they were always able to switch off from work outside of the office, with the vast majority (83%) reporting that they spend half their evenings and weekends or more thinking about work.

This latest phishing scam is spreading fake invoices loaded with malware

The attachment claims the user needs to 'enable content' in order to see the document; if this is done, it allows malicious macros and malicious URLs to deliver Emotet to the machine. Because Emotet is such a prolific botnet, the malicious emails don't come from any one particular source, but rather from infected Windows machines around the world. If a machine falls victim to Emotet, not only does the malware provide a backdoor into the system, allowing attackers to steal sensitive information, it also allows the attackers to use the machine to spread additional malware, or to let other hackers exploit compromised PCs for their own gain. The campaign spiked towards the end of January and while activity has dropped for now, financial institutions are still being targeted with Emotet phishing campaigns. "We are continuing to see Emotet traffic, though the intensity has reduced considerably," Krishnan Subramanian, researcher at Menlo Labs, told ZDNet. To protect against Emotet malware, it's recommended that users are wary of documents asking them to enable macros, especially if they come from an untrusted or unknown source. Businesses can also disable macros by default.

Research network for ethical AI launched in the UK

The initiative is being led by the Ada Lovelace Institute, an independent data and AI think tank, in partnership with the Arts and Humanities Research Council (AHRC), and will also seek to inform the development of policy and best practice around the use of AI. “The Just AI network will help ensure the development and deployment of AI and data-driven technologies serves the common good by connecting research on technical solutions with understanding of social and ethical values and impact,” said Carly Kind, director of the Ada Lovelace Institute. “We’re pleased to be working in partnership with the AHRC and with Alison Powell, whose expertise in the interrelationships between people, technology and ethics make her the ideal candidate to lead the Just AI network.” Powell, who works at the London School of Economics (LSE), specifically researches how people’s values influence how technology is built, as well as how it changes the way we live and work.

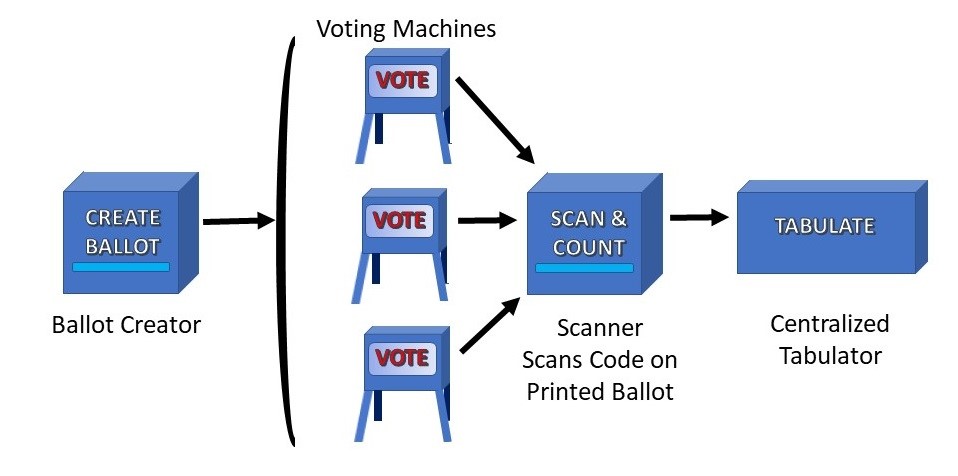

How Can We Make Election Technology Secure?

Let's start with some common problems presented by modern-day election machines.

- Single point of failure: A compromise or malfunction of election technology could decide a presidential election.
- Between elections: Election devices might be compromised while they are stored between elections.
- Corrupt updates: Any pathway for installing new software in voting machines before each election, including USB ports, may allow corrupt updates to render the system untrustworthy.
- Weak system design: Without clear guidelines and thorough, expert evaluation, the election system is likely susceptible to many expected and unexpected attacks.
- Misplaced trust: Technology is not a magic bullet. Even voting equipment from leading brands has delivered wildly wrong results in real elections. Election administrators need to safeguard the election without relying too heavily on third parties or technologies they don't control.

It takes a lot of work to lock down a complex voting system to the point where you'd bet the children's college fund, or the future of society, on its safety.

The Human-Powered Companies That Make AI Work

Machine learning is what powers today’s AI systems. Organizations are implementing one or more of the seven patterns of AI, including computer vision, natural language processing, predictive analytics, autonomous systems, pattern and anomaly detection, goal-driven systems, and hyperpersonalization across a wide range of applications. However, for these systems to create accurate generalizations, they must be trained on data. The more advanced forms of machine learning, especially deep learning neural networks, require significant volumes of data to create models with the desired levels of accuracy. It goes without saying, then, that the machine learning data needs to be clean, accurate, complete, and well-labeled so the resulting machine learning models are accurate. Whereas it has always been the case that garbage in is garbage out in computing, it is especially the case with regard to machine learning data.

There are multiple IaC frameworks and technologies. Based on Palo Alto's collection effort, the most common are Kubernetes YAML (39%), Terraform by HashiCorp (37%) and AWS CloudFormation (24%). Of these, 42% of identified CloudFormation templates, 22% of Terraform templates and 9% of Kubernetes YAML configuration files had a vulnerability. Palo Alto's analysis suggests that half the infrastructure deployments using AWS CloudFormation templates will have an insecure configuration. The report breaks this down further by type of impacted AWS service -- Amazon Elastic Compute Cloud (Amazon EC2), Amazon Relational Database Service (RDS), Amazon Simple Storage Service (Amazon S3) or Amazon Elastic Container Service (Amazon ECS). ... The absence of database encryption and logging, which are important to protect data and investigate potential unauthorized access, was also a commonly observed issue in CloudFormation templates. Half of them did not enable S3 logging and another half did not enable S3 server-side encryption.

Serverless computing: Ready or not?

By nature, serverless computing architectures tend to be more cost-effective than alternative approaches. "A core capability of serverless is that it scales up and down to zero so that when it’s not being used you aren’t paying for it," Austin advises. With serverless technology, the customer pays for consumption, not capacity, says Kevin McMahon, executive director of mobile and emerging technologies at consulting firm SPR. He compares the serverless model to owning a car versus using a ride-sharing service. "Prior to ride sharing, if you wanted to get from point A to B reliably you likely owned a car, paid for insurance and had to maintain it," he explains. "With ride-sharing, you no longer have to worry about the car, you can just pay to get from A to B when you want—you simply pay for the job that needs to be done instead of the additional infrastructure and maintenance." Serverless computing can also help adopters avoid costs related to the overallocation of resources, ensuring that expenses are in line with actual consumption, observes Craig Tavares, head of cloud at IT service management company Aptum.
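The difference between paying for capacity and paying for consumption can be made concrete with a little arithmetic. This is a rough sketch only: the function names are made up for illustration, and the prices in the usage note are hypothetical figures, not quotes from any provider.

```python
def capacity_cost(hours_provisioned, hourly_rate):
    """Cost of a server that is billed for every hour it is provisioned."""
    return hours_provisioned * hourly_rate


def consumption_cost(requests, price_per_request):
    """Cost of a serverless function billed only per request handled."""
    return requests * price_per_request


def break_even_requests(hours_provisioned, hourly_rate, price_per_request):
    """Request volume at which both models cost the same."""
    return capacity_cost(hours_provisioned, hourly_rate) / price_per_request
```

With a hypothetical always-on server at $0.10 per hour for a 720-hour month and a hypothetical $0.00002 per request, `capacity_cost(720, 0.10)` is about $72 regardless of traffic, `consumption_cost(0, 0.00002)` is zero for an idle month, and the two models break even at roughly 3.6 million requests. Below that volume the consumption model is cheaper under these assumed prices; above it, provisioned capacity wins.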

Oops! Microsoft gets 'black eye' from Teams outage

“This is definitely a black eye for Microsoft, especially when it has touted its reliability in the wake of some high-profile Slack outages in the last couple of years,” said Irwin Lazar, vice president and service director at Nemertes Research. “It is surprising that Microsoft didn't renew its certificate, and it shows that as Teams rapidly grows they will have to ensure they are addressing operational issues to prevent further downtime.” Indeed, the prompt reaction to the outage is an indication of the growing importance of Teams as more and more office workers rely on team messaging tools. “There is nothing like taking a service down to illustrate its popularity and importance. However, this is not a best practice we recommend,” Larry Cannell, a research director at Gartner, dryly noted. An SSL certificate enables a secure connection between a web browser or app and a server, and is required for HTTPS-enabled sites. It helps protect users against security risks such as man-in-the-middle attacks by allowing data to be encrypted. When a certificate expires, clients can no longer verify the server's identity and refuse to connect, so information cannot be sent. That was the case with Teams on Monday.
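An expired certificate is the kind of outage that routine monitoring can catch well in advance. The sketch below, which uses only Python's standard library, shows one way to read a server certificate's expiry date and compute how many days remain. The function names and the idea of an alert threshold are assumptions for illustration, not a description of how Microsoft or anyone else monitors certificates.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after, now):
    """Days between `now` and a certificate's notAfter string.

    `not_after` uses the format returned by getpeercert(),
    e.g. "Jan  5 09:34:43 2018 GMT". A negative result means expired.
    """
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).total_seconds() / 86400


def fetch_not_after(host, port=443):
    """Connect to `host` over TLS and return its certificate's notAfter field.

    With the default context, an already expired certificate makes the
    handshake fail with ssl.SSLCertVerificationError, which is itself a signal.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]
```

A scheduled job could call `days_until_expiry(fetch_not_after("example.com"), datetime.now(timezone.utc))` for each host it cares about (the host name here is a placeholder) and raise an alert when the result drops below a chosen threshold, such as 30 days.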

Looking to hire a '10x developer'? You can try, but it probably won't boost productivity

As Nichols notes in a blog, various studies since the 1968 one have estimated that top-performing developers are between four and 28 times more productive than average performers. But Nichols says his study found evidence to contradict the idea that some programmers are inherently far more skilled or productive than others. Performance differences are partly attributable to the skill of an individual, he writes, but each person's productivity also varies every day, depending on the task and other factors. "First, I found that most of the differences resulted from a few, very low performances, rather than exceptional high performance. Second, there are very few programmers at the extremes. Third, the same programmers were seldom best or worst," he explains. He argues that these findings should change the way a software project manager approaches recruitment. For example, they shouldn't necessarily just focus on getting the top programmers to boost organizational productivity, but find "capable" programmers and develop that talent. The study involved 494 students with an average of 3.7 years' industry experience. The students used the C programming language and were tasked with programming solutions through a set of 10 assignments.

5 steps to creating a strong data archiving policy

Suppose you decide to archive data that hasn't been modified or accessed in three years. That decision leads to a number of other questions related to data management. For example, should all the data that meets the three-year criterion be archived, or can some types of data simply be deleted rather than archived? Likewise, will data remain in your archives forever or will the data be purged at some point? You must have specific plans that address the exact circumstances under which data should be archived, as well as a plan for what will eventually happen to archived data. Many companies assume that having a data archiving policy means they have a deletion policy; they eventually wind up wishing they had spelled out the specifics of deletion and archival. ... Regulatory compliance is also critical. Not every organization is subject to federal regulatory requirements surrounding data retention policy, but those that are can face severe penalties if they fail to properly retain required data. Multinational companies also must be aware of varying regulatory policies.
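As an illustration of the three-year rule, the sketch below walks a directory tree and lists files that have been neither modified nor accessed within a given number of years. It is a simplified example under stated assumptions: the function name `archive_candidates` is made up, a year is approximated as 365 days, and access times are only meaningful on file systems that record them (some are mounted with access-time updates disabled). It only identifies candidates; deciding whether to archive or delete them is the policy question the article raises.

```python
import time
from pathlib import Path

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # approximation, ignores leap years


def archive_candidates(root, years=3, now=None):
    """Return files under `root` not modified or accessed in `years` years."""
    now = time.time() if now is None else now
    cutoff = now - years * SECONDS_PER_YEAR
    candidates = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        info = path.stat()
        # Use the more recent of the two timestamps so that a file read
        # recently is kept even if it has not been changed for years.
        if max(info.st_mtime, info.st_atime) < cutoff:
            candidates.append(path)
    return sorted(candidates)
```

Calling `archive_candidates("/path/to/data")` (a placeholder path) returns a sorted list of `Path` objects that a later step could move to archive storage or hand to a deletion review.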

Quote for the day:

"Leadership does not always wear the harness of compromise." -- Woodrow Wilson
