Forcing the SOC to change its approach to detection

Make no mistake, we are not talking about the application of AI in the usual sense when it comes to threat detection. Up until now, AI in this space has largely meant Large Language Models (LLMs) doing little more than summarising findings for incident response reporting. Instead, we are referring to the application of AI in its truer and broader sense, i.e. via machine learning, agents, graphs, hypergraphs and other approaches, which promise to make detection both more precise and more intelligible. Hypergraphs give us the power to connect hundreds of observations together to form likely chains of events. ... The end result is that the security analyst is no longer perpetually caught in firefighting mode. Rather than having to respond to hundreds of alerts a day, the analyst can use hypergraphs and AI to detect and string together long chains of alerts that share commonalities, and in so doing gain a complete picture of the threat. Adopting such an approach is expected to reduce alert volumes by up to 90 per cent. But it doesn’t end there. By applying machine learning to the chains of events, it will be possible to prioritise response, identifying which threats require immediate triage.
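To make the idea of chaining concrete, here is a minimal Python sketch; it is not the method of any particular vendor. It assumes each alert carries a set of entity strings (host, user, IP) and treats every entity as a hyperedge joining all the alerts that mention it, so the connected components of that hypergraph are the candidate chains. The `Alert` class, the 1-10 severity scale and the sum-of-severities ranking are hypothetical; the ranking is a placeholder for the machine-learning prioritisation described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    alert_id: str
    severity: int               # hypothetical 1-10 scale
    entities: frozenset[str]    # e.g. {"host:web01", "user:alice"}


def chain_alerts(alerts: list[Alert]) -> list[list[Alert]]:
    """Group alerts into chains. Each entity acts as a hyperedge joining every
    alert that mentions it; connected components of the hypergraph are chains."""
    parent = {a.alert_id: a.alert_id for a in alerts}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    def union(a: str, b: str) -> None:
        ra, rb = find(a), find(b)
        if ra != rb:
            parent[rb] = ra

    # Build the hyperedges: entity -> ids of all alerts that share it.
    hyperedges: dict[str, list[str]] = defaultdict(list)
    for alert in alerts:
        for entity in alert.entities:
            hyperedges[entity].append(alert.alert_id)

    # Every alert on a hyperedge ends up in the same component.
    for members in hyperedges.values():
        for other in members[1:]:
            union(members[0], other)

    chains: dict[str, list[Alert]] = defaultdict(list)
    for alert in alerts:
        chains[find(alert.alert_id)].append(alert)
    return list(chains.values())


def prioritise(chains: list[list[Alert]]) -> list[list[Alert]]:
    # Placeholder score (total severity) standing in for a learned model.
    return sorted(chains, key=lambda c: sum(a.severity for a in c), reverse=True)


alerts = [
    Alert("a1", 3, frozenset({"host:web01", "user:alice"})),
    Alert("a2", 5, frozenset({"user:alice", "ip:10.0.0.7"})),
    Alert("a3", 8, frozenset({"ip:10.0.0.7"})),
    Alert("a4", 2, frozenset({"host:db02"})),
]
ranked = prioritise(chain_alerts(alerts))
for chain in ranked:
    print([a.alert_id for a in chain], sum(a.severity for a in chain))
# ['a1', 'a2', 'a3'] 16
# ['a4'] 2
```

Four alerts collapse into two chains: a1 and a2 share a user, a2 and a3 share an IP. A production system would also weigh time windows and entity types, which this sketch ignores.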

Sole Source vs. Single Source Vendor Management

A sole source is a vendor that provides a specific product or service to your company. This vendor makes a specific widget or provides a service that is custom-tailored to your company’s needs. If there is an event at this sole source provider, your company can only wait until the event has been resolved, because no other vendor can produce your product or service quickly. They are the sole source, on a critical path to your operations. From an oversight and assessment perspective, this can be a difficult relationship in which to mitigate risk to your company. With sole source vendors, we as practitioners must take a deeper dive in our risk assessments. From a vendor audit perspective, we need to go into more detail on how robust their business continuity, disaster recovery, and crisis management programs are. ... Single source providers are vendors you have chosen to do business with for a product or service, even though other providers could supply the same product or service. An example of a single source provider is a payment processing company. There are many to choose from, but you chose one specific company to do business with. Moving to a new single source provider can be a daunting task that involves a new RFP process, process integration, assessments of the new provider’s business continuity program, etc.

Central Africa needs traction on financial inclusion to advance economic growth

Beyond the infrastructure, financial inclusion in CEMAC would leap forward if the right policies and platforms were in place. “The number two thing is that you have to have the right policies in place which are going to establish what would constitute acceptable identity authentication for identity transactions. So, be it for onboarding or identity transactions, you have to have a policy. Saying that we’re going to do biometric authentication for every transaction, no matter what value it is and what context it is, doesn’t make any sense,” Atick holds. “You have to have a policy that is basically a risk-based policy. And we have lots of experience in that. Some countries started with their own policies, and over time, they started to understand it. Luckily, there is a lot of knowledge now that we can share on this point. This is why we’re doing the Financial Inclusion Symposium at the ID4Africa Annual General Meeting next year [in Addis Ababa], because these countries are going to share their knowledge and experiences.”

“The symposium at the AGM will basically be on digital identity and finance. It’s going to focus on the stages of financial inclusion, and what are the risk-based policies countries must put in place to achieve the desired outcome, which is a low-cost, high-robustness and trustworthy ecosystem that enables anybody to enter the system and to conduct transactions securely.”
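Atick’s point that authentication strength should follow transaction value and context can be illustrated with a small Python sketch. Everything in it is hypothetical: the three `AuthLevel` tiers, the `LOW_VALUE_LIMIT` and `HIGH_VALUE_LIMIT` thresholds, the new-device signal and the choice of biometrics at onboarding are illustrative assumptions, not ID4Africa guidance or any country’s actual policy.

```python
from enum import Enum


class AuthLevel(Enum):
    PIN = 1        # low-friction check for everyday, low-value payments
    OTP = 2        # one-time code for medium risk
    BIOMETRIC = 3  # strongest, most expensive check


# Hypothetical thresholds in local currency units; a real policy would set these.
LOW_VALUE_LIMIT = 10_000
HIGH_VALUE_LIMIT = 500_000


def required_auth(amount: int, is_onboarding: bool = False,
                  new_device: bool = False) -> AuthLevel:
    """Choose an authentication level proportional to the transaction's risk."""
    if is_onboarding:
        return AuthLevel.BIOMETRIC   # assumption: bind identity strongly once
    if amount >= HIGH_VALUE_LIMIT:
        return AuthLevel.BIOMETRIC
    if amount >= LOW_VALUE_LIMIT or new_device:
        return AuthLevel.OTP         # context (a new device) raises the risk
    return AuthLevel.PIN


print(required_auth(500).name)                   # PIN
print(required_auth(500, new_device=True).name)  # OTP
print(required_auth(750_000).name)               # BIOMETRIC
```

The same small payment gets a different check depending on context, which is the difference between a risk-based policy and "biometrics for every transaction".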

2025 Data Outlook: Strategic Insights for the Road Ahead

By embracing localised data processing, companies can turn compliance into an advantage, driving innovations such as data barter markets and sovereignty-specific data products. Data sovereignty isn’t merely a regulatory checkbox; it’s about Citizen Data Rights. With most consumer data being unstructured and often ignored, organisations can no longer afford complacency. Prioritising unstructured data management will be crucial, as personal information needs to be identified, catalogued, and protected at a granular level from inception through intelligent, policy-based automation. ... Individuals are gaining more control over their personal information and expect transparency and digital trust from organisations. As a result, businesses will shift to self-service data management, enabling data stewards across departments to actively participate in privacy practices. This evolution moves privacy management out of IT silos and embeds it into daily operations across the organisation. Organisations that embrace this change will implement a “Data Democracy by Design” approach, incorporating self-service privacy dashboards, personalised data management workflows, and Role-Based Access Control (RBAC) for data stewards.
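Role-Based Access Control for data stewards can be sketched in Python as a mapping from roles to permissions plus a scoping rule. The role names, the permission names and the rule that stewards act only on their own department’s datasets are hypothetical assumptions for illustration; a real deployment would take these from the organisation’s identity provider and data catalogue.

```python
from dataclasses import dataclass

# Hypothetical role -> permission mapping for a self-service privacy dashboard.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"view_catalogue"},
    "data_steward": {"view_catalogue", "classify_pii", "approve_access_request"},
    "privacy_officer": {"view_catalogue", "classify_pii",
                        "approve_access_request", "delete_record"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    department: str


def can(user: User, permission: str, dataset_department: str) -> bool:
    """Grant an action only if the role allows it; everyone except privacy
    officers is further restricted to datasets owned by their own department."""
    allowed = permission in ROLE_PERMISSIONS.get(user.role, set())
    if user.role == "privacy_officer":
        return allowed
    return allowed and user.department == dataset_department


alice = User("alice", "data_steward", "marketing")
print(can(alice, "classify_pii", "marketing"))   # True
print(can(alice, "classify_pii", "finance"))     # False
print(can(alice, "delete_record", "marketing"))  # False
```

Keeping permissions on roles rather than on individuals is what lets stewards in each department take part without IT granting rights one person at a time.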

Defining & Defying Cybersecurity Staff Burnout

According to the van Dam article, burnout happens when an employee buries their experience of chronic stress for years. The people who burn out are often formerly great performers: perfectionists who exhibit perseverance. But if a person perseveres in a situation where they don't have control, they can experience the kind of morale-killing stress that, left unaddressed for months and years, leads to burnout. In such cases, "perseverance is not adaptive anymore and individuals should shift to other coping strategies like asking for social support and reflecting on one's situation and feelings," the article reads. ... Employees sometimes scoff at the wellness programs companies put out to keep people healthy. "Most 'corporate' solutions (use this app! attend this webinar!) felt juvenile and unhelpful," Eden says. And it does seem like many solutions fall into the same quick-fix category as home improvement hacks or dump dinner recipes. Christina Maslach's scholarly work attributed work stress to six main sources: workload, values, reward, control, fairness, and community. An even quicker assessment is promised by the Matches Measure from Cindy Muir Zapata.

Revolutionizing Cloud Security for Future Threats

Is it possible that embracing Non-Human Identities (NHIs) can help us bridge the resource gap in cybersecurity? The answer is a definite yes. The cybersecurity field is chronically understaffed, and for firms to successfully safeguard their digital assets, they must be equipped to handle a vast number of parallel tasks. This demands a new breed of solutions, such as NHIs and Secrets Security Management, that offer automation at an unprecedented scale. NHIs have the potential to take over tedious tasks like secret rotation, identity lifecycle management, and security compliance management. By automating these tasks, NHIs free up the cybersecurity workforce to concentrate on more strategic initiatives, improving the overall efficiency of your security operations. Moreover, AI-enhanced NHI Management platforms can provide better insight into system vulnerabilities and usage patterns, considerably improving context-aware security. Can the concept of Non-Human Identities extend its relevance beyond the IT sector? ... From healthcare institutions safeguarding sensitive patient data, financial services firms securing transactional data, and travel companies protecting customer data, to DevOps teams looking to maintain the integrity of their codebases, the strategic relevance of NHIs is widespread.
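As an illustration of the kind of tedious task NHI tooling can automate, the following Python sketch rotates any machine credential older than a maximum age. The `MachineIdentity` record, the 90-day `MAX_SECRET_AGE` and the in-memory inventory are hypothetical; a real system would read and write secrets through a secrets manager rather than a Python list. Only `secrets.token_urlsafe` comes from the Python standard library.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_SECRET_AGE = timedelta(days=90)  # hypothetical rotation policy


@dataclass
class MachineIdentity:
    name: str
    secret: str
    rotated_at: datetime


def rotate_stale_secrets(identities: list[MachineIdentity],
                         now: Optional[datetime] = None) -> list[str]:
    """Replace every secret older than MAX_SECRET_AGE and return the names of
    the identities that were rotated."""
    if now is None:
        now = datetime.now(timezone.utc)
    rotated = []
    for ident in identities:
        if now - ident.rotated_at > MAX_SECRET_AGE:
            ident.secret = secrets.token_urlsafe(32)  # new random credential
            ident.rotated_at = now
            rotated.append(ident.name)
    return rotated


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
fleet = [
    MachineIdentity("ci-deploy-bot", "old-secret", now - timedelta(days=120)),
    MachineIdentity("metrics-exporter", "fresh-secret", now - timedelta(days=10)),
]
print(rotate_stale_secrets(fleet, now=now))  # ['ci-deploy-bot']
```

Passing `now` explicitly keeps the function deterministic and easy to test; a scheduler would simply call it without the argument.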

Digital Transformation: Making Information Work for You

Digital transformation is changing the organization from one state to another through the use of electronic devices that leverage information. Oftentimes, this entails process improvement and process reengineering to convert business interactions from human-to-human to human-to-computer-to-human. Introducing a computer into human-to-human transactions leaves a digital breadcrumb behind. This digital record of the transaction is important in making digital transformations successful and is the key to how analytics can enable more successful digital transformations. In a human-to-human interaction, information is transferred from one party to another, but it generally stops there. With the introduction of the digital element in the middle, the data is captured, stored, and available for analysis, dissemination, and amplification. This is where data analytics shines. If an organization stops at data storage, it is missing the lion’s share of the potential value of a digital transformation initiative. Organizations that focus only on collecting data from all their transactions and sinking it into a data lake often find that their efforts are in vain. They end up with a data swamp where data goes to die, never realizing its potential value.

Secure and Simplify SD-Branch Networks


The traditional WAN relies on expensive MPLS connectivity and a hub-and-spoke architecture that backhauls all traffic through the corporate data centre for centralized security checks. This approach creates bottlenecks that interfere with network performance and reliability. In addition to users demanding fast and reliable access to resources, IoT applications need reliable WAN connections to leverage cloud-based management and big data repositories. ... To reduce complexity and appliance sprawl, SD-Branch consolidates networking and security capabilities into a single solution that provides seamless protection of distributed environments. It covers all critical branch edges, from the WAN edge to the branch access layer to a full spectrum of endpoint devices.

Breaking up is hard to do: Chunking in RAG applications

The most basic approach is to chunk text into fixed sizes. This works for fairly homogeneous datasets that use content of similar formats and sizes, like news articles or blog posts. It’s the cheapest method in terms of the amount of compute you’ll need, but it doesn’t take into account the context of the content that you’re chunking. That might not matter for your use case, but it might end up mattering a lot. You could also use random chunk sizes if your dataset is a heterogeneous collection of multiple document types. This approach can potentially capture a wider variety of semantic contexts and topics without relying on the conventions of any given document type. Random chunks are a gamble, though, as you might end up breaking content across sentences and paragraphs, leading to meaningless chunks of text. For both of these types, you can apply the chunking method over sliding windows; that is, instead of starting each new chunk at the end of the previous one, new chunks overlap the previous chunk and contain part of its content. This can better capture the context around the edges of each chunk and increase the semantic relevance of your overall system. The tradeoff is that it requires more storage and stores redundant information.
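A minimal Python sketch of the three strategies described above: fixed-size chunks, fixed-size chunks over a sliding window (via `overlap`), and random chunk sizes. It assumes chunk sizes are measured in characters for simplicity; many RAG pipelines count tokens instead, and the function names are illustrative rather than taken from any particular library.

```python
import random
from typing import Optional


def fixed_chunks(text: str, size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of `size` characters. With overlap > 0 each chunk
    starts `size - overlap` characters after the previous one (a sliding window),
    so neighbouring chunks share `overlap` characters."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):  # this chunk already reaches the end
            break
    return chunks


def random_chunks(text: str, min_size: int, max_size: int,
                  seed: Optional[int] = None) -> list[str]:
    """Split text into consecutive chunks whose lengths are drawn uniformly
    from [min_size, max_size]; the final chunk may be shorter."""
    if min_size < 1 or max_size < min_size:
        raise ValueError("need 1 <= min_size <= max_size")
    rng = random.Random(seed)
    chunks, start = [], 0
    while start < len(text):
        size = rng.randint(min_size, max_size)
        chunks.append(text[start:start + size])
        start += size
    return chunks


print(fixed_chunks("abcdefghij", 4))             # ['abcd', 'efgh', 'ij']
print(fixed_chunks("abcdefghij", 4, overlap=2))  # ['abcd', 'cdef', 'efgh', 'ghij']
print(random_chunks("abcdefghij", 2, 5, seed=7))
```

With `overlap=2`, the ten-character string is stored as 16 characters across four chunks instead of 10 across three, which is the storage and redundancy tradeoff of sliding windows. Note that neither function respects sentence boundaries, which is exactly the weakness the paragraph above warns about.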

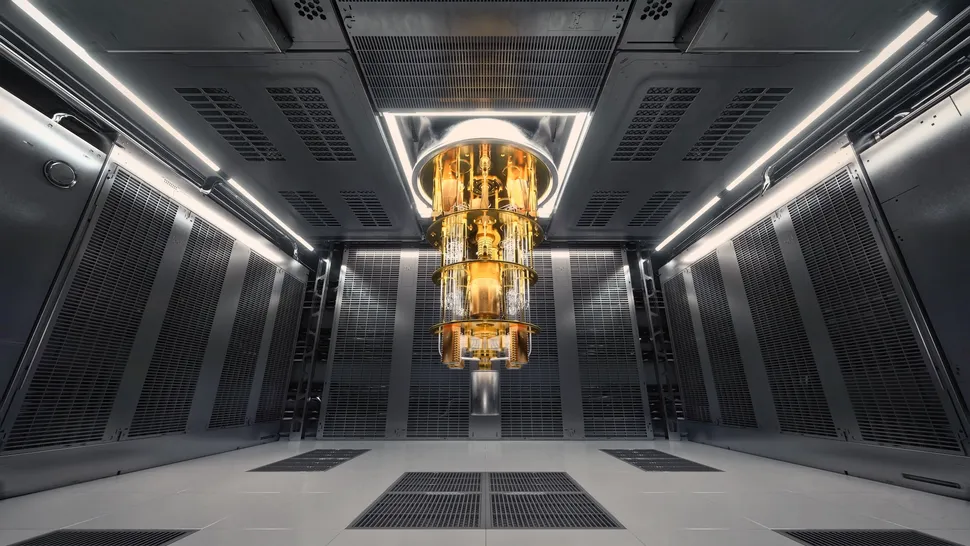

What is quantum supremacy?

A definitive achievement of quantum supremacy will require either a significant reduction in quantum hardware's error rates or a better theoretical understanding of what kind of noise classical approaches can exploit to help simulate the behavior of error-prone quantum computers, Fefferman said. But this back-and-forth between quantum and classical approaches is helping push the field forwards, he added, creating a virtuous cycle that is helping quantum hardware developers understand where they need to improve. "Because of this cycle, the experiments have improved dramatically," Fefferman said. "And as a theorist coming up with these classical algorithms, I hope that eventually, I'm not able to do it anymore." While it's uncertain whether quantum supremacy has already been reached, it's clear that we are on the cusp of it, Benjamin said. But it's important to remember that reaching this milestone would be a largely academic and symbolic achievement, as the problems being tackled are of no practical use. "We're at that threshold, roughly speaking, but it isn't an interesting threshold, because on the other side of it, nothing magic happens," Benjamin said. ... That's why many in the field are refocusing their efforts on a new goal: demonstrating "quantum utility," or the ability to show a significant speedup over classical computers on a practically useful problem.

Shift left security — Good intentions, poor execution, and ways to fix it

One of the first steps is changing the way security is integrated into development. Instead of a “gotcha”, after-the-fact approach, we need security to assist us as early as possible in the process: as we write the code. By guiding us while we’re still in ‘work-in-progress’ mode with our code, security can adopt a positive coaching and helping stance, nudging us to correct issues before they become problems and clutter our backlog. ... The security tools we use need to catch vulnerabilities early enough that nobody has to circle back to fix boomerang issues later. Very much in line with my previous point, detecting and fixing vulnerabilities as we code saves time and preserves focus. This also reduces the back-and-forth in peer reviews, making the entire process smoother and more efficient. By embedding security more deeply into the development workflow, we can address security issues without disrupting productivity. ... When it comes to security training, we need a more focused approach. Developers don’t need to become experts in every aspect of code security, but we do need to be equipped with the knowledge that’s directly relevant to the work we’re doing, when we’re doing it: as we code. Instead of broad, one-size-fits-all training programs, let’s focus on addressing the specific knowledge gaps we personally have.

Quote for the day:

“Whenever you see a successful person, you only see the public glories, never the private sacrifices to reach them.” -- Vaibhav Shah
