Beginner's Guide to Quantum Machine Learning

Whenever you think of the word "quantum," it might bring to mind an atom or a molecule. Quantum computers are built on a similar idea. In a classical computer, processing occurs at the level of bits. Quantum computers, by contrast, are governed by quantum physics, which provides a variety of tools for describing how particles such as atoms interact. In a quantum computer, the basic units of information are called "qubits" (we will discuss them in detail later). A qubit shows wave-like behavior: instead of being strictly 0 or 1 like a classical bit, it can exist in a superposition of both states. As a result, a register of n qubits is described by 2^n amplitudes, whereas n classical bits hold just one of 2^n values at a time.
Loss functions measure how accurate a machine learning model is. When we train a model and look at its predictions, we often find that not all of them are correct. A loss function is a mathematical expression whose value shows how far the model's predictions miss the target, and training aims to make that value as small as possible. A quantum computer also aims to reduce the loss function. Some quantum approaches, such as quantum annealing, rely on a property called quantum tunneling. Tunneling can let the search pass through the barriers between local minima of the loss landscape rather than having to climb over them. This can help the search reach regions where the loss is lowest, and where the model therefore performs best, potentially faster than a classical search would.
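
To make the idea of a loss function concrete, here is a minimal Python sketch of mean squared error (MSE), one common loss for numeric predictions. The text does not name a specific loss, so both the choice of MSE and the function name `mean_squared_error` are assumptions for illustration. The function averages the squared gaps between predictions and targets, so larger misses are penalised more heavily.

```python
from typing import Sequence


def mean_squared_error(predictions: Sequence[float], targets: Sequence[float]) -> float:
    """Average squared difference between predictions and targets.

    0.0 means every prediction hit its target exactly; larger values
    mean the model missed the target by more.
    """
    if len(predictions) != len(targets) or len(predictions) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    # Square each miss so that positive and negative errors do not cancel out.
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)
```

For example, `mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 2.0])` works out to (0.25 + 0.25 + 0) / 3, or about 0.167, and a perfect model would score 0. Training on classical or quantum hardware amounts to searching for the model parameters that drive this number down.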

How to Develop Microservices in Kubernetes

Iterating from local development to Docker Compose to Kubernetes has let us move our development environment forward efficiently as our needs changed. Each incremental step has delivered significant improvements in development cycle time and reduced developer frustration. As you refine your development process around microservices, think about ways to build on the tools and techniques you have already created. Give yourself time to experiment with a couple of approaches, and don't worry if you can't find a single one-size-fits-all system that is perfect for your shop. Maybe you can leverage your existing manifest files or Helm charts. Perhaps you can use your continuous deployment infrastructure, such as Spinnaker or ArgoCD, to help produce developer environments. If you have the time and resources, you could use the Kubernetes client libraries for your favorite programming language to build a developer CLI that lets developers manage their own environments. Building a development environment for sprawling microservices will be an ongoing effort. However you approach it, you will find that the time you invest in continuously improving your processes pays off in developer focus and productivity.
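
As a starting point for a developer CLI, the following Python sketch generates a Kubernetes Namespace manifest for one developer's environment and prints it as JSON, which `kubectl apply -f -` can read from standard input. The `dev` prefix, the `example.com/dev-env-owner` label key and the script name `devenv.py` are hypothetical choices and do not come from the original text. A fuller tool could use the official Kubernetes client library for Python to create the namespace directly instead of piping to `kubectl`.

```python
import json
import re
import sys

MAX_NAME_LEN = 63  # namespace names are DNS-1123 labels: at most 63 characters


def dev_namespace_manifest(developer: str, prefix: str = "dev") -> dict:
    """Build a Namespace manifest for one developer's personal environment.

    Assumes `prefix` is itself a valid DNS-1123 label fragment.
    """
    # DNS-1123 labels allow only lowercase alphanumerics and '-', so lowercase
    # the name and collapse every other run of characters into a single '-'.
    slug = re.sub(r"[^a-z0-9-]+", "-", developer.lower()).strip("-")
    if not slug:
        raise ValueError(f"cannot derive a namespace name from {developer!r}")
    # Enforce the length limit; the name must also end with an alphanumeric.
    name = f"{prefix}-{slug}"[:MAX_NAME_LEN].rstrip("-")
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Hypothetical label key, used to find and clean up dev environments later.
            "labels": {"example.com/dev-env-owner": slug[:MAX_NAME_LEN].rstrip("-")},
        },
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Print JSON, which `kubectl apply -f -` accepts on stdin.
    print(json.dumps(dev_namespace_manifest(sys.argv[1]), indent=2))
```

Running `python devenv.py "Alice Smith" | kubectl apply -f -` would create a `dev-alice-smith` namespace. Because the name is derived deterministically from the developer's name, each environment stays isolated and is easy to find again.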

Enabling the Digital Transformation of Banks with APIs and an Enterprise Architecture

The first category is internal and system APIs. Core banking systems are monolithic architectures, still based on mainframes and COBOL. They are legacy technologies and do not necessarily come out of the box with open APIs. Having internal and system APIs helps speed up the development of new microservices built on these legacy systems, or of services that use the legacy systems as back-ends. The second category is external APIs. These are APIs that connect a bank's back-end systems and services to outside providers; they form a service layer, which is necessary for using external services. For example, they might be used to obtain a credit rating or to validate an address. You don't want to perform these validations yourself when checking the validity of a customer record. Take the confirmation of postal codes in the U.S.: when creating a customer record, you use an API from your own system to call an external address validation service, which tells you whether the postal code is valid or not. You don't need your own internal resources to do that. The same obviously applies to credit ratings, which are information a bank cannot produce on its own. The third type of API, and probably the most interesting one, is public APIs that sit more on the service and front-end layers.
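
The postal-code example can be sketched as an internal API that wraps an external provider. In this minimal Python sketch, `check_customer_zip` rejects badly formatted US ZIP codes locally (five digits, optionally followed by a ZIP+4 extension) and delegates the actual existence check to an external service. `AddressValidationService` and its `is_valid_zip` method are a hypothetical interface standing in for whatever provider a bank actually uses. No real vendor API is implied.

```python
import re
from typing import Protocol

# US ZIP codes: five digits, optionally followed by "-" and four digits (ZIP+4).
ZIP_FORMAT = re.compile(r"\d{5}(?:-\d{4})?", re.ASCII)


class AddressValidationService(Protocol):
    """Hypothetical interface for an external address-validation provider."""

    def is_valid_zip(self, zip_code: str) -> bool: ...


def check_customer_zip(zip_code: str, service: AddressValidationService) -> bool:
    """Internal API called while creating a customer record.

    Malformed input is rejected locally; whether a well-formed ZIP code
    actually exists is left to the external service.
    """
    zip_code = zip_code.strip()
    if not ZIP_FORMAT.fullmatch(zip_code):
        return False  # no need to spend an external call on obviously bad input
    return service.is_valid_zip(zip_code)
```

Because the external provider sits behind an internal API, it can be swapped for another vendor or replaced in tests by a simple fake object with an `is_valid_zip` method.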

Can't Afford a Full-time CISO? Try the Virtual Version

For a fraction of the salary of a full-time CISO, companies can hire a vCISO: an outsourced security practitioner with executive-level experience who acts as a consultant and offers their time and insight to an organization on an ongoing (typically part-time) basis. A vCISO brings the same skill set and expertise as a conventional CISO. Hiring a vCISO on a part-time (or short-term) basis gives a company the flexibility to outsource upcoming IT projects as needed. A vCISO will work closely with senior management to establish a well-communicated information security strategy and roadmap, one that meets not only the requirements of the organization and its customers but also state and federal requirements. Most importantly, a vCISO can provide companies with unbiased strategic and operational leadership on security policies, guidelines, controls, and standards, as well as regulatory compliance, risk management, vendor risk management, and more. Because vCISOs are already experts, they save the organization time and money by reducing ramp-up time. Businesses also avoid the cost of benefits and the onboarding requirements of a full-time employee.

Why the insurance industry is ready for a data revolution

As it stands today, when a customer chooses a traditional motor insurance policy and is given a quote, the price is based on broad generalisations about their personal background, used as an approximate proxy for risk. This might include their age, gender, or nationality, and there have even been examples of people being charged hundreds of pounds more for policies because of their name. If this kind of profiling took place in other financial sectors, there would be an outcry, so why is insurance still operating with such an outdated model? Until now, there has been little innovation in the insurance sector and, as a result, little alternative in the way policies can be costed. But now, thanks to modern telematics, the industry finally has the ability to offer customers an accurate and fair policy based on their true risk on the road: how they actually drive. Telematics works by monitoring and gathering vehicle location and activity data via GPS, and today we can track speed, the number of hours spent in the vehicle, the times of day customers are driving, and even the routes they take. We also have the technology to consume and process swathes of this data in real time.
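
The kind of processing described above can be sketched in a few lines. The Python below turns a sequence of GPS pings (timestamp, latitude, longitude) into simple driving features: time on the road, time driven at night, and peak speed. Speed is estimated from the great-circle (haversine) distance between consecutive pings. The `Ping` type, the 23:00 to 05:00 night window and the feature names are assumptions for illustration and do not represent any insurer's actual rating model. Real systems would also need to filter out GPS noise.

```python
import math
from datetime import datetime
from typing import NamedTuple, Sequence

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


class Ping(NamedTuple):
    time: datetime
    lat: float  # degrees
    lon: float  # degrees


def haversine_km(a: Ping, b: Ping) -> float:
    """Great-circle distance between two pings, in kilometres."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def trip_summary(pings: Sequence[Ping], night_start: int = 23, night_end: int = 5) -> dict:
    """Aggregate consecutive pings into hours driven, night hours and peak speed.

    Simplification: each segment between two pings is attributed to the
    hour of its starting ping when deciding whether it counts as night driving.
    """
    hours = night_hours = max_kmh = 0.0
    for a, b in zip(pings, pings[1:]):
        dt_h = (b.time - a.time).total_seconds() / 3600
        if dt_h <= 0:
            continue  # skip duplicate or out-of-order pings
        max_kmh = max(max_kmh, haversine_km(a, b) / dt_h)
        hours += dt_h
        if a.time.hour >= night_start or a.time.hour < night_end:
            night_hours += dt_h
    return {"hours": hours, "night_hours": night_hours, "max_kmh": max_kmh}
```

For instance, two pings one hour apart, starting at 23:30, on the equator and one degree of longitude apart give one hour of night driving at roughly 111 km/h. Features like these, rather than age or name, are what a usage-based price could be built on.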

Foiling RaaS attacks via active threat hunting

One of the tactics that really stands out (and they're not the only attackers to do it, but they are one of the first) is making a copy of the victim's data and stealing it before executing the ransomware payload. The benefit for the attacker is that they can now leverage this data for additional income: they threaten to post the victim's sensitive information or customer data publicly. This is just another way to further extort the victim and to increase the amount of money they can demand. Now victims have to worry not only about having all their data taken from them, but also about actual public exposure. It's becoming a really big problem. Those sorts of tactics, along with using social media to taunt the victim and hosting their own infrastructure to store and post data, were not widely seen in traditional enterprise ransomware attacks before they appeared with Ransomware-as-a-Service. ... You can't trust that paying them is going to keep you protected. Organizations are in a bad spot when this happens, and they'll have to decide whether it's worth paying.

Sizing Up Synthetic DNA Hacking Risks

Rami Puzis, head of the Ben-Gurion University Complex Networks Analysis Lab and a co-author of the study, tells ISMG that the researchers decided to examine potential cybersecurity issues involving the synthetic bioengineering supply chain for a number of reasons. "As with any new technology, the digital tools supporting synthetic biology are developed with effectiveness and ease of use as the primary considerations," he says. "Cybersecurity considerations usually come in much later when the technology is mature and is already being exploited by adversaries. We knew that there must be security gaps in the synthetic biology pipeline. They just need to be identified and closed." The attack scenario described by the study underscores the need to harden the synthetic DNA supply chain with protections against cyberbiological threats, Puzis says. "To address these threats, we propose an improved screening algorithm that takes into account in vivo gene editing. We hope this paper sets the stage for robust, adversary resilient DNA sequence screening and cybersecurity-hardened synthetic gene production services when biosecurity screening will be enforced by local regulations worldwide."

Securing the Office of the Future

The vast majority of the things that we see every day are things that you never read or hear about. It's the proverbial iceberg diagram. That being said, in this interesting and unique time that we are in, there is a commonality (and Sean's actually already mentioned it once today): there are two major attack patterns that we're seeing over and over. These are not new things; they're just being very opportunistically exploited right now because of COVID and the remote work environment. They are ransomware and spear phishing or regular old phishing attacks. People are at a distance and expected to be working virtually today, and threat actors know that, so they're getting better and better at laying booby traps, if you will, in e-mail to get people to click on attachments and other sorts of links. ... Coincidentally, or perhaps not coincidentally, one of the characters in our comic is called Phoebe the Phisher, and we were very deliberate about creating that character. She has a harpoon, of course, which is for, you know, whale phishing. She has a spear for targeted spear phishing, and she also has a phishing rod for regular, spray-and-pray phishing.

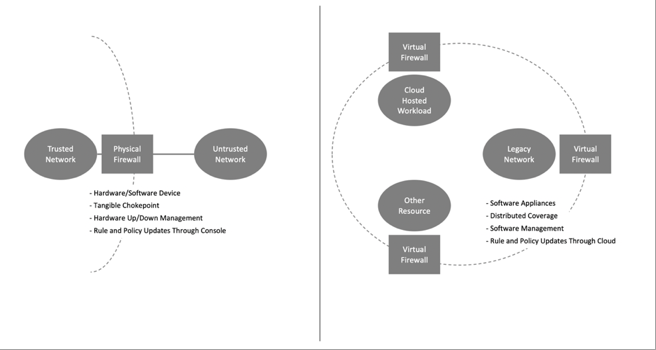

How to maximize traffic visibility with virtual firewalls

The biggest advantage of a virtual firewall, however, is its support for the evident dissolution of the enterprise perimeter. Even if an active edge DMZ is maintained through load-balanced operation, every enterprise is extending its operations, under a zero trust model, to more remote and virtual environments. Introducing support for virtual firewalls, even in traditional architectures, is thus an excellent forward-looking initiative. An additional consideration is that cloud-based functionality requires policy management for hosted workloads, and virtual firewalls are well suited to that role. Operating in a public, private, or hybrid virtual data center, virtual firewalls can protect traffic to and from hosted applications, including connections from the Internet or from tenants located within the same data center enclave. One of the most important functions of any firewall, whether physical or virtual, is inspecting traffic for evidence of anomalies, breaches, or other policy violations. It is here that virtual firewalls have emerged as particularly attractive options for enterprise security teams building out their threat protection.
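
Anomaly and breach detection is product-specific, but the policy check at the core of traffic inspection can be sketched. The Python below is a minimal first-match rule evaluator with a default-deny fallback. This is the ordering many firewalls use, for example iptables chains and Cisco ACLs. The `Rule` and `Flow` types and the example rules are hypothetical and are not tied to any particular virtual firewall product. The sketch covers only policy violations, not anomaly or breach detection.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Rule:
    action: str                 # "allow" or "deny"
    src: str                    # source network in CIDR form, e.g. "0.0.0.0/0"
    dst: str                    # destination network in CIDR form
    port: Optional[int] = None  # destination port; None matches any port


@dataclass(frozen=True)
class Flow:
    src: str   # source IP address
    dst: str   # destination IP address
    port: int  # destination port


def evaluate(flow: Flow, rules: Sequence[Rule], default: str = "deny") -> str:
    """Return the action of the first rule that matches the flow, else the default."""
    src = ipaddress.ip_address(flow.src)
    dst = ipaddress.ip_address(flow.dst)
    for rule in rules:  # order matters: the first match wins
        if (
            src in ipaddress.ip_network(rule.src)
            and dst in ipaddress.ip_network(rule.dst)
            and (rule.port is None or rule.port == flow.port)
        ):
            return rule.action
    return default  # default deny: anything not explicitly allowed is blocked
```

With the rules `deny 0.0.0.0/0 -> 10.0.1.0/24 port 22` followed by `allow 0.0.0.0/0 -> 10.0.1.0/24 port 443`, HTTPS to a hosted workload in 10.0.1.0/24 is allowed, while SSH, and anything aimed at other subnets, is denied.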

More than data

First of all, the system has to be told where to find the various clauses in a set of sample contracts. This can easily be done by marking the respective portions of text and labelling them with the names of the clauses they contain. On this basis we can train a classifier model that, when reading through a previously unseen contract, recognises what type of contract clause a given text section contains. With a 'conventional' (i.e. not DL-based) algorithm, a small number of examples should be sufficient to generate an accurate classification model that can partition the complete contract text into the clauses it contains. Once a clause has been identified within a contract in the training data, a human can identify and label the interesting information items it contains. Since the text of a single clause is relatively short, only a few examples are required to build an extraction model for the items in one particular type of clause. Depending on the linguistic complexity and variability of the formulations used, this model can be generated using ML, by writing extraction rules based on keywords, or, in exceptionally complicated situations, by applying natural language processing algorithms that dig deep into the syntactic structure of each sentence.
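
The two stages described above can be sketched in plain Python under some simplifying assumptions. The clause classifier is multinomial naive Bayes over a bag of words. This is one example of a conventional, non-DL algorithm that works with few examples; the original text does not say which algorithm it uses. The item extractor is a keyword-based regular expression for the notice period in a termination clause. The six training sentences, the label names and `extract_notice_days` are hypothetical toy data for illustration.

```python
import math
import re
from collections import Counter, defaultdict
from typing import Optional


def tokenize(text: str) -> list[str]:
    """Lowercase bag-of-words tokens (letters only)."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesClauseClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesClauseClassifier":
        self.label_counts = Counter(labels)      # number of examples per clause type
        self.word_counts = defaultdict(Counter)  # word frequencies per clause type
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.totals = {lbl: sum(c.values()) for lbl, c in self.word_counts.items()}
        return self

    def predict(self, text: str) -> str:
        n_examples = sum(self.label_counts.values())
        v = len(self.vocab)

        def log_posterior(label: str) -> float:
            score = math.log(self.label_counts[label] / n_examples)  # log prior
            for word in tokenize(text):
                # Smoothed likelihood: unseen words count as 0 + 1.
                score += math.log((self.word_counts[label][word] + 1) / (self.totals[label] + v))
            return score

        return max(self.label_counts, key=log_posterior)


# Keyword-based extraction rule for one clause type (termination): matches
# phrases such as "30 days' written notice" or "90 days prior notice".
NOTICE_RULE = re.compile(r"(\d+)\s+days?['\u2019]?\s+(?:prior\s+)?(?:written\s+)?notice", re.IGNORECASE)


def extract_notice_days(clause_text: str) -> Optional[int]:
    match = NOTICE_RULE.search(clause_text)
    return int(match.group(1)) if match else None


# Hypothetical labelled clauses, standing in for the human-marked sample contracts.
TRAIN_TEXTS = [
    "Either party may terminate this Agreement upon 30 days written notice.",
    "This Agreement may be terminated by either party for material breach.",
    "The Customer shall pay all invoices within 45 days of receipt.",
    "Payment of fees is due monthly in advance.",
    "This Agreement shall be governed by the laws of the State of New York.",
    "Any dispute is governed by and construed under the laws of England.",
]
TRAIN_LABELS = ["termination", "termination", "payment", "payment", "governing_law", "governing_law"]
```

With `clf = NaiveBayesClauseClassifier().fit(TRAIN_TEXTS, TRAIN_LABELS)`, the call `clf.predict("Invoices are payable within 30 days.")` returns `"payment"`. Only sections classified as `termination` would then be passed to `extract_notice_days`. Keeping a separate small rule per clause type reflects the point above: each clause is short, so a few examples or keywords go a long way.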

Quote for the day:

"You have achieved excellence as a leader when people will follow you everywhere if only out of curiosity." -- General Colin Powell
