API3: The Glue Connecting the Blockchain to the Digital World

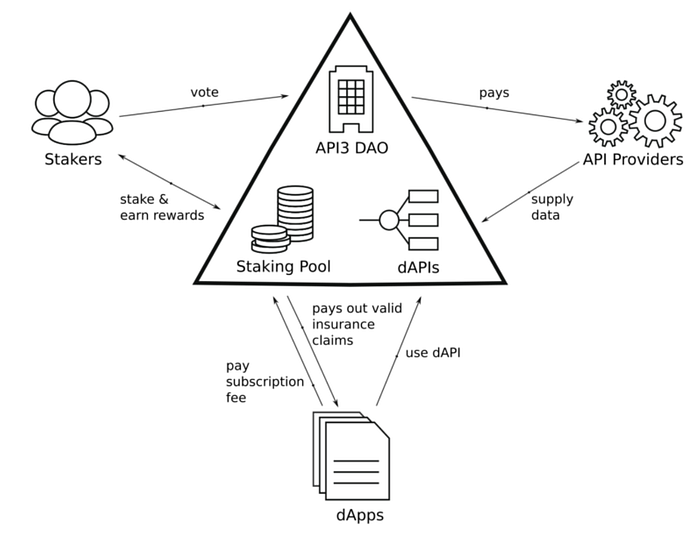

dAPIs are on-chain data feeds composed of aggregated responses from first-party (API provider-operated) oracles. This removes many of the vulnerabilities, unnecessary redundancies, and middleman taxes created by existing third-party oracle solutions. Using first-party oracles also leverages the off-chain reputation of the API provider (compare this to the nonexistent reputation of anonymous third-party oracles). See our article “First-Party vs Third-Party Oracles” for a more extended treatment of these issues. In addition, dAPIs are built for transparency: you know exactly where the data comes from. This helps ensure data quality, as well as the independence of data sources that mitigates skew in the aggregated results.

Rather than relying on oracle-level staking, which is impractical and arguably infeasible for reasons alluded to in this article, API3 has a staking pool. API3 token holders can provide stake to the protocol, and this stake backs insurance services that protect users from potential damages caused by dAPI malfunctions. Because the stake serves as collateral, participants share API3’s operational risk and are incentivized to minimize it. Staking in the protocol also grants stakers inflationary rewards and a share of the profits.
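
The excerpt doesn't say how a dAPI combines its first-party responses. The Python below is a minimal sketch that assumes a simple median, a common choice for oracle aggregation but not necessarily API3’s method. It shows why knowing where each response comes from matters. Tagging every answer with its provider lets the feed reject duplicate sources before aggregating, and the median keeps one skewed provider from dragging the result. `OracleResponse`, `aggregate_median`, `min_providers`, and the provider names are all hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class OracleResponse:
    """One answer from a first-party oracle (hypothetical shape)."""
    provider: str  # the API provider that operates this oracle
    value: float   # e.g. an asset price reported by that provider


def aggregate_median(responses: list[OracleResponse], min_providers: int = 3) -> float:
    """Combine first-party responses into one feed value.

    Assumption for illustration: median aggregation, not API3's documented method.
    """
    providers = {r.provider for r in responses}
    # Transparency: because sources are known, duplicates can be rejected outright.
    if len(providers) != len(responses):
        raise ValueError("duplicate provider: sources must be independent")
    if len(providers) < min_providers:
        raise ValueError(f"need at least {min_providers} providers, got {len(providers)}")
    # The median ignores a minority of outliers, so a single faulty or
    # manipulated provider cannot move the result arbitrarily far.
    return median(r.value for r in responses)


if __name__ == "__main__":
    feed = [
        OracleResponse("provider-a", 100.0),
        OracleResponse("provider-b", 101.0),
        OracleResponse("provider-c", 99.5),
        OracleResponse("provider-d", 100.5),
        OracleResponse("provider-e", 250.0),  # one skewed source
    ]
    print(aggregate_median(feed))  # 100.5
```

A mean over the same five values would be 130.2, pulled far off by the single outlier. This is why independent sources plus a robust aggregate matter more than the raw number of sources.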

Reconciling political beliefs with career ambitions

Data has been on the front lines of recent culture wars due to accusations of racial, gender, and other forms of socioeconomic bias perpetrated in whole or in part through algorithms. Algorithmic bias has become a hot-button issue in global society, a trend that has spurred many jurisdictions and organizations to institute a greater degree of algorithmic accountability in AI practices. Data scientists who’ve long been trained to eliminate biases from their work now find their practices under growing scrutiny from government, legal, regulatory, and other circles. Eliminating bias in the data and algorithms that drive AI requires constant vigilance, not only from data scientists but from people up and down the corporate ranks. As Black Lives Matter and similar protests have pointed out, data-driven algorithms can embed serious biases that harm demographic groups (defined by race, gender, age, religion, ethnicity, or national origin) in various real-world contexts. Much of the recent controversy surrounding algorithmic bias has focused on AI-driven facial recognition software. Biases in facial recognition applications are especially worrisome when the software is used to direct predictive policing programs, or when it enables abuse by law enforcement in urban areas with many disadvantaged minority groups.
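
The excerpt doesn’t name a specific way to check for bias. One simple screening measure compares the rate of favorable outcomes across groups. Under the “four-fifths rule” from U.S. employment-selection guidelines, a ratio between the lowest and highest group rates below 0.8 is treated as a sign of potential adverse impact. The sketch below computes that ratio. The function names and the audit data are hypothetical, and a single number like this is a starting point for vigilance, not proof that a model is fair.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Share of favorable decisions per demographic group.

    `outcomes` is an iterable of (group, selected) pairs, where `selected`
    is True when the model's decision was favorable (e.g. a loan approved).
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, selected in outcomes:
        totals[group] += 1
        favorable[group] += int(bool(selected))
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored over another
    return min(rates.values()) / highest


if __name__ == "__main__":
    # Hypothetical audit data: group A gets 8/10 favorable decisions, group B 5/10.
    decisions = [("A", i < 8) for i in range(10)] + [("B", i < 5) for i in range(10)]
    print(selection_rates(decisions))  # {'A': 0.8, 'B': 0.5}
    ratio = disparate_impact_ratio(decisions)
    # Four-fifths screening heuristic: flag ratios below 0.8 for review.
    print(f"ratio={ratio:.3f}, flagged={ratio < 0.8}")  # ratio=0.625, flagged=True
```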

Why Data Privacy Is Crucial to Fighting Disinformation

In essence, if you can create a digital clone of a person, you can predict his or her online behavior much more accurately. That’s a core part of the monetization model of social media companies, but it could also become a capability of adversarial states that acquire the same data through third parties, enabling much more effective disinformation. A new paper from the Center for European Policy Analysis, or CEPA, also out on Wednesday, observes that while there has been progress against some tactics that adversaries used in 2016, policy responses to the broader threat of micro-targeted disinformation “lag.” “Social media companies have concentrated on takedowns of inauthentic content,” wrote authors Alina Polyakova and Daniel Fried. “That is a good (and publicly visible) step but does not address deeper issues of content distribution (e.g., micro-targeting), algorithmic bias toward extremes, and lack of transparency. The EU’s own evaluation of the first year of implementation of its Code of Practice concludes that social media companies have not provided independent researchers with data sufficient for them to make independent evaluations of progress against disinformation.” Polyakova and Fried suggest the U.S. government make several organizational changes to counter foreign disinformation.

How to assess the transformation capabilities of intelligent automation

We’re talking about smart, multitasking robots that are increasingly trusted as catalysts at the core of digital work transformation strategies. This is because they effortlessly perform joined-up, data-driven work across multiple operating environments made up of complex, disjointed, difficult-to-modify legacy systems and manual workflows. And unlike other robots, they deliver work without interruption, automatically adjusting to obstacles such as different screens, layouts or fonts, application versions, system settings, permissions, and even language. These robots also uniquely solve the age-old problem of system interoperability by reading and understanding applications’ screens in the same way humans do. They’re repurposing the human interface as a machine-usable API, crucially without touching the underlying system programming logic. This ‘universal connectivity’ also means that robots can use all current and future technologies without the need for APIs or any form of system integration. ... This capability breathes new life into technology of any age and enables these robots to be continually augmented with the latest cloud, artificial intelligence, machine learning, and cognitive capabilities that are simply ‘dragged and dropped’ into newly designed work process flows.

Basics of the pairwise, or all-pairs, testing technique

All-pairs testing greatly reduces testing time, which in turn controls testing costs. Rather than running every combination of inputs, the QA team checks only a subset, chosen so that every pair of values for any two parameters appears in at least one test, and still achieves effective test coverage. This technique proves useful when there are simply too many possible configuration options and combinations to run through. Pairwise testing tools make this task even easier, and numerous open source and free tools exist to generate pairwise value sets. For these value sets to be effective, however, the tester must tell the tool how the application functions. With or without a pairwise testing tool, it's crucial for QA professionals to analyze the software and understand its function in order to create the most effective set of values. Pairwise testing is not an automatic win for every testing suite. Beware these factors that could limit the effectiveness of all-pairs testing: unknown interdependencies among variables within the software being tested; unrealistic value combinations, or ones that don't reflect the end user; defects that the tester can't see, such as ones that don't show up in the UI but write error messages to a log or other tracker; and tests that don't find defects in the back-end processing engines or systems.
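
To make the idea concrete, here is a minimal greedy all-pairs generator in Python. It is an illustrative sketch, not how any particular pairwise tool works. It enumerates the full Cartesian product as candidate tests, which only suits small examples, and it repeatedly picks the combination that covers the most value pairs not yet covered. The function names and the browser/OS/locale parameters are hypothetical.

```python
from itertools import combinations, product


def uncovered_pairs(params):
    """Every ((i, a), (j, b)) value pair for each two parameters i < j."""
    names = list(params)
    pairs = set()
    for i, j in combinations(range(len(names)), 2):
        for a in params[names[i]]:
            for b in params[names[j]]:
                pairs.add(((i, a), (j, b)))
    return pairs


def pairs_in(test):
    """Value pairs exercised by one test (a tuple of values in parameter order)."""
    return {((i, test[i]), (j, test[j])) for i, j in combinations(range(len(test)), 2)}


def all_pairs(params):
    """Greedy all-pairs: pick the combination covering the most uncovered
    pairs until every pair appears in at least one test."""
    names = list(params)
    remaining = uncovered_pairs(params)
    candidates = list(product(*(params[n] for n in names)))
    suite = []
    while remaining:
        # Every remaining pair occurs in some candidate, so each pick makes progress.
        best = max(candidates, key=lambda t: len(pairs_in(t) & remaining))
        suite.append(dict(zip(names, best)))
        remaining -= pairs_in(best)
    return suite


if __name__ == "__main__":
    params = {
        "browser": ["chrome", "firefox", "safari"],
        "os": ["windows", "macos", "linux"],
        "locale": ["en", "de"],
    }
    for test in all_pairs(params):
        print(test)
```

Exhaustive testing of these three parameters would take 18 runs. The greedy suite covers all 21 value pairs in nine, which is the minimum here because each of the nine browser/OS pairs needs its own test. Greedy selection doesn't guarantee a minimal suite in general. It trades optimality for simplicity, and it still can't catch the hidden interdependencies described above.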

How can companies secure a hybrid workforce in 2021?

Even before remote work was ubiquitous, accidental and malicious insider threats posed a serious risk to data security. As trusted team members, employees have unprecedented access to company and customer data, which, left unchecked, can undermine company, customer, and employee privacy. Remote work magnifies these risks. The pandemic’s impact on the job market has made malicious insiders more likely to capture or compromise data, whether to gain leverage with prospective employers or to generate extra income, and accidental insiders are especially prone to errors when working remotely. For example, many employees are blurring the lines between personal and professional technology, sharing or accessing sensitive data in ways that could undermine its integrity. In response, companies need to be proactive about establishing and enforcing clear data management guidelines. Communication is key here, and accountability through monitoring initiatives or other efforts will help keep data protected during the transition.

Working from home dilemma: How to manage your team, without the micro-management

Employees need to feel connected and trusted. Yet leaders who find it tough to trust their workforce might opt for micro-management; they'll continue to check up on their workers rather than checking in to see how they're getting on. Peterson says leaders should look to develop a management style that cultivates wellbeing. In uncertain times, employees need a sense of certainty from their leaders, and executives should ensure their staff feel engaged, not micro-managed. "It's more important than ever for managers to ask whether people are getting their ABCs: their autonomy, belonging and competence. Leaders who don't get that from their own boss will tend to overcompensate with the people they're managing; they'll micro-manage, and that's not helpful," he says. Lily Haake, head of the CIO Practice at recruiter Harvey Nash, agrees that leaders who micro-manage will struggle in the new normal: they won't get the best from their workers, and their effectiveness will suffer. Haake says managers who want to cultivate wellbeing need to pick up on subtle signs that all isn't well. Executives should adopt a considered approach, using a technique such as active listening, to spot potential issues before they become major problems.

The Fourth Industrial Revolution: Legal Issues Around Blockchain

Stakeholders in blockchain solutions will need to ensure that their products comply with a legal and regulatory framework that was not conceived with this technology in mind. From a commercial law standpoint, smart contracts must be designed so that they can be negotiated, executed and administered on a blockchain in a legal and compliant fashion. Liability needs to be addressed: what if the contract has been miscoded, or does not achieve the parties' intent? The parties must also agree on applicable law, jurisdiction, proper governance, dispute resolution, privacy and more. There are also public policy concerns that should be taken into account in shaping new laws, rules and regulations. For example, permissionless blockchains can be used for illegal purposes such as money laundering or circumventing competition laws. Participants may also be exposed to irresponsible actions on the part of the "miners" who create new blocks, and unfortunately there are currently no legal remedies for addressing corrupt miners. As lawyers and technologists ponder these issues, several solutions are being bandied about. One possible remedy involves a hybrid of permissioned and permissionless blockchains.

Why enterprises are turning from TensorFlow to PyTorch

PyTorch is seeing particularly strong adoption in the automotive industry, where it can be applied to pilot autonomous driving systems from the likes of Tesla and Lyft Level 5. The framework is also being used for content classification and recommendation in media companies and to help support robots in industrial applications. Joe Spisak, product lead for artificial intelligence at Facebook AI, told InfoWorld that although he has been pleased by the increase in enterprise adoption of PyTorch, there’s still much work to be done to gain wider industry adoption. “The next wave of adoption will come with enabling lifecycle management, MLOps, and Kubeflow pipelines and the community around that,” he said. “For those early in the journey, the tools are pretty good, using managed services and some open source with something like SageMaker at AWS or Azure ML to get started.” ... “The TensorFlow object detector brought memory issues in production and was difficult to update, whereas PyTorch had the same object detector and Faster-RCNN, so we started using PyTorch for everything,” Alfaro said. That switch from one framework to another was surprisingly simple for the engineering team, too.

Techno-nationalism isn’t going to solve our cyber vulnerability problem

Techno-nationalism is fueled by a complex web of justified economic, political and national security concerns. Countries engaging in “protectionist” practices essentially ban or embargo specific technologies, companies, or digital platforms under the banner of national security, but we are increasingly seeing these measures used to send geopolitical messages, punish adversary countries, and/or prop up domestic industries. Blanket bans give us a false sense of security. At the same time, when any hardware or software supplier is embedded within critical infrastructure, or on almost every citizen’s phone, we absolutely need to recognize the risk. We need to take seriously the concern that the supplier’s kit could contain backdoors that allow it to be privy to sensitive data or to facilitate a broader cyberattack. Or, as in the lingering case of TikTok, the concern is whether data collected on U.S. citizens via an entertainment app could be forcibly seized under Chinese law, enabling state-backed cyber actors to target and track federal employees or conduct corporate espionage.

Quote for the day:

"Stand up for what you believe, let your team see your values and they will trust you more easily." -- Gordon Tredgold
