The commodification of customer data privacy

B2B customers want personalized experiences, too. Aside from the data they

might input into a contact form, B2B buyers put plenty of data online for the

world to see. You can build a B2B buyer profile just by gleaning data from

their LinkedIn profile and their interactions online. Software exists that

enables businesses to automate the process by scraping data from public

sources. But it needs to be clear that this information is being collected and

stored in good faith. Businesses should limit the amount of data they collect

from customers, only using the data essential to their

operations. Customers should always be made aware of what data is being

collected, why, and how it will be used. This information should be easy to

find and understand, not obfuscated by legal jargon and fine print. Some good

examples of this are the “cookie” statements businesses place on their

websites under the EU’s General Data Protection Regulation (GDPR). Finally,

data must be stored in a secure environment, then erased when it is no longer

being used. The customer should be made aware of what policies and protections

are in place regarding the use of their data.
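
As a rough illustration of the two practices described above (collecting only essential data and erasing it once it is no longer used), here is a minimal Python sketch. The field names, the one-year retention period, and the helper functions are all hypothetical choices made for this example, not requirements taken from GDPR or from any particular product.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical allow-list: the only fields this imaginary business needs.
ESSENTIAL_FIELDS = {"name", "email", "company"}

# Assumed retention period; a real policy would set its own value.
RETENTION = timedelta(days=365)


def minimize(record):
    """Drop every field that is not on the allow-list."""
    return {key: value for key, value in record.items() if key in ESSENTIAL_FIELDS}


def purge_expired(store, now):
    """Keep only profiles used within the retention period.

    `store` maps a customer id to a (profile, last_used) pair.
    """
    return {
        customer_id: (profile, last_used)
        for customer_id, (profile, last_used) in store.items()
        if now - last_used <= RETENTION
    }


scraped = {
    "name": "Ada",
    "email": "ada@example.com",
    "company": "Acme",
    "home_address": "1 Example Street",
    "birthday": "1990-01-01",
}
now = datetime(2021, 1, 1, tzinfo=timezone.utc)
store = {
    1: (minimize(scraped), now - timedelta(days=10)),
    2: (minimize(scraped), now - timedelta(days=400)),
}
store = purge_expired(store, now)
print(sorted(store))  # only customer 1 is still within the retention period
```

An allow-list is used here rather than a block-list so that any newly scraped field is discarded by default until someone decides it is essential.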

Unethical AI unfairly impacts protected classes - and everybody else as well

Why is ethics so important now with AI? Wherever there is a social context,

anything involving people, ethical questions are necessary because it becomes

personal. Before big data and data science, researchers categorized people

into cohorts, or categories, such as tofu lovers with a college degree, or

evangelical Christians. There wasn't enough data available at the individual

level to draw inferences about a single person. Even when evaluating a single

person for credit or life insurance, the few available characteristics were

used to compare with a larger group. What is different today is an avalanche

of intimate, personal detail, exacerbated by a shift in sources, from internal

"operational exhaust" to a myriad of external, non-traditional data, such as

pictures and videos that are not even vetted. In the wrong hands, with the

wrong model, it can wreak havoc on people's lives. The capability to produce

errant models and inferences and put them in production at a scale that is

orders of magnitude greater than anything before compounds the potential

adverse outcomes. Today, your "digital footprint," information about you on

the internet, is so enormous that it is estimated the growth of your personal

data on the internet is two megabytes per second.

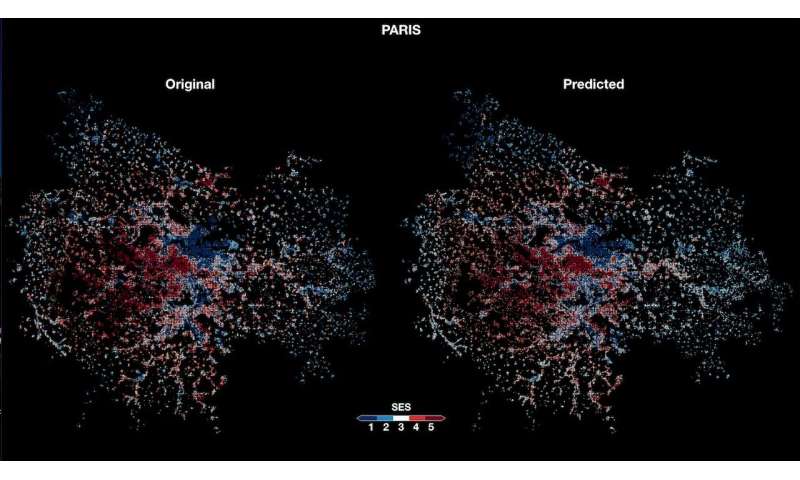

Using deep learning to infer the socioeconomic status of people in different urban areas

Researchers at the École Normale Supérieure (ENS) de Lyon and Central European

University (CEU) have recently developed a deep neural network that could be

used to study the socioeconomic inequalities that can arise from urbanization.

Their study, featured in Nature Machine Intelligence, confirms the potential

of convolutional neural networks (CNNs) for the in-depth analysis of

geographical regions. For many years, efficiently tracking urbanization, the

process through which an urban area becomes increasingly large and populated,

has proved fairly challenging. The development of increasingly advanced remote

sensing and satellite technologies, however, opened up new exciting

possibilities for the observation of specific geographical regions and

consequently for urbanization-related research. In their study, the

researchers at ENS Lyon and CEU tried to use deep learning algorithms to analyze

the images collected by these tools. "Our initial goal was actually to check

what was the finest spatial resolution that we could get our algorithm (i.e.,

predicting the average income of an area based on its satellite image) to work

with," Jacob Levy Abitbol and Marton Karsai, the researchers who carried out

the study, told TechXplore.

Digital transformation: 4 ways to help IT teams adapt to disruption

Prioritize user adoption and buy-in. That includes understanding

generational and workstyle differences of various users and establishing

clear metrics around adoption, usage, and engagement. Analyzing the depth of

communication and relationships that result from the collaborations will

reduce communication gaps and breakdowns and provide a clear indication that

the collaboration is working. ... IT leaders aiming for digital success must

better identify future skills requirements, push for increased investment

and uptake in skills acquisition, improve access to quality training to

support future skills, and create an agile skills development system that

can adapt to market needs to fuel a culture of lifelong learning. Sometimes

those answers can come from within. ... This tells us we need a

different kind of leadership, one in which leaders inspire rather than

require. ... Adaptive design allows the transformation strategy and resource

allocation to adjust over time. That includes flexible talent allocation, a

key differentiator in a transformation’s success, and ensuring resources are

earmarked for initiatives that span organizational silos. It’s also

important to practice the art of simplicity by valuing what works well

enough and accepting solutions that satisfy business needs – you can enhance

a simple solution later on.

FireEye, a Top Cybersecurity Firm, Says It Was Hacked by a Nation-State

The F.B.I. on Tuesday confirmed that the hack was the work of a state, but

it also would not say which one. Matt Gorham, assistant director of the

F.B.I. Cyber Division, said, “The F.B.I. is investigating the incident and

preliminary indications show an actor with a high level of sophistication

consistent with a nation-state.” The hack raises the possibility that

Russian intelligence agencies saw an advantage in mounting the attack while

American attention — including FireEye’s — was focused on securing the

presidential election system. At a moment that the nation’s public and

private intelligence systems were seeking out breaches of voter registration

systems or voting machines, it may have been a good time for those Russian

agencies, which were involved in the 2016 election breaches, to turn their

sights on other targets. The hack was the biggest known theft of

cybersecurity tools since those of the National Security Agency were

purloined in 2016 by a still-unidentified group that calls itself the

ShadowBrokers. That group dumped the N.S.A.’s hacking tools online over

several months, handing nation-states and hackers the “keys to the digital

kingdom,” as one former N.S.A. operator put it.

Dealing with Remote Team Challenges

Most of us are social creatures who enjoy the company of others. The concept

of coming together to achieve a common goal isn’t necessarily displaced by

remote or distributed work, but it can be trickier. There are

opportunities for asynchronous communication, increased productivity through

"flow" or uninterrupted time, and reduced travel and asset management costs.

On the other hand, there are the challenges of equitable access, ensuring

adequate resources and tooling as well as the need to address social

isolation and the issue of trust. What seems to be happening more and more

though is the shift away from a hierarchical structure to a more neural one

with teams becoming smaller, more agile and cross-functional, as suggested

by the May 2020 McKinsey Report. Mullenweg’s five stages of remote

working suggest that those teams that have moved beyond trying to replicate

the office model to be remote-first and truly asynchronous are edging closer

to Nirvana, a state where distributed teams would consistently perform

better than any in-person team. At this point, the creativity, energy,

health and productivity of the team are at their peak with individuals

performing at their highest level.

CIO interview: John Davison, First Central Group

“Intelligent automation means so much more for us than an efficiency tool,”

says Davison. “We are building an entirely new technical competency into our

business, so that it becomes part of our DNA. This not only changes

operational execution but, importantly, changes the management mindset about

the art of the possible and strategic decision-making.” The automated

renewal process is another area where Blue Prism has been deployed. With the

support of Blue Prism’s partner, IT and automation consultancy T-Tech, the

First Central team can check the issuance of more than 3,000 renewal

invitations for accuracy daily in just two hours. The new process verifies each

renewal notice, removing the need for costly, time-intensive manual work

downstream to correct anomalies and reducing the risk of a regulatory

incident. Along with driving operational efficiencies, Davison

believes RPA also boosts business confidence. “Risk mitigation is a lot more

intangible, but can measure the cost of distraction and can measure our

effectiveness from a robotics perspective,” he says. Davison’s team has

established a robotics capability for the business. “It is not my

job to close down operational risk,” he says.

The best programming language to learn now

The typed-language lovers are smart and they write good code, but if you

think your code is good enough to run smoothly without the extra information

about the data types for each variable, well, Python is ready for you. The

computer can figure out the type of the data when you store it in a

variable. Why make extra work for yourself? Note that this freewheeling

approach may be changing, albeit slowly. The Python documentation announces

that the Python runtime does not enforce function and variable type

annotations but they can still be used. Perhaps in time adding types will

become the dominant way to program in the language, but for now it’s all

your choice. ... If you’re writing software to work with data, there’s a

good chance you’ll want to use Python. The simple syntax has hooked many

scientists, and the language has found a strong following in the labs around

the country. Now that data science is taking hold in all layers of the

business world, Python is following. One of the best inventions for creating

and sharing interactive documents, the Jupyter Notebook, began with the

Python community before embracing other languages.
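
To make the point about optional typing concrete, here is a small Python sketch showing that the runtime infers types on assignment and records annotations without enforcing them. The function `double` and the variable names are made up for this illustration.

```python
def double(value: int) -> int:
    """Annotated to take and return an int; the runtime does not check this."""
    return value * 2


count = 21         # no annotation: Python works out that this is an int
label: str = "ab"  # optional variable annotation, also not enforced

print(type(count).__name__)    # int
print(double(count))           # 42
print(double(label))           # abab: a str slips through despite the int hint
print(double.__annotations__)  # the hints are stored for tools to inspect
```

The mismatched call would only be flagged by an external static checker run separately from the program; the interpreter itself runs it without complaint.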

Millions of IoT Devices at Risk From TCP/IP Stack Flaws

The research is a continuation of Forescout's exploration of TCP/IP stacks.

In June, Forescout revealed the so-called Ripple20 flaws in a single but

widely used TCP/IP stack made by an Ohio-based company, Treck. This time

around, Forescout broadened its research into more types of TCP/IP stacks.

The stacks enable basic network communication. Software developers don't

develop their own but instead use off-the-shelf open-source stacks in their

products or forks of those projects. "We discovered...33 vulnerabilities in

four of seven [TCP/IP] stacks that we analyzed," Costante says. The flaws

were found in uIP, FNET, PicoTCP and Nut/Net. Forescout also examined lwIP,

CycloneTCP and uC/TCP-IP but didn't find any of the most common coding

errors. But Forescout says that doesn't mean those TCP/IP stacks are

necessarily free of problems. Many of the issues are centered around Domain

Name System functionality. "We find that the DNS, TCP and IP sub-stacks are

the most often vulnerable," Forescout says in its report. "DNS, in

particular, seems to be vulnerable because of its complexity." Brad Ree, who

is CTO of the consultancy ioXt and board member at the ioXt Alliance, says

it is concerning to see the IPv6 vulnerabilities in Forescout's findings.

How Kali Linux creators plan to handle the future of penetration testing

The Kali Linux distribution, designed specifically for penetration testing and

digital forensics, is still offered free of charge. Under her leadership,

OffSec has formed a dedicated Kali team and made quarterly releases since

January 2019, which have received positive reviews from the community. “Kali

and other projects like Exploit Database, the largest collection of exploits

and vulnerabilities on the internet, keep us uniquely in tune with the needs

of the security community and continue to inform our company direction,” she

explained. But the thing she’s most proud of is that OffSec has become a

company with a clear set of well-defined core company values: family, passion,

integrity, community and innovation. “We live by these values as we scale,

hire and operate. As a CEO, I found my own style through the support of our

team members: have the courage to be authentic and vulnerable. We have

cultivated an environment to embrace and practice a growth mindset, build

vulnerability-based trust, and empower and enable our team to do their best.

My job as CEO is about how to make our people happier in ways I or OffSec can

influence.”

Quote for the day:

"Success consists of going from failure to failure without loss of enthusiasm." -- Winston Churchill
