How foundation agents can revolutionize AI decision-making in the real world

Some of the key characteristics of foundation models can help create foundation

agents for the real world. First, LLMs can be pre-trained on large unlabeled

datasets from the internet to gain a vast amount of knowledge. Second, the

models can use this knowledge to quickly align with human preferences and

specific tasks. ... Developing foundation agents presents several challenges

compared to language and vision models. The information in the physical world is

composed of low-level details instead of high-level abstractions. This makes it

more difficult to create unified representations for the variables involved in

the decision-making process. There is also a large domain gap between different

decision-making scenarios, which makes it difficult to develop a unified policy

interface for foundation agents. ... However, it can make the model increasingly

complex and uninterpretable. While language and vision models focus on

understanding and generating content, foundation agents must be involved in the

dynamic process of choosing optimal actions based on complex environmental

information.

Soon, LLMs Can Help Humans Communicate with Animals

Understanding non-human communication can be significantly aided by the insights

provided by models like OpenAI’s GPT-3 and Google’s LaMDA, which are examples of

such generative AI tools. ESP has recently developed the Benchmark for Animal

Sounds, or BEANS for short, the first-ever benchmark for animal vocalisations.

It established a standard against which to measure the performance of machine

learning algorithms on bioacoustics data. On the basis of self-supervision, it

has also created the Animal

Vocalisation Encoder, or AVES. This is the first foundational model for animal

vocalisations and can be applied to many other applications, including signal

detection and categorisation. The nonprofit is just one of many groups that have

recently emerged to translate animal languages. Some organisations, like Project

Cetacean Translation Initiative (CETI), are dedicated to attempting to

comprehend a specific species — in this case, sperm whales. CETI’s research

focuses on deciphering the complex vocalisations of these marine mammals.

DeepSqueak is another machine learning technique developed by University of

Washington researchers Kevin Coffey and Russell Marx, capable of decoding rodent

chatter.

AI supply is way ahead of AI demand

We’ve been in a weird wait-and-see moment for AI in the enterprise, but I

believe we’re nearing the end of that period. Surely the boom-and-bust economics

that Cahn highlights will help make AI more cost-effective, but ironically, the

bigger driver may be lowered expectations. Once enterprises can get past the

wishful thinking that AI will magically transform the way they do everything at

some indeterminate future date, and instead find practical ways to put it to

work right now, they’ll start to invest. No, they’re not going to write $200

billion checks, but it should pad the spending they’re already doing with their

preferred, trusted vendors. The winners will be established vendors that already

have solid relationships with customers, not point solution aspirants. Like

others, The Information’s Anita Ramaswamy suggests that “companies [may be]

holding off on big software commitments given the possibility that AI will make

that software less necessary in the next couple of years.” This seems unlikely.

More probable, as Jamin Ball posits, we’re in a murky economic period and AI has

yet to turn into a tailwind.

How AI-powered attacks are accelerating the shift to zero trust strategies

After all, a lack of in-house expertise and adequate budget are both largely

within an organization’s control through funding for resources, tools, and

training. So, if a gap exists at the top, help your senior leadership and board

make the critical linkage between zero trust and strong corporate governance. An

ever-intensifying threat landscape means senior leadership teams and boards have

a duty of care to make the right investments and provide the strategic guidance

and oversight to help keep the organization and its stakeholders safe. As

further motivation to make this strategic link to zero trust, federal agencies

are continuing efforts to hasten breach disclosures and hold executives liable

for security and data privacy incidents. Beyond that, it is about sourcing and

retaining top tech talent, which speaks to the need to build and maintain an

inclusive company culture with continuous training and development opportunities

for technical teams. Ensuring security teams are inclusive of neurodiverse

talent, for example, is important for encouraging the diverse ways of thinking

needed to spot and curtail novel AI-powered attack strategies.

Cryptographers Discover a New Foundation for Quantum Secrecy

Working together, the four researchers quickly proved that Kretschmer’s state

discrimination problem could still be intractable even for computers that could

call on this NP oracle. That means that practically all of quantum cryptography

could remain secure even if every problem underpinning classical cryptography

turned out to be easy. Classical cryptography and quantum cryptography

increasingly seemed like two entirely separate worlds. The result caught Ma’s

attention, and he began to wonder just how far he could push the line of work

that Kretschmer had initiated. Could quantum cryptography remain secure even

with more outlandish oracles — ones that could instantly solve computational

problems far harder than those in NP? “Problems in NP are not the hardest

classical problems one can think about,” said Dakshita Khurana, a cryptographer

at the University of Illinois, Urbana-Champaign. “There’s hardness beyond that.”

Ma began brainstorming how best to approach that question, together with Alex

Lombardi, a cryptographer at Princeton University, and John Wright, a quantum

computing researcher at the University of California, Berkeley.

Data center challenges in the German market

The potential for the German data center industry to meet growing demands is

substantial, but there are hurdles that should not be underestimated. For

instance, the tightening of legal regulations and technological barriers poses a

risk that expansion efforts may shift focus to other European countries. In

particular, the proposed Energy Efficiency Act presents a significant challenge.

It mandates that data center operators supply their surplus heat to external

customers, particularly local authorities and district heating providers.

Ultimately, all operators will have to achieve the same decarbonization targets,

and successful waste heat utilization requires seamless collaboration among all

parties involved. Although the first district heating projects are already being

implemented in urban areas, there is limited interest in connecting older

high-calorific district heating networks to data centers with low-calorific

surplus heat. ... Additional challenges in developing new projects include the

scarcity of suitable land and renewable energy sources, as well as a lack of

grid capacity in top-tier regions. As a result, future capacities can only be

planned medium to long term.

How to Attract the Right Talent for Your Engineering Team

As humans, we generally stick with (and hire) what we already know, but our

unconscious biases can hinder creativity and hurt the company’s bottom line if

left unchecked. The best way to prevent this is by including different types of

people — from various departments and experience levels — in the interview

process. Anyone we hire must have the ability to operate in a cross-functional

manner, so it makes sense that the people they’ll be interacting with are

included in the process. ... When assessing potential candidates, look for

people who understand that they don’t always need to figure everything out

themselves versus those who sell themselves as the best at their craft. This

signals an aptitude for continued learning and problem-solving, as well as a

willingness to collaborate in a team setting. Other good signs to look for

include folks who talk about outcomes instead of just outputs, and acknowledge

the importance of cross-functional collaboration in achieving outcome-driven

success. ... Mentorship is a key ingredient for nurturing top talent, and it has

the added benefit of increasing employee retention.

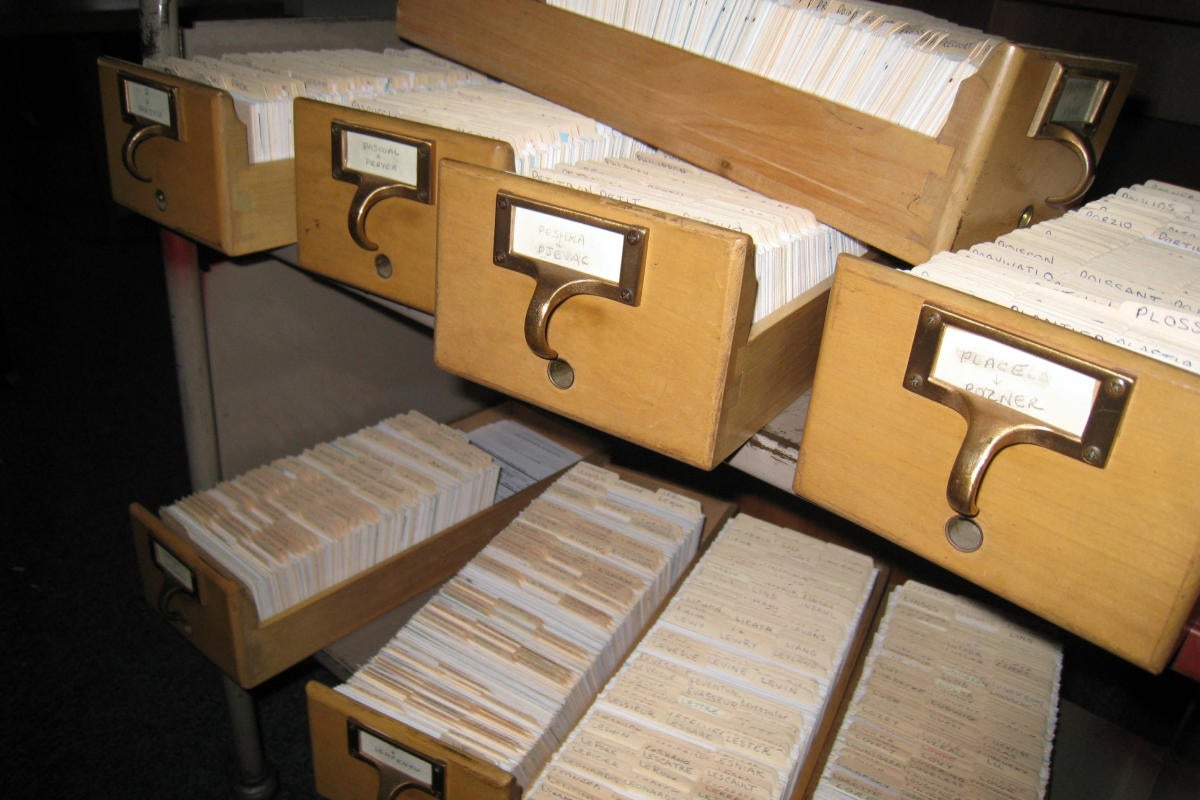

How data champions can boost regulatory compliance

At the heart of successful compliance are people. By building on a company’s

organisational design and working closely with employees to make them ‘data

champions’, organisations can empower staff to take responsibility for adherence.

Too often companies place the responsibility on one person or department to

ensure compliance. However, data champions working in specific departments

throughout an organisation can have a much better overview of where the risk

lies and what needs to be implemented to close vulnerabilities. Making

compliance a part of everyday life, or as it’s sometimes known, ‘data protection

by design and default’, means that it becomes a much more manageable task,

rather than a daunting one. Alongside this, implementing a solution that can

help manage the policies brought in to deal with data protection risks (and also

keep a record of who owns the policies as well as, crucially, who has read and

understood the policies) means that suddenly companies have a more accurate and

comprehensive overview of how the company sits in terms of its adherence to

regulation.

Beyond Chatbots: Meet Your New Digital Human Friends

Digital humans are important players in virtual and augmented reality

environments, says Matt Aslett, a director with technology research and advisory

firm ISG via email. "They are also used in gaming and animation and are being

deployed as interactive customer service assistants, providing real-time

conversational interfaces to access help and information in multiple industries,

including retail and healthcare." ... Tomik believes there are times when people

may feel more comfortable talking to a digital human than a real person, such as

when seeking advice on discomforting issues, including addiction, anger

management, relationship difficulties, and other deeply personal topics. "It

allows people to feel comfortable asking for help without judgment," he notes.

... Digital human technology is evolving rapidly, but it's still far from being

a complete replacement for human-to-human interaction. "Like AI chatbot

interfaces, a digital human interface may struggle to detect nuanced

communication traits, such as sarcasm, emotion, and deception, and may be

unsuitable for dealing with complex and critical user requests," Aslett says.

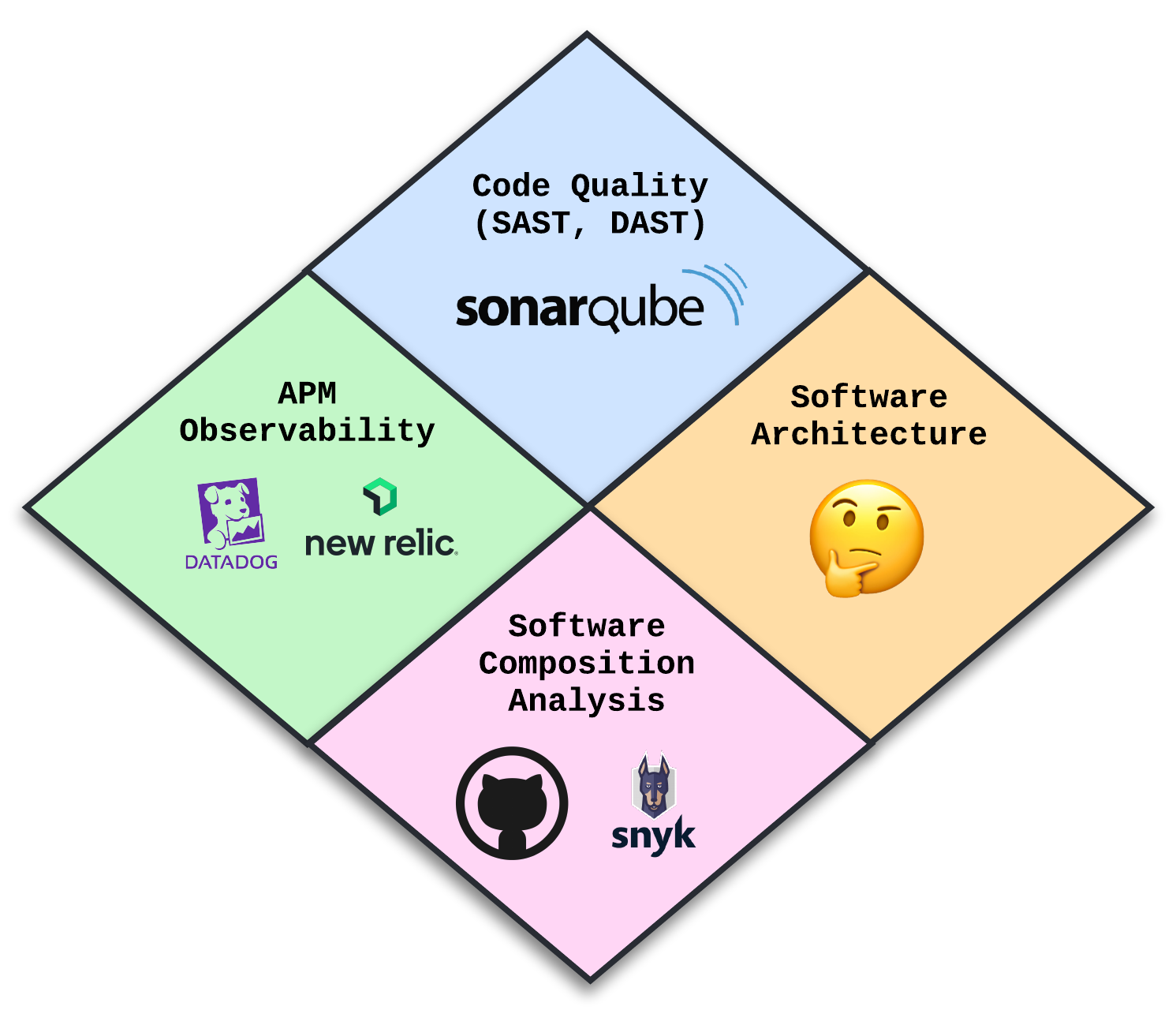

Navigating Data Readiness for Generative AI

Technical challenges posed by data readiness for generative AI include:

Insufficient data preparation: Anonymizing data is especially important for

health and finance applications, but it also reduces an organization’s liability

and helps it meet compliance requirements. Labeling the data is a form of

annotation that identifies its context, sentiment, and other features for NLP

and other uses. ... Finding the right size LLMs: Smaller models help companies

reduce their resource consumption while making the models more efficient, more

accurate, and easier to deploy. Organizations may start with large models for

proof of concept and then gradually reduce their size while testing to ensure

the model’s results remain accurate. ... Retrieval-augmented generation: This AI

framework supplements the LLM’s internal representation of information with

external sources of knowledge. ... Overcoming data silos: Data silos prevent data

the model needs from being discovered, introduce incomplete data sets, and

result in inaccurate reports while also driving up data-management costs.

Preventing data silos entails identifying disconnected data, creating a data

governance framework, promoting collaboration across teams, and establishing

data ownership.
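
The retrieval-augmented generation idea mentioned above can be illustrated with a minimal sketch. It is not taken from the excerpted article: the function names (`tokenize`, `overlap_score`, `retrieve`, `build_prompt`) are hypothetical, and simple word overlap stands in for the embedding-based search a real system would more likely use. The point is only to show external text being selected and placed in front of the model alongside the question.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, document: str) -> int:
    """Count the tokens shared by query and document (multiset intersection)."""
    shared = Counter(tokenize(query)) & Counter(tokenize(document))
    return sum(shared.values())


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents with the highest overlap score."""
    ranked = sorted(documents, key=lambda doc: overlap_score(query, doc), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Assemble a prompt that places retrieved context before the question.

    The returned string would be sent to whichever LLM is in use; no model
    call is made here.
    """
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

Calling `build_prompt("How long is the refund window?", knowledge_base)` on a small list of policy sentences would produce a prompt whose context section holds the best-matching sentences. Keeping retrieval separate from prompt assembly means the scoring function can later be swapped for a vector search without touching the rest.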

Quote for the day:

"Sometimes it takes a good fall to

really know where you stand." -- Hayley Williams
