Should Your Organization Use a Hyperscaler Cloud Provider?

Vendor lock-in is perhaps the biggest hyperscaler pitfall. "Relying too heavily

on a single hyperscaler can make it difficult to move workloads and data between

clouds in the future," Inamdar warns. Proprietary services and tight

integrations with a particular hyperscaler cloud provider's ecosystem can also

lead to lock-in challenges. Cost management also requires close scrutiny.

"Hyperscalers’ pay-as-you-go models can lead to unexpected or runaway costs if

usage isn't carefully monitored and controlled," Inamdar cautions. "The massive

scale of hyperscaler cloud providers also means that costs can quickly

accumulate for large workloads." Security and compliance are additional

concerns. "While hyperscalers invest heavily in security, the shared

responsibility model means customers must still properly configure and secure

their cloud environments," Inamdar says. "Compliance with regulatory

requirements across regions can also be complex when using global hyperscaler

cloud providers." On the positive side, hyperscaler availability and durability

levels exceed almost every enterprise's requirements and capabilities, Wold

says.
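
The warning about runaway pay-as-you-go costs can be made concrete with a small monitoring sketch. The example below is only an illustration under stated assumptions: the daily cost figures, the budget, and the names `BudgetStatus` and `project_month_end` are hypothetical and are not taken from any provider's billing API. It extrapolates month-to-date spend linearly and flags when the projection exceeds the budget.

```python
from dataclasses import dataclass


@dataclass
class BudgetStatus:
    spent: float        # month-to-date spend
    projected: float    # linear month-end projection
    over_budget: bool   # True when the projection exceeds the budget


def project_month_end(daily_costs, days_in_month, budget):
    """Linearly extrapolate month-to-date spend to a month-end total."""
    if not daily_costs:
        raise ValueError("need at least one day of cost data")
    spent = sum(daily_costs)
    # Average daily spend so far, scaled to the full month.
    projected = spent / len(daily_costs) * days_in_month
    return BudgetStatus(spent, projected, projected > budget)


# Hypothetical figures: a usage spike on day three pushes the projection up.
status = project_month_end([100.0, 100.0, 400.0], days_in_month=30, budget=5000.0)
if status.over_budget:
    print(f"Projected {status.projected:.2f} exceeds budget; review usage.")
```

A linear projection is deliberately simple. In practice the daily figures would come from whatever cost export the provider offers, and a team might prefer a rolling average so that a single spike does not dominate the estimate.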

Innovate Through Insight

The common core of both strategy and innovation is insight. An insight results

from the combination of two or more pieces of information or data in a unique

way that leads to a new approach, new solution, or new value. Mark Beeman,

professor of psychology at Northwestern University, describes insight in the

following way: “Insight is a reorganization of known facts taking pieces of

seemingly unrelated or weakly related information and seeing new connections

between them to arrive at a solution.” Simply put, an insight is learning that

leads to new value. ... Innovation is the continual hunt for new value;

strategy is ensuring we configure resources in the best way possible to

develop and deliver that value. Strategic innovation can be defined as the

insight-based allocation of resources in a competitively distinct way to

create new value for select customers. Too often, strategy and innovation are

approached separately, even though they share a common foundation in the form

of insight. As authors Campbell and Alexander write, “The fundamental building

block of good strategy is insight into how to create more value than

competitors can.”

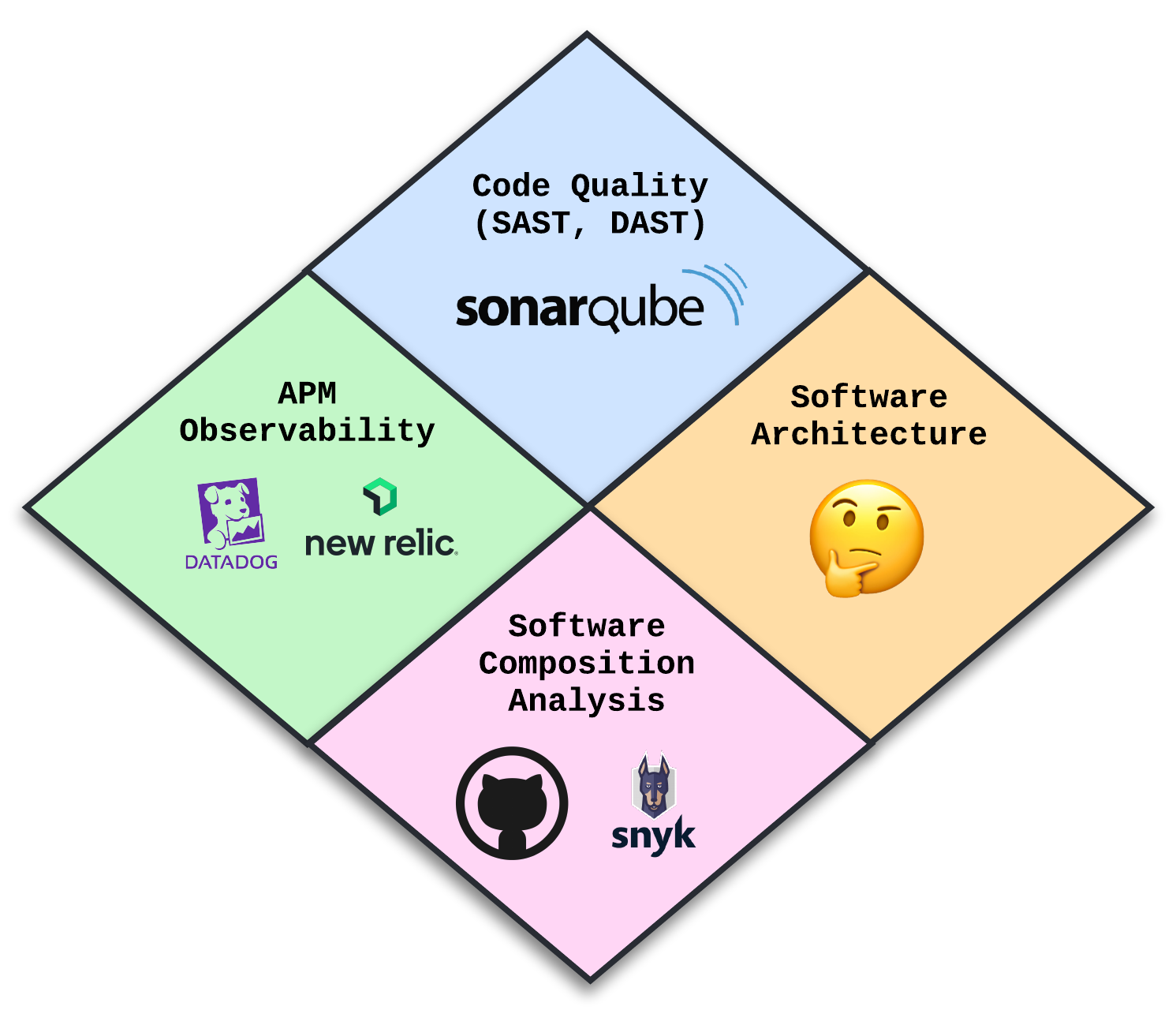

Managing Architectural Tech Debt

Architectural technical debt is a design or construction approach that's

expedient in the short term, but that creates a technical context in which the

same work requires architectural rework and costs more to do later than it

would cost to do now (including increased cost over time). ... The shift-left

approach embraces the concept of moving a given aspect closer to the beginning

of a lifecycle rather than the end. This concept gained popularity with shift-left

for testing, where the test phase became part of the development

process rather than a separate event to be completed after development was

finished. Shift-left can be implemented in two different ways in managing

ATD: Shift-left for resiliency: Identifying sources that have an impact on

resiliency, and then fixing them before they manifest in performance.

Shift-left for security: Detecting and mitigating security issues during the

development lifecycle. Just like shift-left for testing, a prioritized focus

on resilience and security during the development phase will reduce the

potential for unexpected incidents.

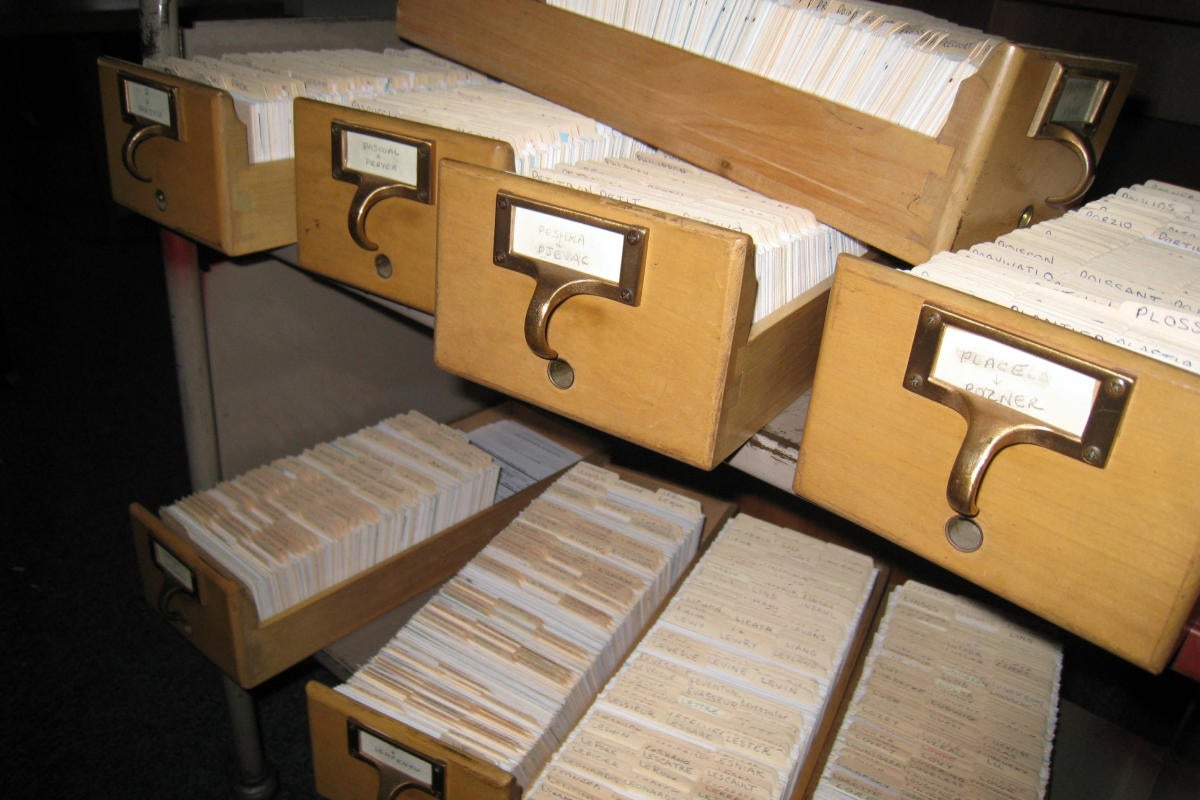

Snowflake adopts open source strategy to grab data catalog mind share

The complexity and diversity of data systems, coupled with the universal

desire of organizations to leverage AI, necessitates the use of an

interoperable data catalog, which is likely to be open source in nature,

according to Chaurasia. “An open-source data catalog addresses

interoperability and other needs, such as scalability, especially if it is

built on top of a popular table format such as Iceberg. This approach facilitates

data management across various platforms and cloud environments,” Chaurasia

said. Separately, market research firm IDC’s research vice president Stewart

Bond pointed out that Polaris Catalog may have leveraged Apache Iceberg’s

native Iceberg Catalogs and added enterprise-grade capabilities to it, such as

managing multiple distributed instances of Iceberg repositories, providing

data lineage, search capability for data utilities, and data description

capabilities among others. Polaris Catalog, which Snowflake expects to open

source in the next 90 days, can either be hosted in its proprietary AI Data

Cloud or can be self-hosted in an enterprise’s own infrastructure using

containers such as Docker or Kubernetes.

Is it Time for a Full-Stack Network Infrastructure?

When we talk about full-stack network infrastructure management, we aren’t

referring to the seven-layer protocol stack upon which networks are built,

but rather to how these various protocol layers and the applications and IT

assets that run on top of them are managed. ... The key to choosing between a

full-stack single network management solution or just a SASE solution that

focuses on security and policy enforcement in a multi-cloud environment is

whether you are most concerned that your network governance and security

policies are uniform and enforced or if you're seeking a solution that is

above and beyond just security and governance, and that can address the entire

network management continuum—from security and governance to monitoring,

configuration, deployment, and mediation. Further complicating the decision of

how to best grow the network is the situation of network vendors themselves.

Those that offer a full-stack, multi-cloud network management solution are in

evolutionary stages themselves. They have a vision of their multi-cloud

full-stack network offerings, but a complete set of stack functionality is not

yet in place.

The expensive and environmental risk of unused assets

While critical loads are expected to be renewed, refreshed, or replaced over

the lifetime of the data center facility, older, non-Energy Star-certified, or

inefficient servers that are still turned on but no longer being used continue

to use both power and cooling resources. Stranded assets also include

excessive redundancy or low utilization of the redundancy options, a lack of

scalable, modular design, and the use of oversized equipment or legacy

lighting and controls. While many may plan for the update and evolution of the

ITE, the mismatch of power and cooling resources versus the equipment

requiring the respective power and cooling inevitably results in stranded

assets. ... Stranded capacity is wasted energy, cooling unnecessary equipment,

and lost cooling to areas that need not be cooled. Stranded cooling capacity

can include bypass air (supply air from cooling units that is not contributing

to cooling the ITE), too much supply air being delivered from the cooling

units, lack of containment, poor rack hygiene (missing blanking panels),

and unsealed openings under ITE with raised floors, to name a few.

Architectural Trade-Offs: The Art of Minimizing Unhappiness

The critical skill in making trade-offs is being able to consider two or more

potentially opposing alternatives at the same time. This requires being able

to clearly convey alternatives so a team can decide which alternative, or

neither, acceptably meets the QARs under consideration. What makes trade-off

decisions particularly difficult is that the choice is not clear; the facts

supporting the pro and con arguments are typically only partial and often

inconclusive. If the choice were clear, there would be no need to make a

trade-off decision. ... Teams who are inexperienced in specific technologies

will struggle to make decisions about how to best use those technologies. For

example, a team may decide to use a poorer-fit technology such as a relational

database to store a set of maps because they don’t understand the better-fit

technology, such as a graph database, well enough to use it. Or they may be

unwilling to take the hit in productivity for a few releases to get better at

using a graph database.

New Machine Learning Algorithm Promises Advances in Computing

Compact enough to fit on an inexpensive computer chip capable of balancing on

your fingertip and able to run without an internet connection, the team’s

digital twin was built to optimize a controller’s efficiency and performance,

which researchers found resulted in a reduction of power consumption. It

achieves this quite easily, mainly because it was trained using a type of

machine learning approach called reservoir computing. “The great thing about

the machine learning architecture we used is that it’s very good at learning

the behavior of systems that evolve in time,” Kent said. “It’s inspired by how

connections spark in the human brain.” Although similarly sized computer chips

have been used in devices like smart fridges, according to the study, this

novel computing ability makes the new model especially well-equipped to handle

dynamic systems such as self-driving vehicles as well as heart monitors, which

must be able to quickly adapt to a patient’s heartbeat. “Big machine learning

models have to consume lots of power to crunch data and come out with the

right parameters, whereas our model and training is so extremely simple that

you could have systems learning on the fly,” he said.
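
To illustrate the general idea behind reservoir computing, here is a minimal sketch. It is not the researchers' model: the reservoir size, the weight scaling heuristic, the learning rate, the sine-wave task, and the function names `make_reservoir`, `step`, and `train_online` are all assumptions chosen for illustration. The defining feature is that the recurrent "reservoir" weights are random and stay fixed, while only a simple linear readout is trained, here with online least-mean-squares updates so that learning happens one sample at a time.

```python
import math
import random


def make_reservoir(n_units, seed=0, radius=0.9):
    """Build fixed random input and recurrent weights (never trained)."""
    rng = random.Random(seed)
    # Heuristic: for i.i.d. uniform(-1, 1) entries the spectral radius is
    # roughly sqrt(n_units / 3), so rescale to land near `radius`.
    scale = radius / math.sqrt(n_units / 3)
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(n_units)]
    w_res = [[rng.uniform(-1, 1) * scale for _ in range(n_units)]
             for _ in range(n_units)]
    return w_in, w_res


def step(state, u, w_in, w_res):
    """Advance the reservoir one time step for a scalar input u."""
    return [
        math.tanh(w_in[i] * u + sum(w * s for w, s in zip(w_res[i], state)))
        for i in range(len(state))
    ]


def train_online(inputs, targets, n_units=30, lr=0.02, seed=0):
    """Train only the linear readout, one sample at a time (LMS rule)."""
    w_in, w_res = make_reservoir(n_units, seed)
    state = [0.0] * n_units
    w_out = [0.0] * n_units
    errors = []
    for u, y in zip(inputs, targets):
        state = step(state, u, w_in, w_res)
        pred = sum(w * s for w, s in zip(w_out, state))
        err = y - pred
        # Only the readout weights change; the reservoir stays fixed.
        w_out = [w + lr * err * s for w, s in zip(w_out, state)]
        errors.append(abs(err))
    return w_out, errors


# Toy task (assumed for illustration): predict the next sample of a sine wave.
signal = [math.sin(0.2 * t) for t in range(2001)]
w_out, errors = train_online(signal[:-1], signal[1:])
```

Because training touches only the readout weights, each update is a handful of multiply-adds, which is one reason this family of methods suits small, low-power hardware. Comparing early and late entries of `errors` shows how the readout adapts over time.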

Getting infrastructure right for generative AI

“It was quite cost-effective at first to buy our own hardware, which was a

four-GPU cluster,” says Doniyor Ulmasov, head of engineering at Papercup. He

estimates initial savings between 60% and 70% compared with cloud-based

services. “But when we added another six machines, the power and cooling

requirements were such that the building could not accommodate them. We had to

pay for machines we could not use because we couldn’t cool them,” he recounts.

And electricity and air conditioning weren’t the only obstacles. ... Another

factor working against unmitigated power consumption is sustainability. Many

organizations have adopted sustainability goals, which power-hungry AI

algorithms make it difficult to achieve. Rutten says using SLMs, ARM-based

CPUs, and cloud providers that maintain zero-emissions policies, or that run

on electricity produced by renewable sources, are all worth exploring where

sustainability is a priority. For implementations that require large-scale

workloads, using microprocessors built with field-programmable gate arrays

(FPGAs) or application-specific integrated circuits (ASICs) is a choice worth

considering.

Security Teams Want to Replace MDR With AI: A Good or Bad Idea?

“The first stand-out takeaway is the dissatisfaction with MDR systems across

the board. A mix between high false positive rates and system inefficiencies

is driving a shift for AI solutions, the driving factor being accuracy.”

McStay said that the report’s findings that claim that AI has the potential to

automate and decrease workloads by as much as 95% are “potentially inflated”.

“I don’t think it will be that high in practice, but I would still expect a

massive reduction in workload (circa 50-80%). Perhaps opening up a new

conversation around where time should be spent best?” McStay added that she

does believe replacing MDR with AI is “smart, and certainly what the future

will look like”, based on accuracy and response time. ... “The catch is

that nobody is ‘replacing’ anything, rather AI is being integrated solely for

the purpose of expediting detection and response, which improves the

signal-to-noise ratio for human operators drastically and makes for a far more

effective SOC,” Hasse explained. When questioned whether it was a good idea to

replace MDR with AI, Hasse said security teams should not be replacing MDR

services but rather augmenting them.

Quote for the day:

"Decision-making is a skill. Wisdom is

a leadership trait." -- Mark Miller
