Remote DevOps is here to stay!

With a mass exodus of the workforce towards a home setting, especially in
India, the demand for skilled professionals in DevOps has dramatically
increased. A recent GitHub report on the implications of COVID for the
developer community suggests that developer activity has increased compared
to last year. It also indicates that developers have shown resilience and
continued to contribute, undeterred by the crisis. This is the shining moment
for DevOps, which is built for remote operations. In a ‘choose your own
adventure’ situation, DevOps helps organizations evaluate their own goals,
skills, bottlenecks, and blockers to curate a modern application development
and deployment process that works for them. According to an UpGuard report,
DevOps Stats for Doubters, 63% of organizations that implemented DevOps
experienced improvement in the quality of their software deployments.
Delivering business value from data is contingent on the developers’ ability
to innovate through methods like DevOps. It is about deploying the right
foundation for modern application development across both public and private
clouds. The current environment is uncharted territory for many enterprises.

Breaking Down Serverless Anti-Patterns

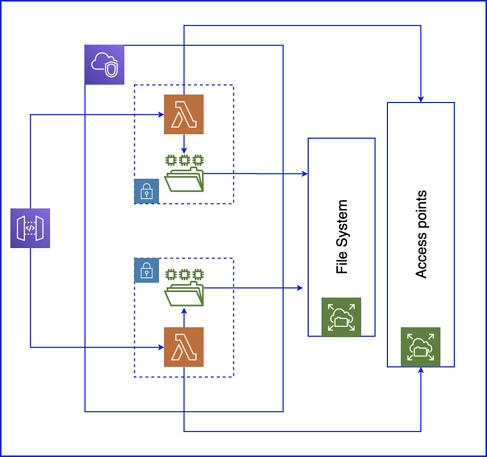

The goal of building with serverless is to dissect the business logic in a
manner that results in independent and highly decoupled functions. This,
however, is easier said than done, and developers often run into scenarios
where libraries, business logic, or even just basic code has to be shared
between functions. This leads to a form of dependency and coupling that works
against the serverless architecture. Functions that depend on one another
through a shared code base and logic cause an array of problems. The most
prominent is that the coupling hampers scalability. As your systems scale and
functions are constantly reliant on one another, there is an increased risk of
errors, downtime, and latency. The entire premise of microservices was to
avoid these issues. Additionally, one of the selling points of serverless is
its scalability. Coupling functions together via shared logic and a shared
codebase therefore undermines not only the microservices approach but also the
core serverless value of scalability. This can be visualized in the
accompanying image: a change in the data logic of function A will lead to
necessary changes in how data is communicated and processed in function B.
Even function C may be affected depending on the exact use case.
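
One way to picture the decoupled alternative is a small sketch in which two functions share only an event contract, not code. Everything here is an assumption made for illustration: the names `function_a` and `function_b`, the field names, and the JSON payload are hypothetical and do not come from the article.

```python
import json

# Hypothetical event contract: the only thing functions A and B share.
REQUIRED_FIELDS = {"schema_version", "order_id", "total_cents"}


def function_a(order_id: str, prices_cents: list[int]) -> str:
    """Producer: owns its pricing logic and publishes a self-describing event."""
    event = {
        "schema_version": 1,
        "order_id": order_id,
        "total_cents": sum(prices_cents),
        # Extra fields can be added later without breaking consumers.
        "item_count": len(prices_cents),
    }
    return json.dumps(event)


def function_b(raw_event: str) -> str:
    """Consumer: depends on the contract, not on function A's code."""
    event = json.loads(raw_event)
    missing = REQUIRED_FIELDS - set(event)
    if missing:
        raise ValueError(f"event is missing fields: {sorted(missing)}")
    if event["schema_version"] != 1:
        raise ValueError("unsupported schema version")
    return f"order {event['order_id']} charged {event['total_cents'] / 100:.2f}"
```

With this shape, function A can change how it computes the total without touching function B, as long as the required fields keep their meaning.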

Why Service Meshes Are Security Tools

Modern engineering organizations need to give individual developers the
freedom to choose what components they use in applications as well as how to
manage their own workflows. At the same time, enterprises need to ensure that
there are consistent ways to manage how all of the parts of an application
communicate inside the app as well as with external dependencies. A service
mesh provides a uniform interface between services. Because it’s attached as a
sidecar acting as a micro-dataplane for every component within the service
mesh, it can add encryption and access controls to communication to and from
services, even if neither is natively supported by that service. Just as
importantly, the service mesh can be configured and controlled centrally.
Individual developers don’t have to set up encryption or configure access
controls; security teams can establish organization-wide security policies and
enforce them automatically with the service mesh. Developers get to use
whatever components they need and aren’t slowed down by security
considerations. Security teams can make sure encryption and access controls
are configured appropriately, without depending on developers at all.
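
The division of labor described above can be illustrated with a toy model. This is only a sketch under stated assumptions: `MeshPolicy` and `Sidecar` are hypothetical names, not the API of any real service mesh, and the `encrypted` flag stands in for whatever transport encryption a real proxy would negotiate.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass(frozen=True)
class MeshPolicy:
    """Organization-wide rules, defined once by the security team (hypothetical shape)."""
    require_encryption: bool = True
    # Set of (source, destination) service pairs that may communicate.
    allowed_calls: frozenset = field(default_factory=frozenset)


@dataclass
class Sidecar:
    """Stands in for the per-service proxy; the wrapped handler knows nothing about policy."""
    service_name: str
    handler: Callable[[str], str]
    policy: MeshPolicy

    def receive(self, source: str, payload: str, encrypted: bool) -> str:
        # Enforce the central policy before the service ever sees the request.
        if self.policy.require_encryption and not encrypted:
            raise PermissionError("plaintext traffic rejected by mesh policy")
        if (source, self.service_name) not in self.policy.allowed_calls:
            raise PermissionError(f"{source} may not call {self.service_name}")
        return self.handler(payload)
```

The handler passed to `Sidecar` can be any function a developer writes; the checks live entirely in the proxy and the centrally defined policy.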

Review: AWS Bottlerocket vs. Google Container-Optimized OS

Bottlerocket uses control groups (cgroups) and kernel namespaces to isolate
the containers running on the system. eBPF (extended Berkeley Packet Filter)
is used to further isolate containers and to verify container code that
requires low-level system access. The eBPF secure mode prohibits pointer
arithmetic, traces I/O, and restricts the kernel functions the container has
access to. The attack surface is reduced by running all services in
containers. While a container might be compromised, it’s less likely the
entire system will be breached, due to container isolation. Updates are
automatically applied when running the Amazon-supplied edition of Bottlerocket
via a Kubernetes operator that comes installed with the OS. An immutable root
filesystem, which creates a hash of the root filesystem blocks and relies on a
verified boot path using dm-verity, ensures that the system binaries haven’t
been tampered with. The configuration is stateless and /etc/ is mounted on a
RAM disk. When running on AWS, configuration is accomplished with the API and
these settings are persisted across reboots, as they come from file templates
within the AWS infrastructure.
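
The idea of hashing the root filesystem blocks can be sketched with a simple hash tree. This is an illustration of the general technique only: it assumes a binary tree, SHA-256, and a 4096-byte block size, and it does not reproduce the actual dm-verity on-disk format or Bottlerocket's implementation. All function names are hypothetical.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for this sketch


def split_blocks(image: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut a filesystem image into fixed-size blocks (the last one may be shorter)."""
    return [image[i:i + block_size] for i in range(0, len(image), block_size)]


def root_hash(blocks: list[bytes]) -> bytes:
    """Hash every block, then hash pairs of hashes upward until one root remains."""
    if not blocks:
        raise ValueError("need at least one block")
    level = [hashlib.sha256(block).digest() for block in blocks]
    while len(level) > 1:
        if len(level) % 2:  # duplicate the last hash on odd-sized levels
            level.append(level[-1])
        level = [
            hashlib.sha256(level[i] + level[i + 1]).digest()
            for i in range(0, len(level), 2)
        ]
    return level[0]


def is_untampered(image: bytes, trusted_root: bytes) -> bool:
    """Compare the recomputed root against a root hash obtained from a trusted source."""
    return root_hash(split_blocks(image)) == trusted_root
```

Because every block feeds into the root, flipping a single bit anywhere in the image changes the root hash, which is what lets a verified boot path detect modified binaries.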

Microsoft tells Windows 10 users they can never uninstall Edge. Wait, what?

Microsoft explained it was migrating all Windows users from the old Edge to
the new one. The update added: "The new version of Microsoft Edge gives users
full control over importing personal data from the legacy version of Microsoft
Edge." Hurrah, I hear you cry. That's surely holier than Google. Microsoft
really cares. Yet next were these words: "The new version of Microsoft
Edge is included in a Windows system update, so the option to uninstall it or
use the legacy version of Microsoft Edge will no longer be available." Those
prone to annoyance would cry: "What does it take not only to force a product
onto a customer but then make sure that they can never get rid of that
product, even if they want to? Even cable companies ultimately discovered that
customers find ways out." Yet, as my colleague Ed Bott helpfully pointed out,
there's a reason you can't uninstall Edge. Well, initially. It's the only way
you can download the browser you actually want to use. You can, therefore,
hide Edge -- it's not difficult -- but not completely eliminate it from your
life. Actually that's not strictly true either. The tech world houses many
large and twisted brains. They don't only work at Microsoft. Some immediately
suggested methods to get your legacy Edge back on Windows 10. Here's one way
to do it.

Digital public services: How to achieve fast transformation at scale

For most public services, digital reimagination can significantly enhance the
user experience. Forms, for example, can require less data and pull
information directly from government databases. Texts or push notifications
can use simpler language. Users can upload documents as scans. In addition,
agencies can link touchpoints within a single user journey and offer digital
status notifications. Implementing all of these changes is no trivial matter
and requires numerous actors to collaborate. Several public authorities are
usually involved, each of which owns different touchpoints on the user
journey. The number of actors increases exponentially when local governments
are responsible for service delivery. Often, legal frameworks must be amended
to permit digitization, meaning that the relevant regulator needs to be
involved. Yet when governments use established waterfall approaches to project
management (in which each step depends on the results of the previous step),
digitization can take a long time and the results often fall short. In many
cases, long and expensive projects have delivered solutions that users have
failed to adopt.

State-backed hacking, cyber deterrence, and the need for international norms

The issue of how cyber attack attribution should be handled and confirmed also
deserves to be addressed. Dr. Yannakogeorgos says that, while attribution of
cyber attacks is definitely not as clear-cut as seeing smoke coming out of a
gun in the real world, with robust law enforcement, public-private
partnerships, cyber threat intelligence firms, and information sharing via
ISACs, the US has come a long way in terms of not only figuring out who
conducted criminal activity in cyberspace, but arresting global networks of
cyber criminals as well. Granted, things get trickier when these actors are
working for or on behalf of a nation-state. “If these activities are part of a
covert operation, then by definition the government will have done all it can
for its actions to be ‘plausibly deniable.’ This is true for activities
outside of cyberspace as well. Nations can point fingers at each other, and
present evidence. The accused can deny and say the accusations are based on
fabrications,” he explained. “However, at least within the United States,
we’ve developed a very robust analytic framework for attribution that can
eliminate reasonable doubt amongst friends and allies, and can send a clear
signal to planners on the opposing side....”

Tackling Bias and Explainability in Automated Machine Learning

At a minimum, users need to understand the risk of bias in their data set
because much of the bias in model building can be human bias. That doesn't
mean just throwing out variables, which, if done incorrectly, can lead to
additional issues. Research in bias and explainability has grown in importance
recently and tools are starting to reach the market to help. For instance, the
AI Fairness 360 (AIF360) project, launched by IBM, provides open source bias
mitigation algorithms developed by the research community. These include bias
mitigation algorithms to help in the pre-processing, in-processing, and
post-processing stages of machine learning. In other words, the algorithms
operate over the data to identify and treat bias. Vendors, including SAS,
DataRobot, and H2O.ai, are providing features in their tools that help explain
model output. One example is a bar chart that ranks a feature's impact. That
makes it easier to tell what features are important in the model. Vendors such
as H2O.ai provide three kinds of output that help with explainability and
bias. These include feature importance as well as Shapley partial dependence
plots (e.g., how much a feature value contributed to the prediction) and
disparate impact analysis. Disparate impact analysis quantitatively measures
the adverse treatment of protected classes.
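
A common way to quantify disparate impact is the ratio of favorable-outcome rates between an unprivileged and a privileged group. The sketch below is plain Python written for illustration; it is not the AIF360 or H2O.ai API, the function names are hypothetical, and the 0.8 cutoff is the widely used "four-fifths" convention rather than something stated in the article.

```python
def favorable_rate(outcomes: list[int]) -> float:
    """Share of favorable outcomes (1 = favorable, 0 = unfavorable) in a group."""
    if not outcomes:
        raise ValueError("group has no outcomes")
    return sum(outcomes) / len(outcomes)


def disparate_impact(unprivileged: list[int], privileged: list[int]) -> float:
    """Ratio of favorable rates; values well below 1.0 suggest adverse treatment."""
    privileged_rate = favorable_rate(privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return favorable_rate(unprivileged) / privileged_rate


def passes_four_fifths(ratio: float, threshold: float = 0.8) -> bool:
    """Assumed convention: flag ratios below 0.8 for review."""
    return ratio >= threshold
```

For example, if 2 of 5 applicants in the unprivileged group and 4 of 5 in the privileged group receive a favorable prediction, the rates are 0.4 and 0.8, the ratio is 0.5, and the model would be flagged under the four-fifths convention.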

Chief Data Analytics Officers – The Key to Data-Driven Success?

Core to the role is the experience and desire to use data to solve real
business problems. Combining an overarching view of the data across the
organisation with a well-articulated data strategy, the CDAO is uniquely
placed to balance specific needs for data against wider corporate goals. They
should be laser-focused on extracting value from the bank’s data assets and
‘connecting the dots’ for others. By seeing and effectively communicating the
links between different data and understanding how it can be combined to
deliver business benefit, the CDAO does what no other role can do: bring the
right data from across the business, plus the expertise of data scientists, to
bear on every opportunity. Balance is critical. Leveraging their understanding
of analytics and data quality, the CDAO can bring confidence to business
leaders afraid to engage with data. They understand governance, and so can
police which data can be used for innovation and which is business critical
and ‘untouchable.’ They can deploy and manage data scientists to ensure they
are focused on real business issues, not pet analytics projects.
Innovation-focused CDAOs will actively look for ways to generate returns on
data assets, and to partner with commercial units to create new revenue from
data insights.

How the network can support zero trust

One broad principle of zero trust is least privilege, which is granting
individuals access to just enough resources to carry out their jobs and
nothing more. One way to accomplish this is network segmentation, which breaks
the network into unconnected sections based on authentication, trust, user
role, and topology. If implemented effectively, it can isolate a host on a
segment and minimize its lateral or east–west communications, thereby limiting
the "blast radius" of collateral damage if a host is compromised. Because
hosts and applications can reach only the limited resources they are
authorized to access, segmentation prevents attackers from gaining a foothold
into the rest of the network. Entities are granted access to resources based
on context: who an individual is, what device is being used to access the
network, where it is located, how it is communicating and why access is
needed. There are other methods of enforcing segmentation. One of the oldest
is physical separation, in which physically separate networks with their own
dedicated servers, cables and network devices are set up for different levels
of security. While this is a tried-and-true method, it can be costly to build
completely separate environments for each user's trust level and role.
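
The context-based decision described above (who, what device, where, how, and why) can be sketched as a default-deny check. This is a minimal illustration under stated assumptions: the `AccessRequest` fields, the `POLICY` table, and the resource and segment names are all hypothetical and do not describe any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_role: str        # who the individual is
    device_managed: bool  # what device is being used
    segment: str          # where the request comes from
    protocol: str         # how it is communicating
    purpose: str          # why access is needed
    resource: str         # what is being requested


# Hypothetical policy table: each resource lists the only context values it accepts.
POLICY = {
    "payroll-db": {
        "roles": {"hr"},
        "segments": {"hr-vlan"},
        "protocols": {"tls"},
        "purposes": {"payroll-run"},
    },
}


def is_allowed(request: AccessRequest) -> bool:
    """Least privilege: deny unless every piece of context matches the rule."""
    rule = POLICY.get(request.resource)
    if rule is None:
        return False  # resources without a rule are unreachable by default
    return (
        request.device_managed
        and request.user_role in rule["roles"]
        and request.segment in rule["segments"]
        and request.protocol in rule["protocols"]
        and request.purpose in rule["purposes"]
    )
```

A request from an HR user on a managed device in the `hr-vlan` segment passes, while the same user on an unmanaged device or a different segment is refused, which keeps a compromised host from reaching resources outside its rule.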

Quote for the day:

"Gratitude is the place where all dreams come true. You have to get there before they do." -- Jim Carrey
