Grassroots Data Security: Leveraging User Knowledge to Set Policy

Today, the IT team owns the entire problem. They write rules to discover and

characterize content (What is this file? Do we care about it?). They write

more rules to evaluate that content (Is it stored in the right place? Is it

marked correctly?). Then they write still more rules to enforce a policy

(block, quarantine, encrypt, log). Unsurprisingly, complexity, maintenance

overhead, false positives and security lapses are inevitable. It turns out

data security policies are already defined. They’re hiding in plain sight.

That’s because content creators are also the content experts and they’re

demonstrating policy as they go. A sales team, for example, manages hundreds

of quotes, contracts and other sensitive documents. The way they mark, store,

share and use them defines an implicit data security policy. Every group of

similar documents has an implicit policy defined by the expert content

creators themselves. The problem, of course, is how to extract that grassroots

wisdom. Deep learning gives us two tools to do it: representation learning and

anomaly detection. Representation learning is the ability to process large

amounts of information about a group of “things” (files in our case) and

categorize those things. For data security, advances in natural language

processing now give us insights into a document’s meaning that are far richer

and more accurate than simple keyword matches.
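
As a rough illustration of the "implicit policy" idea, the sketch below infers a policy from how a group of similar documents is already handled and flags the outliers. It is a plain frequency count standing in for the deep learning approach the article describes, not that approach itself. The attribute names (`location`, `marking`), the `min_share` threshold and the sample records are all assumptions made for this example.

```python
from collections import Counter
from typing import Dict, List

Doc = Dict[str, str]  # hypothetical record: a name plus observed handling attributes


def implicit_policy(docs: List[Doc], min_share: float = 0.75) -> Dict[str, str]:
    """For each attribute, keep the value that most of the group agrees on."""
    policy: Dict[str, str] = {}
    attributes = [key for key in docs[0] if key != "name"]
    for attr in attributes:
        value, count = Counter(doc[attr] for doc in docs).most_common(1)[0]
        # Only treat a value as policy if a clear majority already follows it.
        if count / len(docs) >= min_share:
            policy[attr] = value
    return policy


def find_anomalies(docs: List[Doc], policy: Dict[str, str]) -> List[str]:
    """Return names of documents that deviate from the group's implicit policy."""
    return [
        doc["name"]
        for doc in docs
        if any(doc[attr] != expected for attr, expected in policy.items())
    ]


# Made-up example group: five sales quotes already judged to be similar.
QUOTES: List[Doc] = [
    {"name": "quote-1", "location": "sales-share", "marking": "confidential"},
    {"name": "quote-2", "location": "sales-share", "marking": "confidential"},
    {"name": "quote-3", "location": "sales-share", "marking": "confidential"},
    {"name": "quote-4", "location": "sales-share", "marking": "confidential"},
    {"name": "quote-5", "location": "public-folder", "marking": "confidential"},
]
```

Calling `find_anomalies(QUOTES, implicit_policy(QUOTES))` singles out the one quote stored outside the location the rest of the group uses. A real system would first have to group the documents by meaning, which is the representation learning step this sketch skips.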

IoT governance: how to deal with the compliance and security challenges

According to Ted Wagner, CISO at SAP NS2, the topics that should be included

in any IoT governance program are “software and hardware vulnerabilities, and

compliance with security requirements — whether they be regulatory or policy

based.” He refers to a typical use case of when a software flaw is discovered

within an IoT device. In this instance, it is important to determine the

severity of the flaw. Could it lead to a security incident? How quickly does

it need to be addressed? If there is no way to patch the software, is there

another way to protect the device or mitigate the risk? “A good way to deal

with IoT governance is to have a board as a governance structure. Proposals

are presented to the board, which is normally made up of 6-12 individuals who

discuss the merits of any new proposal or change. They may monitor ongoing

risks like software vulnerabilities by receiving periodic vulnerability

reports that include trends or metrics on vulnerabilities. Some boards have a

lot of authority, while others may act as an advisory function to an executive

or a decision maker,” Wagner advises.

Smart locks opened with nothing more than a MAC address

Young reached out to U-Tec on November 10, 2019, with his findings. The

company told Young not to worry in the beginning, claiming that "unauthorized

users will not be able to open the door." The cybersecurity researcher then

provided them with a screenshot of the Shodan scrape, revealing active

customer email addresses leaked in the form of MQTT topic names. Within a day,

the U-Tec team made a few changes, including the closure of an open port,

adding rules to prevent non-authenticated users from subscribing to services,

and "turning off non-authenticated user access." While an improvement, this

did not resolve everything. "The key problem here is that they focused

on user authentication but failed to implement user-level access controls,"

Young commented. "I demonstrated that any free/anonymous account could connect

and interact with devices from any other user. All that was necessary is to

sniff the MQTT traffic generated by the app to recover a device-specific

username and an MD5 digest which acts as a password." After being pushed

further, U-Tec spent the next few days implementing user isolation protocols,

resolving every issue reported by Tripwire within a week.
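
The gap Young describes, authentication without user-level access control, can be illustrated with a small sketch. This is not U-Tec's fix or any broker's real API. The topic layout `devices/<device_id>/<channel>`, the ownership table and both function names are hypothetical and exist only for this example.

```python
from typing import Dict, Set

# Hypothetical ownership table: device id -> account that owns it.
DEVICE_OWNERS: Dict[str, str] = {
    "lock-001": "alice@example.com",
    "lock-002": "bob@example.com",
}


def is_authenticated(account: str, sessions: Set[str]) -> bool:
    """Authentication only answers: is this a known, logged-in account?"""
    return account in sessions


def may_access_topic(account: str, topic: str) -> bool:
    """Authorization: allow only topics for devices the account owns.

    Assumes a hypothetical topic layout 'devices/<device_id>/<channel>'.
    MQTT wildcards ('+' and '#') are rejected so that a client cannot
    subscribe across devices it does not own.
    """
    parts = topic.split("/")
    if len(parts) != 3 or parts[0] != "devices":
        return False
    if "+" in topic or "#" in topic:
        return False
    return DEVICE_OWNERS.get(parts[1]) == account
```

With only `is_authenticated` in place, any free account passes the check and can reach every device. Adding a per-topic check such as `may_access_topic` is what ties each request to the devices that account actually owns.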

RPA competitors battle for a bigger prize: automation everywhere

Competitive dynamics are heating up. The two emergent leaders, Automation

Anywhere Inc. and UiPath Inc., are separating from the pack. Large incumbent

software vendors such as Microsoft Corp., IBM Corp. and SAP SE are entering

the market and positioning RPA as a feature. Meanwhile, the legacy business

process players continue to focus on taking their installed bases on a broader

automation journey. However, in our view all three of these constituents are

on a collision course, where a deeper automation objective is the “north

star.” First, we have expanded our thinking on the RPA total available market

and we are extending this toward a broader automation agenda more consistent

with buyer goals. In other words, the TAM is much larger than we initially

thought and we’ll explain why. Second, we no longer see this as a

winner-take-all or winner-take-most market. In this segment we’ll look deeper

into the leaders and share some new data. In particular, although it appeared

in our previous analysis that UiPath was running the table on the market, we

see a more textured competitive dynamic setting up and the data suggests that

other players, including Automation Anywhere and some of the larger

incumbents, will challenge UiPath for leadership in this market.

Unlocking Industry 4.0: Understanding IoT In The Age Of 5G

The challenge is not just about bandwidth. Different IoT systems will have

different network requirements. Some devices will demand absolute reliability

where low latency will be critical, while other use cases will see networks

having to cope with a much higher density of connected devices than we’ve

previously seen. For example, within a production plant, one day simple

sensors might collect and store data and communicate to a gateway device that

contains application logic. In other scenarios, IoT sensor data might need to

be collected in real-time from sensors, RFID tags, tracking devices, even

mobile phones across a wider area via 5G protocols. Bottom line: Future 5G

networks could help enable a number of IoT and IIoT use cases and benefits in

the manufacturing industry. Looking ahead, don’t be surprised if you see these

five use cases transform with strong, reliable connectivity from

multi-spectrum 5G networks currently being built and the introduction of

compatible devices. With IoT/IIoT, manufacturers could connect production

equipment and other machines, tools, and assets in factories and warehouses,

providing managers and engineers with more visibility into production

operations and any issues that might arise.

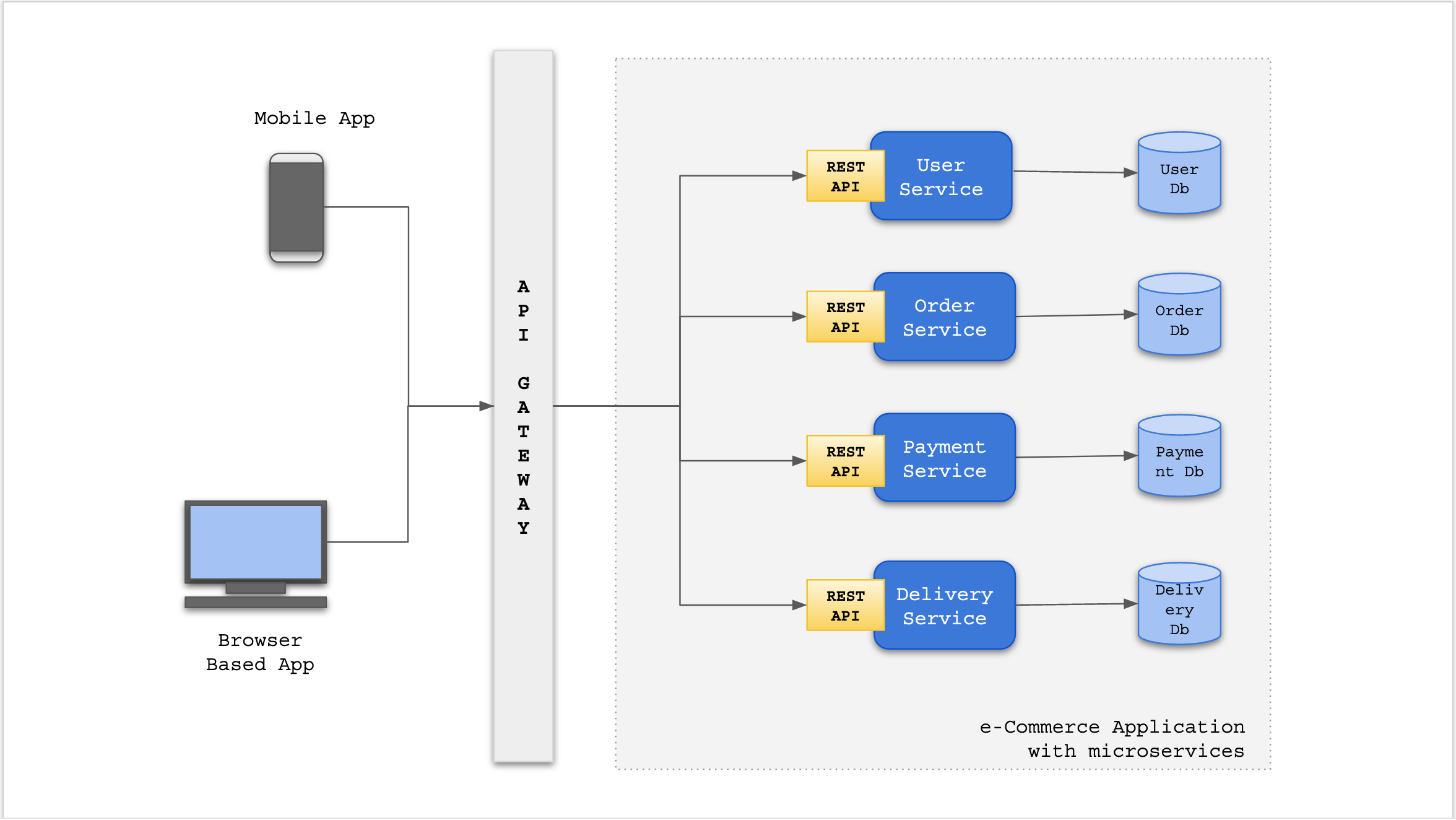

The case for microservices is upon us

For many businesses, monolithic architecture has been and will continue to be

sufficient. However, with the rise of mobile browsing and the growing ubiquity

of omnichannel service delivery, many businesses are finding their code

libraries become more convoluted and difficult to maintain with each passing

year. As businesses scale and expand their business capabilities, they

often run into the issue that the code behind their various components is too

tightly bound in a monolithic structure. This makes it difficult to deploy

updates and fixes because change cycles are tied together, which means they

need to update the whole system at once instead of simply updating the single

function that needs improvement. Microservices architecture is one of

the ways companies are overhauling their tech stacks to keep up with modern

DevOps best practices and future proof their operations, making them more

flexible and agile. Given the rapid pace of change where technologies

and consumer expectations are concerned, businesses that do not build capacity

for agility and scalability into their business model are placing themselves

at a disadvantage – particularly at a time when businesses are being forced to

pivot frequently in response to widespread market instability.

Game of Microservices

A microservice works best when it has its own private database (database

per service). This ensures loose coupling with other services and maintains

data integrity, i.e. each microservice controls and updates

its own data. ... A SAGA is a sequence of local transactions. In SAGA, a

set of services work in tandem to execute a piece of functionality and each

local transaction updates the data in one service and sends an event or a

message that triggers the next transaction in other services. The

architecture for microservices mandates (usually) the Database per Service

paradigm. The monolithic approach, though it has its own operational issues,

does deal with transactions very well. It offers an inherent

mechanism to provide ACID transactions and also roll-back in cases of

failure. In contrast, in the microservices approach, since we have distributed

the data and the data sources by service, there might be cases

where some transactions spread over multiple services. Achieving

transactional guarantees in such cases is of high importance or else we tend

to lose data consistency and the application can be in an unexpected state.

One mechanism for ensuring data consistency across services is the SAGA

approach.
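
A minimal sketch of the idea follows. It assumes the common saga convention that each local transaction is paired with a compensating action that undoes it, and it runs the steps from a single in-process coordinator instead of passing events or messages between services as described above. The step names and the `state` dictionary are hypothetical stand-ins for separate services and their private databases.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    name: str
    action: Callable[[dict], None]        # local transaction in one service
    compensation: Callable[[dict], None]  # undoes that local transaction


def run_saga(steps: List[SagaStep], state: dict) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action(state)
            completed.append(step)
        except Exception:
            # No global rollback exists, so undo each finished step explicitly.
            for done in reversed(completed):
                done.compensation(state)
            return False
    return True


# Hypothetical services, each owning its own slice of the data.
def reserve_stock(state: dict) -> None:
    state["stock"] -= 1


def release_stock(state: dict) -> None:
    state["stock"] += 1


def charge_card(state: dict) -> None:
    if state["balance"] < state["price"]:
        raise ValueError("insufficient funds")
    state["balance"] -= state["price"]


def refund_card(state: dict) -> None:
    state["balance"] += state["price"]


ORDER_SAGA = [
    SagaStep("reserve_stock", reserve_stock, release_stock),
    SagaStep("charge_card", charge_card, refund_card),
]
```

If `charge_card` fails, `run_saga` calls `release_stock` so that the stock count returns to its earlier value. The result is consistency reached through compensation, not the atomic rollback that a single ACID transaction provides.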

Metadata Repository Basics: From Database to Data Architecture

While knowledge graphs have shown potential for the metadata repository to

find relationship patterns among large amounts of information, some

businesses want more from a metadata repository. Streaming data ingested

into databases from social media and IoT sensors also needs to be described.

According to a New Stack survey of 800 professional developers, real-time

data use has seen a significant increase. What does this mean for the

metadata repository? Enterprises want metadata to show the who, what, why,

when, and how of their data. The centralized metadata repository database

answers these questions but remains too slow and cumbersome to handle large

amounts of light-speed metadata. Knowledge graphs have the advantage of

handling lots of data quickly. However, knowledge graphs display

only specific types of patterns in their metadata repository. Companies need

another metadata repository tool. Here comes the data catalog, a metadata

repository informing consumers what data lives in data systems and the

context of this data.

Why edge computing is forcing us to rethink software architectures

The perspective on cloud hardware has since shifted. The current generation

of cloud focuses on expensive, high-performance hardware rather than cheap

commoditised systems. For one, cloud hardware and data centre architectures

are morphing into something resembling an HPC system or supercomputer.

Networking has followed the same route, with technologies like InfiniBand

EDR and photonics paving the way for ever greater bandwidth and tighter

latencies between servers, while backbones and virtual networks have

led to improvements in the bandwidth between geographically distant cloud

data centres. The other shift currently underway is in the layout of these

platforms themselves. The cloud is morphing and merging into edge computing

environments where data centres are deployed with significantly greater

de-centralisation and distribution. Traditionally an entire continent may be

served by a handful of cloud data centres. Edge computing moves these

computing resources much closer to the end-user — virtually to every city or

major town. The edge data centres of every major cloud provider are now

integrated into their backbone providing a sophisticated, geographically

dispersed grid.

The Importance of Reliability Engineering

SRE isn’t just a set of practices and policies—it’s a mentality on how to

develop software in a culture free of blame. By embracing this new mindset,

your team’s morale and camaraderie will improve, allowing everyone to work at

their full potential in a psychologically safe environment. SRE teaches

us that failure is inevitable. No matter how many precautions you take,

incidents happen. While giving you the tools to respond effectively to these

incidents, SRE also challenges us to celebrate these failures. When something

new goes wrong, it means there’s a chance to learn about your systems. This

attitude creates an environment of continuous learning. When analyzing

these inevitable incidents, it’s important to maintain an attitude of

blamelessness. Instead of wasting time pointing fingers and finding fault,

work together to find the systematic issues behind the incident. By avoiding a

culture of blame and shame, engineers are less afraid to proactively raise

issues. Team members will trust each other more, assuming good faith in their

teammates’ choices. This spirit of blameless collaboration will transform the

most challenging incidents into opportunities for growing stronger

together.

Quote for the day:

"One must be convinced to convince, to have enthusiasm to stimulate the others." -- Stefan Zweig
