When to use Java as a Data Scientist

When you are responsible for building an end-to-end data product, you are essentially building a data pipeline: data is fetched from a source, features are calculated from the retrieved data, a model is applied to the resulting feature vector or tensor, and the model results are stored or streamed to another system. While Python is great for model training and there are tools for model serving, it covers only a subset of the steps in this pipeline. This is where Java really shines, because it is the language used to implement many of the most commonly used tools for building data pipelines, including Apache Hadoop, Apache Kafka, Apache Beam, and Apache Flink. If you are responsible for building the data retrieval and data aggregation portions of a data product, Java provides a wide range of tools. Also, getting hands-on with Java means that you will build experience with the programming language used by many big data projects. My preferred tool for implementing these steps in a data workflow is Cloud Dataflow, which is based on Apache Beam. While many tools for data pipelines support multiple runtime languages, there may be significant performance differences between the Java and Python options.
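The four pipeline stages described above (fetch, compute features, apply a model, store results) can be sketched as plain functions chained together. This is a minimal illustration only, written in Python for brevity: it assumes an in-memory list as the source, a dict as the sink, and a hypothetical hand-weighted linear model in place of a trained one. It does not use Beam, Dataflow, or any of the tools named above; those frameworks distribute these same stages across many workers.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass
class Record:
    # Hypothetical raw record: a user id and that user's purchase amounts.
    user_id: str
    purchases: list[float]


def fetch(source: Iterable[Record]) -> Iterator[Record]:
    # Stage 1: fetch data from a source (here, just an in-memory iterable).
    yield from source


def featurize(records: Iterable[Record]) -> Iterator[tuple[str, list[float]]]:
    # Stage 2: compute a feature vector per record: [count, total, mean].
    for r in records:
        count = len(r.purchases)
        total = float(sum(r.purchases))
        mean = total / count if count else 0.0
        yield r.user_id, [float(count), total, mean]


def score(rows: Iterable[tuple[str, list[float]]],
          weights: list[float], bias: float) -> Iterator[tuple[str, float]]:
    # Stage 3: apply a model; a hypothetical linear model stands in for a trained one.
    for user_id, features in rows:
        yield user_id, bias + sum(w * x for w, x in zip(weights, features))


def store(results: Iterable[tuple[str, float]], sink: dict[str, float]) -> None:
    # Stage 4: persist the model output (a dict stands in for a database or stream).
    for user_id, value in results:
        sink[user_id] = value
```

Because every stage is a generator, records stream through one at a time, e.g. `store(score(featurize(fetch(records)), weights=[1.0, 0.5, 0.0], bias=0.5), sink)`. Pipeline frameworks apply the same idea at scale, which is why the retrieval and aggregation stages matter as much as the model itself.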

Alert: Russian Hackers Deploying Linux Malware

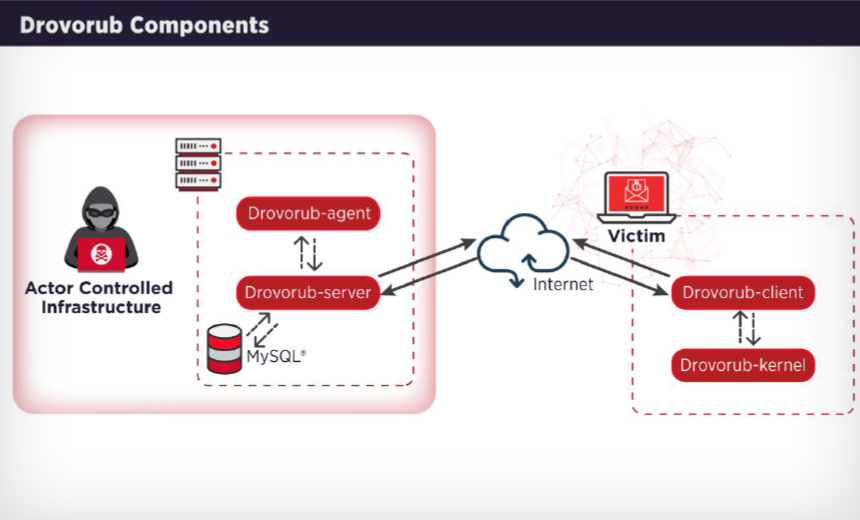

Analysts have linked Drovorub to Russian hackers working for the GRU, the alert states, noting that the command-and-control infrastructure associated with this campaign had previously been used by the Fancy Bear group. An IP address linked to a 2019 Fancy Bear campaign is also associated with the Drovorub malware activity, according to the report. The Drovorub toolkit has several components, including an implant module coupled with a kernel module rootkit, a file transfer and port forwarding tool, and a command-and-control server. All of this is designed to gain a foothold in the network, create a backdoor, and exfiltrate data, according to the alert. "When deployed on a victim machine, the Drovorub implant (client) provides the capability for direct communications with actor-controlled [command-and-control] infrastructure; file download and upload capabilities; execution of arbitrary commands as 'root'; and port forwarding of network traffic to other hosts on the network," according to the alert. Steve Grobman, CTO at the security firm McAfee, notes that the rootkit associated with Drovorub can allow hackers to plant the malware within a system and avoid detection, making it a useful tool for cyberespionage or election interference.

How Community-Driven Analytics Promotes Data Literacy in Enterprises

Data is deeply integrated into the business processes of nearly every company precisely because it helps us make better decisions, not because of its ability to hasten lofty goals such as digital transformation. The C-suite sees the advantages that data insights provide, and as a result, non-technical employees are increasingly expected to be more adept at extracting and interpreting data. Successful organizations foster a community of data-curious teams and empower them with a single platform that enables everyone, regardless of technical ability, to explore, analyze, and share data. Furthermore, domain experts and business leaders must be able to generate their own content, build on content created by others, and promote high-value, trustworthy content while demoting old, inaccurate, or unused content. This should resemble an active peer review process in which helpful content is promoted and bad content is flagged as such by the community, while the whole process is managed and governed by the data team.

The Anatomy of a SaaS Attack: Catching and Investigating Threats with AI

SaaS solutions have been an entry point for cyber-attackers for some time, but little attention is given to how the Tactics, Techniques, and Procedures (TTPs) in SaaS attacks differ significantly from the traditional TTPs seen in network and endpoint attacks. This raises a number of questions for security experts: how do you create meaningful detections in SaaS environments that don’t have endpoint or network data? How can you investigate threats in a SaaS environment? What does a ‘good’ SaaS environment look like, as opposed to one that’s threatening? A global shortage of cyber skills already makes it difficult to find security analysts able to work in traditional IT environments; hiring security experts with SaaS domain knowledge is all the more challenging. ... A more intricate and effective approach to SaaS security requires an understanding of the dynamic individual behind the account. SaaS applications are fundamentally platforms for humans to communicate, allowing them to exchange and store ideas and information. Abnormal, threatening behavior is therefore impossible to detect without a nuanced understanding of those unique individuals: where and when do they typically access a SaaS account, which files do they typically access, and who do they typically connect with?
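The questions above (where and when does a user normally log in?) can be illustrated with a minimal per-user baseline. This sketch assumes hypothetical login events carrying only a user, a country, and an hour of day; it learns each user's own history and reports how a new event deviates from it. Real products model behavior far more richly (files accessed, collaborators, devices), and nothing here reflects any particular vendor's method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    # Hypothetical SaaS audit-log event; field names are illustrative only.
    user: str
    country: str
    hour: int  # hour of day, 0-23 (UTC)


class UserBaseline:
    """Learns, per user, which countries and hours of day are 'normal'."""

    def __init__(self) -> None:
        self.countries: dict[str, set[str]] = defaultdict(set)
        self.hours: dict[str, set[int]] = defaultdict(set)

    def learn(self, events: list[LoginEvent]) -> None:
        # Build the baseline from historical, presumed-benign activity.
        for e in events:
            self.countries[e.user].add(e.country)
            self.hours[e.user].add(e.hour)

    def reasons(self, e: LoginEvent) -> list[str]:
        # Explain how an event deviates from this user's own history.
        # An empty list means the event matches the baseline.
        if e.user not in self.countries:  # membership test does not create a key
            return ["no baseline for user"]
        out = []
        if e.country not in self.countries[e.user]:
            out.append(f"new country {e.country}")
        if e.hour not in self.hours[e.user]:
            out.append(f"unusual hour {e.hour}")
        return out
```

The key design choice is that "normal" is defined per individual rather than globally: a 3 a.m. login is unremarkable for one user and a red flag for another.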

How to maximise your cloud computing investment

“At the core of the issue is that with a conventional, router-centric approach, access to applications residing in the cloud means traversing unnecessary hops through the HQ data centre, resulting in inefficient use of bandwidth, additional cost, added latency and potentially lower productivity,” said Pamplin. “To fully realise the potential of cloud, organisations must look to a business-driven networking model to achieve greater agility and substantial CAPEX and OPEX savings.

“When it comes to cloud usage, a business-driven network model should also give clear application visibility through a single pane of glass, or else organisations will be in the dark regarding their application performance and, ultimately, their return on investment.

“Only through utilisation of advanced networking solutions, where application policies are centrally defined based on business intent, and users are connected securely and directly to applications wherever they reside, can the benefits of the cloud be truly realised.

“A business-driven approach eliminates the extra hops and risk of security compromises. This ensures optimal and cost-efficient cloud usage, as applications will be able to run smoothly while fully supported by the network. ...”

AI Needs To Learn Multi-Intent For Computers To Show Empathy

Wael ElRifai, VP for solution engineering at Hitachi Vantara, reminds us that teaching a chatbot multi-intent is a more manual process than we’d like to believe. He says that, at its core, the process involves telling the software to search for keywords such as “end” or “and”, which act as connectors between independent clauses, breaking a multiple-intent query down into multiple single-intent queries, and then applying traditional techniques. “Deciphering intent is far more complex than just language interpretation. As humans, we know language is imbued with all kinds of nuances and contextual inferences. And actually, humans aren’t that great at expressing intent, either. Therein lies the real challenge for developers,” said ElRifai. ... “In many cases, that’s what you need, but when we look more broadly at the kinds of problems that businesses face, across many different industries, the vast majority of problems actually don’t follow that ‘one thing well’ model all that well. Many of the things we’d like to automate are more like puzzles to be solved, where we need to take in lots of different kinds of data, reason about them and then test out potential solutions,” said IBM’s Cox.
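The connector-splitting step ElRifai describes can be sketched with a regular expression. This is a deliberately naive illustration that assumes English input and a small hypothetical connector list ("and", plus "also" and "then" as illustrative extras). It only produces candidate single-intent fragments; classifying each fragment is left to whatever traditional intent technique follows.

```python
import re

# Hypothetical connector list; a production system would need far more care.
CONNECTORS = ("and", "also", "then")

# Match an optional comma plus a whole-word connector, with surrounding spaces.
_SPLIT = re.compile(
    r"\s*(?:,\s*)?\b(?:" + "|".join(CONNECTORS) + r")\b\s*",
    re.IGNORECASE,
)


def split_intents(query: str) -> list[str]:
    """Break a multi-intent query into candidate single-intent queries."""
    parts = _SPLIT.split(query)
    # Trim punctuation and drop empty fragments (e.g. a leading connector).
    return [p.strip(" ,.?!") for p in parts if p.strip(" ,.?!")]
```

For example, `split_intents("Check my balance and transfer $50 to savings")` yields two fragments. The weakness ElRifai alludes to shows up immediately: "play rock and roll" is split into "play rock" and "roll", because a keyword rule cannot tell a clause connector from part of a name.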

Code Obfuscation: A Comprehensive Guide Towards Securing Your Code

Since code obfuscation brings about deep changes in the code structure, it may also significantly change the performance of the application. In general, rename obfuscation hardly impacts performance, since only the names of variables, methods, and classes are changed. Control-flow obfuscation, on the other hand, does affect code performance. Adding meaningless control loops to make the code hard to follow often adds overhead to the existing codebase, so this technique, while essential, should be implemented with abundant caution. A rule of thumb in code obfuscation is that the more techniques are applied to the original code, the more time deobfuscation will consume. Depending on the techniques and contextualization, the impact on code performance usually varies from 10 percent to 80 percent. Hence, potency and resilience, the factors discussed above, should become the guiding principles in code obfuscation, as any kind of obfuscation (except rename obfuscation) has an opportunity cost. Most of the obfuscation techniques discussed above do exact a cost in code performance, and it is up to development and security professionals to pick and choose the techniques best suited to their applications.
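To make the performance contrast concrete, here is a hand-written toy based on an assumed sum-of-squares function. Rename obfuscation only swaps identifiers, so the logic runs exactly as before. The control-flow version routes the same logic through a dispatcher loop with a state variable and an opaque predicate (a condition that always evaluates the same way but is hard for a reader to prove), adding branches on every iteration. Real obfuscators transform bytecode or binaries with much stronger techniques; this sketch only illustrates where the overhead comes from.

```python
def sum_of_squares(values):
    # Original, readable code.
    total = 0
    for v in values:
        total += v * v
    return total


def a(b):
    # Rename obfuscation: identical logic, meaningless names, no runtime cost.
    c = 0
    for d in b:
        c += d * d
    return c


def f(x):
    # Control-flow flattening: the same logic driven by a state machine.
    # States: 0 = loop test, 1 = body, 2 = increment, 3 = exit.
    state, i, acc = 0, 0, 0
    while True:
        if state == 0:
            state = 1 if i < len(x) else 3
        elif state == 1:
            n = x[i]
            # Opaque predicate: n * (n + 1) is always even for integers,
            # so this branch is always taken, but the check costs time.
            if (n * (n + 1)) % 2 == 0:
                acc += n * n
            else:
                acc -= 1  # dead code: never runs for integer inputs
            state = 2
        elif state == 2:
            i += 1
            state = 0
        else:
            return acc
```

All three functions return the same result for a list of integers, but `f` performs several extra comparisons and assignments per element. Repeated across a codebase, that is where the performance penalty described above comes from.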

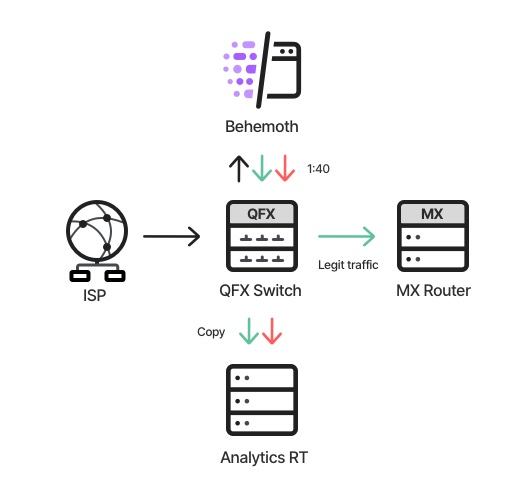

Designing a High-throughput, Real-time Network Traffic Analyzer

Run-to-completion is a design concept that aims to finish processing an element as soon as possible, avoiding infrastructure-related interference such as passing data over queues, obtaining and releasing locks, etc. As a latency-sensitive data-plane component, the Behemoth (along with some supplementary components) relies on that concept in its design. This means that, once a packet is diverted into the app, its whole processing is done in a single thread (worker) on a dedicated CPU core. Each worker is responsible for the entire mitigation flow: pulling the traffic from a NIC, matching it to a policy, analyzing it, enforcing the policy on it, and, assuming it’s a legitimate packet, returning it to the very same NIC. This design results in great performance and negligible latency, but has the obvious disadvantage of a somewhat messy architecture, since each worker is responsible for multiple tasks. Once we’d decided that AnalyticsRT would not be an integral “station” in the traffic data-plane, we gained the luxury of using a pipeline model, in which the real-time objects “travel” between different threads (in parallel), each one responsible for different tasks.
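The two designs can be contrasted in a small sketch. It assumes hypothetical packets represented as dicts and hypothetical match, analyze, and enforce steps. In the run-to-completion version, one worker performs every step for a packet before touching the next; in the pipeline version, each step runs in its own thread and packets travel between threads over `queue.Queue` objects. Python threads cannot be pinned to dedicated cores and share the GIL, so this shows the structure of each model only, not the performance characteristics described above.

```python
import queue
import threading


# Hypothetical per-packet steps; a real analyzer would do far more.
def match_policy(pkt):
    pkt["policy"] = "block" if pkt["src"] in {"10.0.0.66"} else "allow"
    return pkt


def analyze(pkt):
    pkt["suspicious"] = pkt["size"] > 1500
    return pkt


def enforce(pkt):
    blocked = pkt["policy"] == "block" or pkt["suspicious"]
    pkt["verdict"] = "drop" if blocked else "pass"
    return pkt


STEPS = (match_policy, analyze, enforce)


def run_to_completion(packets):
    # One worker does the whole flow for each packet: no queues, no locks.
    out = []
    for pkt in packets:
        for step in STEPS:
            pkt = step(pkt)
        out.append(pkt)
    return out


_DONE = object()  # sentinel telling each stage to shut down


def _stage(fn, inbox, outbox):
    # A pipeline stage: apply one step, hand the packet to the next thread.
    while (item := inbox.get()) is not _DONE:
        outbox.put(fn(item))
    outbox.put(_DONE)  # propagate shutdown downstream


def pipeline(packets):
    # Each step runs in its own thread; queues connect consecutive stages.
    qs = [queue.Queue() for _ in range(len(STEPS) + 1)]
    threads = [threading.Thread(target=_stage, args=(fn, qs[i], qs[i + 1]))
               for i, fn in enumerate(STEPS)]
    for t in threads:
        t.start()
    for pkt in packets:
        qs[0].put(pkt)
    qs[0].put(_DONE)
    out = []
    while (item := qs[-1].get()) is not _DONE:
        out.append(item)
    for t in threads:
        t.join()
    return out
```

Both functions produce the same verdicts in the same order, since each stage is a single FIFO consumer. The pipeline version keeps each task isolated in its own thread at the price of queue hand-offs, which is the trade-off that makes it acceptable for an off-path component like AnalyticsRT but not for the latency-critical data plane.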

RASP: A Must-Have Thing to Protect the Mobile Applications

RASP has been found to be very effective because it helps in dealing with application-layer attacks. It also supports custom triggers so that critical business components are never compromised. Development teams should also take a skeptical approach to implementing security solutions so that their impact is never adverse. Solutions of this kind should also consume minimal resources, ensuring that overall goals are met with the least possible negative impact on application performance. Convincing stakeholders used to be a major issue for organizations, but RASP solutions have made it much easier: they provide mobile-friendly services and clear-cut visibility into applications along with the handling of security threats, so the solution can work easily in the background. Implementing RASP has proven to be a game-changer for companies and helps them satisfy their customers. Companies can implement RASP through several kinds of approaches, including binary instrumentation, virtualization, and others.

Cyber Adversaries Are Exploiting the Global Pandemic at Enormous Scale

For cyber adversaries, developing exploits at scale and distributing those exploits via legitimate and malicious hacking tools continue to take time. Even though 2020 looks to be on pace to shatter the record for the number of published vulnerabilities in a single year, vulnerabilities from this year also have the lowest rate of exploitation ever recorded in the 20-year history of the CVE List. Interestingly, vulnerabilities from 2018 claim the highest exploitation prevalence (65%), yet more than a quarter of firms registered attempts to exploit CVEs from 15 years earlier, in 2004. Exploit attempts against several consumer-grade routers and IoT devices were at the top of the list for IPS detections. While some of these exploits target newer vulnerabilities, a surprising number targeted vulnerabilities first discovered in 2014, an indication that criminals are looking for exploits that still exist in home networks to use as a springboard into the corporate network. In addition, Mirai (2016) and Gh0st (2009) dominated the most prevalent botnet detections, driven by attackers’ apparently growing interest in older vulnerabilities in consumer IoT products.

Quote for the day:

"Nothing is so potent as the silent influence of a good example." -- James Kent
