When hybrid multicloud has technical advantages

Big companies can’t turn their ships fast enough, and the CIO must consider

setting priorities — based on business impact and time to value — for

application modernization. As Keith Townsend, co-founder of The CTO Advisor,

put it on Twitter, “Will moving all of my Oracle apps to Amazon RDS net

business value vs. using that talent to create new apps for different business

initiatives? The problem is today, these are the same resources.” Then ask

software developers, and you’ll find many prefer building applications that

deploy to public clouds, and that leverage serverless architectures. They can

automate application deployment with CI/CD, configure the infrastructure with

IaC, and leave the low-level infrastructure support to the public cloud vendor

and other cloud-native managed service providers. And will your organization

be able to standardize on a single public cloud? Probably not. Acquisitions

may bring in different public clouds than your standards, and many commercial

applications run only on specific public clouds. Chances are, your

organization is going to be multicloud even if it tries hard to avoid it. In

the discussion below, we’ll examine a number of scenarios in which a hybrid

cloud architecture offers technical advantages over private cloud only or

multiple public clouds.

Layering domains and microservices using API Gateways

Bounded contexts are the philosophical building blocks of microservice

architectures. If we want to layer our architecture, we need to layer our

concepts. And as you might imagine, this is not difficult at all! We have the

entire organization’s structure to draw inspiration from, and since domain-driven

systems tie in very closely with how organizations are organized, there is

plenty of opportunity to copy-paste. Our organization’s structure clearly

tells us that a “domain” can mean very different things at different levels of

abstraction. As soon as we say “abstraction”, we know that we are in a

hierarchical world. If you have ever seen a junior developer try to explain a

production outage to a senior manager, you know what I am talking about. The

minutiae of system implementation don’t matter to the senior manager because

at his level of operation, “outage due to timeout in calling payment

authentication service from checkout validator service” is interpreted as

“outage in checkout due to payment system”. He doesn’t care about “timeout”,

“authentication”, “validator” or “service” – he cares about “checkout”,

“outage”, and “payment”. The CEO doesn’t even care about “checkout” and

“payment”, he probably just hears “tech” and “outage”.
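
The outage example above can be sketched in code. This is a minimal illustration, not a method from the original article: the service catalog, the `Incident` type, and the three level names are all assumptions made for the example. It renders one incident at three levels of abstraction, dropping detail at each step up.

```python
from dataclasses import dataclass

# Hypothetical service catalog: maps each service to the domain that owns it.
SERVICE_DOMAIN = {
    "checkout validator service": "checkout",
    "payment authentication service": "payment",
}


@dataclass
class Incident:
    kind: str    # what happened, e.g. "outage"
    cause: str   # low-level cause, e.g. "timeout"
    caller: str  # service that made the call
    callee: str  # service that was called


def describe(incident: Incident, level: str) -> str:
    """Render the same incident at the requested level of abstraction."""
    if level == "service":
        # Full implementation detail, as a developer would report it.
        return (f"{incident.kind} due to {incident.cause} in calling "
                f"{incident.callee} from {incident.caller}")
    if level == "domain":
        # Services collapse into the domains that own them.
        return (f"{incident.kind} in {SERVICE_DOMAIN[incident.caller]} "
                f"due to {SERVICE_DOMAIN[incident.callee]} system")
    if level == "organization":
        # Domains collapse into a single "tech" bucket.
        return f"tech {incident.kind}"
    raise ValueError(f"unknown level: {level}")


incident = Incident(
    kind="outage",
    cause="timeout",
    caller="checkout validator service",
    callee="payment authentication service",
)
for level in ("service", "domain", "organization"):
    print(f"{level}: {describe(incident, level)}")
```

The lookup table is the only place where the hierarchy lives, which is the point of the sketch: layering the architecture starts with deciding which services roll up into which domain.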

5G: What Does The Future Hold?

According to David Hardman, until 5G networks cover a much greater area of the

UK, major initiatives around the technology are likely to focus more on what

is possible in the future, rather than necessarily providing solutions today.

“It’s a chicken and egg situation,” he says. “New, innovative products and

services need to be developed in parallel with infrastructure roll-out in

order to take full commercial advantage. Businesses coming through the 5G

incubator, 5PRING, in the early days are likely to be larger established

businesses that can plug in to what 5G currently offers. Full implementation

will enable real commercial returns for these organisations, with the next

wave of innovation then coming from new businesses that establish themselves

when the 5G service is fully up and running.” “Although Huawei concerns and

covid-19 have impacted progress, the pandemic has also woken people up to what

true digital communication is about. Can you imagine what the working world

would have looked like if the virus had struck 15 years ago, when none of the

remote working technology was readily available? If we look forward another 10

years, the development of 5G will bring a further evolutionary step-jump in

what digital has to offer in all aspects of our lives.”

To lead in the postcrisis tomorrow, put leadership and capabilities in place today

By and large, the great remote-working experiment brought on by the crisis has

shown that a lot can be accomplished, immediately and virtually, with small

teams, fewer and streamlined cycles, and without so much time expended on

travel. As one executive noted when talking about his company’s meetings

budget: “The problem isn’t where we are doing the meeting or why, but why did

we have to convene two dozen people to all get together to make the decision …

instead of just three people on a disciplined conference call.” Why are we

talking about speed in a discussion about investing in an organization’s

capabilities? Because without equally addressing speed, an organization’s

progress innovating and adapting merely grinds along. Often,

counterintuitively, it may be necessary to put in some “good bureaucracy.”

During the crisis, some companies have traded in traditional

videoconferencing, replete with large numbers of contributors, in favor of

“wartime councils” in which multiple senior stakeholders gather once to act

rapidly as decision makers. Using something as simple as a two-page document,

teams can cut straight to the heart of a business issue and get to yes or no

quickly, often with better results. Such exercises are worth retaining and

propagating.

5G unmade: The UK’s Huawei reversal splits the global telecom supply chain

It would be the quintessential catalyst for market fragmentation. This was the

argument being made by every telco, every equipment producer, and every

telecom analyst with whom we spoke two years ago, without exception, back when

the world seemed more cooperative, and globalization was a good thing.

"What we don't want," explained Enders' James Barford, "is a situation where

Huawei and ZTE work in China, Ericsson and Nokia work in the rest of the

world, Samsung does a bit here and there. Ultimately, telecoms companies

everywhere have reduced choice, and at the basic standards level, suppliers

aren't working together. The best ideas aren't winning through. At the moment,

if one of Ericsson, Huawei, and Nokia have a good idea, the others have to

follow. . . to keep up. But we don't want to be in a situation where one of

them has an innovation, and the rest of the world just kind of carries on. If

Huawei has an innovation that makes China better, the rest of the world just

misses out." Dr. Lewis chuckled a bit at this scenario. His assertion is that

technology standards at all levels, but especially technology, become global

by nature. Yes, countries may seek to assert some type of sovereign control

over Internet traffic — besides China, Germany and Russia have also staked

claims to digital sovereignty, and observers do perceive this as a global

trend.

A Perfect Storm: The “New Normal” in Business and CCPA Compliance Enforcement

Privacy compliance, while something we have to do as the CCPA starts active

enforcement July 1, is not just a “one and done” task—you need to scale out

your privacy program to stay ahead of each new mandate and adapt to today’s

evolving landscape, whether COVID or the next major unpredictable event.

Simply burying one’s head in the sand in apathy has very costly consequences.

Let’s have a look at data subject reporting: While data protection is a

critical aspect of avoiding a data breach or misuse, there is also a real cost

in handling data subject rights requests from your loyal customers as the CCPA

begins enforcement and the GDPR continues on. And this requires transparency

into data access and use across your organization. A major industry analyst

firm points out in a survey last year on the GDPR that this activity can

represent a potential outlay of $1,406 per request to handle inquiries

manually, on a case-by-case basis. Without an automated approach to privacy

compliance, the costs to manage data subject requests at scale can quickly

overwhelm unprepared organizations. To keep up, you’ll need to take

advantage of automation and AI to find customer data across your organization

and report on its use, or risk privacy regulatory violations with fines and

brand reputation at stake.
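
To make the scale argument concrete, here is a small worked calculation. It assumes only the $1,406 per-request figure quoted above; the request volumes are hypothetical and chosen purely for illustration.

```python
# Per-request cost of manual handling, as quoted in the text above.
MANUAL_COST_PER_REQUEST_USD = 1_406


def manual_handling_cost(requests: int) -> int:
    """Total cost in USD of handling the given number of requests manually."""
    if requests < 0:
        raise ValueError("requests must be non-negative")
    return requests * MANUAL_COST_PER_REQUEST_USD


# Hypothetical request volumes, not taken from the survey.
for volume in (10, 100, 1_000):
    print(f"{volume:>5} requests: ${manual_handling_cost(volume):,}")
```

Because the cost grows linearly with volume, even a modest stream of requests reaches six figures when each one is handled by hand.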

Is a lack of governance hindering your cloud workload protection?

As operations in the cloud grow together with the teams managing them,

company-wide visibility and accountability become critical issues. After all,

you can’t accurately detect, stop or respond to something if you can’t see it.

In this way, workload events need to be captured, analysed and stored so that

security teams can enjoy the visibility they need to detect and stop threats

in real-time, as well as to hunt down and investigate threats. Accountability

is a critical concern for information security in cloud computing,

representing most importantly the trust in service relationships between

clients and cloud providers (Microsoft Azure et al). Indeed, without evidence

of accountability, a lack of trust and confidence in cloud computing can raise

its head among those concerned with managing the business. Sensitive data

(PII) is processed in the cloud and governance is critical to make sure that

such data is always processed and stored in a secure manner. Data protection

is big news these days, especially with the advent of PII and

General Data Protection Regulation (GDPR) compliance requirements. The shared

responsibility model between the cloud platform provider you choose and your

organisation means that you (the organisation) remain responsible for the

protection and security of any sensitive data from your end customers.

Not seeing automation success? Think like a pilot

Figuring out what processes need to be automated is one thing. Managing them

from then on is a whole new ball game – and one that will require constant

attention. After all, autopilot only kicks in once the plane is successfully

cruising. Process mining technologies help you analyse and discover processes

using your business’ data, but process intelligence goes several steps

further. This offers the deep understanding and real-time monitoring of your

processes that many businesses are missing. Then, it can drill down into the

granular details, explain why processes don’t work and how to fix them, and

give you the tools to solve problems you didn’t even know existed. It’s vital

that business leaders check in on their processes often during this phase, to

see where issues lie, which processes are most problematic, and which are ripe

for automation. Once this is in good shape, you can move on to intelligent

automation – combining process intelligence with automation like RPA. This is

the switch to autopilot. Here, the technology can spot potential issues with

processes like bottlenecks or delays before they happen, and update bots with

corrective actions to fix the failing process.

What are script-based attacks and what can be done to prevent them?

The use of scripts offers many advantages to the attacker: scripts are easy to

write and execute, trivial to obfuscate, and extremely polymorphic. Moreover,

attackers can use many types of script files to carry out an attack – the most

popular being PowerShell, JavaScript, HTA, VBA, VBS, and batch scripts. Since

fileless attacks occur in memory, traditional static file detection is

rendered useless. Furthermore, scripts complicate post-event analysis since

many artifacts related to the attack only exist in the computer’s memory and

may be overwritten or removed through a reboot, for example. In-memory

detection and artifact collection are possible through the use of heuristics

and behavioral analysis, which can detect malicious in-memory activities.

Script-based attacks run on virtually all Windows systems, increasing the

potential attack surface and the chance of infection. One major drawback of

script-based attacks is that, unless deployed via an exploit, user interaction

is required for the script to run. For example, in most cases, the script is

contained either as a script file within an email requiring user action or as

a VBA macro in a document that requires the user to enable macros.
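
As a rough illustration of heuristic detection, the sketch below scores a PowerShell command line against a few well-known indicators. It is a deliberately simplified stand-in for the behavioral analysis described above: it inspects command-line text rather than memory, and the indicator names, patterns, and weights are assumptions chosen for the example, not a vetted rule set.

```python
import re
from typing import List, Tuple

# Illustrative indicators only. Names and weights are assumptions.
INDICATORS = {
    # -EncodedCommand (often shortened to -enc) hides the script in Base64.
    "encoded_command": (re.compile(r"-enc(odedcommand)?\b", re.I), 3),
    # -WindowStyle Hidden keeps the console window out of the user's view.
    "hidden_window": (re.compile(r"-w(indowstyle)?\s+hidden", re.I), 2),
    # Common ways to fetch a remote payload.
    "download_cradle": (
        re.compile(r"downloadstring|downloadfile|invoke-webrequest", re.I), 2),
    # Invoke-Expression (alias iex) runs a string as code.
    "invoke_expression": (re.compile(r"\biex\b|invoke-expression", re.I), 2),
}


def score(command_line: str) -> Tuple[int, List[str]]:
    """Return the total weight and the names of all indicators that matched."""
    hits = [name for name, (pattern, _) in INDICATORS.items()
            if pattern.search(command_line)]
    return sum(INDICATORS[name][1] for name in hits), hits


samples = [
    "powershell.exe -WindowStyle Hidden -enc SQBFAFgA",
    "notepad.exe report.txt",
]
for sample in samples:
    total, hits = score(sample)
    print(f"score={total} hits={hits} :: {sample}")
```

A real product would combine many more signals and observe runtime behavior, since obfuscation can defeat simple pattern matching such as this.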

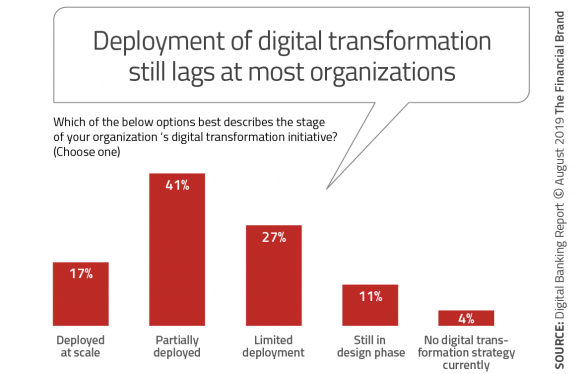

The Illusion of Digital Transformation in Banking

There are strong indications that leadership lacks experience in implementing

such massive transformations. This has resulted in a prioritization of

technology being purchased that may only scratch the surface of needed

transformation. For instance, purchasing a new mobile banking platform is only

as good as the underlying processes that also must be changed to improve the

overall digital banking customer experience. It also appears that the current

financial strength of the industry is resulting in complacency around making

large, overarching changes to what has long been the operating norm in

banking. But the challenges don’t end there. On the not-too-distant horizon,

banks and credit unions will need to address a digital skills shortage and the

internal culture shift requisite to facilitate needed innovation and

transformation. ... The organizations with the greatest digital transformation

maturity tend to be upgrading the greatest number of digital technologies. In most

cases, the prioritization is determined by a mix of business requirements,

cost, ease (or difficulty) of transformation, and skills available either

internally or through partners. Organizations with the highest digital

transformation maturity have also made progress on implementing the more

sophisticated technologies. These include artificial intelligence (AI),

robotic process automation (RPA), cloud computing, the Internet of Things

(IoT), and blockchain solutions.

