What we've lost in the push to agile software development, and how to get it back

Team members and business partners should not have to ask questions such as

"what does that arrow mean?" "Is that a Java application?" or "is that a

monolithic application or a set of microservices?" he says. Rather,

discussions should focus on the functions and services being delivered to the

business. "The thing nobody talks about is you have to do design to get

version 1," Brown says. "You have to put some foundations in place to give you

a sufficient starting point to iterate, and evolve on top of. And that's what

we're missing." Many software design teams keep upfront design to a minimum,

assuming details will be fleshed out in an agile process as things move along.

Brown says this is misplaced thinking, and design teams should incorporate

more information into their upfront designs, including the type of technology

and languages that are being proposed. "During my travels, I have been given

every excuse you can possibly imagine for why teams should not do upfront

design," he says. Some of his favorite excuses even include the question, "are

we allowed to do upfront design?" Other responses include "we don't do upfront

design because we do XP [extreme programming]," and "we're agile. It's not

expected in agile."

Those who innovate, lead: the new normal for digital transformation

Even in normal times, IT departments struggled to meet their digital

transformation goals as quickly as required. According to research, 59% of IT

directors reported that they were unable to deliver all of their projects last

year. Much of this is due to IT complexity and the challenges inherent in

trying to integrate various data sources, applications and systems in an agile

way that supports the goals of transformation. All too often, organizations

rely on linking capabilities together with point-to-point integrations, which

are inflexible and unsuited to the dynamism of modern IT environments. As a

result, they find it hard to quickly launch innovative, customer-centric

products and services, as they can’t bring together the capabilities that

drive them in a cost- and time-effective manner. At the same time, it’s often

the case that digital transformation is left largely to the IT department. IT

teams – already stretched by their day-to-day maintenance responsibilities –

are increasingly tasked with driving the entire organization forward, with

limited support from other teams in the business. Understandably, this has led

to a widening ‘delivery gap’ between what the business expects, and what IT is

able to achieve.

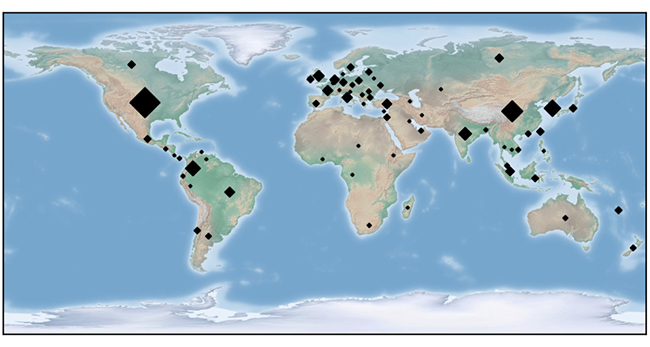

Fileless worm builds cryptomining, backdoor-planting P2P botnet

A fileless worm dubbed FritzFrog has been found roping Linux-based devices –

corporate servers, routers and IoT devices – with SSH servers into a P2P

botnet whose apparent goal is to mine cryptocurrency. Simultaneously, though,

the malware creates a backdoor on the infected machines, allowing attackers to

access them at a later date even if the SSH password has been changed in the

meantime. “When looking at the amount of code dedicated to the miner, compared

with the P2P and the worm (‘cracker’) modules – we can confidently say that

the attackers are much more interested in obtaining access to breached servers

than making profit through Monero,” Guardicore Labs lead researcher Ophir

Harpaz told Help Net Security. “This access and control over SSH servers can

be worth much more money than spreading a cryptominer. Additionally, it is

possible that FritzFrog is a P2P-infrastructure-as-a-service; since it is

robust enough to run any executable file or script on victim machines, this

botnet can potentially be sold in the darknet and be the genie of its

operators, fulfilling any of its malicious wishes.”

Post-Pandemic Digitalization: Building a Human-Centric Cybersecurity Strategy

As leaders of a global business task force responsible for advising and

providing recommendations on the future of digitalization to G20 Leaders, we

are doubling down on our efforts to build cyber resilience, and we urge

leaders to recognize the importance of cybersecurity resilience as a vital

building block of our global economy. And we must be thoughtful in our future

cyber approach. A human-centric, education-first strategy will protect

organizations where they are most vulnerable and get us closer to the point

where cybersecurity is ingrained in our daily life rather than an

afterthought. Action through collaboration, one of our guiding principles as

the voice of the private sector to the G20, is the only viable option. A

public-private partnership built on cooperation among large corporations,

MSMEs, academic institutions, and international governments is the cornerstone

of a modern and resilient cybersecurity system. A few simple but powerful

actions ingrained in a global cybersecurity strategy will bring our users into

the new age of digital transformation and embed a security mindset into our

day-to-day, making breach attempts significantly less successful.

Event Stream Processing: How Banks Can Overcome SQL and NoSQL Related Obstacles with Apache Kafka

Traditional relational databases, which support SQL, and NoSQL databases present

obstacles to the real-time data flows needed in financial services, but

ultimately still remain useful to banks. Jackson says that databases are good

at recording the current state and allow banks to join and query that data.

“However, they’re not really designed for storing the events that got you

there. This is where Kafka comes in. If you want to move, create, join,

process and reprocess events you really need event streaming technology. This

is becoming critical in the financial services sector where context is

everything – to customers, this can be anything from sharing alerts to let you

know you’ve been paid or instantly sorting transactions into categories.” He

continues to say that Nationwide are starting to build applications around

events, but in the meantime, technologies such as change data capture (CDC) and Kafka Connect, a

tool that reliably streams data between Apache Kafka and other data systems,

are helping to bridge older database technologies into the realm of events.

Data caching technology can also play an important role in providing real-time

data access for performance-critical, distributed applications in financial

services as it is a well-known and tested approach to dealing with spiky,

unpredictable loads in a cost-effective and resilient way.
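
The difference between recording current state and storing the events that led to it can be sketched in a few lines. This is a minimal illustration in plain Python, not Kafka code and not Nationwide's design: the `Event` type, the account names, and the event kinds are all hypothetical. It only shows that an ordered event log can be replayed to derive the current state, or any earlier state, which a table holding only the latest balance cannot do.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One immutable fact about an account (hypothetical schema)."""
    account: str
    kind: str    # "deposit" or "withdrawal"
    amount: int  # minor currency units, to avoid float rounding


def replay(events):
    """Fold an ordered event log into a balance per account."""
    balances = defaultdict(int)
    for event in events:
        if event.kind == "deposit":
            balances[event.account] += event.amount
        elif event.kind == "withdrawal":
            balances[event.account] -= event.amount
        else:
            raise ValueError(f"unknown event kind: {event.kind}")
    return dict(balances)


log = [
    Event("acc-1", "deposit", 10_000),
    Event("acc-1", "withdrawal", 2_500),
    Event("acc-2", "deposit", 4_000),
]

# What a database row would typically hold: only the latest balances.
current_state = replay(log)

# An earlier state, recoverable only because the events were kept.
state_after_first_event = replay(log[:1])
```

Reprocessing, as mentioned in the quote, corresponds here to calling `replay` again with different logic over the same log.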

What is semantic interoperability in IoT and why is it important?

Semantic interoperability can today be enabled by declarative models and logic

statements (semantic models) encoded in a formal vocabulary of some sort. The

fundamental idea is that by providing these structured semantic models about a

subsystem, other subsystems can with the same mechanisms get an unambiguous

understanding of the subsystem. This unambiguous understanding is the

cornerstone for other subsystems to confidently interact with (in other words,

understand information from, as well as send commands to) the given subsystem to

achieve some desired effect. It's important to note that interoperability goes

beyond data exchange formats or even explicit translation of information

models between a producer and a consumer. It’s about the mechanisms to enable

this to happen automatically, without specific programming. There should be no

need for an integrator to review thick manuals in order to understand what is

really meant with a particular piece of data. It should be fully machine

processable. Today, industry standards exist that greatly improve

interoperability with significantly reduced effort. They do so by

standardizing vocabularies and concepts.
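
As a rough sketch of the idea, assume a tiny shared vocabulary in which each unit term declares which quantity it measures and how to convert it to a base unit. Everything here is hypothetical: the `ex:` terms, the message layout, and the function name are invented for illustration, and real standards use far richer models. The point is only that the consumer interprets a reading from the vocabulary alone, with no device-specific code or manual.

```python
# Hypothetical shared vocabulary: each unit term names its quantity
# and carries a conversion to the base unit (kelvin).
VOCABULARY = {
    "ex:DegreeCelsius": {
        "quantity": "ex:Temperature",
        "to_kelvin": lambda v: v + 273.15,
    },
    "ex:DegreeFahrenheit": {
        "quantity": "ex:Temperature",
        "to_kelvin": lambda v: (v + 459.67) * 5 / 9,
    },
}


def temperature_in_kelvin(message):
    """Interpret a self-describing reading using only the vocabulary."""
    term = VOCABULARY.get(message["unit"])
    if term is None:
        raise ValueError(f"unit not in vocabulary: {message['unit']}")
    if message["property"] != term["quantity"]:
        raise ValueError("unit does not measure the stated property")
    return term["to_kelvin"](message["value"])


# Two producers that describe the same temperature differently.
reading_a = {"property": "ex:Temperature", "unit": "ex:DegreeCelsius", "value": 20.0}
reading_b = {"property": "ex:Temperature", "unit": "ex:DegreeFahrenheit", "value": 68.0}

kelvin_a = temperature_in_kelvin(reading_a)
kelvin_b = temperature_in_kelvin(reading_b)
```

Both readings resolve to the same value in kelvin (up to floating-point rounding), even though the consumer knows nothing about either producer beyond the shared terms.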

GPT-3, Bloviator: OpenAI’s language generator has no idea what it’s talking about

At first glance, GPT-3 seems to have an impressive ability to produce

human-like text. And we don’t doubt that it can be used to produce entertaining

surrealist fiction; other commercial applications may emerge as well. But

accuracy is not its strong point. If you dig deeper, you discover that

something’s amiss: although its output is grammatical, and even impressively

idiomatic, its comprehension of the world is often seriously off, which means

you can never really trust what it says. Below are some illustrations of its

lack of comprehension—all, as we will see later, prefigured in an earlier

critique that one of us wrote about GPT-3’s predecessor. Before proceeding,

it’s also worth noting that OpenAI has thus far not allowed us research access

to GPT-3, despite both the company’s name and the nonprofit status of its

oversight organization. Instead, OpenAI put us off indefinitely despite

repeated requests—even as it made access widely available to the media.

Fortunately, our colleague Douglas Summers-Stay, who had access, generously

offered to run the experiments for us. OpenAI’s striking lack of openness

seems to us to be a serious breach of scientific ethics, and a distortion of

the goals of the associated nonprofit.

A Google Drive 'Feature' Could Let Attackers Trick You Into Installing Malware

An unpatched security weakness in Google Drive could be exploited by malware

attackers to distribute malicious files disguised as legitimate documents or

images, enabling bad actors to perform spear-phishing attacks with a

comparatively high success rate. The latest security issue—of which Google is aware

but which it has, unfortunately, left unpatched—resides in the "manage versions"

functionality offered by Google Drive that allows users to upload and manage

different versions of a file, as well as in the way its interface provides a

new version of the files to the users. ... According to A. Nikoci, a system

administrator by profession who reported the flaw to Google and later

disclosed it to The Hacker News, the affected functionality allows users to

upload a new version with any file extension for any existing file on the

cloud storage, even with a malicious executable. As shown in the demo

videos—which Nikoci shared exclusively with The Hacker News—in doing so, a

legitimate version of the file that's already been shared among a group of

users can be replaced by a malicious file, which when previewed online doesn't

indicate newly made changes or raise any alarm, but when downloaded can be

employed to infect targeted systems.

What is Microsoft's MeTAOS?

MeTAOS/Taos is not an OS in the way we currently think of Windows or Linux.

It's more of a layer that Microsoft wants to evolve to harness the user data

in the substrate to make user experiences and user-facing apps smarter and

more proactive. A job description for a Principal Engineering

Manager for Taos mentions the foundational layer: "We aspire to create a

platform on top of that foundation - one oriented around people and the work

they want to do rather than our devices, apps, and technologies. This vision

has the potential to define the future of Microsoft 365 and make a dramatic

impact on the entire industry." A related SharePoint/MeTA job description adds

some additional context: "We are excited about transforming our customers into

'AI natives,' where technology augments their ability to achieve more with the

files, web pages, news, and other content that people need to get their task

done efficiently by providing them timely and actionable notifications that

understands their intents, context and adapts to their work habits." In short,

MeTAOS/Taos could be the next step along the Office 365 substrate path.

Microsoft officials haven't said a lot publicly about the substrate, but it's

basically a set of storage and other services at the heart of Office

365.

What Organizations Need to Know About IoT Supply Chain Risk

When it comes to IoT, IT, and OT devices, there is no software bill of

materials (SBOM), though there have been some industry calls for one. That

means the manufacturer has no obligation to disclose to you what components

make up a device. When a typical device or software vulnerability is

disclosed, an organization can fairly easily use tools such as device

visibility and asset management to find and patch vulnerable devices on its

network. However, without a standard requirement to disclose what components

are under the hood, it can be extremely difficult to even identify what

manufacturers or devices may be affected by a supply chain vulnerability like

Ripple20 unless the vendor confirms it. For organizations, this challenge

means pressing manufacturers for information on components when making

purchasing decisions. While it is not realistic to base every

purchasing decision solely on security, the nature of these supply chain

challenges demands at least gaining information in order to make the best risk

calculus. What makes supply chain risk unique is that one vulnerability can

affect many types of devices.
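
To illustrate why component disclosure matters, here is a minimal sketch of the lookup an organization could run if it did hold a component list for each device. The device names, component names, versions, and the `affected_devices` helper are all hypothetical, and the data layout is not any real SBOM format. It only shows that, with such lists, one vulnerable component can be traced to every device that embeds it.

```python
def affected_devices(sboms, component, vulnerable_versions):
    """Return the devices whose component list includes a vulnerable version."""
    hits = []
    for device, components in sboms.items():
        for name, version in components:
            if name == component and version in vulnerable_versions:
                hits.append(device)
                break  # one match is enough to flag the device
    return sorted(hits)


# Hypothetical component lists, keyed by device.
sboms = {
    "infusion-pump-a": [("example-tcpip-stack", "1.2"), ("example-rtos", "4.0")],
    "printer-b": [("example-tcpip-stack", "2.0")],
    "camera-c": [("example-rtos", "4.0")],
    "badge-reader-d": [("example-tcpip-stack", "1.0")],
}

affected = affected_devices(sboms, "example-tcpip-stack", {"1.0", "1.2"})
```

Without the `sboms` data, the same question can only be answered by waiting for each vendor to confirm whether its devices are affected.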

Quote for the day:

"Learning is a lifetime process, but there comes a time when we must stop adding and start updating." -- Robert Braul
